
Add MBart support for BetterTransformer

#516


Hi @ravenouse !

Thanks a lot for your PR! Glad that the conversion worked! We're almost there!

It seems that MBart uses pre-attention layer norm. Could you try setting the attribute to True (as in the suggestion below)?

Let me know how it goes!
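Layer-norm placement matters because a pre-attention (pre-LN) layer normalizes the input before each sublayer and adds the residual afterwards, `x + f(LN(x))`. A post-LN layer instead normalizes after the residual sum, `LN(x + f(x))`. Running a pre-LN checkpoint with post-LN ordering can therefore change the outputs even when the weights themselves are copied correctly. The toy sketch below shows this in plain Python. `toy_sublayer` is a hypothetical stand-in for attention or the feed-forward block, and the layer norm omits the learned scale and bias for brevity.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learned scale/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def toy_sublayer(x):
    """Hypothetical stand-in for attention / feed-forward: elementwise tanh."""
    return [math.tanh(v) for v in x]


def pre_ln_block(x):
    """Pre-LN ("norm first"): residual + sublayer(LayerNorm(x))."""
    return [a + b for a, b in zip(x, toy_sublayer(layer_norm(x)))]


def post_ln_block(x):
    """Post-LN: LayerNorm(residual + sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, toy_sublayer(x))])


x = [1.0, 2.0, 3.0, 4.0]
pre = pre_ln_block(x)
post = post_ln_block(x)
print("pre-LN :", [round(v, 3) for v in pre])
print("post-LN:", [round(v, 3) for v in post])
# Same input, same (toy) sublayer, different outputs: only the position
# of the layer norm changed.
```

With identical inputs and sublayers, the two orderings disagree, so a flag controlling the order has to match what the original checkpoint was trained with.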

Co-authored-by: Younes Belkada <[email protected]>

Hi Younes, thank you so much for the advice! It worked! This time the bt pipeline yields exactly the same results as … Please let me know what else needs to be done! Thanks again!

Very glad it worked @ravenouse ! Can't wait to see this PR merged!

Step 1: Finish the integration for …

Step 2: Add the integration for …


Thanks so much @ravenouse !

I left a small comment: could you please update `ALL_ENCODER_DECODER_MODELS` instead, so that the tests run on the new models? Of course, let me know how it goes!

`"hf-internal-testing/tiny-random-MBartModel",`

`"hf-internal-testing/tiny-random-nllb",`


I think you have to move them to `ALL_ENCODER_DECODER_MODELS`: the test `pytest tests/bettertransformer/test_bettertransformer_encoder.py::BetterTransformersEncoderDecoderTest` will only run on the models listed in `ALL_ENCODER_DECODER_MODELS` ;)
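A test class like `BetterTransformersEncoderDecoderTest` only covers the checkpoint names in `ALL_ENCODER_DECODER_MODELS`, so a checkpoint registered in any other list is never exercised by it. Below is a minimal sketch of that pattern, assuming a simplified structure. `run_encoder_decoder_tests` is a hypothetical helper, not optimum's actual test code, and the list is trimmed to the two checkpoints discussed in this PR.

```python
from typing import Callable, List

# Trimmed, simplified version of the module-level list the reviewer mentions.
ALL_ENCODER_DECODER_MODELS: List[str] = [
    "hf-internal-testing/tiny-random-MBartModel",
    "hf-internal-testing/tiny-random-nllb",
]


def run_encoder_decoder_tests(check_model: Callable[[str], None]) -> List[str]:
    """Hypothetical runner: call check_model once per checkpoint in the list.

    Checkpoints that appear only in some other list are never passed to
    check_model, which is why the new models had to be moved here.
    """
    tested = []
    for model_id in ALL_ENCODER_DECODER_MODELS:
        check_model(model_id)  # in a real suite: load, convert, compare outputs
        tested.append(model_id)
    return tested


covered = run_encoder_decoder_tests(lambda model_id: None)
print(covered)
```

In a pytest-based suite the same effect usually comes from parametrizing the test over the list, so the list is the single place that decides coverage.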


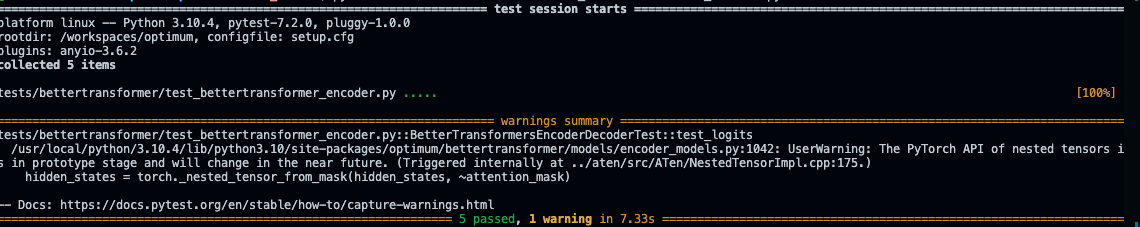

Hi @younesbelkada. Thank you so much for the explanations! Now I have a better understanding of what's going on in the test files. I have moved the two test models to the right list, ran pytest again, and it passed.

Please let me know what else I can do!

The documentation is not available anymore as the PR was closed or merged.

Thanks a lot!

Hi @younesbelkada. I have run the …


Looking great!

Thanks a lot for your clean implementation of M2M100 support for BetterTransformer 💪

Looking forward to your next contributions ;)


LGTM!

Hi @younesbelkada and @michaelbenayoun !

Thanks so much @ravenouse !

This PR adds the `MBartEncoderLayerBetterTransformer` class to support MBart. During testing, the code runs, but it yields different results from the original transformer model, as shown in the picture below. The specific models I tested are `facebook/mbart-large-50` and `facebook/mbart-large-cc25`, and the downstream task I tested is fill-mask. Could you tell me what I can do to solve this problem and what other tests I need to run?

Thank you so much!
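One way to make "different results" measurable in a comparison like this is to compare the raw logits of the original and converted models elementwise within a tolerance, instead of looking only at the top fill-mask predictions. Below is a minimal plain-Python sketch, assuming flat lists of logits. The `allclose` helper re-implements the rule used by `torch.allclose` (`|a - b| <= atol + rtol * |b|`), and all logit values are hypothetical placeholders, not outputs of the MBart checkpoints.

```python
def allclose(a, b, rtol=1e-5, atol=1e-8):
    """Elementwise |a - b| <= atol + rtol * |b| (the torch.allclose rule)."""
    if len(a) != len(b):
        return False
    return all(abs(x - y) <= atol + rtol * abs(y) for x, y in zip(a, b))


def max_abs_diff(a, b):
    """Largest absolute elementwise difference, useful when reporting a mismatch."""
    return max(abs(x - y) for x, y in zip(a, b))


# Hypothetical logits for one masked position (placeholder values only).
original_logits = [2.31, -0.57, 1.08, 0.12]
matching_logits = [2.31, -0.57, 1.08, 0.12]
mismatched_logits = [1.95, -0.10, 1.40, 0.33]

print(allclose(original_logits, matching_logits, atol=1e-3))
print(allclose(original_logits, mismatched_logits, atol=1e-3))
print(max_abs_diff(original_logits, mismatched_logits))
```

Reporting the maximum absolute difference alongside the pass/fail check helps distinguish small numerical noise from a structural mismatch such as a misplaced layer norm.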