Add MBart support for BetterTransformer

#516

Merged

10 commits

255251f added MBartEncoderLayerBetterTransformer (ravenouse)

d68a8f4 fixed some bugs (ravenouse)

478473f Update optimum/bettertransformer/models/encoder_models.py (ravenouse)

89274c6 added test and doc for the mbart implementation (ravenouse)

4546193 ended merge (ravenouse)

b93a3ea fixed the mistake made in the last push (ravenouse)

58377cc fixed the mistakes in the previous steps (ravenouse)

77b3467 added test and doc for M2M100 (ravenouse)

3c1b345 moved the test cases/models for the right list (ravenouse)

d0720cc reformatted the code (ravenouse)

I think that you have to move them to

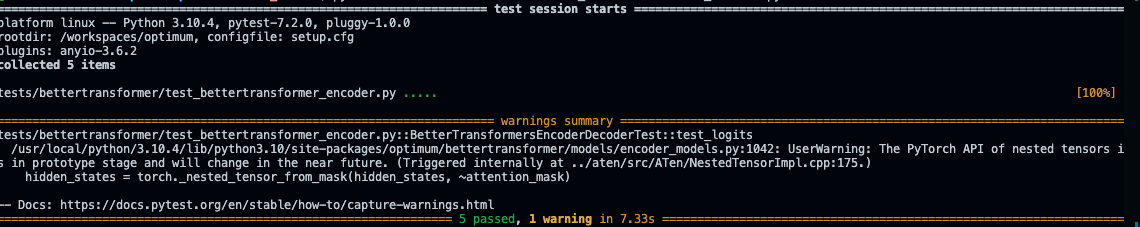

ALL_ENCODER_DECODER_MODELS: the testpytest tests/bettertransformer/test_bettertransformer_encoder.py::BetterTransformersEncoderDecoderTestwill only run on the models listed onALL_ENCODER_DECODER_MODELS;)There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @younesbelkada. Thank you so much for the explanations! I now have a better understanding of what's going on in the test files. I have moved the two test models to the right list, reran pytest, and it passes.

Please let me know what else I can do!