2019.2.1/2019.2.0 pip failures even when not using pip #54755

Comments

|

@Reiner030 thanks for reporting this. Would you be able to provide the state file in question that you were trying to run when this error happened? Thanks! |

|

I've been using SaltStack for some years now, and I must admit that almost EVERY release comes with important and obvious regressions that produce a bunch of errors. Yes, code quality control isn't a simple thing to deal with, and yes, SaltStack has a lot of modules written by many people. But what could be worse than waiting months (sometimes years) for a good PR to be merged, packaged and deployed on one hand, and then running into serious trouble every time we update SaltStack on the other? I know that this comment is a bit rude, but please understand that this is very frustrating. |

|

this bug was likely introduced with 238fd0f

|

|

I'm hitting this issue with states using |

|

we also traced it down to that states using the following state is working: but it fails with as soon as |

|

A similar issue also affects anyone using pip between versions 10.0 and 18.1, due to this: The exceptions module was moved from See: #54772 |
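For anyone patching around this kind of module move locally, here is a minimal sketch of a guarded import that tries several candidate locations in order. It is not the fix from the linked issue; the helper name `import_first_available` and the `somepkg.*` paths are hypothetical placeholders, and the real old and new module paths are not reproduced here.

```python
import importlib


def import_first_available(candidates):
    """Return the first module in `candidates` that imports, or None.

    `candidates` is a list of dotted module names, newest location first.
    """
    for name in candidates:
        try:
            return importlib.import_module(name)
        except ImportError:
            # Covers ModuleNotFoundError too; try the next location.
            continue
    return None


# Hypothetical placeholder paths, not taken from Salt or pip.
CANDIDATES = ["somepkg._internal.exceptions", "somepkg.exceptions"]

exceptions_mod = import_first_available(CANDIDATES)
HAS_EXCEPTIONS = exceptions_mod is not None  # gate features on this flag
```

The point of returning `None` instead of raising is that code which never touches the optional dependency keeps working when that dependency is absent.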

|

Also seeing the issue when targeting states using "Unless", as well as with "OnlyIf" logic. However, the root cause seems to be related to changes to the pip_state in 2019.2.1. See issue: |

|

I can confirm that installing |

|

Similar to @maennlse, this is fixed on Debian Buster by installing the |

|

I can also confirm that it works on Ubuntu 16.04 when installing python-pip first. |

|

Related to #53570 |

|

+1 to this happening when using states with onlyif and unless requisites. Furthermore, even AFTER installing python-pip, something feels wrong... a state as simple as below takes ~10sec (!!!) to execute whereas it takes ~150ms to execute without the requisites. |

|

Hello, so this is patched in #54826 and merged. Do you have any idea when the fix will be available in packages? Do you plan to publish a new release soon? The bug is blocking on our side (we need the latest version for Debian 10 ^^), installing pip is not really a good solution, and we would like to know how long we should expect to wait :) Thanks! |

|

Maintainers. Just some advice... If you'd start vendoring the Python modules that salt-stack's modules depend on instead of sharing modules with Python's site-packages and having to suffer the mercy of version skew, the majority of these types of problems will go away until a developer gets a chance to actually look at it. Something like You can't develop a complex application such as this against a variety of moving targets. Perl's CPAN has taught this to us Perl developers the hard way. This is not an uncommon pattern either, as golang and rust have solutions for dealing with these problems as well. |

|

@arizvisa the issue here is that the code was being tested in an environment with a package installed that was not marked as a dep for installation. It just so happened that there was a bug if and when that module was NOT installed. There was another issue pertaining to proxy minions that was missed because their testing harness was testing against the checked out repo rather than the sdist that's bundled for pypi. I don't think either of these problems would have been solved by vendorized modules. OTOH, the team would benefit from creating the sdist and testing against that in a clean-room environment. |

|

Actually, the first issue would be solved by vendorizing said module. It would even have removed the need for the breaking commit. Bundling the deps that you're developing against would also mean that the second case would never happen. Without bundling module versions, your testers essentially need to test how things work with all different versions and without said core module in order to test comprehensively. This can be unreasonable for testers, even if they are rockstars. Another benefit is that it'll fix that need to Writing a quick testcase that is similar to the This code when calling Now when calling Yea, that's 4.4 seconds vs 0.5 seconds for python2, and 1.3 vs 0.3 seconds for python3. This is an exaggerated number of cases of course, but literally every single core module in salt checks for a likely non-existent module, especially during matching. So if there's a dependent-module that's not found, that But since you use On another note, I sympathize with you guys because every time you hit a release you can tell that you're in crunch time because there are lapses in the responses to bug reports. If this has been like this since 2016 as noted by tomlaredo, you should consider putting things on pause while you profile and refactor as long as your "paying" customers allow for it. |

|

Vendorizing it fixes it by making it a component of the release... which comes with other disadvantages (drift from upstream outside of the release, which is a double-edged sword... added memory consumption for those who formerly did not have or use pip comes to mind). Unsure how this approach would handle modules like pyzmq which have C extensions too... |

|

Vendorizing might (or might not) be a long-term strategy to manage dependencies. Right now, @rallytime please help us with releasing this fix as soon as possible; this makes the latest saltstack release (again!) completely broken for some cases. |

|

At the very least, things won't break until a developer finally gets a chance to look at it and test it against the latest version (instead of having to check against all possible versions for every release). You also get the benefit that you can ask your community to synchronize the versions if you're busy, since it's typically an easy PR. Don't forget that you guys aren't the only ones who work on this. Because of saltstack's unfortunate lack of robustness, each of us is essentially required to be a Python programmer. |

|

I use salt-cloud to create VMs on Azure, then salt-minion is bootstrapped on the machine by salt-cloud and in the end state.apply rolls out config. |

|

@cskowronnek You can use the The Another option is to pin Salt to a previous stable version (e.g., |

|

@max-arnold Thank you for the fast help. script_args: -p python-pip did the trick! |

|

so you guys decided to remove existing package from the repository instead of releasing a version including the fix? :-) |

|

Is there any news on this one? |

|

If you've installed salt from a repo I recommend downgrading to 2019.2.0. That worked for me. |

|

Yes, I edited my previous post as we're not looking for a workaround but a real solution. |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. If this issue is closed prematurely, please leave a comment and we will gladly reopen the issue. |

|

bad bot! |

|

Thank you for updating this issue. It is no longer marked as stale. |

|

Oh, lol. Looky here, another issue that could've been fixed by vendorizing module deps: #54392 |

|

We found a similar issue by using 'latest'. A lot of packages were not being installed at all so we had to downgrade to 2019.2 per @CaptainSanders #54755 (comment) Here's the error for us: Traceback (most recent call last): |

|

Not sure why the stale bot is attacking this issue, but attempting to rid the ticket of the enemy evil doer with |

|

Since this issue re-emerged in 3000.1 can we keep it open? |

Agreed. We're having to fix this on our RHEL6 and RHEL7 minions currently: salt-3000-1.el6 and salt-3000-1.el7. |

While testing my setup with Debian Buster and the "stable" Saltstack version (2019.2.0) on a vagrant instance, I ran into weird problems...

Especially since I didn't use pip or most of the other modules named in the failure messages, I searched for over half an hour for the cause:

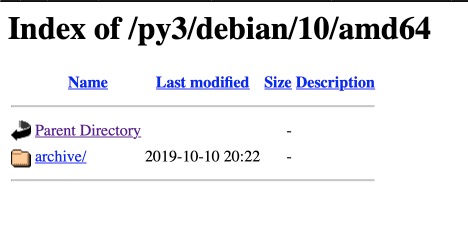

After wasting my night, I luckily found that the system had installed a new version which was not yet officially released:

2019.2.1

And thanks to the autoupdate function, my other instances were affected as well, and I had to manually downgrade dist to

Interestingly, my common vagrant setup has set up the line with

stretch and not buster, but this seems to be because you reuse stretch packages for buster?

AFTER 10 MINUTES of REPAIRING EACH INSTANCE, caused by additional broken package dependencies, I can run my states again as usual:

ah... now documentation shows the buggy version as released...
