
Expose window 'surface' size, distinct from the 'inner' size #2308

Comments


Hm, I wonder what the problem with This is also what is being used by downstream consumers.

The inner size is the size you should be using for drawing. What you want is

The main problem is that "inner_size" currently has two different usages (physical and safe size), and yeah, there's some potential for splitting the other way like you're suggesting (adding a new API for the safe area). I'm not sure it's as clear-cut as you suggest though... For example, on iOS the inner_size right now is the safe area, not the physical size. It was also suggested in #2235 that the inner size could represent the safe area on Android. I think it's fair to say that intuitively, just based on the vocabulary used, 'inner size' could reasonably be expected to return the safe size, perhaps more so than the physical size. The vocabulary right now doesn't seem like a good match for reporting the physical size (and in fact it doesn't consistently report the physical size). One other technical reason to consider splitting it the other way (e.g. add a physical_size API instead of adding a safe_size API) is that the existing Hope that clarifies my thinking a bit.

I agree that I'm not sure the better name is "physical size". What is expected of the

Right, another name might help avoid confusion with the existing "PhysicalSize" APIs. Physical size was my first preference since I think that's what I'd want to call it if there was no conflict, and Bevy was at least one example that also seems to show they prefer the term "physical size" for this. "Surface size" might be a good alternative though, since I'm generally talking about the size that would be used to create a rendering surface, such as an EGL/GLX/WGPU surface. "Content" size could be confused with the "inner" size I think, i.e. that sounds like the area where the application will draw, which might be smaller than the surface size if there's a safe area.

Summary of Terminology

This is how I could see the terms being defined:

Any API to change the outer or inner size is implicitly going to have to also resize the surface size, and the exact relationship between the surface size and the inner/outer sizes may be backend-specific.
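To make the proposed terminology a bit more concrete, here's a rough Rust sketch of the three sizes as a trait, plus a mock mobile-style backend where the inner size is an inset safe area while the surface size covers the whole screen. `Size`, `WindowSizes` and `MockMobileWindow` are hypothetical names for illustration only, not winit API, and the inset numbers are made up.

```rust
/// Hypothetical size in physical pixels (deliberately not winit's `PhysicalSize`).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Size {
    pub width: u32,
    pub height: u32,
}

/// Hypothetical trait sketching the terminology discussed above (not winit API).
pub trait WindowSizes {
    /// Size of the window including any frame or decorations.
    fn outer_size(&self) -> Size;
    /// Size of the content area inside the frame; may be a safe area on mobile.
    fn inner_size(&self) -> Size;
    /// Size that render surfaces (EGL/GLX/wgpu) should be allocated at.
    fn surface_size(&self) -> Size;
}

/// Mock mobile-style backend: no frame, so outer == surface, while the inner
/// size excludes a top inset (e.g. status bar) and a bottom inset (e.g. nav bar).
pub struct MockMobileWindow {
    pub screen: Size,
    pub top_inset: u32,
    pub bottom_inset: u32,
}

impl WindowSizes for MockMobileWindow {
    fn outer_size(&self) -> Size {
        self.screen
    }

    fn inner_size(&self) -> Size {
        Size {
            width: self.screen.width,
            height: self
                .screen
                .height
                .saturating_sub(self.top_inset + self.bottom_inset),
        }
    }

    fn surface_size(&self) -> Size {
        self.screen
    }
}
```

A renderer would configure its swapchain from `surface_size()`, while a UI layout pass would use `inner_size()`; on a desktop backend with server-side frames the relationship could look quite different.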

I'm fine with that naming scheme, especially if we add a small section in the docs where we specify this terminology.

Just to note here, I did a fairly sweeping edit of the original issue, to refer to "surface size" instead of "physical size" since I think there was some agreement that would be better terminology (would hopefully avoid confusion with the

I think the However we must add the Wayland could also get a protocol for that, given that such stuff is exposed in EDID IIRC, so compositors could deliver it. How does it sound, @rib?

I think if the 'inner' size effectively becomes the size for surfaces, then it ends up being a misleading / inappropriate name. I believe "inner" in the current API name was originally intended to mean that it was the size of the window inside the frame. That's still a useful thing to be able to query, but it varies across window systems whether that's related to the size of the surface. I tried to distinguish these concepts in the table, but maybe the table is overcomplicated / unclear, I'm not sure. If there's an API that would be specifically documented to provide the size that surfaces can be allocated at, why would you want to call it the "inner_size"?

I'd suggest to rename

I think it doesn't make sense to include The "surface size" is unlikely to change often, but So I think these two concepts deserve to be differentiated. Overall the proposal looks good to me.

@daxpedda the occlusion here was a bit confusing; it's mostly about the notches due to hardware (read: MacBooks or phone cameras). It's commonly called a safe area, though (the area where you can draw and your content won't be obscured by hardware limitations). I'd have to look at what such APIs usually expose. Also, maybe we should call

I like it!

I think it should be

If we can expose it I would also be in favor of calling it |

Being clear that the size is the size that should be used for creating Vulkan / EGL surfaces was the reason why I think there should be a "surface_size" API: that would be the unambiguous purpose of the API, to know the size that should be used when creating GPU API surfaces. Referring to that as a "view" size doesn't really mean anything to me, sorry; how would you define what a 'view' is? If it weren't called "surface_size", I would probably want to simply refer to it as the "size" or "window_size", i.e. the canonical size of the window, which can be documented as the size that surfaces should be allocated at.

Is there a reason you want to stop exposing the 'inner' / 'outer' sizes as a way of exposing the geometry of window frames? I don't have strong opinions about that, since I don't really know when I would ever need to know the size of a window frame (except if it's client-side).

Insets could maybe be queryable with an enum, since there can be lots of different insets on mobile platforms. E.g. for Android see:

It's also notable that it can be desirable for applications to e.g. render underneath an onscreen navigation bar on Android (which may be transparent), but they need to be aware that the nav bar won't be sensitive to application input (only to the nav buttons), and so the semantics of different insets are pretty important.
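As a rough idea of what "queryable with an enum" could mean, here's a Rust sketch where insets are looked up per kind and callers combine only the kinds they care about. `InsetKind`, `Insets` and `MockInsetSource` are hypothetical (loosely inspired by the inset types Android distinguishes, not a real winit or Android API), and combining by per-edge maximum is an assumption about how overlapping insets should merge.

```rust
use std::collections::HashMap;

/// Hypothetical inset categories, loosely inspired by the kinds Android distinguishes.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub enum InsetKind {
    StatusBar,
    NavigationBar,
    DisplayCutout,
}

/// Per-edge insets in physical pixels.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Default)]
pub struct Insets {
    pub left: u32,
    pub top: u32,
    pub right: u32,
    pub bottom: u32,
}

impl Insets {
    /// Per-edge maximum: overlapping insets (e.g. a cutout inside the
    /// status bar area) are not double counted.
    pub fn union(self, other: Insets) -> Insets {
        Insets {
            left: self.left.max(other.left),
            top: self.top.max(other.top),
            right: self.right.max(other.right),
            bottom: self.bottom.max(other.bottom),
        }
    }
}

/// Mock source of insets keyed by kind (a stand-in for a real backend query).
pub struct MockInsetSource {
    pub insets: HashMap<InsetKind, Insets>,
}

impl MockInsetSource {
    /// Insets for one kind; zero if the platform reports none.
    pub fn insets(&self, kind: InsetKind) -> Insets {
        self.insets.get(&kind).copied().unwrap_or_default()
    }

    /// Combined insets for only the requested kinds, so a caller can e.g.
    /// ignore the nav bar for drawing but include it for touch targets.
    pub fn combined(&self, kinds: &[InsetKind]) -> Insets {
        kinds
            .iter()
            .fold(Insets::default(), |acc, &kind| acc.union(self.insets(kind)))
    }
}
```

Keeping the kinds separate, rather than returning one pre-merged safe area, is what would let an app draw under a transparent nav bar while still keeping interactive UI out of it.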

I think by default winit should allow users to draw into whatever is physically possible, and then they should use insets to offset their content. How does this sound, @rib? My only "issue" with How do folks on Android usually handle all of that? Do they use

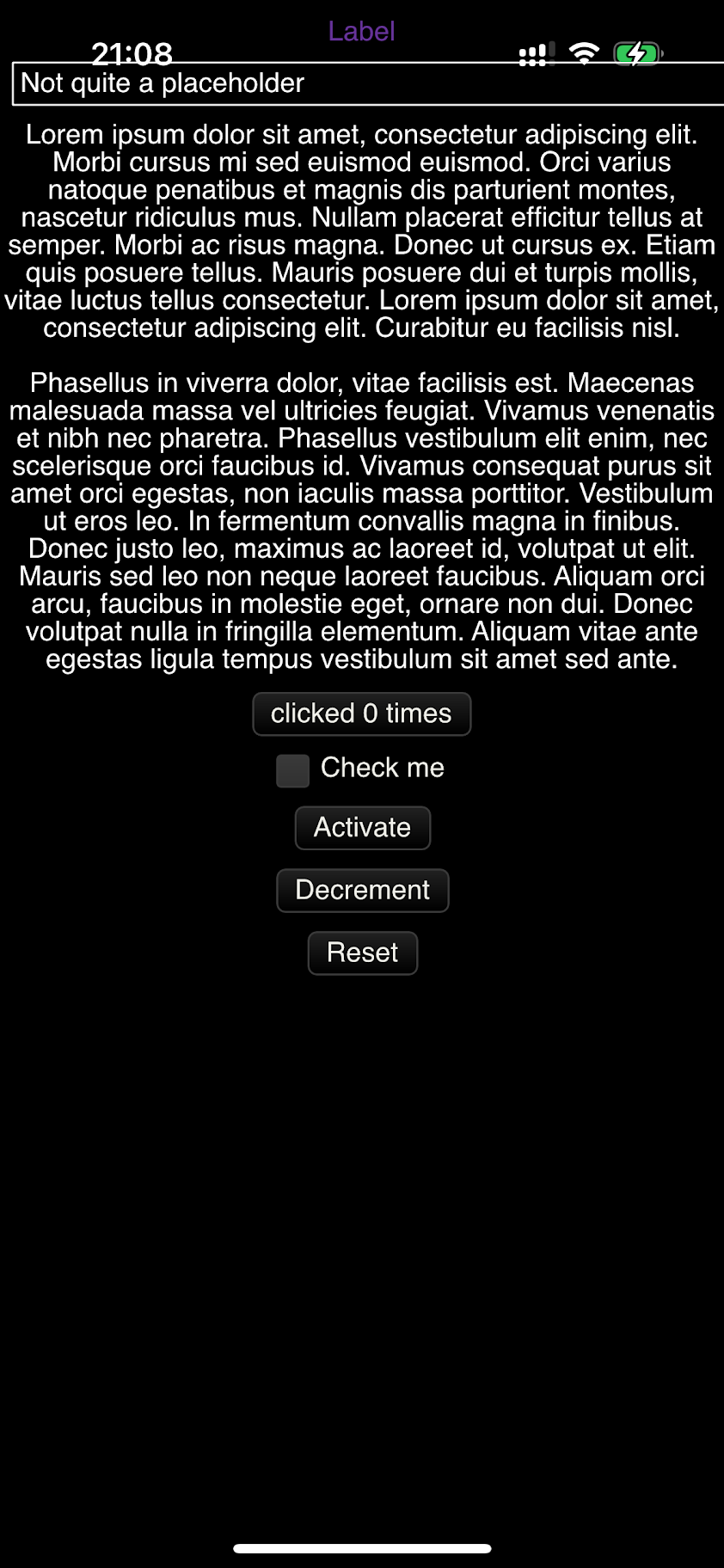

As I understand it, on iOS the outer_size corresponds to the size of the window, meaning that when the surface is rendered using inner_size (the safe/non-obscured size), the elements get stretched. There's an open issue in winit about clarifying/standardizing the different sizes, but until that's done, switching to outer_size fixes the issue. The touch positions now also match the rendering.

Winit issue: rust-windowing/winit#2308

Fixes #419


@bjornkihlberg what backend and

I bet that was Wayland, and what was reported is correct. On Wayland you're the one controlling the sizes all the way; winit mostly tells you about the suggestions from the compositor, and both sizes are correct. If you end up with visually smaller sizes, it's on you.

@kchibisov to then ask the question that I wanted to ask: is there some math that adds the "expected" title bar size to the surface size? But in the end we use CSD to render it within the surface? The screenshot has a window with Or is that actual

@MarijnS95 we add them ourselves because outer size is the size of the window including the decorations. |

It was on Wayland. |

@kchibisov then who rendered the decorations within

Decorations are not within the @bjornkihlberg could you provide a |

It looks like we are misunderstanding each other. I pointed out the same, because that is not what seems to be happening in #2308 (comment), and said that this must be some kind of a bug. So we agree :) |

I mean, they've asked for

@kchibisov download the first screenshot and open it in your favorite image editor. Count or measure the pixels. The total window size including decorations is That doesn't match the returned

Also it looks like GitHub is having a bug with timezones. I just posted #2308 (comment) in reply to #2308 (comment) (which was in reply to #2308 (comment)): Probably because the servers are in the US, whose time went back one hour from CDT to CST, 10 minutes ago?

@kchibisov sure, let's wait for At least it makes sense that if CSD is never rendered to the subsurfaces around the window,

size is entirely controlled by the user, as I said: whatever they draw is whatever is displayed. If they draw less, it's their issue, not winit's.

I completely don't understand you. They requested 800x600 for the inner size, got 800x635 for the outer size, and the decorations are at the (0, -35) location. I have no idea where they get other sizes from; we put decorations at (0, -35) relative to the surface origin, so in the negative coordinate space. If the compositor is broken, it could maybe move them into the surface for whatever reason.
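For reference, the arithmetic described here can be sketched as follows. It assumes the decorations are only a title bar above the surface (consistent with the width staying at 800); `Rect` and `outer_rect_with_title_bar` are illustrative names, not winit or sctk API.

```rust
/// Rectangle in surface-local coordinates; y may be negative.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Rect {
    pub x: i32,
    pub y: i32,
    pub width: u32,
    pub height: u32,
}

/// Outer window rect when a client-side title bar of `title_bar_height` is
/// placed directly above the surface origin (no side/bottom borders assumed).
pub fn outer_rect_with_title_bar(inner_width: u32, inner_height: u32, title_bar_height: u32) -> Rect {
    Rect {
        x: 0,
        y: -(title_bar_height as i32),
        width: inner_width,
        height: inner_height + title_bar_height,
    }
}
```

With an 800x600 inner size and a 35 px title bar, this gives an outer rect at (0, -35) of size 800x635, so nothing from the decorations should overlap the 800x600 surface itself.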

Edited to use "surface size" instead of "physical size" as discussed. The edit also tries to clarify the table information.

Currently the general, go-to API for querying the size of a winit window is `.inner_size()`, which has conflicting requirements due to some downstream consumers wanting to know the size to create render surfaces and other downstream consumers wanting to know the safe, inner bounds of content (which could exist within a potentially larger surface).

For lower-level rendering needs, such as supporting integration with wgpu and/or directly with OpenGL / Vulkan etc., what downstream wants to know is the size of the surface they should create, which effectively determines how much memory to allocate for a render target and the width and height of that render target.

Incidentally, 'physical size' is how the Bevy engine refers to the size it tracks, based on reading the winit `.inner_size()`, which is a good example where it's quite clear what semantics they are primarily looking for (since they will pass the size to wgpu to configure render surfaces). In this case Bevy is not conceptually interested in knowing about insets for things like frame decorations or mobile OS safe areas.

Conceptually, the `inner_size()` is 'inner' with respect to the 'outer' size, and the `outer_size()` is primarily applicable to desktop window systems, which may have window frames that extend outside the content area of the application's window and may also be larger than the surface size that's used by the application for rendering. For example, on X11 the inner size will technically relate to a separate, smaller child window that's parented by the window manager onto a larger frame window.

Incidentally, on Wayland, which was designed to encourage client-side window decorations and also to sandbox clients, the core protocol doesn't let you introspect frame details or an outer screen position.

Here's a matrix to try to summarize the varying semantics of the inner/outer and surface sizes across window systems, to help show why I think it would be useful to expose the surface size explicitly and decouple it from the inner_size:

This may vary, but I want to highlight that on X11, due to its async nature, there are times where it makes sense to optimistically refer to a pending/requested size (so e.g. `.set_inner_size()` followed by `.inner_size()` can return a pending size), but the 'surface' size would be the remote, server-side size.

In some situations the driver may need to reconcile mismatched surface/window sizes due to async resizing of the window, e.g. using a top-left gravity and no scaling.

Protocol has no notion of frames but clients may draw their own window decorations and impose their own inner inset.

Notably, there may be a scale and rotation between the wl_buffer and the wl_surface to account for the physical display (e.g. the client may render rotated 90 degrees for a display that's connected with an internal rotation that doesn't match the orientation of the product from the user's point of view).
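On Wayland, a client declares this relationship with the `wl_surface.set_buffer_scale` and `wl_surface.set_buffer_transform` requests. The Rust sketch below only shows the resulting size arithmetic for a client that pre-rotates its rendering; `Transform` is a hypothetical enum covering just the rotations (the protocol's flipped variants are omitted), and `buffer_size` is an illustrative helper.

```rust
/// Subset of output transforms (rotations only; flipped variants omitted).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Transform {
    Normal,
    Rot90,
    Rot180,
    Rot270,
}

/// Buffer size to allocate for a surface of `logical` size when the client
/// pre-rotates its rendering by `transform` and renders at integer `scale`.
pub fn buffer_size(logical: (u32, u32), scale: u32, transform: Transform) -> (u32, u32) {
    assert!(scale > 0, "scale must be at least 1");
    let (w, h) = (logical.0 * scale, logical.1 * scale);
    match transform {
        // 0 and 180 degrees keep the same width and height.
        Transform::Normal | Transform::Rot180 => (w, h),
        // 90 and 270 degrees swap width and height.
        Transform::Rot90 | Transform::Rot270 => (h, w),
    }
}
```

This is one more case where the size a renderer allocates at is not simply the window's inner size.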

Tbh, this is the window system I'm least familiar with, so I'm not clear on how the compositor maps rendered buffers to windows, or what kinds of transforms it allows, if any.

Note that in wgpu it looks like they ignore sizes given by the application when configuring surfaces, which is lucky, considering winit reports an inset safe area that also gets treated as a physical size for configuring render surfaces.

Possibly transposed according to how a display is physically mounted

Note: similar to Wayland it's possible to have a render-surface transform to account for how a display is physically mounted to take full advantage of hardware compositing

An example where the conflation of inner and surface size is problematic is on Android, where we want to let downstream APIs like Bevy know what size they should allocate render surfaces at, but we also want to let downstream users (e.g. also Bevy, or any UI toolkit such as egui) know what the safe inner bounds are for rendering content that won't be obscured by things like camera notches or system toolbars. (ref: #2235)
