
Teletext

Previous Page Identifying VBI data

Next Page Decoding RF Captures

BBC Testcard F 1994 PAL VHS sample with teletext in the VBI space.

Teletext was an information system that started in the 1970s and was mostly shut down between 2009 and 2015. It was widely used in the United Kingdom and mainland Europe, with very limited use in North America.

(Still in use in some European/Eastern nations)

Teletext data packets were embedded into the top VBI picture area on live broadcasts. The service was used daily for news, weather and bulletin boards, and in the analogue era it was highly regarded for putting same-day stocks and sports information at a user's fingertips. There were even interactive games.

Thanks to Ali1234 for the R&D into this.

Teletext decoding can use NVIDIA CUDA/OpenGL acceleration, and falls back to the CPU if no GPU is available.

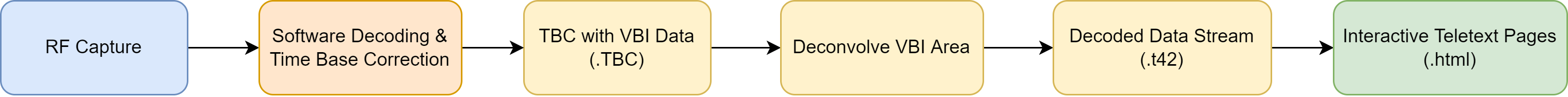

Decode ("deconvolve") teletext data with:

teletext deconvolve --keep-empty -c tbc INPUT.tbc

View teletext data with:

teletext vbiview -c tbc INPUT.tbc

"So what you want is the --keep-empty mode when deconvolving. It will insert empty packets in the t42 when there's no line identified, thus the output t42 has the same number of "lines" as the input tbc, and you can go from a t42 packet back to the original." - Ali1234 (2024)

This will eventually have a proper GUI viewer for extracted data.

A Raspberry Pi based closed captions and teletext generator tool that uses the composite output. It can be used with a TRRS 3.5mm breakout jack on current Pi boards, or with older Pi models that have a dedicated yellow composite RCA jack.

The HackTV application can generate CVBS or modulated TV signals to file or pipe them to SDRs such as the FL2K & HackRF units.

Zapping - Linux

Teletext Archive Proposal

Capture full tapes to a single file, then import into the database.

Use the splits tool (to be written) to mark sections of the capture containing a contiguous signal. Enter metadata and commit to the database.

Once the splits are created, deconvolution can be performed. This is very resource intensive and should not be performed on the server. The server should instead bundle up the work and serve it to clients for distributed processing.
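As a rough illustration of how the server might bundle up a split for distributed processing, the sketch below cuts a split's line range into fixed-size work units. The `WorkUnit` type, the `bundle_work` function, the unit size, and the exclusive end line are all assumptions made for this example; the proposal does not define them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkUnit:
    """Hypothetical unit of deconvolution work handed to one client."""
    split_id: str
    start_line: int  # first line of the unit (inclusive)
    end_line: int    # one past the last line of the unit (exclusive, assumed)


def bundle_work(split_id: str, start_line: int, end_line: int,
                lines_per_unit: int = 100_000) -> list[WorkUnit]:
    """Cut the line range [start_line, end_line) into consecutive work units."""
    if lines_per_unit <= 0:
        raise ValueError("lines_per_unit must be positive")
    units = []
    for unit_start in range(start_line, end_line, lines_per_unit):
        unit_end = min(unit_start + lines_per_unit, end_line)
        units.append(WorkUnit(split_id, unit_start, unit_end))
    return units


if __name__ == "__main__":
    for unit in bundle_work("example-split", 0, 250_000):
        print(unit)
```

Because each unit records its own line range, results returned by clients can be placed back into the deconvolution in order, whichever client finishes first.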

Once the full split has been deconvolved it can be parsed into raw subpages. These are just lists of packets, unmodified. This is a fast operation and can be done on the server.
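A minimal sketch of this parsing step follows. It assumes 42-byte packets whose first two bytes are the Hamming 8/4 coded magazine and row address, and it treats every row 0 (header) packet as the start of a new subpage in its magazine. It reads the data bits without attempting error correction, and it does not decode page or subpage numbers, so it only shows the grouping idea. The function names are made up for this example.

```python
def hamming84_data(byte: int) -> int:
    """Return the 4 data bits of a Hamming 8/4 byte (bits 1, 3, 5, 7), ignoring parity."""
    return (((byte >> 1) & 1)
            | ((byte >> 3) & 1) << 1
            | ((byte >> 5) & 1) << 2
            | ((byte >> 7) & 1) << 3)


def mrag(packet: bytes) -> tuple[int, int]:
    """Decode the magazine (1-8) and row (0-31) from the first two packet bytes."""
    n1 = hamming84_data(packet[0])
    n2 = hamming84_data(packet[1])
    magazine = (n1 & 0x7) or 8       # magazine 0 on the wire means magazine 8
    row = (n1 >> 3) | (n2 << 1)
    return magazine, row


def split_subpages(packets: list[bytes]) -> list[list[bytes]]:
    """Group packets into raw subpages: unmodified lists of packets."""
    open_pages: dict[int, list[bytes]] = {}   # magazine -> subpage being collected
    finished: list[list[bytes]] = []
    for packet in packets:
        magazine, row = mrag(packet)
        if row == 0:
            # A header closes the previous subpage in this magazine.
            if magazine in open_pages:
                finished.append(open_pages[magazine])
            open_pages[magazine] = [packet]
        elif magazine in open_pages:
            open_pages[magazine].append(packet)
        # Packets seen before any header for their magazine are skipped.
    finished.extend(open_pages.values())
    return finished
```

Only the grouping changes; the packets themselves are passed through untouched, which matches the "unmodified" requirement above.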

Squashing means finding all instances of the same subpage in a split, and comparing them to find the most common character in each location. This reduces error. This operation is not very CPU intensive, but it would be easy to distribute it. The resulting subpage is a child of all subpages used to make it.
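The majority vote described above can be sketched in a few lines. This example assumes every instance of the subpage has already been aligned to the same length, and the `squash` name is chosen only for illustration; it is not taken from any existing tool.

```python
from collections import Counter


def squash(instances: list[bytes]) -> bytes:
    """Return the most common byte at each position across all instances."""
    if not instances:
        raise ValueError("need at least one instance to squash")
    length = len(instances[0])
    if any(len(instance) != length for instance in instances):
        raise ValueError("all instances must have the same length")
    # zip(*instances) yields one tuple of byte values per character position.
    return bytes(Counter(column).most_common(1)[0][0]
                 for column in zip(*instances))


if __name__ == "__main__":
    captures = [b"HELLO", b"HELLO", b"HxLLO", b"HELLP"]
    print(squash(captures))
```

Each position is voted on independently, so an error in one capture is outvoted as long as most other captures agree at that position.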

Finally the squashed subpages can be manually fixed using a web editor or other tool that can communicate with the API. Whenever a page is modified this way, the old revision should be kept.

Once all the pages have been restored, the fixed lines can be back-propagated to the original splits they came from to produce a clean packet stream.

Users should be presented with a timeline and channel selector. This should indicate availability of pages. Upon selection they are presented with the main index page for that service. Navigation can then be performed via hyperlinks in the HTML rendered pages, or by entering page numbers.

Users should be able to search by channel, date, keyword, and quality. Results should show some (three?) lines from the page, rendered as teletext. Only pages above a certain level of quality should be indexed, and results should favour the best quality pages.

Pages should be available as HTML or images. Whenever the user is presented with a page, they should be able to see the full provenance of that page - who captured it, what recovery steps have been done, and the original VBI lines (or at least how to get them).

The API should cover all functions needed to implement the recovery workflow, as well as search and retrieval functions. Pages should be available as HTML, image, or packet data. A streaming API should also be available, allowing software inserters to request a packet stream for a given date and channel. This stream should be generated using back-propagation from the recovered subpages to the original split lines. If no pages are available, this should return an error page in teletext format.

A channel record stores metadata about a TV channel.

- Full Name

- Call sign (eg BBC1)

- Company/group

- Country

- Language (as a locale code, e.g. en_GB, nn_NO etc)

- TS 101 231 metadata (may be multiple fields)

A local version of a channel.

- Channel

- Region

- Others?

A source is a raw VBI capture. It is a sequence of samples organized into lines of a fixed length. It may contain separate recordings from different dates and channels. This corresponds to any raw capture file on disk with unknown contents.

- GUID

- Owner (Person)

- Source Format (VHS, Beta, LD, Live etc)

- Recording Device (If known)

- Playback Device (If known)

- Capture Device (BT8x8, DdD etc)

- Sample Format (eg unsigned 8 bit)

- Line length (samples per line)

- Field lines (lines per field)

- Field range (which lines in each field may contain teletext)

A split is a subsection of a source which contains a single contiguous teletext stream, for example an uninterrupted recording of a whole episode of a TV show.

- Source

- Start Line

- End Line

- Start TimeDate (Approximate)

- End TimeDate (Approximate. Can be calculated)

- Channel

- Region (if known)
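The note that the end time "can be calculated" can be made concrete with a small sketch of the source and split records. The field names, the reduced set of fields, the exclusive end line, and the default of 50 fields per second (PAL) are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Source:
    """Subset of the source record needed for timing (hypothetical field names)."""
    guid: str
    sample_format: str
    line_length: int   # samples per line
    field_lines: int   # captured lines per field


@dataclass
class Split:
    source: Source
    start_line: int
    end_line: int                     # assumed exclusive
    start_time: datetime              # approximate
    fields_per_second: float = 50.0   # assumption: PAL field rate

    def end_time(self) -> datetime:
        """Estimate the end time from the number of captured lines."""
        fields = (self.end_line - self.start_line) / self.source.field_lines
        return self.start_time + timedelta(seconds=fields / self.fields_per_second)


if __name__ == "__main__":
    source = Source("example-guid", "unsigned 8 bit", 2048, 16)
    split = Split(source, 0, 16 * 50 * 3600, datetime(1994, 1, 1, 12, 0))
    print(split.end_time())
```

Storing only the start time and deriving the end time keeps the two values from drifting apart when a split's line range is edited.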

A deconvolution is the result from deconvolving a split. It is a teletext packet stream. Ideally it should be possible to link each line in the deconvolution back to the raw VBI samples it came from.

- Split

- Person (Who deconvolved it)

- Software/version used

- Subjective Quality (as decided by the uploader and/or others)

- Objective Quality (as decided by some algorithm yet to be written)

A reconstructed subpage. It should record the method used for restoration and all lines used to compile it. If it is a revision of a previous subpage, it should reference that subpage.

- Page Number

- Subpage Number

- TimeDate (Approximate. Calculated from earliest header?)

- Packets

- Raw (has it been modified from the deconvolution at all?)

- Spellchecked (has it been passed through an automated spelling checker?)

- Manual restoration (has it been manually fixed by a human?)

- Subjective Quality (as decided by the uploader and/or other viewers)

- Objective Quality (as decided by some algorithm yet to be written)

- Parents (if this is squashed or manually restored), or:

- Source Deconvolution

- Source Lines (the lines from the Split used to generate it)

Raw VBI data has a fixed size per line, so the total size depends only on the length of the capture. Currently the BT8x8 captures are the largest, with 2048 8-bit samples per line. Raw Domesday Duplicator output is larger, but it contains the whole picture, which we do not need.

- 1 hour: 5.75 GB

- A four hour tape: 23 GB

- 9000 four hour tapes: 207 TB

- 1 hour per day for 40 years and 5 channels: 415 TB

- All teletext ever broadcast on UK terrestrial analog: 10 PB

Each packet is 42 bytes without the fixed CRI, with one packet per line. The size of the packet stream is closely related to the length of the original capture, but lines which do not contain teletext can be discarded. The numbers below assume all lines are used.

- 1 hour: 120 MB

- A four hour tape: 480 MB

- 9000 four hour tapes: 4.32 TB

- 1 hour per day for 40 years and 5 channels: 9 TB

- All teletext ever broadcast on UK terrestrial analog: 210 TB
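The per-hour figures can be sanity checked with simple arithmetic. The sketch below assumes 32 captured lines per frame at 25 frames per second, which is taken from the `-s:v 2048x32 -r 25` options used in the ffmpeg commands in the compression table; real captures may differ. With those assumptions a 42-byte packet stream comes to about 121 MB per hour, and raw 2048-byte lines come to about 5.9 GB per hour, which is in the same region as the estimates listed.

```python
LINES_PER_FRAME = 32      # assumption, from "-s:v 2048x32"
FRAMES_PER_SECOND = 25    # assumption, from "-r 25"
SECONDS_PER_HOUR = 3600


def bytes_per_hour(bytes_per_line: int) -> int:
    """Storage needed for one hour when every captured line is kept."""
    return bytes_per_line * LINES_PER_FRAME * FRAMES_PER_SECOND * SECONDS_PER_HOUR


if __name__ == "__main__":
    packets = bytes_per_hour(42)     # deconvolved packets, 42 bytes per line
    raw = bytes_per_hour(2048)       # raw 8-bit samples, 2048 per line
    print(f"packets: {packets / 1e6:.1f} MB per hour")
    print(f"raw:     {raw / 1e9:.2f} GB per hour")
    print(f"packets, four hour tape: {4 * packets / 1e6:.0f} MB")
```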

A single subpage is approximately 27 packets, or about 1 kB. A service is a set of subpages broadcast in a looping sequence. It is difficult to quantify an exact size, as the number of subpages varies greatly between channels and years. However, a typical service seems to be about 2 MB.

- 9000 four hour tapes, assuming half-hour recordings: 72000 services, 144 GB

- 1 service per day for 40 years and 5 channels: 73000 services, 146 GB

Raw VBI samples can be compressed using a variety of algorithms. Compression used should be lossless. There are broadly three types of algorithms to consider: data, video, and audio. Each has pros and cons.

- General data compression algorithms are always lossless, but they provide the worst compression ratios and are often extremely slow. They can’t store metadata about the samples.

- Video codecs have the advantage of being able to encode line and frame sizes, and are designed with very large files in mind. They provide compression somewhat better than data algorithms, but are not optimal because VBI samples have no vertical or temporal correlation. Compression is often very slow.

- Audio codecs give by far the best compression ratio and the fastest encodes but are usually not designed to store such large amounts of data. As a result of this, seeking can be slow.

Note that deconvolved packets are much smaller and compress well with data algorithms like gzip due to their primarily textual content.

| Algorithm | Type | Size | Encode Time | Command |
|---|---|---|---|---|
| raw | raw | 25GB | 4 hours | Uncompressed - encode time is the length of the capture. |
| gzip | data | 16GB | 2 hours | gzip -9 sample.vbi |
| xz | data | 15GB | 8 hours | xz -9 sample.vbi |
| flac | audio | 10GB | 15 minutes | flac --best --sample-rate=48000 --sign=unsigned --channels=1 --endian=little --bps=8 --blocksize=65535 --lax -f sample.vbi |
| x264 | video | 13GB | 2 hours | ffmpeg -f rawvideo -pix_fmt y8 -s:v 2048x32 -r 25 -i sample.vbi -c:v libx264 -crf 0 -preset slow sample-h264.mkv |
| x265 | video | 18GB | 3 hours | ffmpeg -f rawvideo -pix_fmt y8 -s:v 2048x32 -r 25 -i sample.vbi -c:v libx265 -x265-params "profile=monochrome8:lossless=1:preset=slow" sample-h265.mkv |
| ffv1 | video | 13GB | 1 hour | ffmpeg -f rawvideo -pix_fmt y8 -s:v 2048x32 -r 25 -i sample.vbi -c:v ffv1 -level 3 sample-ffv1.mkv |
| ffv1 | video | 12GB | 16 minutes | ffmpeg -f rawvideo -pix_fmt y8 -s:v 2048x32 -r 25 -i 0001.vbi -c:v ffv1 -level 3 -coder 1 -threads 8 -context 1 -slices 4 sample-ffv1.l3.c1.large.mkv |
| aec | pcm | 14GB | 18 minutes | aec -n 8 sample.vbi sample.sz |

These are very large. Will the user upload them to the archive, or just upload deconvolved data?

Each deconvolved line needs to be mapped back to the original samples. There are a few ways to do this:

- Make the deconvolved output have the same number of lines as the input. This means adding filler for VBI lines that don’t contain teletext.

- Store line numbers in the deconvolved data. This makes it harder to parse.

- Store the mapping in a separate file.

- Store the mapping directly in the database.
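The first option, padding the output so that it has one packet per input line, can be sketched as follows. The all-zero filler packet is an assumption made for this example; the actual filler written by any given deconvolver may be different, and the function names are hypothetical.

```python
from typing import Iterator

PACKET_SIZE = 42
FILLER = bytes(PACKET_SIZE)  # assumption: an empty line is stored as 42 zero bytes


def pad_to_input_lines(found: dict[int, bytes], total_lines: int) -> bytes:
    """Build a packet stream with exactly one packet per input VBI line."""
    return b"".join(found.get(line, FILLER) for line in range(total_lines))


def packets_with_line_numbers(stream: bytes) -> Iterator[tuple[int, bytes]]:
    """Yield (input line number, packet) for every non-filler packet."""
    for offset in range(0, len(stream), PACKET_SIZE):
        packet = stream[offset:offset + PACKET_SIZE]
        if packet != FILLER:
            yield offset // PACKET_SIZE, packet


if __name__ == "__main__":
    found = {1: b"A" * PACKET_SIZE, 3: b"B" * PACKET_SIZE}
    stream = pad_to_input_lines(found, total_lines=5)
    for line, packet in packets_with_line_numbers(stream):
        print(line, packet[:4])
```

With this layout the line number is implied by the packet's position in the file, so no extra fields or side files are needed, at the cost of storing filler for empty lines.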

