-

Notifications

You must be signed in to change notification settings - Fork 4.9k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Multi-node Prototype #2539

Multi-node Prototype #2539

Conversation

* tell kubelet to use host-only IP (eth1) * enable CNI * hard-code flannel's podSubnet * configure hostname-override

| @@ -32,7 +32,8 @@ import ( | |||

| "github.com/pkg/errors" | |||

| "golang.org/x/sync/errgroup" | |||

| "k8s.io/minikube/pkg/minikube/assets" | |||

| "k8s.io/minikube/pkg/minikube/bootstrapper" | |||

| "k8s.io/minikube/pkg/minikube/bootstrapper" | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@pbitty It seems go fmt was not run for this file.

|

@afbjorklund , thanks for the feedback.

I would lean toward this option, as to not complicate the experience for single-node users. My assumption is that most of the time people will be operating in single-node mode, so it should probably remain the core focus of the minikube UX. My suggestion would be to start this out small, as an experimental feature and see how it gets used.

Please tell me more. I am not sure why the current solutions will not cut it.

Sounds good to me. If this an experimental feature, it can be limited in scope and support, and grow as needed.

I don't think a change in distro would be needed for this feature. Currently it only requires an image that runs systemd and docker (and maybe some subtle details I am overlooking). Everything else is provided by the kubeadm bootstrapper. For simplicity, we might want to keep the choice of distro as a separate (but related?) issue. |

|

You are going to need distributed storage, what if your upgraded pod ends up on another node ? Then all host paths are "gone". You might even need a registry Ignore the comment about the distro, I was just chasing down yet another bug specific to minikube and was annoyed... Those other images are still a lot larger, by default |

Ah, I understand - you are referring to |

|

@pbitty Thanks for the PR. It is much appreciated. I'll give it a deep look soon. We should also set up some time to discuss this in more depth, since its something we'd be interested in. |

|

@pbitty : I think a good start would be to have it work just-as-it-does-now for the master node. And then add some kind of addons, to make it possible to share storage and images to the worker nodes...

So once you grow out of single-node clusters, you will have to move to persistent volumes and registry ? |

|

I am trying to run this, but I ran into several problems with starting nodes:

Still have some kind of cert error, investigating. |

|

@nebril I only tested this with |

|

@r2d4 If you haven't started looking at this yet, I have a cleaner proposal doc coming in another PR. |

|

// We use --ignore-preflight-errors=DirAvailable since we have our own custom addons

// that we also stick in /etc/kubernetes/manifests

// We use --ignore-preflight-errors=Swap since minikube.iso allocates a swap partition.

// (it should probably stop doing this, though...)See #2403 and |

|

@pbitty thanks for pointing this out, I was trying to run 1.9. With 1.8 I also keep getting |

|

Ok, I messed up, I started master node with |

|

I tried to update the code to later versions, think the code is somewhat OK but some tests are still failing: https://github.com/afbjorklund/minikube/tree/multi-node Most of the original comments still hold, and there's some additional discussions in the proposal. |

|

Any news on this? |

|

@christopher-avila : I updated my branch, but neither me nor @pbitty have time to continue with it... |

|

Really wish to see it completed&merged someday... |

|

Hi All, I'm really sorry for the long silence. I built this prototype pretty quickly (and messily) to see how it would look. After chatting about it more with @r2d4 and @afbjorklund, I realized that working with the community to implement it and maintain it would take up much more time than I have available. If anyone is interested in taking this on, I suggest talking about it with @r2d4 . I am happy to contribute ideas and give my own feedback as well Sorry if I wasted anyone's time. I am closing this for now. |

|

@pbitty: thank you for your effort! I tried to merge the previous code with The previous versions (3cf034a 27fdea4 ccb1fce a6f5e77) were in a happier place, but if you are going to add multi-node support to minikube now it is probably better to start over with the implementation. I don't think I will spend any more time on this, if you are interested in doing multiple nodes you are probably better off without Documentation for doing that is at: https://kubernetes.io/docs/setup/independent/install-kubeadm/ |

|

@afbjorklund , sorry I missed your last message for some reason. Thanks for the reply. If I need multi-node before it is supported, I'll definitely do that. Sorry I couldn't follow through with a clean implementation. |

|

@pbitty : no worries, I just meant that a small typo in the help text is probably not what is left for multi-node. Thank you for initial implementation, and we still have multi-node on the roadmap even if it has competition:

* based on the trust level and other configuration, see docs I personally think that multi-node is one of the most important aspects when learning Kubernetes, and if we can provide that locally (without disturbing the single-node workflow) it would be excellent! As per #94. |

This is a working prototype I made (not to be merged), inspired by #94. I am very interested in adding this feature. If it is something that the maintainers are interested in adding, I'd love to work to work out the design with whoever is interested and help implement it.

I've made notes on the prototype in docs/multi-node-prototype.md.

The code in this branch is hacky, but it does work (on my machine at least😄 ).

I'm not sure if this is the best way to discuss the feature, but I figure it's a way to start off the discussion, and if there is interest I could submit a more specific design doc/proposal.

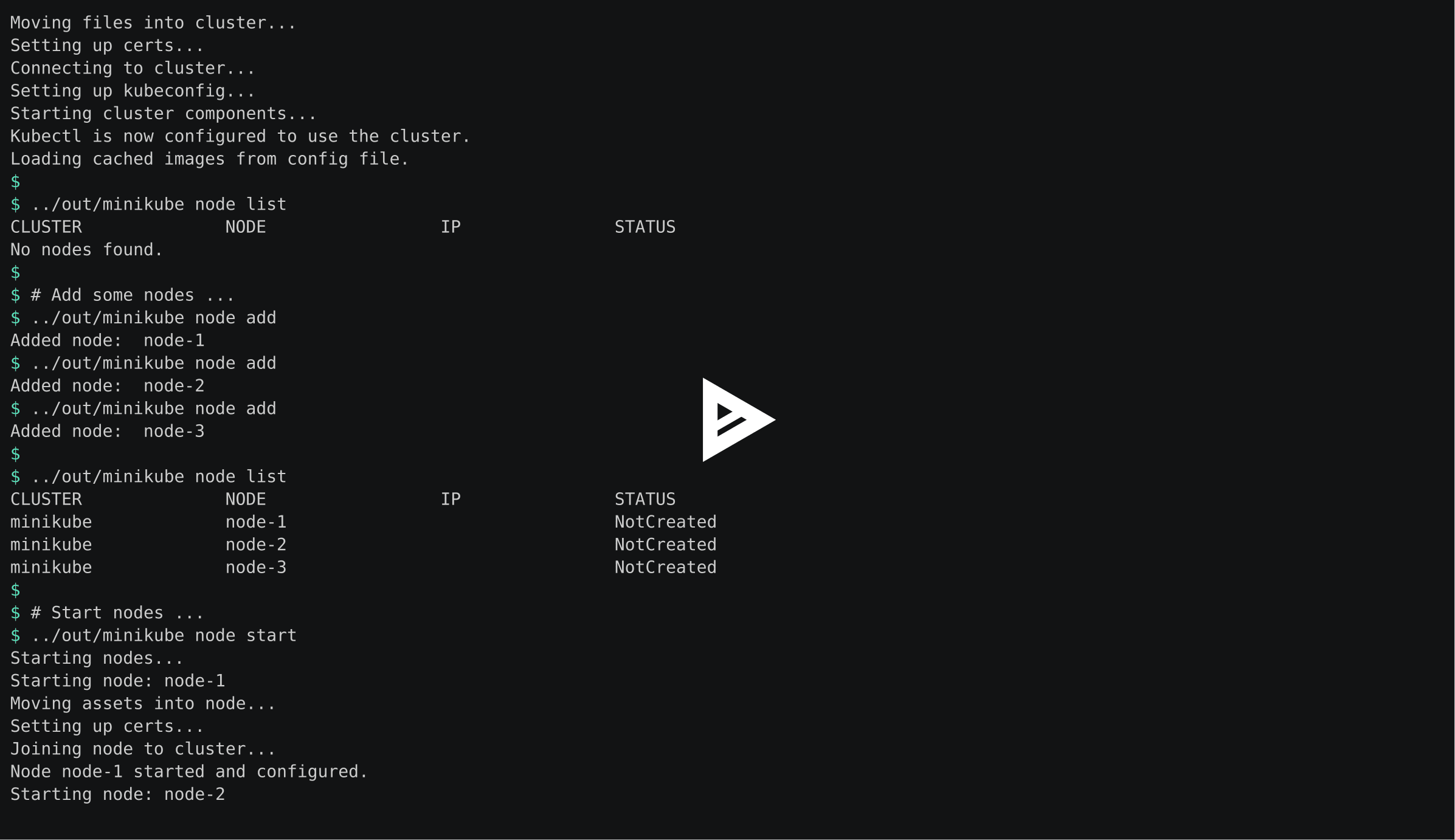

Here's a demo: