
[Feature request] ZFS Zpool properties on Linux #2616

Comments

I think this only requires a minor rewrite of the zfs_linux.go plugin code, plus an explanation in the inputs.zfs stanza of telegraf.conf. I have some working code which I will submit as a PR soon. Does influxdata prefer single (merged) commits for PRs?

For commits you can leave them separate; when we merge PRs we generally squash them.

Thanks @danielnelson.

I started a rebuild for it; we need to improve our test script so it doesn't pull the docker image every time. If something like this happens again, just leave a comment on the PR.

Any update on your work, @tomtastic? I've found this issue while trying to figure out if any of the detailed parameters that are collected by the existing

Several of these are what you are addressing with this issue (and your patch work), and I think they are significantly more useful than the low-level stats that exist right now! On the

Hi @rwarren, sorry for the very slow reply. I'll pull my finger out and commit the code very shortly.

@tomtastic -- awesome, thanks! Grafana is what I'm using for visuals as well, and that looks good. Hopefully your PR will be accepted.

You can test it from here: https://github.com/tomtastic/telegraf/tree/better_zpool_linux. Looking at the code, I'm reminded that 'size, alloc, free, dedup' aren't currently presented, as ZFSonLinux doesn't support the 'zpool list -Hp' syntax (p for parsable) until version 0.7.0. I need to write some unit tests for it all before I submit the PR.
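For illustration only, a minimal Python sketch of what parsing `zpool list -Hp` output involves: `-H` drops the header and separates fields with tabs, and `-p` prints exact numbers instead of human-readable values like `1.81T`. The column order is assumed from the script posted later in this thread and differs between ZFS versions; `-` placeholders (e.g. an empty EXPANDSZ) become `None`. The sample line is made up.

```python
# Column order assumed from a ZFSonLinux 0.8-era `zpool list -Hp`; it varies by version.
COLUMNS = ["NAME", "SIZE", "ALLOC", "FREE", "CKPOINT", "EXPANDSZ",
           "FRAG", "CAP", "DEDUP", "HEALTH", "ALTROOT"]
INT_COLUMNS = {"SIZE", "ALLOC", "FREE", "CKPOINT", "EXPANDSZ", "FRAG", "CAP"}


def parse_value(column, raw):
    """Convert one raw field; '-' means 'not applicable' and becomes None."""
    if raw == "-":
        return None
    if column in INT_COLUMNS:
        return int(raw)  # -p prints exact byte counts / plain integers
    if column == "DEDUP":
        return float(raw)  # e.g. "1.00"
    return raw  # NAME, HEALTH, ALTROOT stay strings


def parse_zpool_list(text):
    """Parse `zpool list -Hp` output (one tab-separated pool per line) into dicts."""
    pools = []
    for line in text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        fields = line.split("\t")
        pools.append({col: parse_value(col, raw) for col, raw in zip(COLUMNS, fields)})
    return pools


# Made-up sample line for a single pool named "tank"
sample = "tank\t1992864825344\t1101659111424\t891205713920\t-\t-\t12\t55\t1.00\tONLINE\t-\n"
print(parse_zpool_list(sample))
```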

Having trouble getting the unit tests to build. I can run 'make test-short', but it only indicates that the zfs plugin fails to build, with no useful errors. @danielnelson, is there anyone I could ask for help with the unit tests here?

I can try to help; can you paste the output here?

It's quite likely the code for the unit tests is garbage; the required changes are small, but I don't know enough golang to make it any better.

Looks like

@tomtastic Any chance to get this moving again? ZFS could be really useful.

I don't know enough Golang currently to fix the unit test code, sorry @mazhead. But if you take the zfs plugin code from my branch https://github.com/tomtastic/telegraf/tree/better_zpool_linux you can compile a working version; it's just the unit tests I can't sort out for submitting the PR to get this merged.

The approach of parsing zpool output won't work well in practice. Also, it captures only a small fraction of the interesting info. The approach I've started taking, which does work better, is a zpool replacement that calls the zfs ioctl directly and gets the information as nvlists. These are then written out as influxdb line protocol. This is called via inputs.exec, but could also be a self-timed daemon. For both the zpool command and a zfs ioctl consumer, there is a blocking situation that can occur and must be dealt with: when the going gets tough, the zpool command blocks. Timeouts already exist for inputs.exec, and it is unclear to me whether other telegraf agents inherit the timeouts feature in such a way that blocking in the zfs ioctl is properly handled. Architecturally, it feels cumbersome to include a zfs ioctl caller as a native golang agent. The ioctl interface is not a stable interface and will cause integration pain forever. By contrast, the zpool replacement can be distributed with the ZFSonLinux code and keep up with the zfs ioctl changes, thus keeping the interface boundary at the influxdb line protocol layer. Thoughts?
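A minimal sketch of the timeout concern, assuming a collector that shells out from Python (`run_with_timeout` is a hypothetical helper, not part of telegraf or ZFS). `subprocess.run` kills the child when the timeout expires, but, as the docstring notes, a process blocked inside the kernel may not die on SIGKILL, so this bounds ordinary slowness rather than a suspended pool.

```python
import subprocess


def run_with_timeout(cmd, timeout_s):
    """Run cmd and return its stdout, or None if it did not finish in time.

    subprocess.run() kills the child on timeout and raises TimeoutExpired.
    A non-zero exit status raises CalledProcessError (check=True).
    Caveat: a process stuck in an uninterruptible kernel wait (as a hung
    zfs ioctl can be) may not exit even after SIGKILL, and run() waits
    for the killed child, so the caller can still block in that case.
    """
    try:
        result = subprocess.run(cmd, capture_output=True, text=True,
                                timeout=timeout_s, check=True)
    except subprocess.TimeoutExpired:
        return None
    return result.stdout
```

For example, `run_with_timeout(["zpool", "list", "-Hp"], 10)` would return `None` if `zpool` takes longer than 10 seconds, provided the process can actually be killed.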

To spur the conversation, here's an example of the output of the scan statistics collector: it is simply not possible to get that data from screen-scraping. Of more interest to me personally, the performance histograms are stored as nvlist arrays.

Sounds great. Have you already proposed the addition of your tool to the ZFSonLinux team? Will it output line protocol directly?

@danielnelson the tool outputs line protocol directly. I have not yet proposed it upstream, but it slides into the source tree nicely as a new command, so there aren't any conflicts with existing commands. To complete it properly, there is more work to do and automated tests to build. Think of the goal as "zpool status + zpool iostat + zpool get" all rolled into one and output as influxdb line protocol.
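To make the output format concrete, a minimal sketch of writing one record as InfluxDB line protocol (`measurement,tag=value field=value [timestamp]`). The measurement, tag and field names are hypothetical and not the schema zpool_influxdb actually emits; escaping follows the line protocol rules for commas, spaces, equals signs and quoted string fields.

```python
def _escape(s, chars):
    """Backslash-escape each character in `chars` (measurement, tag, field keys)."""
    for ch in chars:
        s = s.replace(ch, "\\" + ch)
    return s


def format_field(value):
    """Render a field value using line protocol type conventions."""
    if isinstance(value, bool):  # check bool before int (bool subclasses int)
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integer fields carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    # String fields are double-quoted; escape backslashes first, then quotes
    return '"' + str(value).replace("\\", "\\\\").replace('"', '\\"') + '"'


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Build one line: measurement,tags fields [timestamp]."""
    head = [_escape(measurement, ", ")]
    for key in sorted(tags):  # sorted tag keys, as InfluxDB recommends
        head.append(f"{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}")
    body = ",".join(f"{_escape(k, ',= ')}={format_field(v)}" for k, v in fields.items())
    line = f"{','.join(head)} {body}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


# Hypothetical measurement and field names, for illustration only
print(to_line_protocol("zpool", {"pool": "tank", "health": "ONLINE"},
                       {"size": 1992864825344, "dedupratio": 1.0}))
```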

That would be great for us; when you feel it is ready, we can link to it from the zfs README as well.

@richardelling I pretty much gave up on finishing the unit tests for my PR when you announced your work on this.

Hi @tomtastic, I have made some progress, but it is not clear that operating as a telegraf plugin is the best approach. Why? Because if there is a failure in the zfs.ko module or a pool becomes suspended, the data collection will hang indefinitely. This is also true for

It's been just over one year since I had code to support getting better zpool properties on Linux, and (now that Ubuntu and others are distributing zfsonlinux 0.7.0+) that code has zpool-property feature parity with the FreeBSD code.

@tomtastic I've put up an initial commit of the zpool_influxdb collector, to be used with telegraf's inputs.exec plugin. More testing is required, of course. I'm still concerned about how it will hang during some pool suspension events and how that could affect execs over a prolonged time. This should be similar to hangs when you exec the https://github.com/richardelling/zfs-linux-tools/tree/influxdb-wip/telegraf

@tomtastic: Somewhat unrelated to this PR, but... what plugin did you use for making that great "zpool status" table in Grafana? I'm trying to replicate it with no luck yet. I can't get rid of the Time column with the Table plugin, nor can I get the rows right.

|

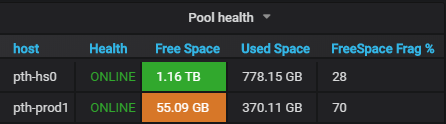

@tomtastic Never mind... I finally figured it out as a combo of many things (using I also mapped my zpool health to integers in rough order of severity so I could use thresholding and get proper alerting. This conversion of health to an integer is really useful should probably be done in this plugin as well! And here's how I did the health state integer mapping in order to enable alerts and colorizing (green for ONLINE, orange for DEGRADED, red for worse): The generated |

@rwarren the "health" you see in zpool command output is actually derived from the internal

An additional severity level would work, but I decided to merge severity with the code (by sorting them) so I could easily see durations for a certain status on a chart.

After sleeping on it, I now think I should have gone with larger ints to allow clean, non-float room for future status enums, e.g. 0 for ONLINE, 11 for DEGRADED, then 21, 22, 23, etc. for the error conditions. I think this is better and I'm going to change to this.

BTW, I have this hacked together in a Python cronjob since I unfortunately don't know Go and can't currently take the time to learn it.
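The severity scheme described above can be sketched as follows, using the values from the script posted later in this thread (0 for ONLINE, 11 for DEGRADED, 21 and up for error states). The catch-all code 99 and the color thresholds at 10 and 20 are assumptions for illustration, chosen to match the green/orange/red coloring described earlier rather than any actual panel configuration.

```python
# Severity codes from the thread: 0 = healthy, 1x = degraded, 2x = error states.
# Gaps between codes leave integer room for future states.
HEALTH_SEVERITY = {
    "ONLINE": 0,
    "DEGRADED": 11,
    "OFFLINE": 21,
    "UNAVAIL": 22,
    "FAULTED": 23,
    "REMOVED": 24,
}
UNKNOWN_SEVERITY = 99  # hypothetical catch-all for states not listed above


def health_to_severity(health):
    """Map a zpool HEALTH string to its integer severity code."""
    return HEALTH_SEVERITY.get(health, UNKNOWN_SEVERITY)


def severity_color(severity):
    """Hypothetical thresholds: <10 green, <20 orange, anything worse red."""
    if severity < 10:
        return "green"
    if severity < 20:
        return "orange"
    return "red"


for state in ["ONLINE", "DEGRADED", "FAULTED"]:
    print(state, health_to_severity(state), severity_color(health_to_severity(state)))
```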

Thanks rwarren, cool use of Grafana. Could you pop your panel JSON for that into a gist for me?

@nyanloutre Thank you, exactly what I was looking for.

Add freeing and leaked options collection

@danielnelson, can you please merge #6724, which will close this feature request?

Thank you for this, @yvasiyarov; I'm very happy you've closed this issue :) I might add that it's a shame that, rather than receiving any help or collaboration 2.5 years ago with the unit tests on my (otherwise working) code to close this issue, we ended up waiting for someone to rewrite it in a way they preferred, which was seemingly never released.

After such a long time, it's probably easier to rewrite than to update. But now that's done, can we close this and merge the other pull request? I've been waiting for this for almost 3 years now 😄.

Happily closing this!

@tomtastic please do not close the feature request until the PR gets merged.

Looking forward to this.

I see that this is closed, but this still doesn't seem to have been merged, has it?!

FYI, a

In case it benefits anyone else while waiting for this PR to be sorted out, I put together a Go utility to be invoked by a telegraf exec plugin that parses both

Are there any updates on this?

Richard's

Yes, and I am really looking forward to this, but since this is not implemented in telegraf, we have to upgrade our whole system to accomplish that.

You simply use it with telegraf like this:

You will still need to muck about with value mappings for the

Unfortunately, this will never happen. The interfaces used to get pool information are not stable across releases. So you'd have to update telegraf every time ZFS changed... ugly. It is more stable to have changes in ZFS reflected in the

With regards to a capacity %, that is easy to do in a query. If you'd like to see it from

Capacity would be great and would align with the standard

The whole reason I'm here is that it's literally not easy to do in a query ;) Flux is not a simple query language, and while I managed it in a query after a bit of hacking, translating that into a query suitable for an influxdb alert was not possible. As @rwarren mentioned 5 years ago, while we all appreciate that every little esoteric arc value is shipped by telegraf for monitoring, the most objectively useful one is not available. Edit: I guess this is the best solution right now:

Capacity for mounted datasets is available in the inputs.disk data for Linux. Are you looking for capacity of zvols? Unfortunately, the interface for the

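This worked for me:

```python
#!/usr/bin/python3
import json
from subprocess import check_output

# Column order of `zpool list -Hp` (ZFSonLinux 0.8-era; varies by version)
columns = ["NAME", "SIZE", "ALLOC", "FREE", "CKPOINT", "EXPANDSZ", "FRAG", "CAP", "DEDUP", "HEALTH", "ALTROOT"]
# Health states mapped to severity integers (0 = OK, 1x = degraded, 2x = errors)
health = {'ONLINE': 0, 'DEGRADED': 11, 'OFFLINE': 21, 'UNAVAIL': 22, 'FAULTED': 23, 'REMOVED': 24}

# -H: no header, tab-separated fields; -p: exact (parsable) numbers
stdout = check_output(["zpool", "list", "-Hp"], encoding='UTF-8')

# One dict per pool; splitlines() drops the trailing empty line
parsed_stdout = [dict(zip(columns, line.split('\t'))) for line in stdout.splitlines()]

for pool in parsed_stdout:
    for item in pool:
        if pool[item] == "-":
            continue  # "-" means "not applicable"; leave it as-is
        if item in ["SIZE", "ALLOC", "FREE", "FRAG", "CAP"]:
            pool[item] = int(pool[item])
        elif item == "DEDUP":
            pool[item] = float(pool[item])
        elif item == "HEALTH":
            pool[item] = health[pool[item]]

print(json.dumps(parsed_stdout))
```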
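If you want capacity without writing it in Flux, one option is to derive it from the SIZE and ALLOC values the script above already parses. A minimal sketch, assuming pools are dicts shaped like that script's output (`capacity_percent` and `pools_over` are hypothetical helpers); the result should roughly match the CAP column, which zpool reports as a whole-number percentage.

```python
def capacity_percent(pool):
    """Percentage of the pool's SIZE that is allocated (ALLOC)."""
    size = pool["SIZE"]
    if not size:
        return 0.0  # avoid dividing by zero for an empty/unknown size
    return 100.0 * pool["ALLOC"] / size


def pools_over(pools, threshold_pct):
    """Names of pools whose capacity is at or above threshold_pct."""
    return [p["NAME"] for p in pools if capacity_percent(p) >= threshold_pct]


# Made-up example pools; the 80% threshold is arbitrary
example = [
    {"NAME": "tank", "SIZE": 1000, "ALLOC": 850},
    {"NAME": "scratch", "SIZE": 1000, "ALLOC": 100},
]
print(pools_over(example, 80))
```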

Feature Request

Currently, only FreeBSD has a zfs input plugin that reports zpool list properties. Ideally, Linux should have this too.

Proposal:

Use the existing inputs.zfs 'poolMetrics = true||false' option to decide whether to execute '/bin/zpool list -Hp' and parse its output, as the FreeBSD code does.

Current behavior:

Only kstats are queried on Linux platforms, whereas FreeBSD queries both kstats and the userspace 'zpool list -Hp' command.
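For contrast, a minimal sketch of what the Linux side reads today: ZFSonLinux exposes kstats as text files under `/proc/spl/kstat/zfs/` (for example `arcstats`). The layout assumed here is a kstat header line, a `name type data` column line, then one row per statistic; `parse_kstat` is a hypothetical helper and the sample values are made up. None of these counters carries pool health or fragmentation, which is why this request asks for `zpool list` as well.

```python
def parse_kstat(text):
    """Parse a named kstat file such as /proc/spl/kstat/zfs/arcstats.

    Assumed layout: line 1 is the kstat header, line 2 is the
    'name type data' column header, then one 'name type value' row each.
    """
    stats = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        parts = line.split()
        if len(parts) != 3:
            continue  # ignore anything that isn't a 3-column row
        name, _kstat_type, value = parts
        try:
            stats[name] = int(value)
        except ValueError:
            stats[name] = value  # keep non-integer values as strings
    return stats


# Made-up sample in the assumed arcstats layout
sample_kstat = (
    "13 1 0x01 2 544 8138041580 1176539491716\n"
    "name                            type data\n"
    "hits                            4    16738591\n"
    "misses                          4    1163047\n"
)
print(parse_kstat(sample_kstat))
```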

Desired behavior:

Telegraf is allowed to run 'zpool list', and collection of these stats is enabled:

Use case:

Monitoring zpool health and fragmentation is really useful for ZFS users, as it can alert administrators to problems requiring urgent attention.
