-

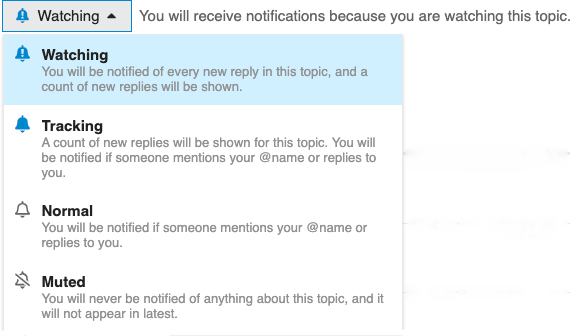

Notifications

You must be signed in to change notification settings - Fork 1.2k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Provide simple option for limiting total memory usage #1268

Comments

|

Agree. We use it for a simple key-value lookup with a couple of billion records (the database directory is 700 GB). It uses about 200 GB of RAM, which is unacceptable. According to Process Explorer, the culprits are memory-mapped files. |

|

@gonzojive I will have a look at the high memory usage. What is the size of your data directory and the size of the backup file? |

|

The backup file is 100 GB (99,969,891,936 bytes). In this case, backup.go's Load function seems to be a major offender. It does not account for the size of the values at all. Added logging shows huge key/value accumulation and no flushing: I'm guessing there are many places where value size is not accounted for when making memory management decisions. |

|

I modified backup.go to flush when accumulated key + value size exceeds 100 MB. I can send a pull request for this at some point. Before backup.go modifications the process consumes memory until the OS kills it: After: When I set the threshold to 500MB instead of 100 MB, memory usage still causes a crash for some reason. |

The previous behavior only accounted for key size. For databases where keys are small (e.g., URLs) and values are much larger (megabytes), OOM errors were easy to trigger during restore operations. This change does not set the threshold used for flushing elegantly - a const is used instead of a configurable option. Related to dgraph-io#1268, but not a full fix.
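The flushing rule described above (flush once accumulated key plus value bytes cross a threshold) can be sketched roughly as follows. This is not the actual backup.go change: `kv`, `sizeBatcher`, and the `flush` callback are hypothetical names used only for illustration, and the 100 MB figure is the threshold mentioned in the comment.

```go
package main

import "fmt"

// kv is a hypothetical stand-in for an entry being restored from a backup.
type kv struct {
	key, value []byte
}

// sizeBatcher buffers entries and flushes once the buffered key and value
// bytes reach maxBytes. The names are illustrative, not Badger's API.
type sizeBatcher struct {
	maxBytes int
	curBytes int
	pending  []kv
	flush    func([]kv) error
}

// add buffers one entry, then flushes if the byte budget has been reached.
func (b *sizeBatcher) add(e kv) error {
	b.pending = append(b.pending, e)
	b.curBytes += len(e.key) + len(e.value) // count values, not only keys
	if b.curBytes >= b.maxBytes {
		return b.flushNow()
	}
	return nil
}

// flushNow hands the buffered entries to the callback and resets the buffer.
func (b *sizeBatcher) flushNow() error {
	if len(b.pending) == 0 {
		return nil
	}
	err := b.flush(b.pending)
	b.pending = nil
	b.curBytes = 0
	return err
}

func main() {
	flushes := 0
	b := &sizeBatcher{
		maxBytes: 100 << 20, // 100 MB threshold, as in the comment above
		flush: func(batch []kv) error {
			flushes++
			fmt.Printf("flushing %d entries\n", len(batch))
			return nil
		},
	}
	value := make([]byte, 30<<20) // one 30 MB value, reused for every entry
	for i := 0; i < 10; i++ {
		key := []byte(fmt.Sprintf("key-%d", i))
		if err := b.add(kv{key: key, value: value}); err != nil {
			panic(err)
		}
	}
	if err := b.flushNow(); err != nil { // flush whatever is left over
		panic(err)
	}
	fmt.Println("total flushes:", flushes)
}
```

Counting only `len(e.key)` in `add` would reproduce the original problem: with small keys and multi-megabyte values the threshold is effectively never reached.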

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

Although I have a fix for the backup restoration issue, this issue as a whole has not been addressed. I don't know what causes badger to take up the amount of memory that it does. Understanding that seems like the first step towards introducing a flag for setting a fixed memory limit. Could someone from the badger team weigh in? |

The amount of memory being used depends on your DB options. For instance, each table has a bloom filter, and these bloom filters are kept in memory. Each bloom filter takes up 5 MB of memory. So if you have 100 GB of data, at 64 MB per table you have 100 × 1000 / 64 ≈ 1562 tables, and 1562 × 5 MB is about 7.8 GB. So your bloom filters alone would take up 7.8 GB of memory. We have a separate cache in badger v2 to reduce the memory used by bloom filters. Another thing that might affect memory usage is the table loading mode. If you set the table loading mode to FileIO, memory usage should go down, but your reads would be very slow. |
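The bloom filter estimate above is simple arithmetic and can be checked with a short sketch. The function names are made up for illustration; the inputs (100 GB of data, 64 MB tables, 5 MB per filter, 1 GB taken as 1000 MB) are the figures from the comment.

```go
package main

import "fmt"

// tableCount estimates how many tables hold dataGB of data when each table
// is tableMB in size, using 1 GB = 1000 MB as in the comment above.
func tableCount(dataGB, tableMB int) int {
	return dataGB * 1000 / tableMB
}

// bloomMemoryMB estimates total bloom filter memory when every table keeps
// one filter of filterMB in memory.
func bloomMemoryMB(tables, filterMB int) int {
	return tables * filterMB
}

func main() {
	tables := tableCount(100, 64)
	mem := bloomMemoryMB(tables, 5)
	fmt.Printf("%d tables, about %.1f GB of bloom filters\n", tables, float64(mem)/1000)
	// prints "1562 tables, about 7.8 GB of bloom filters"
}
```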

|

Here are the runtime.MemStats for a similar process to the screenshot above. Edit: It could be that the OS is not reclaiming memory freed by Go, as discussed in this bug: golang/go#14521. However, I'm not sure how to confirm this. Badger also makes low-level system calls (mmap) that might not be tracked by the above memory profiles. |

|

On the other hand, sometimes memory usage is quite high and there is a lot of allocation. I can't find a way to force the OS to reclaim the memory freed by Go, which seems to use MADV_FREE on recent Linux versions (https://golang.org/src/runtime/mem_linux.go). It would be helpful to force the OS to reclaim such memory to get a more accurate picture of what's going on. |
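One thing that may help here is the standard library's `debug.FreeOSMemory`, which forces a garbage collection and then tries to return as much memory to the OS as possible. The sketch below only shows how to call it and compare `runtime.MemStats` before and after; whether the resident set size reported by the OS actually drops depends on the Go version and kernel, and `releaseToOS` is a hypothetical helper name. Setting `GODEBUG=madvdontneed=1` (Go 1.12 and later) is another option for making freed memory show up in RSS sooner, and neither approach affects memory that Badger maps with mmap.

```go
package main

import (
	"fmt"
	"runtime"
	"runtime/debug"
)

// releaseToOS forces a GC, asks the runtime to hand free memory back to the
// OS, and returns runtime.MemStats snapshots taken before and after.
func releaseToOS() (before, after runtime.MemStats) {
	runtime.ReadMemStats(&before)
	debug.FreeOSMemory()
	runtime.ReadMemStats(&after)
	return before, after
}

func main() {
	// Allocate and drop a large buffer so there is something to give back.
	buf := make([]byte, 256<<20)
	for i := 0; i < len(buf); i += 4096 {
		buf[i] = 1 // touch each page so it is really backed by memory
	}
	buf = nil

	before, after := releaseToOS()
	fmt.Printf("HeapReleased before: %d MB, after: %d MB\n",
		before.HeapReleased>>20, after.HeapReleased>>20)
	fmt.Printf("GC cycles before: %d, after: %d\n", before.NumGC, after.NumGC)
}
```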

|

In my case, it would help if prefetchValues had an option to restrict prefetches based on value byte size, not number of values. Perhaps the IteratorOptions could become

    // IteratorOptions is used to set options when iterating over Badger key-value
    // stores.
    //
    // This package provides DefaultIteratorOptions which contains options that
    // should work for most applications. Consider using that as a starting point
    // before customizing it for your own needs.
    type IteratorOptions struct {
        // Indicates whether we should prefetch values during iteration and store them.
        PrefetchValues bool
        // How many KV pairs to prefetch while iterating. Valid only if PrefetchValues is true.
        PrefetchSize int
        // If non-zero, specifies the maximum number of bytes to prefetch while
        // prefetching iterator values. This will overrule the PrefetchSize option
        // if the values fetched exceed the configured value.
        PrefetchBytesSize int
        Reverse     bool // Direction of iteration. False is forward, true is backward.
        AllVersions bool // Fetch all valid versions of the same key.

        // The following option is used to narrow down the SSTables that iterator picks up. If
        // Prefix is specified, only tables which could have this prefix are picked based on their range
        // of keys.
        Prefix         []byte // Only iterate over this given prefix.
        prefixIsKey    bool   // If set, use the prefix for bloom filter lookup.
        InternalAccess bool   // Used to allow internal access to badger keys.
    }

Even better would be a database-wide object for restricting memory use to a strict cap. |
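To make the proposed PrefetchBytesSize semantics concrete, here is a rough sketch of how a count limit and a byte budget could be combined. `prefetchLimit` is a hypothetical helper, not part of Badger, and admitting the first value even when it exceeds the budget is an assumption made so that iteration always makes progress.

```go
package main

import "fmt"

// prefetchLimit returns how many of the upcoming values to prefetch.
// maxItems plays the role of PrefetchSize; maxBytes plays the role of the
// proposed PrefetchBytesSize, where zero means "no byte limit".
// Hypothetical helper for illustration only.
func prefetchLimit(valueSizes []int, maxItems, maxBytes int) int {
	n, total := 0, 0
	for _, size := range valueSizes {
		if n >= maxItems {
			break
		}
		// Always admit the first value, even if it alone exceeds the budget.
		if maxBytes > 0 && n > 0 && total+size > maxBytes {
			break
		}
		total += size
		n++
	}
	return n
}

func main() {
	const mb = 1 << 20
	sizes := make([]int, 100)
	for i := range sizes {
		sizes[i] = 25 * mb // 25 MB values
	}
	fmt.Println(prefetchLimit(sizes, 100, 0))     // count limit only: prints 100
	fmt.Println(prefetchLimit(sizes, 100, 64*mb)) // 64 MB byte budget: prints 2
}
```

With large values the byte budget dominates, while for small values the existing count limit still applies.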

|

@gonzojive How big are your values? The memory profile you shared shows that

From https://golang.org/pkg/runtime/, So HeapIdle - HeapReleased in your case comes to 5.6 GB. That's the amount of memory the Go runtime is holding. |

|

In this case, the values are 25 MB or more. The memory usage was from prefetching 100 values for each request, and many requests are run in parallel. Limiting prefetching fixed the specific issue I was having, but the general feature request remains open. |
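A back-of-the-envelope sketch shows why this adds up so quickly. The 25 MB value size and the prefetch count of 100 come from the comment above; the number of parallel requests is an assumption chosen for illustration, and `prefetchFootprintMB` is a hypothetical helper.

```go
package main

import "fmt"

// prefetchFootprintMB estimates the peak memory held by prefetched values:
// value size times values prefetched per iterator times concurrent iterators.
func prefetchFootprintMB(valueMB, prefetchSize, parallelRequests int) int {
	return valueMB * prefetchSize * parallelRequests
}

func main() {
	// One request prefetching 100 values of 25 MB each.
	fmt.Println(prefetchFootprintMB(25, 100, 1), "MB") // prints "2500 MB"
	// Eight parallel requests (an assumed figure, not from the thread).
	fmt.Println(prefetchFootprintMB(25, 100, 8), "MB") // prints "20000 MB"
}
```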

|

Ah, that makes sense. Thanks for debugging it @gonzojive . The feature request still remains open. |

|

GitHub issues have been deprecated. |

What version of Go are you using (go version)?

What version of Badger are you using?

v1.6.0

Does this issue reproduce with the latest master?

As far as I know, yes.

What are the hardware specifications of the machine (RAM, OS, Disk)?

32 GB RAM

AMD Ryzen 9 3900X 12-core, 24-Thread

1 TB Samsung SSD

What did you do?

Used the default options to populate a table with about 1000 key/val pairs where each value is roughly 30MB.

The badger database directory is 101GB according to du. There are 84 .vlog files.

When I start my server up, it quickly consumes 10 GB of RAM and dies due to OOM. dmesg output:

What did you expect to see?

I would expect the database to provide a simple option to limit memory usage to an approximate cap.

What did you see instead?

The recommended mechanism of tweaking a many-dimensional parameter space is confusing and hasn't worked for me.

The memory-related parameters are not explained in much detail. For example, the docstring for options.MemoryMap doesn't indicate roughly how expensive MemoryMap is vs FileIO.

I haven't managed to successfully reduce memory usage using the following parameters:

I can create an example program if the issue is of interest.