Alterations to microvascular flow are responsible for a number of ocular and systemic conditions, including diabetes, dementia and multiple sclerosis. The challenge is developing methods to capture and quantify retinal capillary flow in the human eye. We built a bespoke adaptive optics scanning laser ophthalmoscope with two spatially offset detection channels and configurable offset-aperture detection schemes to image microvascular flow. In this research, we sought to develop an automatic tool that detects and tracks erythrocytes. We propose a deep convolutional neural network that classifies the patches in each frame as blood-cell or non-blood-cell patches. The patch classification is coupled with a localisation process to detect the positions of the red blood cells. We also present a capillary segmentation method that improves the efficiency and performance of the localisation process. Finally, we present a technique that matches corresponding cells between the two channels in the raster, enabling fully automatic blood flow velocity measurement. Results from various experiments are reported and compared to identify the most accurate configuration. Because the tool is based on deep learning, it can learn continually and adapt, improving its performance as more samples are collected from subjects. In addition, its modular design allows components such as the capillary segmentation to be replaced with state-of-the-art techniques, extending the software's longevity.

Towards a Deep Learning Pipeline for Measuring Retinal Bloodflow (PDF)

Click the following picture to watch the YouTube video of the thesis presentation.

For blood-cell classification, we train a CNN on positive and negative patches extracted from the training videos.

Positive patches are centered on erythrocytes, while negative patches are extracted from around erythrocytes but are not centered on them.

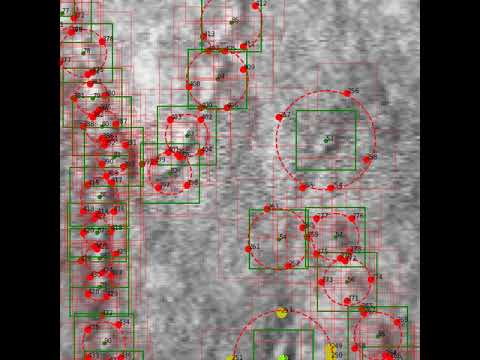
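A minimal sketch of how such training patches could be cut from a frame, assuming annotated erythrocyte centers are available as (row, column) pixel coordinates. The function name `extract_patches`, the 21-pixel patch size and the 4 to 8 pixel offset range for negatives are hypothetical choices, not values taken from the thesis.

```python
import numpy as np


def extract_patches(frame, cell_centers, patch_size=21, min_offset=4,
                    max_offset=8, rng=None):
    """Cut one positive and one negative training patch per annotated cell.

    Positive patches are centered on each cell; negative patches are centered
    at a random point min_offset..max_offset pixels away from it
    (hypothetical defaults, not taken from the thesis).
    """
    rng = np.random.default_rng(rng)
    half = patch_size // 2
    height, width = frame.shape

    def crop(r, c):
        # Skip patches that would extend past the frame border.
        if r - half < 0 or c - half < 0 or r + half >= height or c + half >= width:
            return None
        return frame[r - half:r + half + 1, c - half:c + half + 1]

    positives, negatives = [], []
    for r, c in cell_centers:
        patch = crop(r, c)
        if patch is not None:
            positives.append(patch)
        # Random direction and distance: near the cell but, for
        # min_offset >= 1, never on its center pixel.
        angle = rng.uniform(0.0, 2.0 * np.pi)
        dist = rng.uniform(min_offset, max_offset)
        patch = crop(int(round(r + dist * np.sin(angle))),
                     int(round(c + dist * np.cos(angle))))
        if patch is not None:
            negatives.append(patch)
    return np.array(positives), np.array(negatives)
```

Because the negatives are drawn close to real cells, the classifier has to learn what a correctly centered cell looks like rather than merely separating bright regions from dark ones. In densely packed capillaries a negative patch could still land on a neighbouring cell, which a production version would need to check.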

At inference time, we extract a patch around each pixel of the image and assign that pixel the CNN's output probability that the patch is centered on an erythrocyte.

This process produces a probability map from which the locations of the cells are estimated.

For more information, refer to Section 3.2 of the thesis PDF.
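The per-pixel inference step can be sketched as a sliding window over the frame. In this sketch the trained CNN is replaced by a hypothetical `classify` callable that maps a batch of patches of shape (N, patch_size, patch_size) to N probabilities. The reflective border padding and the patch size are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def probability_map(frame, classify, patch_size=21):
    """Build a per-pixel probability map by classifying the patch around each pixel.

    `classify` is a hypothetical stand-in for the trained CNN: it takes an
    array of shape (N, patch_size, patch_size) and returns N probabilities.
    """
    if patch_size % 2 == 0:
        raise ValueError("patch_size must be odd so each patch has a center pixel")
    half = patch_size // 2
    frame = np.asarray(frame, dtype=float)
    # Reflect the borders so pixels near the edge still get a full patch.
    padded = np.pad(frame, half, mode="reflect")
    # windows[r, c] is the patch centered on frame[r, c]; this is a view, not a copy.
    windows = sliding_window_view(padded, (patch_size, patch_size))
    prob = np.empty(frame.shape, dtype=float)
    for row in range(frame.shape[0]):
        # Classify one image row of patches at a time to bound memory use.
        prob[row] = classify(windows[row])
    return prob
```

Processing one row at a time keeps memory bounded. A GPU-backed CNN would more likely receive larger batches, so the batch size is a speed versus memory trade-off.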

Here is an example showing a registered input video of the retina on the left and the output probability map on the right. In the probability map, the blue dots at the centers of the probability blobs mark the estimated erythrocyte locations.

| Input Registered Video | Probability Map with estimated cell locations as blue dots |
|---|---|
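One way to turn a probability map into discrete cell positions, consistent with placing a dot at the center of each probability blob, is to threshold the map, label the connected blobs and take the probability-weighted centroid of each blob. This is a sketch that assumes SciPy is available. The 0.5 threshold and the optional `vessel_mask` argument (which restricts detections to vessel pixels) are hypothetical, and the thesis localisation method may differ.

```python
import numpy as np
from scipy import ndimage


def estimate_cell_locations(prob_map, threshold=0.5, vessel_mask=None):
    """Return one (row, col) estimate per connected blob of high probability."""
    prob = np.asarray(prob_map, dtype=float)
    if vessel_mask is not None:
        # Erythrocytes cannot lie outside the vessels, so zero those pixels.
        prob = np.where(vessel_mask, prob, 0.0)
    # Connected components (4-connectivity by default) of above-threshold pixels.
    labels, n_blobs = ndimage.label(prob > threshold)
    if n_blobs == 0:
        return np.empty((0, 2))
    # Probability-weighted centroid of each blob.
    centers = ndimage.center_of_mass(prob, labels, np.arange(1, n_blobs + 1))
    return np.array(centers)
```

Cells whose blobs touch are merged into a single detection by this approach, so the threshold trades off separating adjacent cells against suppressing noise.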

To improve the accuracy of the estimation, and to make locating the erythrocytes in each frame more efficient, we restrict the search space to the retinal capillaries, since erythrocytes cannot lie outside them.

To do this, we extract a vessel mask for each video by applying an image-processing pipeline to the video's standard deviation image, that is, the per-pixel standard deviation across all frames.

For more information, refer to Section 3.4 of the thesis PDF.

| Standard Deviation Image | Output Vessel Mask |
|---|---|
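A plausible version of such a pipeline is sketched here, assuming the registered video is a NumPy array of shape (frames, height, width). Pixels inside perfused vessels fluctuate as cells pass through them, so they stand out in the standard deviation image. The specific steps (Gaussian smoothing, an Otsu threshold, morphological opening and removal of small components) and their parameters are illustrative assumptions rather than the thesis pipeline.

```python
import numpy as np
from scipy import ndimage


def otsu_threshold(values, nbins=256):
    """Otsu's method: the cut that maximizes between-class variance."""
    hist, edges = np.histogram(values, bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    weight_low = np.cumsum(hist)                 # pixels in bins 0..i
    weight_high = weight_low[-1] - weight_low    # pixels in bins i+1..end
    cum_sum = np.cumsum(hist * centers)
    mean_low = cum_sum / np.maximum(weight_low, 1)
    mean_high = (cum_sum[-1] - cum_sum) / np.maximum(weight_high, 1)
    between_var = weight_low * weight_high * (mean_low - mean_high) ** 2
    # Values at or above the upper edge of the best bin form the high class.
    return edges[np.argmax(between_var) + 1]


def vessel_mask(video, sigma=2.0, min_size=50):
    """Segment vessels from a registered video of shape (frames, H, W)."""
    # Per-pixel standard deviation over time: passing cells make vessel
    # pixels fluctuate more than the surrounding static tissue.
    std_img = np.asarray(video, dtype=float).std(axis=0)
    smooth = ndimage.gaussian_filter(std_img, sigma=sigma)
    mask = smooth >= otsu_threshold(smooth.ravel())
    # Opening removes thin spurs and isolated noisy pixels.
    mask = ndimage.binary_opening(mask)
    labels, n = ndimage.label(mask)
    if n == 0:
        return mask
    # Drop connected components smaller than min_size pixels.
    sizes = ndimage.sum(mask, labels, np.arange(1, n + 1))
    keep_ids = np.flatnonzero(sizes >= min_size) + 1
    return np.isin(labels, keep_ids)
```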

Videos from the two channels are vertically offset from each other. We calculate this offset by matching the vessel masks of the two channels' registered videos.

For more information, refer to Section 3.5 of the thesis PDF.
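A simple way to estimate the vertical offset from two vessel masks is a brute-force search over candidate row shifts, scoring each shift by how well the masks overlap. This sketch assumes both masks have the same shape and uses the Dice coefficient as the overlap score. The function name, the Dice metric and the 30-pixel search range in each direction are hypothetical choices.

```python
import numpy as np


def vertical_offset(mask_a, mask_b, max_shift=30):
    """Return (dy, score): row r of mask_b best matches row r + dy of mask_a."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    best_shift, best_score = 0, -1.0
    for dy in range(-max_shift, max_shift + 1):
        # Compare only the rows that overlap for this shift.
        if dy >= 0:
            a_part, b_part = a[dy:], b[:b.shape[0] - dy]
        else:
            a_part, b_part = a[:dy], b[-dy:]
        inter = np.logical_and(a_part, b_part).sum()
        total = a_part.sum() + b_part.sum()
        # Dice coefficient: 1.0 for identical masks, 0.0 for no overlap.
        score = 2.0 * inter / total if total else 0.0
        if score > best_score:
            best_shift, best_score = dy, score
    return best_shift, best_score
```

Matching binary vessel masks rather than raw intensities makes the search insensitive to brightness differences between the two detection channels.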

As a final step, the estimated cell locations must be matched between the two channels. To do this, we select a straight capillary segment in which to estimate the cell velocity. An average cell is computed for each frame of the two channels, and the average cells are then matched to calculate the displacement.

For more information, refer to Section 3.6 of the thesis PDF.
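A hedged sketch of the average-cell matching idea, under several assumptions: detected cells are (row, column) coordinates per frame; the straight segment is given by two hypothetical endpoints `p0` and `p1` plus a half-width in pixels; the "average cell" of a frame is taken to be the mean position of the cells inside the segment; and `channel_offset_px` (rows to add to channel-B coordinates), `um_per_px` and `channel_dt_s` (the time delay between the two channels) are hypothetical calibration inputs. The displacement is projected onto the segment axis and converted to a speed.

```python
import numpy as np


def points_in_segment(points, p0, p1, half_width):
    """Keep (row, col) points within half_width pixels of the segment p0-p1."""
    points = np.asarray(points, dtype=float).reshape(-1, 2)
    p0, p1 = np.asarray(p0, dtype=float), np.asarray(p1, dtype=float)
    length = np.linalg.norm(p1 - p0)
    unit = (p1 - p0) / length
    rel = points - p0
    along = rel @ unit                                     # position along the segment
    across = np.abs(rel @ np.array([-unit[1], unit[0]]))   # distance from its axis
    return points[(along >= 0) & (along <= length) & (across <= half_width)]


def segment_speeds(cells_a, cells_b, p0, p1, channel_offset_px,
                   um_per_px, channel_dt_s, half_width=3.0):
    """Per-frame speed (um/s) along a straight segment from two channels.

    cells_a / cells_b: per-frame lists of detected (row, col) cell positions.
    All calibration arguments are hypothetical inputs.
    """
    p0, p1 = np.asarray(p0, dtype=float), np.asarray(p1, dtype=float)
    unit = (p1 - p0) / np.linalg.norm(p1 - p0)
    speeds = []
    for pts_a, pts_b in zip(cells_a, cells_b):
        # Shift channel-B rows into channel A's coordinate frame.
        pts_b = np.asarray(pts_b, dtype=float).reshape(-1, 2) + [channel_offset_px, 0.0]
        in_a = points_in_segment(pts_a, p0, p1, half_width)
        in_b = points_in_segment(pts_b, p0, p1, half_width)
        if len(in_a) == 0 or len(in_b) == 0:
            continue  # no cell in this segment in one of the channels
        # "Average cell" here = mean position of the cells in the segment.
        displacement_px = (in_b.mean(axis=0) - in_a.mean(axis=0)) @ unit
        speeds.append(displacement_px * um_per_px / channel_dt_s)
    return np.array(speeds)
```

With hypothetical values of a 3-pixel displacement along the segment, 0.5 µm per pixel and a 1 ms channel delay, the estimated speed is 3 × 0.5 / 0.001 = 1500 µm/s. The sign of the result depends on the flow direction relative to `p0` to `p1`.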

- Download the data from: https://liveuclac-my.sharepoint.com/personal/smgxadu_ucl_ac_uk/_layouts/15/onedrive.aspx?id=%2Fpersonal%2Fsmgxadu%5Fucl%5Fac%5Fuk%2FDocuments%2FShared%5FVideos&ct=1583323140391&or=OWA-NT&cid=9c7726fb-db68-e102-a4a7-f93127374108&originalPath=aHR0cHM6Ly9saXZldWNsYWMtbXkuc2hhcmVwb2ludC5jb20vOmY6L2cvcGVyc29uYWwvc21neGFkdV91Y2xfYWNfdWsvRWx0WXpFMFBVWHREc1RBY0NoQk5TY1lCSllSV2dkQmVOMmZuWHNZSmhCZ1BDQT9ydGltZT0wdG1lYXpQQTEwZw

- Extract the data to the Shared_Videos folder.


- PATCH EXTRACTION (shows patch extraction methods)


- VESSEL MASK CREATION (demonstrates vessel mask creation for the validation videos)


- AVERAGE CELL MATCHING


- UID6-validation (shows the results of running the model with unique ID 6 on the validation data, including the worst and best probability maps for each validation video)


- CHANNEL REGISTRATION (shows the channel registration results)