
[parquet-sdk]migrate objects [pt2] (#641)

## Description

- Migrate objects to the Parquet SDK processor.

- For Parquet, we want to avoid lookups for deleted resources, so the fields that would require a lookup are replaced with default values. These defaults will be handled at load time as part of the QC pipeline.

```
Self {
    transaction_version: txn_version,
    write_set_change_index,
    object_address: resource.address.clone(),
    owner_address: DELETED_RESOURCE_OWNER_ADDRESS.to_string(), // <-- default value
    state_key_hash: resource.state_key_hash.clone(),
    guid_creation_num: BigDecimal::default(), // <-- default value
    allow_ungated_transfer: false, // <-- default value
    is_deleted: true,
    untransferrable: false, // <-- default value
    block_timestamp: chrono::NaiveDateTime::default(), // <-- default value
}
```

The marked fields are the ones that would otherwise be taken from the previous owner record.
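As a rough illustration of the approach described above, the sketch below builds a row for a deleted object without any lookup and flags it for later QC handling. It is not the code from this commit: the struct name `ParquetObjectRow`, the helpers `from_deleted` and `needs_backfill`, and the sentinel value `"0x0"` are all hypothetical, and plain `u64`/`i64` fields stand in for `BigDecimal` and `chrono::NaiveDateTime` so the example needs only the standard library.

```rust
// Hypothetical sentinel value; the real DELETED_RESOURCE_OWNER_ADDRESS
// is not shown in this commit message.
const DELETED_RESOURCE_OWNER_ADDRESS: &str = "0x0";

#[derive(Debug, Clone, PartialEq)]
struct ParquetObjectRow {
    transaction_version: i64,
    write_set_change_index: i64,
    object_address: String,
    owner_address: String,
    state_key_hash: String,
    guid_creation_num: u64, // stand-in for BigDecimal
    allow_ungated_transfer: bool,
    is_deleted: bool,
    untransferrable: bool,
    block_timestamp_secs: i64, // stand-in for chrono::NaiveDateTime
}

impl ParquetObjectRow {
    /// Builds the row for a deleted object using only data available in the
    /// write set change itself; owner-derived fields get placeholder defaults.
    fn from_deleted(
        txn_version: i64,
        write_set_change_index: i64,
        object_address: &str,
        state_key_hash: &str,
    ) -> Self {
        Self {
            transaction_version: txn_version,
            write_set_change_index,
            object_address: object_address.to_string(),
            owner_address: DELETED_RESOURCE_OWNER_ADDRESS.to_string(),
            state_key_hash: state_key_hash.to_string(),
            guid_creation_num: 0,
            allow_ungated_transfer: false,
            is_deleted: true,
            untransferrable: false,
            block_timestamp_secs: 0,
        }
    }

    /// Returns true when the row still carries the placeholder owner, i.e. a
    /// downstream QC step would need to resolve the real values.
    fn needs_backfill(&self) -> bool {
        self.is_deleted && self.owner_address == DELETED_RESOURCE_OWNER_ADDRESS
    }
}
```

Under these assumptions, a load-time QC step could filter on `needs_backfill()` to find the rows whose placeholder fields still need to be resolved.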

## Test Plan

- Compared row counts across the DE team's BigQuery table, the testing BigQuery table, the legacy processor table, and the SDK processor table.

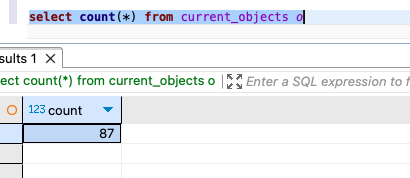

### objects

### current objects


Showing 19 changed files with 669 additions and 360 deletions.
