
Finalize assignments: Chapter 7. Performance #9

Comments

@igrigorik any interest in peer reviewing this chapter? 😀

Thanks for the heads-up. I'll take a look tonight.

…On Fri, May 24, 2019, 09:45 Rick Viscomi ***@***.***> wrote:

@JMPerez <https://github.com/JMPerez> @OBTo <https://github.com/obto>

I've updated the list of current metrics above. Let me know if there's anything you'd change.


I'd like to see additional performance metrics that reflect the user experience included as well: metrics like start render, Speed Index, and hero times, which capture what a user actually sees on screen. Happy to be a reviewer if you're looking for more people.

I agree with adding a metric like Speed Index that gives some information about the overall loading experience. Combined with FID and FCP, it would give a better picture. Hero times might be difficult to get. Largest Contentful Paint (and Layout Stability) were announced at Google I/O; I assume both are still in beta. If they are being tracked in HTTP Archive, the Almanac could be a suitable place to share data about them.

Also, I think it'd be really valuable to have a quick Google Meet sometime in the next few weeks to bounce ideas off each other or just get on the same page. We'd accomplish a lot in just 20 minutes. Let me know.

@zeman yes, it'd be great to have you as a reviewer! Thanks!

I was planning to draw from the Chrome UX Report dataset, which includes real user data for FP, FCP, DCL, OL, and FID. Start render should be covered by FCP. SI and hero timing are good ideas. We'll have to be sure to clearly denote which metrics are measured in the field and which are from the lab.
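CrUX field data comes as histograms rather than single values: in the BigQuery dataset, each metric is a list of bins with a `start`, an `end`, and a `density` (the share of page loads in that bin). Below is a minimal sketch, assuming those bins have been loaded into Python, of summarising one origin's histogram as fast / average / slow shares. The 1,000 ms and 3,000 ms cut-offs are illustrative assumptions for FCP, not thresholds agreed in this thread.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Bin:
    start: int      # lower edge of the bin in milliseconds (inclusive)
    density: float  # share of page loads that fall in this bin


def fast_avg_slow(
    bins: Iterable[Bin], fast_ms: int = 1000, slow_ms: int = 3000
) -> Tuple[float, float, float]:
    """Split a CrUX-style histogram into fast / average / slow shares.

    Each bin is classified by its lower edge only, which assumes the bin
    edges line up with the (hypothetical) thresholds.
    """
    fast = avg = slow = 0.0
    for b in bins:
        if b.start < fast_ms:
            fast += b.density
        elif b.start >= slow_ms:
            slow += b.density
        else:
            avg += b.density
    total = fast + avg + slow
    if total == 0:
        raise ValueError("histogram has no density")
    # Normalise in case the densities do not sum to exactly 1.
    return fast / total, avg / total, slow / total
```

Classifying by `start` alone avoids depending on `end`, but it is only exact when no bin straddles a threshold.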

HTTP Archive uses 3G throttling for mobile and cable for desktop. Probably best not to make any changes to these configs until after the Almanac is out for consistency across tests.

Would love to have this and it'd be useful as a dimension for pretty much every other chapter as well. The thing is that I'm not aware of a public dataset that we could use for mapping websites to industry.

Yeah, I looked into this before (for example: https://discuss.httparchive.org/t/cms-performance/1468?u=rviscomi). Since it's CMS-specific, maybe it's best to include it in #16 and have something like "For CMS-specific performance, see Chapter 14"? cc @amedina FYI

Similarly, this might be best done in #19. cc @andydavies @colinbendell FYI

For these dimensions in particular, I'm not sure they're clear enough signals from which to draw meaningful conclusions. "Do web fonts or WebP affect performance?" seems like a question only an A/B test can reliably answer.

Great idea! I'm happy to meet if there's a time that works for everyone. Not sure how feasible that would be, but I'm open to trying it.

@pmeenan has enabled hero rendering times for the next HTTP Archive crawl, so we'll be able to use metrics like first/last painted hero or H1 rendering time to represent and compare when users actually see important content on the page. Hero rendering times do have some important caveats around animated and overlapping content, which I'm happy to help write up.

Nice! @zeman I'd be happy to add you as a coauthor and you can take the lab-based performance metrics. WDYT?

@rviscomi sure, happy to take a crack at it and do a first pass assuming there's help with querying the data.

Great! Yes, we're forming a team of data analysts to offload the query work from the authors. See #23

I'll be giving every chapter I'm part of a final look-through again tonight.

@rviscomi I was assuming that all the existing HTTP Archive metrics are available as well. But if we need to list them, here are the other important ones I'd want to look at. I wouldn't necessarily write about all of these, but I'd like to explore the data to see if there are interesting correlations.

Hero times: Largest Image

Does CrUX have long tasks? That would be good to look at for JS performance. We find it more meaningful than FID at the moment.

Thanks @zeman! Yes, all HTTP Archive metrics are fair game. Keep in mind that each metric we list here will need to be queried by the Data Analyst team, so it'd be good to narrow the list down as best we can. For lab metrics, I think we can drop timing metrics like FP, FCP, DCL, and OL, which are available in CrUX. Lab timing data also tends to be more reliable as an indicator of trends than as absolute numbers. CPU stats are interesting for seeing the shape of their distributions, but individual stats like median JS parse time may be less conclusive or applicable. CrUX doesn't currently have long tasks. I'd keep an eye on https://github.com/WICG/layout-instability/blob/master/README.md though. 🤐
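One way to look at the shape of a distribution rather than a single median is to report several percentiles. Below is a minimal sketch, assuming the per-page values (say, hypothetical JS parse times in ms) have already been queried out of HTTP Archive, using the standard library's `statistics.quantiles`. The choice of percentiles is illustrative.

```python
import statistics
from typing import Dict, Sequence

# Percentiles to report; an illustrative choice, not one agreed in this thread.
PERCENTILES = (10, 25, 50, 75, 90)


def percentile_summary(values: Sequence[float]) -> Dict[int, float]:
    """Summarise a metric's distribution at several percentiles.

    method="inclusive" interpolates linearly between the closest ranks.
    Needs at least two values.
    """
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    # cuts[k - 1] is the k-th percentile for k in 1..99.
    return {p: cuts[p - 1] for p in PERCENTILES}
```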

@zeman how do these lab metrics sound to you?

Let's lock them in and we can pass them off to the analysts.

Thanks @sergeychernyshev, updated to include the lab metrics from the top comment.

@sergeychernyshev @zeman @JMPerez @OBTo I've updated the list of metrics in #9 (comment) based on our discussions and I think we should be good to go now. I'll close this issue. Feel free to reopen if you have any concerns.

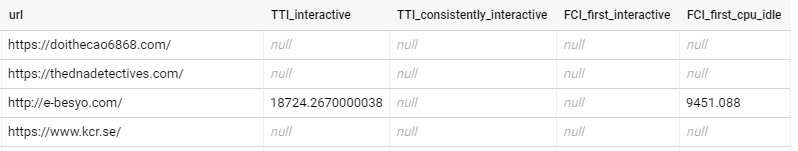

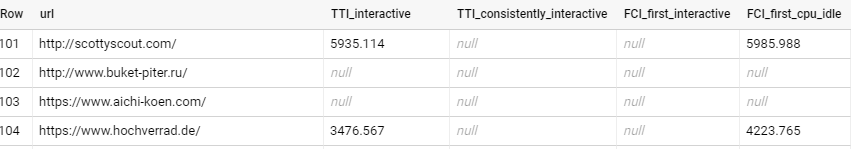

I have mapped the following three performance metrics as shown in the screenshots. Refer to rows 160-162 of the Metrics Triage sheet. I wanted to check whether my understanding is correct.

Thanks @raghuramakrishnan71. That mapping LGTM. cc @pmeenan @zeman @mathiasbynens in case they have any suggestions/corrections. |

For scripting CPU time, what really matters is the time spent on the main thread (because that’s what potentially delays TTI), not necessarily the total time. I also noticed that parsing is not included? In terms of RCS categories, it seems like you’d want "Parse" (but not "Parse-Background"), "Compile" (but not "Compile-Background"), "Optimize" (but not "Optimize-Background") and "JavaScript" (for execution). cc @verwaest
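A minimal sketch of that category selection, assuming per-category RCS totals in milliseconds are available as a plain Python dict (a hypothetical shape; how they are exported from a trace is not covered in this thread). Picking the exact names automatically leaves out the "-Background" variants, which run off the main thread.

```python
from typing import Mapping

# Main-thread RCS categories named in the comment above.
MAIN_THREAD_SCRIPTING = ("Parse", "Compile", "Optimize", "JavaScript")


def main_thread_scripting_ms(rcs: Mapping[str, float]) -> float:
    """Sum main-thread scripting time from a {category: ms} mapping.

    Categories absent from the mapping count as 0; any other category
    (including "Parse-Background" etc.) is ignored.
    """
    return float(sum(rcs.get(name, 0.0) for name in MAIN_THREAD_SCRIPTING))
```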

@zeman @rviscomi

Ran the following query on the sample set. Some of the values appear to be NULL, so maybe we need to take whichever value is not NULL. In that case, is there a preferred attribute?
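In BigQuery, `JSON_EXTRACT` returns NULL for a missing key, and adding NULL to anything yields NULL, so a single missing key nulls out the whole sum. Below is a minimal Python sketch of one possible policy, assuming the payload is a JSON object keyed by the `_cpu.*` names from the "Scripting CPU time" mapping in this thread: treat a missing key as 0, but drop pages where every key is missing. This is an illustrative choice, not the policy the analysts settled on.

```python
import json
from typing import Optional

# Keys from the "Scripting CPU time" mapping quoted in this thread.
SCRIPTING_KEYS = ("_cpu.v8.compile", "_cpu.FunctionCall", "_cpu.EvaluateScript")


def coalesce(*values):
    """Return the first argument that is not None, like SQL COALESCE."""
    for value in values:
        if value is not None:
            return value
    return None


def scripting_cpu_ms(payload_json: str) -> Optional[int]:
    """Sum the scripting CPU keys of one page payload.

    A key that is absent or null counts as 0; if every key is missing,
    None is returned so the page can be excluded rather than counted as 0.
    """
    payload = json.loads(payload_json)
    values = [payload.get(key) for key in SCRIPTING_KEYS]
    if all(value is None for value in values):
        return None
    return sum(int(coalesce(value, 0)) for value in values)
```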

The wpt-reported CPU times are all main-thread.

…On Wed, Jun 19, 2019 at 7:56 AM Mathias Bynens ***@***.***> wrote:

> Scripting CPU time = _cpu.v8.compile + _cpu.FunctionCall + _cpu.EvaluateScript (e.g. CAST(JSON_EXTRACT(payload, "$['_cpu.v8.compile']") as INT64) compile)



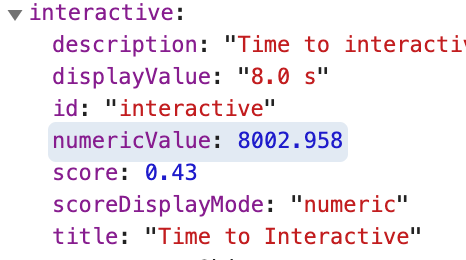

first-cpu-idle

interactive

Thanks for looking into these @raghuramakrishnan71! For the Lighthouse metrics, you may need to look at the numericValue field as opposed to rawValue.

Good chance it depends on which dataset you look at. I believe it changed from rawValue to numericValue in Lighthouse 5, so if you are looking at a comparison you may need to pull both.
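A minimal sketch of pulling both, assuming each Lighthouse report has already been loaded into a Python dict whose `audits` object is keyed by audit id (for example `interactive` or `first-cpu-idle`). The field names come from this thread; preferring `numericValue` and falling back to `rawValue` is an illustrative choice.

```python
from typing import Any, Mapping, Optional


def audit_value(lhr: Mapping[str, Any], audit_id: str) -> Optional[float]:
    """Read a metric value from a Lighthouse report's audits.

    Prefers numericValue (newer reports) and falls back to rawValue
    (older reports). Returns None if the audit or both fields are missing.
    """
    audit = lhr.get("audits", {}).get(audit_id)
    if audit is None:
        return None
    value = audit.get("numericValue")
    if value is None:
        value = audit.get("rawValue")
    return value
```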

…On Mon, Jun 24, 2019 at 8:53 AM Rick Viscomi ***@***.***> wrote:

For the Lighthouse metrics, you may need to look at the numericValue field as opposed to rawValue. Example for https://www.kcr.se/:

[image: image]

<https://user-images.githubusercontent.com/1120896/60020203-d0daca00-968f-11e9-9f84-57c718339927.png>


@rviscomi I am not very clear about the metric "header volume" (Content Distribution/CDN). Does it refer to the size of the HTTP headers?
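If "header volume" does mean the size of HTTP headers, one way to estimate it from HAR data is sketched below. This is a minimal sketch, assuming HAR 1.2-style entries (a `response` object with `headersSize` and a `headers` list of `name`/`value` pairs) loaded as Python dicts; it is not necessarily how the CDN chapter defined the metric.

```python
from typing import Any, Mapping


def response_header_bytes(entry: Mapping[str, Any]) -> int:
    """Estimate the response header size of one HAR entry, in bytes.

    Uses the HAR headersSize field when it is known (HAR uses -1 for
    "not available"); otherwise sums "name: value" plus CRLF over the
    header list. The fallback ignores the status line and any HTTP/2 or
    HTTP/3 header compression, so it is only a rough uncompressed estimate.
    """
    response = entry["response"]
    size = response.get("headersSize", -1)
    if size >= 0:
        return size
    return sum(
        len(f"{h['name']}: {h['value']}\r\n".encode("utf-8"))
        for h in response.get("headers", [])
    )
```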

@zcorpan Does the metric "Attribute usage (stretch goal)" (Page Content/Markup) refer to the usage of HTML attributes? If so, we may be able to find their distribution (https://discuss.httparchive.org/t/usage-of-aria-attributes/778).
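If the metric does mean HTML attribute usage, below is a minimal sketch of counting attribute names in one page's HTML with Python's standard-library `html.parser`. Aggregating counts across pages, as in the ARIA attributes analysis linked in the question, is left out, and whether the Markup chapter defined the metric this way is an assumption.

```python
from collections import Counter
from html.parser import HTMLParser


class AttributeCounter(HTMLParser):
    """Count how often each attribute name appears on start tags."""

    def __init__(self) -> None:
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; html.parser lowercases names.
        # Self-closing tags such as <br/> also reach this method through the
        # default handle_startendtag.
        for name, _value in attrs:
            self.counts[name] += 1


def count_attributes(html: str) -> Counter:
    """Return a Counter of attribute names used in one HTML document."""
    parser = AttributeCounter()
    parser.feed(html)
    parser.close()
    return parser.counts
```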

@raghuramakrishnan71 could you post these comments in their respective chapters? #19 for CDN and #5 for Markup |


Due date: To help us stay on schedule, please complete the action items in this issue by June 3.

To do:

Current list of metrics:

👉 AI (coauthors): Finalize which metrics you might like to include in an annual "state of web performance" report powered by HTTP Archive. Community contributors have initially sketched out a few ideas to get the ball rolling, but it's up to you, the subject matter experts, to know exactly which metrics we should be looking at. You can use the brainstorming doc to explore ideas.

The metrics should paint a holistic, data-driven picture of the web perf landscape. The HTTP Archive does have its limitations and blind spots, so if there are metrics out of scope it's still good to identify them now during the brainstorming phase. We can make a note of them in the final report so readers understand why they're not discussed and the HTTP Archive team can make an effort to improve our telemetry for next year's Almanac.

Next steps: Over the next couple of months analysts will write the queries and generate the results, then hand everything off to you to write up your interpretation of the data.

Additional resources:
