| Document Control | Srikant Jakilinki |

|---|---|

| Contact Points | [email protected] |

| Approved By | Sridhar Panuganti ([email protected]) |

| Co-Developed By | Q-Engineering ([email protected]) |

| Status | External Release |

| Distribution List | Management, QEngineering |

| DAJA | Definitions, Acronyms, Jargon and Abbreviations |

|---|---|

| ALPR | Automatic License Plate Recognition |

| SoA | State of Art |

| YOLO | You Only Look Once |

| OCR | Optical Character Recognition |

| BBOX | Bounding Box |

| GPU | Graphics Processing Unit |

| CUDA | Compute Unified Device Architecture |

This repository is our implementation of ALPR on nVIDIA-Jetson-Nano with SoA [1, 2] YOLO weights/networks/models using Darknet which has been claimed to achieve 96.9% accuracy.

In the original paper and work, researchers have created 3 models - for vehicle-detection (for cars and bikes), for license-plate-detection (for many geographies) and license-plate-recognition (not based on OCR). An image/frame is sent to 1st model to get vehicle-BBOX(s) each of which are manually cropped and sent to 2nd model to get plate-BBOX which is again manually cropped to get the alphanumeral-BBOX(s) in the plates by 3rd model which also outputs the recognized alphanumerals to console.

This work automates the manual cropping and cascades these models on GPU/CUDA-enabled Darknet on nVIDIA-Jetson-Nano taking one or more image/video/RTSP streams as input and gives the license-plate alphanumerals of all the vehicles detected in image/video/RTSP streams as output in near-real-time.

[1] R. Laroca, L. A. Zanlorensi, G. R. Gonçalves, E. Todt, W. R. Schwartz, D. Menotti, “An Efficient and Layout-Independent Automatic License Plate Recognition System Based on the YOLO Detector,” IET Intelligent Transport Systems, vol. 15, no. 4, pp. 483-503, 2021 https://web.inf.ufpr.br/vri/publications/layout-independent-alpr/

[2] R. Laroca, E. Severo, L. A. Zanlorensi, L. S. Oliveira, G. R. Gonçalves, W. R. Schwartz, D. Menotti, “A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector,” in International Joint Conference on Neural Networks (IJCNN), July 2018, pp. 1–10. https://web.inf.ufpr.br/vri/publications/laroca2018robust/

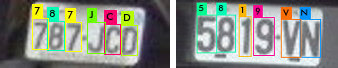

| IMAGE | DETECTIONS | RESULTS |

|---|---|---|

|

|

|

To run the application, you need to have:

- A member of the Jetson family, like a Jetson Nano or Xavier.

- OpenCV 64-bit installed.

- Darknet (the Alexey version) installed.

- MongoDB, Node.js and JSON for C++ installed.

- The darknet models downloaded from Gdrive.

- Code::Blocks installed, if you like to work with the C++ source.

Start with some evergreens

$ sudo apt-get update

$ sudo apt-get upgrade

$ sudo apt-get install curl libcurl3

$ sudo apt-get install cmake wget

$ sudo apt-get install libcanberra-gtk*

$ curl -sL https://deb.nodesource.com/setup_12.x | sudo -E bash -

$ sudo apt-get install -y nodejs

$ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 9DA31620334BD75D9DCB49F368818C72E52529D4

$ echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu xenial/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

$ sudo apt-get update

$ sudo apt-get install -y openssl mongodb-org

# Start service

$ sudo systemctl start mongod

# Enable service on boot

$ sudo systemctl enable mongod

written by Niels Lohmann.

$ git clone https://github.com/nlohmann/json.git

$ cd json

$ mkdir build

$ cd build

$ cmake ..

$ make -j4

$ sudo make install

$ sudo apt-get install codeblocks

Follow this guide.

Follow this guide.

Due to their large size, all darknet models are stored at Gdrive ALPR_models.zip.

After downloading you can unzip and save the models in the appropriate folder.

To extract and run the network in Code::Blocks

$ mkdir MyDir

$ cd MyDir

$ git clone https://github.com/xactai/qengineering-01.git

Your MyDir folder must now look like this:

.

├── models

│ ├── lp-detection-layout-classification.cfg

│ ├── lp-detection-layout-classification.data

│ ├── lp-detection-layout-classification.names

│ ├── lp-detection-layout-classification.weights

│ ├── lp-recognition.cfg

│ ├── lp-recognition.data

│ ├── lp-recognition.names

│ ├── lp-recognition.weights

│ ├── vehicle-detection.cfg

│ ├── vehicle-detection.data

│ ├── vehicle-detection.names

│ └── vehicle-detection.weights

├── include

│ ├── darkunistd.h

│ ├── http_stream.h

│ ├── MJPG_sender.h

│ ├── Numbers.h

│ ├── Regression.h

│ ├── RTSPcam.h

│ └── Tjson.h

├── src

│ ├── http_stream.cpp

│ ├── MJPG_sender.cpp

│ ├── main.cpp

│ ├── Regression.cpp

│ ├── RTSPcam.cpp

│ └── Tjson.cpp

├── CMakeLists.txt

├── config.json

└── YOLO_ALPR.cbp

All required settings are listed in the config.json file. Without this file, the app will not start.

{

"VERSION": "1.0.0",

"VIDEO_INPUT": "CCTV",

"VIDEO_INPUTS_PARAMS": {

"image": "./images/4.jpg",

"folder": "./inputs/images",

"video": "./images/demo.mp4",

"usbcam": "v4l2src device=/dev/video0 ! video/x-raw, framerate=30/1, width=640, height=360 ! videoconvert ! appsink",

"CSI1": "nvarguscamerasrc sensor_id=0 ! video/x-raw(memory:NVMM),width=640, height=480, framerate=15/1, format=NV12 ! nvvidconv ! video/x-raw, format=BGRx, width=640, height=480 ! videoconvert ! video/x-raw, format=BGR ! appsink",

"CSI2": "nvarguscamerasrc sensor_id=1 ! video/x-raw(memory:NVMM),width=640, height=480, framerate=15/1, format=NV12 ! nvvidconv ! video/x-raw, format=BGRx, width=640, height=480 ! videoconvert ! video/x-raw, format=BGR ! appsink",

"CCTV": "rtsp://192.168.178.129:8554/test/",

"remote_hls_gstreamer": "souphttpsrc location=http://YOUR_HLSSTREAM_URL_HERE.m3u8 ! hlsdemux ! decodebin ! videoconvert ! videoscale ! appsink"

},

"RoI": {

"x_offset": 220,

"y_offset": 500,

"width": 640,

"height": 480

},

"MJPEG_PORT": 8090,

"MJPEG_WIDTH": 320,

"MJPEG_HEIGHT": 240,

"VEHICLE_MODEL": "./models/vehicle-detection",

"LICENSE_MODEL": "./models/lp-detection-layout-classification",

"OCR_MODEL": "./models/lp-recognition",

"PRINT_ON_CLI": true,

"PRINT_ON_RENDER": true,

"FoI_FOLDER": "none",

"VEHICLES_FOLDER": "none",

"PLATES_FOLDER": "none",

"JSONS_FOLDER": "./outputs/jsons",

"RENDERS_FOLDER": "none",

"HEURISTIC_ON": true,

"THRESHOLD_VERHICLE": 0.25,

"THRESHOLD_PLATE": 0.01,

"THRESHOLD_OCR": 0.5

}Select your video input. It can be one of the sources listed under VIDEO_INPUTS_PARAMS:

file, movie, usbcam, raspberrycam, remote_cam or remote_hls_gstreamer.

Default choice is an RTSP video stream.

| Item | Description |

|---|---|

| image | Name and location of the picture. It must be a jpg or png file. |

| folder | Directory containing the pictures. They must be jpg or png. |

| video | Name and location of the video file. |

| usbcam | The GStreamer pipeline connecting the ALPR to an USB camera. |

| CSI1 | The GStreamer pipeline connecting the ALPR to an MIPI camera (port 0). |

| CSI2 | The GStreamer pipeline connecting the ALPR to an MIPI camera (port 1). |

| CCTV | The GStreamer pipeline connecting the ALPR to an RTSP source. |

| remote_hls_gstreamer | The GStreamer pipeline connecting the ALPR to an HLS source. |

The coordinates of the cropped image that will be analyzed. All parameters are checked in advance.

At run time, they can be modified if necessary to avoid crashes. The size and height take precedence over the x and y offset.

The port number of the local host to which the video is streamed.

The image size send to the local host.

The name and location where the darknet deep learning models can be found.

You need three sets: one for detecting the vehicle, one for detecting a license plate and one for optical character recognition.

Each set constist of three files. The topology (.cfg) file, the weights (.weights) file and the labels (.names).

When the boolean PRINT_ON_CLI is true, license plates are printed on the terminal.

When false, the license plates are still detected and sent as JSON string to local port 8070, but are not shown on the terminal window.

When the boolean PRINT_ON_RENDER is true, vehicles, license plates and OCR outcomes are drawn into the output window.

Locations where debug images are stored. When the folder name is none the storage is inhibited.

FoI_FOLDER: Every frame being analysed.

VEHICLES_FOLDER: Every vehicle found.

PLATES_FOLDER: Every lisence plate found.

JSONS_FOLDER: OCR json files.

RENDERS_FOLDER: Frames shown with boxes around found items.

👉 Note that the amount of data stored can be huge! Within a few minutes, the SD card can be completely written.

When the boolean HEURISTIC_ON is true, the characters found are sorted on position and doubles are removed.

The threshold appied to the darknet model mentioned

Before compiling and running the application, modify the config.json to your situation.

Once done, you have two options.

The first option is using CMake. Please follow the next commands

$ cd *MyDir* <br/>

$ mkdir build

$ cd build

$ cmake ..

$ make

The second option is using Code::Blocks, an GNU C++ IDE.

Load the project file YOLO_ALPR.cbp in Code::Blocks and run <F9>.

More info follow the instructions at Hands-On.

Updating ReadME to check the contributor list in Github repo.