
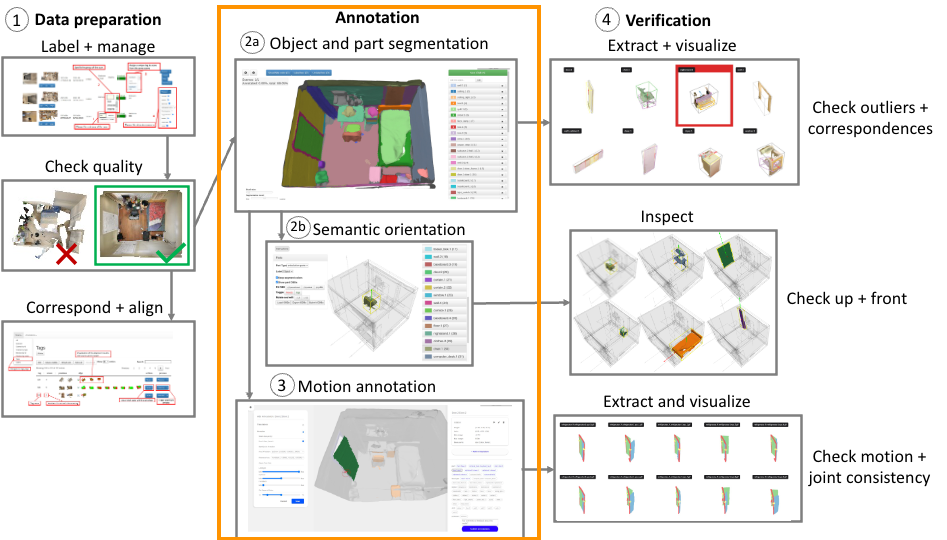

Multiscan Annotation Pipeline

The SSTK provides the 3D annotation tools used for creating the MultiScan dataset, which consists of reconstructed indoor 3D environments annotated with object parts and motion parameters.

The 3D annotation pipeline consists of the following steps:

- Triangle-based semantic segmentation labeling of objects and parts

- Specifying the semantic orientation of the bounding boxes of each object using the Scan OBB Aligner

- Annotation of articulation and motion parameters with the Articulation Annotator

Instructions for using the annotation pipeline (once it is set up) are provided as part of the MultiScan documentation.

If you use any of the annotation tools for MultiScan, please cite:

@inproceedings{mao2022multiscan,

author = {Mao, Yongsen and Zhang, Yiming and Jiang, Hanxiao and Chang, Angel X and Savva, Manolis},

title = {Multi{S}can: Scalable {RGBD} scanning for {3D} environments with articulated objects},

booktitle = {Advances in Neural Information Processing Systems},

year = {2022}

}

An overview of the architecture of the annotation pipeline for MultiScan is shown below. The annotators provided by the SSTK are highlighted in orange.

The annotation pipeline uses a MySQL database for storing annotations.

See the MySQL installation instructions to install your own instance of MySQL, then run the provided DDL scripts in scripts/db to create the annotation tables. Once you have set up a MySQL server, edit config.annDb in server/config/index.js to point to your MySQL instance.

Please see Preparing assets for annotation for how to prepare your assets for annotation. The metadata file used for the MultiScan annotators has been updated with additional fields, so please see https://github.com/smartscenes/sstk-metadata/blob/master/data/multiscan/multiscan.json for an example.

For MultiScan annotation, we assume that you have a reconstructed, textured mesh (e.g. .obj with .mtl).

Once you have a textured mesh, you should make sure that the metadata file for your assets indicates where the textured mesh can be found.

Example (adding decimated textured mesh to metadata file):

"dataTypes": {

...

"mesh": [

{

"name": "textured-v1.1",

"format": "obj",

"path": "${rootPath}/${id}/v1.1/textured_mesh_2048/${id}.obj",

"defaultUp": [ 0, 0, 1 ], "defaultFront": [ 0, -1, 0], "defaultUnit": 1,

"defaultMaterialType": "basic",

"materialSidedness": "Front",

"options": { "ignoreZeroRGBs": true, "computeNormals": true, "smooth": false, "keepVertIndices": true }

}

]

}
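As a rough illustration of how the path template in the entry above is meant to be read, the following Python sketch expands the ${rootPath} and ${id} placeholders with the standard library's string.Template. This is not how the SSTK resolves paths internally; the helper name, root directory, and scan id are made-up values for illustration only.

```python
from string import Template


def expand_asset_path(template: str, root_path: str, asset_id: str) -> str:
    """Substitute the ${rootPath} and ${id} placeholders in a metadata path template."""
    return Template(template).substitute(rootPath=root_path, id=asset_id)


mesh_path = expand_asset_path(
    "${rootPath}/${id}/v1.1/textured_mesh_2048/${id}.obj",
    root_path="/data/multiscan",  # hypothetical root directory
    asset_id="scene_00001_00",    # hypothetical scan id
)
print(mesh_path)
```

Printing the expanded path for one of your own scans and checking that the file exists is a quick way to catch typos in the metadata before loading the asset in the annotator.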

Below are some details of how to process and prepare the textured mesh and different steps of the annotation process.

The main Multiscan WebUI will include links to the different annotation stages. In addition, you can add customized grouped annotation views for easy access to your scans.

Examples of grouped annotation views are at:

- grouped-annotator.pug: for accessing the different annotation tools

- grouped-viewer.pug: for viewing annotations

Other views used for the MultiScan annotators are found in server/proj/multiscan/views.

In this step, you annotate the objects and parts in the 3D reconstructions of your scans.

To annotate your reconstructed, textured mesh with objects and parts, first make sure that it has a set of multi-level segmentations that can be used in the annotation pipeline.

If you have a mesh of the scan, use the MultiScan Segmentator to create a multi-granularity segmentation using Felzenszwalb and Huttenlocher's Graph Based Image Segmentation algorithm on computed mesh normals.

Once you have the segmentations, you need to update your asset's metadata file so it knows about them.

Example (adding hierarchical segmentation to metadata file):

"dataTypes": {

...

"segment": [

{

"name": "triseg-hier-v1.1",

"format": "indexedSegmentation",

"variants": {

"varying": ["granularity"],

"granularity": ["0.500000", "0.100000", "0.050000"],

"name": ["triseg-hier-v1.1", "triseg-fine-hier-v1.1", "triseg-finest-hier-v1.1"],

"file": "${rootPath}/${id}/v1.1/segs_tri/${id}_decimated_colored.${granularity}.segs.json"

},

"createdFrom": { "dataType": "mesh", "name": "textured-v1.1" }

}

]

}

In this example we specify three granularities, each stored at a path matching the pattern ${rootPath}/${id}/v1.1/segs_tri/${id}_decimated_colored.${granularity}.segs.json. For instance, for granularity 0.050000, the segmentation is expected to be at ${rootPath}/${id}/v1.1/segs_tri/${id}_decimated_colored.0.050000.segs.json. The name field is an array of the same length as the granularity array and provides a handle for referring to each segmentation. For instance, triseg-finest-hier-v1.1 refers to the segmentation with granularity 0.050000. This allows independent segmentations at different granularities to be generated, hooked up, and tested with the SSTK to see which granularity works well. In this case, we will use the finest segmentation: triseg-finest-hier-v1.1.
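The pairing between the name and granularity arrays can be sketched in a few lines of Python. The snippet below is only an illustration of the convention described above, not the SSTK's own variant expansion; the function name, root directory, and scan id are assumptions.

```python
from string import Template

# The "variants" block from the metadata example above.
variants = {
    "varying": ["granularity"],
    "granularity": ["0.500000", "0.100000", "0.050000"],
    "name": ["triseg-hier-v1.1", "triseg-fine-hier-v1.1", "triseg-finest-hier-v1.1"],
    "file": "${rootPath}/${id}/v1.1/segs_tri/${id}_decimated_colored.${granularity}.segs.json",
}


def expand_variants(variants: dict, root_path: str, asset_id: str) -> dict:
    """Map each segmentation name to the file path for its granularity."""
    names = variants["name"]
    granularities = variants["granularity"]
    if len(names) != len(granularities):
        raise ValueError("name and granularity arrays must have the same length")
    template = Template(variants["file"])
    return {
        name: template.substitute(rootPath=root_path, id=asset_id, granularity=g)
        for name, g in zip(names, granularities)
    }


# Hypothetical root directory and scan id.
files = expand_variants(variants, "/data/multiscan", "scene_00001_00")
for name, path in files.items():
    print(name, "->", path)
```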

After adding this entry, the asset should be ready for semantic segmentation annotation.

Testing access. To test access, replace [fullAssetId] in the modelId URL parameter with the full ID of your asset ([yourAssetName.assetId]) in this URL: http://localhost:8010/multiscan/segment-annotator?modelId=[fullAssetId]&format=textured-v1.1&segmentType=triseg-finest-hier-v1.1&condition=manual&palette=d3_unknown_category19&taskMode=fixup&startFrom=latest

Note that the format and segmentType parameters specify the mesh and segmentation to use. These should correspond exactly to the names you specified in the metadata. In the above example, three granularities of triangle segmentation are specified (triseg-hier-v1.1, triseg-fine-hier-v1.1, and triseg-finest-hier-v1.1), and the above URL selects the last one, triseg-finest-hier-v1.1.

The condition parameter is a field specifying the annotation condition (provided for filtering later if you have different conditions). The palette parameter specifies the color palette to use, and taskMode=fixup&startFrom=latest indicates that you want to start from the latest annotation. This is good for continuing from your previous annotations, but as you are just setting up the segment annotator and hooking up your asset, it will start with an unannotated scan.
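If you need test URLs for many scans, the query string can be assembled programmatically. The following Python sketch rebuilds the example URL above from its parameters; the function name and the full asset id are hypothetical, and the base URL assumes the local server address used in the example.

```python
from urllib.parse import urlencode


def segment_annotator_url(
    full_asset_id: str,
    mesh_name: str = "textured-v1.1",
    segment_type: str = "triseg-finest-hier-v1.1",
    base_url: str = "http://localhost:8010",  # assumed local SSTK server
) -> str:
    """Build a segment annotator URL using the parameters described above."""
    params = {
        "modelId": full_asset_id,
        "format": mesh_name,           # must match the mesh name in the metadata
        "segmentType": segment_type,   # must match a segmentation name in the metadata
        "condition": "manual",
        "palette": "d3_unknown_category19",
        "taskMode": "fixup",
        "startFrom": "latest",
    }
    return f"{base_url}/multiscan/segment-annotator?{urlencode(params)}"


# Hypothetical full asset id of the form [yourAssetName.assetId].
url = segment_annotator_url("multiscan.scene_00001_00")
print(url)
```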

See MultiScan Semantic Annotation for how to use the semantic annotation interface and the labeling conventions used in MultiScan for labeling objects and parts.

Please note that it is important to follow the convention of <object_name>.<object_instance_id>:<part_name>.<part_instance_id> (e.g. cabinet.1:door.1), as that is how the rest of the annotation tools identify object instances and parts. If an object does not have any parts, the part information can be omitted (e.g. wall.1).

In MultiScan, we have multiple scans of the same environments. To reduce manual effort in semantic annotation, we allow for the projection of labels from one scan to another. Note that the projection will result in noisy labels that still need to be fixed up manually, as the two scans may not be perfectly aligned, and the objects and their states are likely to have changed between the two scans. To allow for the projection, you need to tell the SSTK where to find the alignment information between a set of scans. This is specified by adding the following to the metadata file.
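A small validator can help catch labels that do not follow this convention before they reach the downstream tools. The Python sketch below is an assumption-laden illustration, not the parser the SSTK uses: it assumes that instance ids are non-negative integers and that names contain neither "." nor ":".

```python
import re
from typing import NamedTuple, Optional

# <object_name>.<object_instance_id>[:<part_name>.<part_instance_id>]
# Assumption: names contain no "." or ":" and instance ids are integers.
LABEL_PATTERN = re.compile(
    r"^(?P<object_name>[^.:]+)\.(?P<object_id>\d+)"
    r"(?::(?P<part_name>[^.:]+)\.(?P<part_id>\d+))?$"
)


class ParsedLabel(NamedTuple):
    object_name: str
    object_id: int
    part_name: Optional[str]
    part_id: Optional[int]


def parse_label(label: str) -> ParsedLabel:
    """Split a label into object and (optional) part components."""
    match = LABEL_PATTERN.match(label)
    if match is None:
        raise ValueError(f"label does not follow the convention: {label!r}")
    part_id = match.group("part_id")
    return ParsedLabel(
        object_name=match.group("object_name"),
        object_id=int(match.group("object_id")),
        part_name=match.group("part_name"),
        part_id=int(part_id) if part_id is not None else None,
    )


print(parse_label("cabinet.1:door.1"))
print(parse_label("wall.1"))
```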

Example (specifying how this scan aligns to a reference scan):

"dataTypes": {

...

"alignments": [

{

"name": "alignment-to-ref",

"file": "${rootPath}/${id}/v1.1/${id}_pair_align.json"

}

]

}

The semantically annotated parts need to be added to the metadata file so that they can be used by downstream annotators (e.g. the Scan OBB Aligner and Articulation Annotator). You can either make the annotations from the DB directly available, or export the annotations to a file (so they don't change) and only then allow downstream annotation.

The following example shows how to update the metadata file so that annotated parts (from the DB) are directly available:

"dataTypes": {

...

"parts": [

{

"name": "articulation-parts",

"partType": "annotated-segment-triindices",

"files": {

"parts": "${baseUrl}/annotations/latest?itemId=${fullId}&task=multiscan-annotate&type=segment-triindices"

},

"createdFrom": {

"dataType": "mesh",

"name": "textured-v1.1"

}

}

]

}

For MultiScan-style triangle-level annotations, the semantic annotations are stored in the main annotations table with type=segment-triindices.

The ids of annotated records can be retrieved using /annotations/list/latest/ids?type=segment-triindices

Use /annotations/latest?itemId=[fullAssetId]&type=segment-triindices to fetch the latest annotation for the given asset.

Use /annotations/get/[annotationId] to fetch the annotation with a specific annotation id (useful for looking at earlier annotation records).
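For scripted access, the endpoints above can be wrapped in small helpers. The Python sketch below assumes that the endpoints are served from the same local address as the annotator (http://localhost:8010) and that they return JSON; both are assumptions, as are the function names and the example ids.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost:8010"  # assumed address of your SSTK server


def latest_annotation_url(full_asset_id: str, base_url: str = BASE_URL) -> str:
    """URL for the latest segment-triindices annotation of an asset."""
    query = urlencode({"itemId": full_asset_id, "type": "segment-triindices"})
    return f"{base_url}/annotations/latest?{query}"


def annotation_by_id_url(annotation_id: int, base_url: str = BASE_URL) -> str:
    """URL for a specific annotation record."""
    return f"{base_url}/annotations/get/{annotation_id}"


def fetch_json(url: str, timeout: float = 30.0):
    """Fetch a URL and decode the response body as JSON (assumes a JSON response)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


# Hypothetical asset id and annotation id; call fetch_json(...) on these
# URLs once your server is running.
print(latest_annotation_url("multiscan.scene_00001_00"))
print(annotation_by_id_url(42))
```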

Example of exported segmentation annotation:

{

"id": ..., # Unique id for this annotation

"appId":"SegmentAnnotator.v6-20220822", # App name, version, and date

"itemId": "multiscan.xxxx", # Full asset id

"task": "multiscan-annotate", # task name

"taskMode": "fixup", # task mode

"type": "segment-triindices", # annotation type

"workerId": "...", # worker name

"data": {

"stats": { # statistics about annotation progress

"initial": {

"annotatedFaces":0,

"unannotatedFaces":28276,

"totalFaces":28276,

"annotatedFaceArea":0,

"unannotatedFaceArea":41122.76432144284,

"totalFaceArea":41122.76432144284,

"percentComplete":0

},

"delta": { ... }, # similar structure as for initial indicating delta

"total": { ... } # similar structure as for initial indicating total

},

"timings": { # timing information

"times": {

"initial":1681827396738,

"modelLoad": {

"start":1681827397606,

"end":1681827400043,

"duration":2437

},

"annotatorReady":1681827400604,

"annotationSubmit":1681827795164

},

"durations": { # estimated durations (in ms) of how long things took

"modelLoad":2437,

"annotatorReady":3866,

"annotationSubmit":398426

}

},

"metadata": { # metadata indicating what mesh and segmentation was used

"segmentType": "triseg-finest-hier-v1.1",

"meshName": "textured-v1.1",

"startFrom": "latest",

"startAnnotations": "aggr"

},

"annotations": [

... # array of annotations

{

"partId": 1, # partId

"objectId": 1, # objectId

"label":"oil_bottle.1", # label

"obb": {

"centroid": [...], # vector3 indicating obb centroid

"axesLengths": [...], # vector3 indicating size of obb

"normalizedAxes": [...], # 3x3 matrix (as column order vector) indicating basis of obb

"min": [...], # vector3 indicating minimum of obb

"max": [...] # vector3 indicating maximum of obb

},

"dominantNormal": [...], # vector3 indicating direction of dominant normal of obb

"triIndices": [...] # array of indices of triangles belonging to this label

}

...

]

}

}
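Once a record in this shape has been exported, its annotations array can be summarized with a few lines of Python. The sketch below groups entries by objectId and counts the triangles assigned to each object. It relies only on the fields shown in the example above; the record used here is made up for illustration.

```python
from collections import defaultdict


def summarize_objects(record: dict) -> dict:
    """Group annotation entries by objectId and count their triangles."""
    summary = defaultdict(lambda: {"labels": [], "triangles": 0})
    for annotation in record["data"]["annotations"]:
        entry = summary[annotation["objectId"]]
        entry["labels"].append(annotation["label"])
        entry["triangles"] += len(annotation["triIndices"])
    return dict(summary)


# Made-up record containing only the fields used by summarize_objects.
record = {
    "itemId": "multiscan.xxxx",
    "data": {
        "annotations": [
            {"partId": 1, "objectId": 1, "label": "cabinet.1:door.1", "triIndices": [0, 1, 2]},
            {"partId": 2, "objectId": 1, "label": "cabinet.1:drawer.1", "triIndices": [3, 4]},
            {"partId": 3, "objectId": 2, "label": "wall.1", "triIndices": [5]},
        ]
    },
}

for object_id, info in summarize_objects(record).items():
    print(object_id, info["labels"], info["triangles"])
```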

Use ssc/export-annotated-ply.js to export a PLY file colored using the semantic segmentation annotations.

Once you have semantically annotated objects, you can specify their semantic orientation using the Scan OBB Aligner.

See above for making sure the paths to the segmentation annotations are available.

Once you have semantically annotated parts, you are ready to use the Articulation Annotator to specify the motion parameters.

See above for making sure the paths to the segmentation annotations are available.

Example (adding articulations for export):

"dataTypes": {

...

"articulations": [

{

"name": "articulations",

"files": {

"articulations": "${baseUrl}/articulation-annotations/load-annotations?modelId=${fullId}"

},

"createdFrom": {

"dataType": "mesh",

"name": "textured-v1.1"

}

}

]

}
