
Optimization to decoding of parquet level streams (#13203)

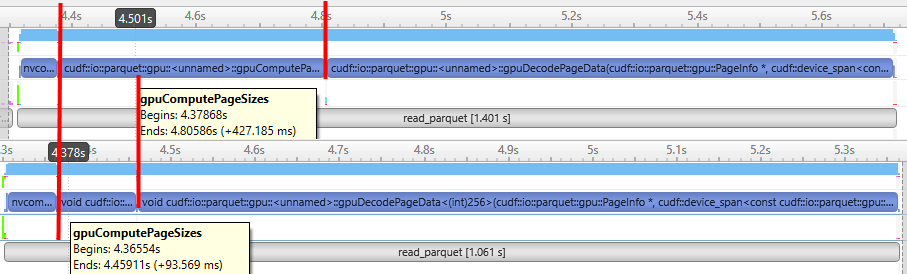

An optimization to the decoding of the definition and repetition level streams in Parquet files. Previously, we were decoding these streams using a single warp. With this optimization we do it arbitrarily wide (currently set to 512 threads). This gives a dramatic improvement. The core of the work is in the new file `rle_stream.cuh`, which encapsulates the decoding in an `rle_stream` object.

This PR only applies the optimization to the `gpuComputePageSizes` kernel, used for preprocessing list columns and for the chunked read case involving strings or lists. In addition, the `UpdatePageSizes` function has been improved to also work at the block level instead of just using a single warp. Testing with the cudf Parquet reader list benchmarks results in as much as a **75%** reduction in time in the `gpuComputePageSizes` kernel.

Future PRs will apply this to the `gpuDecodePageData` kernel.

Leaving as a draft for the moment - more detailed benchmarks and numbers forthcoming, along with some possible parameter tuning.

Benchmark info. A before/after sample from the `parquet_reader_io_compression` suite on an A5000. The kernel goes from 427 milliseconds to 93 milliseconds. This seems to be a pretty typical situation, although it will definitely be affected by the encoded data (run lengths, etc).

The reader benchmarks that involve this kernel yield some great improvements.

```
parquet_read_decode (A = Before. B = After)
| data_type | io            | cardinality | run_length | bytes_per_second (A) | bytes_per_second (B) |
|-----------|---------------|-------------|------------|----------------------|----------------------|
| LIST      | DEVICE_BUFFER | 0           | 1          | 5399068099           | 6044036091           |
| LIST      | DEVICE_BUFFER | 1000        | 1          | 5930855807           | 6505889742           |
| LIST      | DEVICE_BUFFER | 0           | 32         | 6862874160           | 7531918407           |
| LIST      | DEVICE_BUFFER | 1000        | 32         | 6781795229           | 7463856554           |
```

```
parquet_read_io_compression (A = Before. B = After)
| io            | compression | bytes_per_second (A) | bytes_per_second (B) |
|---------------|-------------|----------------------|----------------------|
| DEVICE_BUFFER | SNAPPY      | 307421363            | 393735255            |
| DEVICE_BUFFER | SNAPPY      | 323998549            | 426045725            |
| DEVICE_BUFFER | SNAPPY      | 386112997            | 508751604            |
| DEVICE_BUFFER | SNAPPY      | 381398279            | 498963635            |
```

Authors:
- https://github.com/nvdbaranec

Approvers:
- Yunsong Wang (https://github.com/PointKernel)
- Vukasin Milovanovic (https://github.com/vuule)

URL: #13203
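For readers unfamiliar with what a level stream contains, the following is a minimal host-side sketch of decoding Parquet's RLE/bit-packed hybrid encoding, which is the format used for definition and repetition levels. It is sequential C++ for illustration only and is not the GPU code in `rle_stream.cuh`; the names `read_varint` and `decode_levels` are hypothetical, and the sketch assumes any length prefix in front of the level data has already been stripped.

```cpp
#include <cassert>  // only needed if you add the usage checks below
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Read an unsigned LEB128 varint (the run header) and advance `pos`.
inline uint32_t read_varint(const std::vector<uint8_t>& buf, std::size_t& pos)
{
  uint32_t result = 0;
  for (int shift = 0; shift < 35; shift += 7) {
    if (pos >= buf.size()) { throw std::out_of_range("truncated run header"); }
    const uint8_t byte = buf[pos++];
    result |= static_cast<uint32_t>(byte & 0x7f) << shift;
    if ((byte & 0x80) == 0) { return result; }
  }
  throw std::invalid_argument("run header too long");
}

// Decode `num_values` levels of `bit_width` bits each from a hybrid stream.
// Hypothetical helper: a sequential reference, not the block-wide GPU decoder.
inline std::vector<uint32_t> decode_levels(const std::vector<uint8_t>& buf,
                                           int bit_width,
                                           std::size_t num_values)
{
  if (bit_width < 0 || bit_width > 32) { throw std::invalid_argument("bad bit width"); }
  std::vector<uint32_t> out;
  out.reserve(num_values);
  std::size_t pos = 0;

  while (out.size() < num_values) {
    const uint32_t header = read_varint(buf, pos);
    if (header & 1) {
      // Bit-packed run: (header >> 1) groups of 8 values, packed LSB first.
      const std::size_t groups = header >> 1;
      const std::size_t nbytes = groups * static_cast<std::size_t>(bit_width);
      if (pos + nbytes > buf.size()) { throw std::out_of_range("truncated bit-packed run"); }
      std::size_t bit_pos = 0;
      for (std::size_t i = 0; i < groups * 8; ++i) {
        uint32_t value = 0;
        for (int b = 0; b < bit_width; ++b, ++bit_pos) {
          const uint32_t bit = (buf[pos + bit_pos / 8] >> (bit_pos % 8)) & 1u;
          value |= bit << b;
        }
        // Trailing padding values in the last group are dropped.
        if (out.size() < num_values) { out.push_back(value); }
      }
      pos += nbytes;
    } else {
      // RLE run: (header >> 1) repeats of one value stored in
      // ceil(bit_width / 8) little-endian bytes.
      const std::size_t count       = header >> 1;
      const std::size_t value_bytes = (static_cast<std::size_t>(bit_width) + 7) / 8;
      if (pos + value_bytes > buf.size()) { throw std::out_of_range("truncated RLE run"); }
      uint32_t value = 0;
      for (std::size_t i = 0; i < value_bytes; ++i) {
        value |= static_cast<uint32_t>(buf[pos + i]) << (8 * i);
      }
      pos += value_bytes;
      for (std::size_t i = 0; i < count && out.size() < num_values; ++i) {
        out.push_back(value);
      }
    }
  }
  return out;
}
```

As a usage example, the bytes `{0x0A, 0x01, 0x03, 0xAA}` with `bit_width = 1` describe an RLE run of five 1s followed by one bit-packed group. Each run depends on the header of the run before it, which is why a naive decode is serial and why the encoded run lengths affect how much a wider decode helps.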


1 parent 403c83f

commit 1581773

Showing 8 changed files with 725 additions and 203 deletions.


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | `@@ -1,7 +1,7 @@` |
| 1 | 1 | `/*` |
| 2 | 2 | ` * Copyright 2019 BlazingDB, Inc.` |
| 3 | 3 | ` * Copyright 2019 Eyal Rozenberg <[email protected]>` |
| 4 | | `- * Copyright (c) 2020-2022, NVIDIA CORPORATION.` |
| | 4 | `+ * Copyright (c) 2020-2023, NVIDIA CORPORATION.` |
| 5 | 5 | ` *` |
| 6 | 6 | ` * Licensed under the Apache License, Version 2.0 (the "License");` |
| 7 | 7 | ` * you may not use this file except in compliance with the License.` |
| | | `@@ -44,7 +44,7 @@ namespace util {` |
| 44 | 44 | ` * `modulus` is positive. The safety is in regard to rollover.` |
| 45 | 45 | ` */` |
| 46 | 46 | `template <typename S>` |
| 47 | | `-S round_up_safe(S number_to_round, S modulus)` |
| | 47 | `+constexpr S round_up_safe(S number_to_round, S modulus)` |
| 48 | 48 | `{` |
| 49 | 49 | `  auto remainder = number_to_round % modulus;` |
| 50 | 50 | `  if (remainder == 0) { return number_to_round; }` |
| | | `@@ -67,7 +67,7 @@ S round_up_safe(S number_to_round, S modulus)` |
| 67 | 67 | ` * `modulus` is positive and does not check for overflow.` |
| 68 | 68 | ` */` |
| 69 | 69 | `template <typename S>` |
| 70 | | `-S round_down_safe(S number_to_round, S modulus) noexcept` |
| | 70 | `+constexpr S round_down_safe(S number_to_round, S modulus) noexcept` |
| 71 | 71 | `{` |
| 72 | 72 | `  auto remainder = number_to_round % modulus;` |
| 73 | 73 | `  auto rounded_down = number_to_round - remainder;` |
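The diff above only adds `constexpr` to the two rounding helpers. A minimal sketch of what that enables: the helpers become usable in constant expressions such as `static_assert` and compile-time sizes. The function bodies below are an assumption completed from the few lines visible in the diff, placed in a hypothetical `sketch` namespace so they are not mistaken for the library's definitions; the 512 and 1000 values are illustrative only.

```cpp
#include <cassert>  // only needed if you add runtime checks
#include <stdexcept>

namespace sketch {

// Round `number_to_round` up to the next multiple of `modulus` (modulus > 0).
// Assumption: rollover is reported by throwing, which is allowed in a
// constexpr function as long as the throw is not reached at compile time.
template <typename S>
constexpr S round_up_safe(S number_to_round, S modulus)
{
  auto remainder = number_to_round % modulus;
  if (remainder == 0) { return number_to_round; }
  auto rounded_up = number_to_round - remainder + modulus;
  if (rounded_up < number_to_round) { throw std::invalid_argument("rounding up overflowed"); }
  return rounded_up;
}

// Round `number_to_round` down to the previous multiple of `modulus` (modulus > 0).
template <typename S>
constexpr S round_down_safe(S number_to_round, S modulus) noexcept
{
  auto remainder    = number_to_round % modulus;
  auto rounded_down = number_to_round - remainder;
  return rounded_down;
}

}  // namespace sketch

// With constexpr, results can be checked and used at compile time.
constexpr int block_size = 512;  // illustrative: the decode width named in the commit message
static_assert(sketch::round_up_safe(1000, block_size) == 1024, "rounds up to a multiple of 512");
static_assert(sketch::round_down_safe(1000, block_size) == 512, "rounds down to a multiple of 512");

// A compile-time array size derived from the helper (hypothetical use).
inline int padded_buffer_size()
{
  static int buffer[sketch::round_up_safe(1000, block_size)] = {};
  return static_cast<int>(sizeof(buffer) / sizeof(buffer[0]));
}
```

Without `constexpr`, the two `static_assert` lines and the array bound would fail to compile, because the calls would not be constant expressions.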
