
Error on notify: context deadline exceeded #282

Comments

That seems highly unlikely to be related to the grouping. The most likely explanation is that notification requests to one of your receivers are timing out, and your configuration changes then cause no requests to be sent to that receiver in the first place. For further debugging, we need the full configuration (before AND after) and the label sets of the four alerts.

The difference between the old and new configuration is the "group_by" and "repeat_interval" values.

New alertmanager.conf:

Complete error:

With the old configuration, I have 5 alerts for the jira_chk receiver: 4 in one group and 1 in another.

I am also facing the same issue. Does Alertmanager retry failed notifications?

Here are my logs (using the latest stable version, v0.3.0):

@rohit01 Yes, Alertmanager retries failed notifications.

@rohit01 Could you share your configuration as well? Did it also happen after you changed something, or was it there from the beginning?

It started happening on its own one day, without any config change. All of a sudden, we stopped getting any alerts. We tried random things like restarting the container and making minor config changes, and it started working again, with intermittent failures. Here's the configuration:

This is worrying, as there are now two cases. But on the other hand, in both cases the error messages indicate a timeout while sending the notification (not anywhere in our code before or after), so I'm wondering if it's just an unlucky coincidence. Have you verified whether you can curl the respective notification endpoint (PagerDuty in your case, @rohit01) within the timeout?

@fabxc What is the timeout value? I'm unable to find it in the code.

@rohit01 I was not quite precise earlier. The general timeout is the grouping interval, which you can think of as the "update frequency" for a group of alerts. You have set yours very low; it should generally be on the order of 1 minute or more, as it is meant to keep you from getting spammed. PagerDuty, for that matter, will not even page you again and has issues updating the data (last time I checked), so there's no value in having it that low here. (See lines 314 to 316 in commit 7b20b77.)

Now, about your error logs on the failed retry attempts: there seems to be an issue in our retry code. We retry notifications with backoff based on a ticker. We attempt to receive a signal from that ticker, but want to abort if the context has timed out. In the code, we just do a plain select over both signals, so when both are ready the choice between them is random. Does that make sense? So your problem would most likely disappear if you set …

Sorry about addressing your issue super late, @kavyakul. Your error logs also simply indicate a failure to reach the notification endpoint.

This addresses the misleading error messages reported in #212. Explanation: #282 (comment). Fixes #282.

@fabxc Thanks for the explanation. We were running with group_interval set to 1s as well. Now I think we know the reason for our pain :)

Yes, I suppose we could think about making changes to allow a timeout larger than … @brian-brazil, what's your opinion?

I wouldn't consider any group interval under a minute to be sane. I think our current behaviour is fine. If you want some form of automated action faster than that, then you want a dedicated real-time control system, because humans can't respond that fast.

|

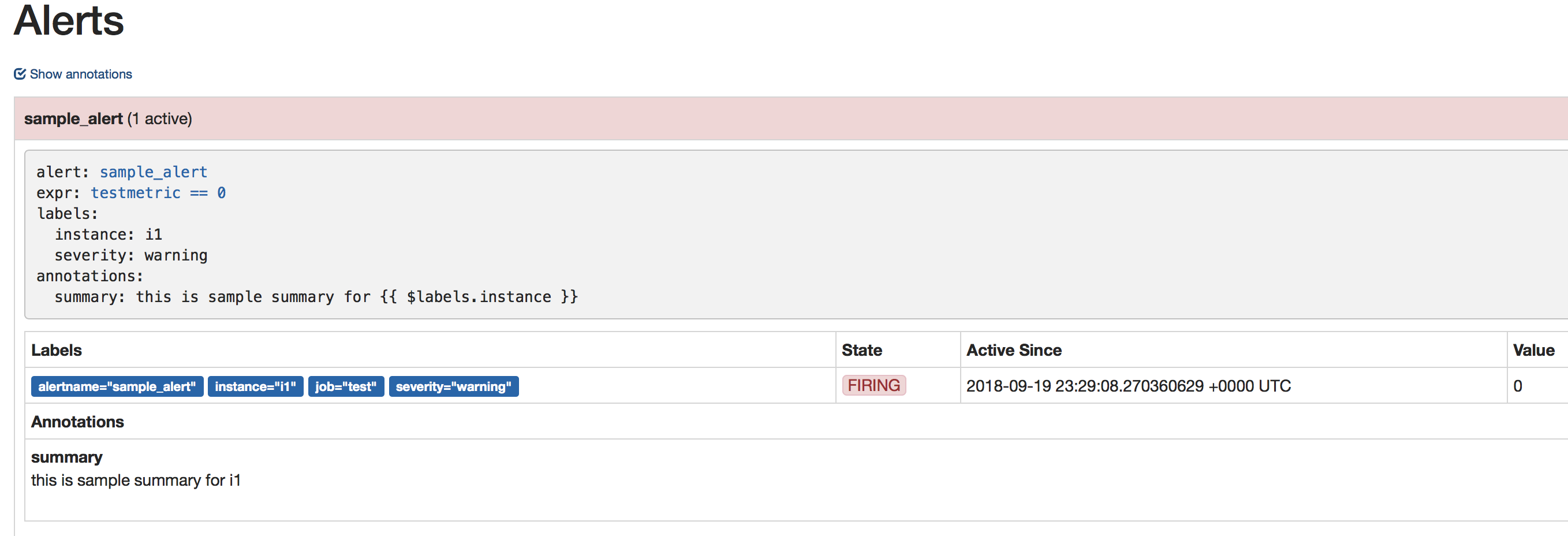

Hi, here is my alert rules config (alert.rules):

alertmanager.yaml (global and route sections):

Alert:

I can connect to the endpoint:

Error:

level=error ts=2018-09-19T23:31:48.278890325Z caller=notify.go:332 component=dispatcher msg="Error on notify" err="context deadline exceeded"

Could you please let me know of any solution for this?

Regards,

Also having the same issue here. My Alertmanager and Prometheus are both in a Kubernetes cluster, deployed with Helm using the stable/prometheus chart. Here is my configuration for Alertmanager, and here are the logs:

If I group my alerts in such a way that 4 alerts fall in one group, I get the following error:

ERRO[0300] Error on notify: context deadline exceeded source=notify.go:152

ERRO[0300] Notify for 1 alerts failed: context deadline exceeded source=dispatch.go:238

And if I modify the grouping so that each group has one alert, then things move smoothly.

Please help me find where I am going wrong.

alertmanager.conf:
