-

Notifications

You must be signed in to change notification settings - Fork 8

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

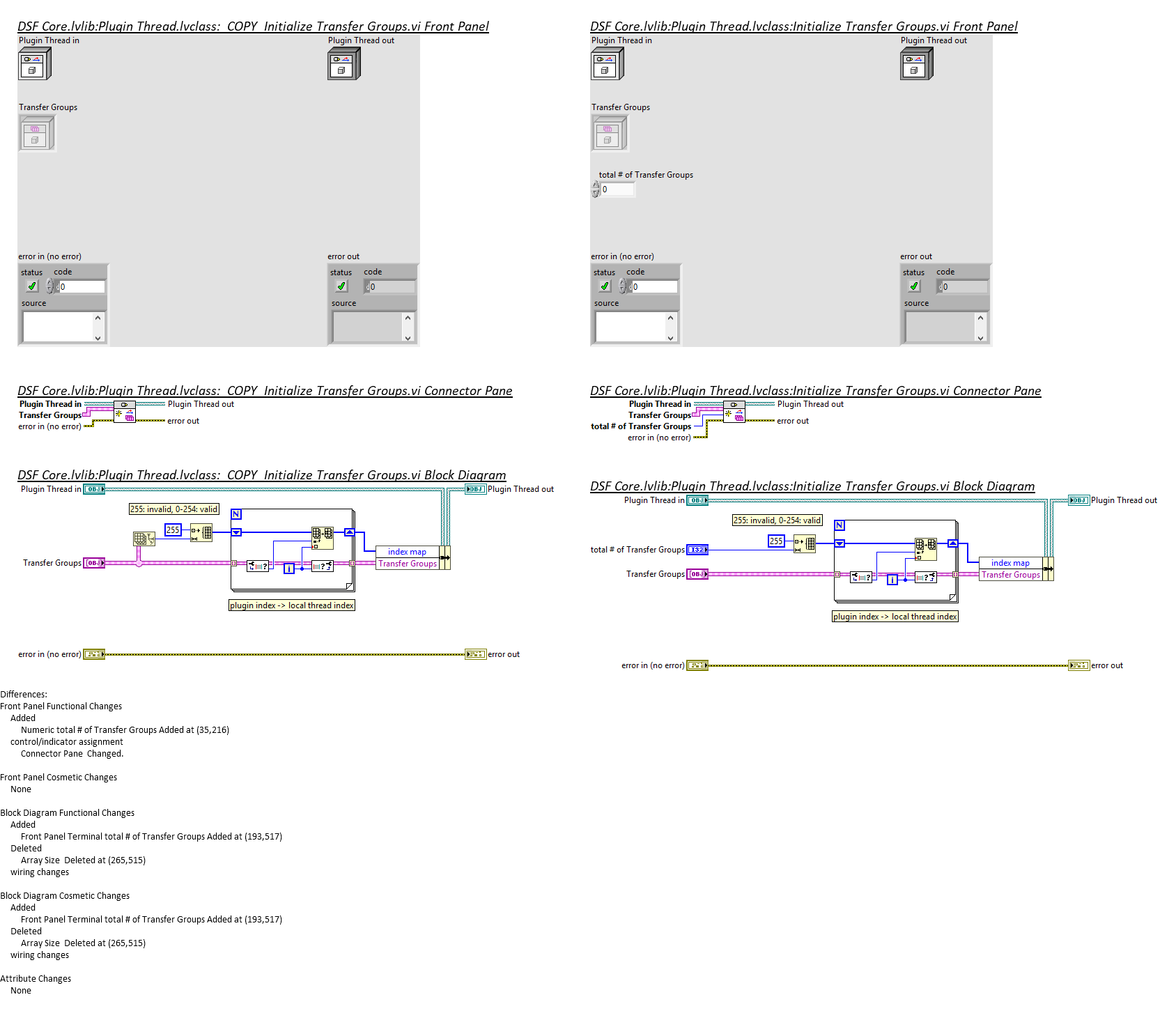

Resize the transfer group index map to the total number of transfer groups #40

Conversation

…roups It seems like this array was consistently undersized, which resulted in reads for all but the first thread being out-of-bounds and returning zero.

|

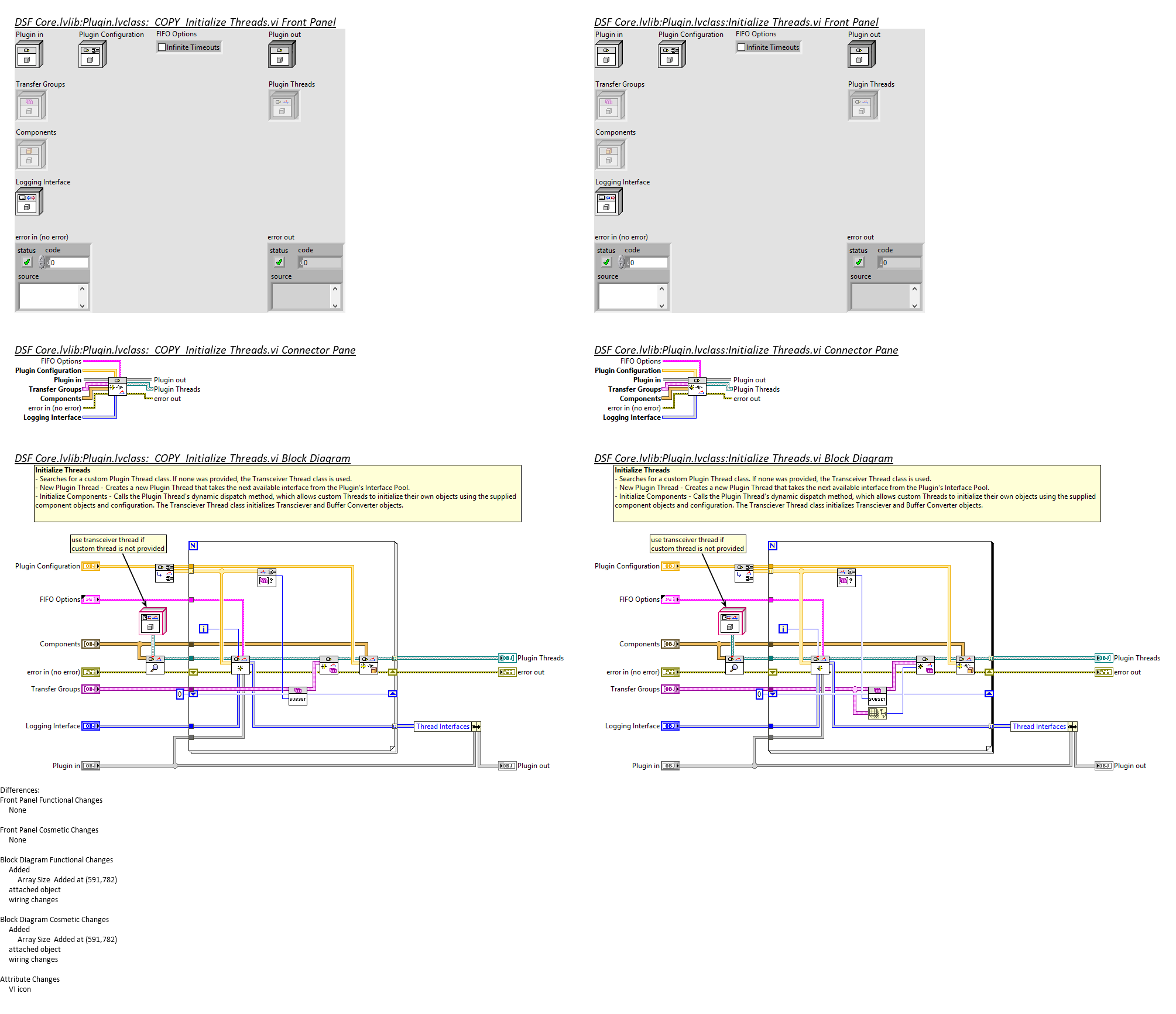

Bleep bloop! LabVIEW Diff Robot here with some diffs served up hot for your pull request. Notice something funny? Help fix me on my GitHub repo. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Changes to both VIs look good. Array Size does not change within For Loop in Initialize Threads VI, but there is no performance hit, and I like your clean, readable code.

|

We should create a tech debt item to catch the error if number of transfer groups exceeds 255. |

I'd prefer to keep the implementation details of |

See work item 1172889. |

It was actually because you had to pass the size of the array to initialize into the subVI that prompted the thought. We now pass total number of groups into the subVI which seems like more information than it should need to initialize the index map for the subset of groups, but I agree that more of the details about initialization are encapsulated with current implementation. |

Ahh, now I see what you're saying. That makes sense. I'm going to leave it as-is, pending @niphilj's review. |

I thought about this a lot, and tried to way the options. I do like the clarity of the code and even talked myself out of other options. Really, I appreciate the naming of the new terminal to be so clear, and ok with the code as-is. |

|

I tested this fix with 2 different configurations that exhibited the issue and the previously observed problem is not showing up anymore. |

What does this Pull Request accomplish?

Make the transfer group index map array size the total number of transfer groups, rather than the number of transfer groups in that specific thread. This array appears to be used to convert between the global transfer group id to the relative position of the transfer group in the owning thread.

We're being slightly wasteful with memory here: each thread is now getting an array equal in size to the total number of transfer groups, instead of either sharing a global copy of this array or shifting which index we look at in the originally sized array.

If

tis the number of threads,gthe total number of groups, andg_tis the number of groups in that thread, we previously allocatingt * g_t = gbytes (and probably could continue to do so if we reworked some of the surrounding infrastructure), and are now allocatingt * gbytes. That said, this change seemed to match the spirit of the existing code, and also seemed like the easiest implementation.Why should this Pull Request be merged?

Fixes #39. Threads with multiple transfer groups were accidentally having the first transfer group configuration applied to all transfer groups (except possibly in certain cases, like being in the first thread) due to an out-of-bounds indexing operation returning zero.

What testing has been done?

Notes for reviewers

The modified VIs are responsible for building the transfer group index map. This map is consumed in

DSF Core.lvlib:Plugin Thread.lvclass:Cast Command to Transfer Group.vi(and only there, as far as I can tell).My understanding of

Cast Command to Transfer Group.viis that the incomingTransfer Group IDis the global index of the transfer group, and that the outgoingTransfer Group idis the local index within the thread. For example, if there are two threads, the first with one group and the second with two groups, an incomingTransfer Group IDof1would correspond to the first group in the second thread, and the expected output would be0as it is the first group in that thread.