run/repro: add option to not remove outputs before reproduction #1214

Comments


What about corrupting the cache, @efiop? Is this for


@MrOutis Great point! We should


Adding my +1 here. This would be helpful for doing a warm start during parameter search. Some of our stages are very long-running, and starting from the previous results would help them complete much faster.
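A minimal sketch of the warm-start pattern described here, assuming the checkpoint is the stage's output and that it is *not* removed before reproduction (which is exactly what this issue asks for). The file name `model.ckpt.json` and the toy gradient-descent "training" are hypothetical stand-ins for a real long-running job.

```python
import json
import os

# Hypothetical checkpoint path; in a DVC pipeline this would be the stage's
# output, kept between reproductions instead of being deleted.
CKPT_PATH = "model.ckpt.json"


def load_or_init(path):
    """Warm start: reuse the previous checkpoint if it exists, else start fresh."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "weights": [0.0, 0.0]}


def train(state, steps, lr=0.1, target=(1.0, -2.0)):
    """Toy gradient descent on a squared error, standing in for real training."""
    w = list(state["weights"])
    for _ in range(steps):
        w = [wi - lr * 2 * (wi - ti) for wi, ti in zip(w, target)]
    return {"step": state["step"] + steps, "weights": w}


def run(path=CKPT_PATH, steps=10):
    """One stage execution: load (or init), train a bit more, save the checkpoint."""
    state = load_or_init(path)
    state = train(state, steps)
    with open(path, "w") as f:
        json.dump(state, f)
    return state
```

Running `run()` twice continues from step 10 to step 20 instead of restarting at 0; if the output were removed before each reproduction, every run would start from scratch.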


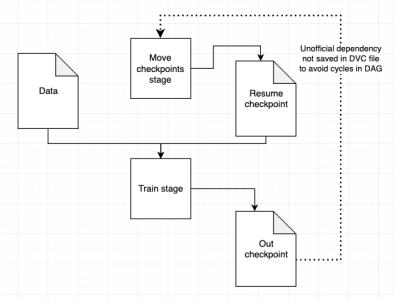

@AlJohri WDYT of this (hacky) possible solution? It should allow each Git commit to describe an accurate snapshot of a training run: the checkpoint you start from and the checkpoint that resulted.


It should probably look something like: … which would add something like … to the DVC-file for the ckpt output, so that


Should be closed by #1759.


@AlJohri There is a new option in `run` for this case. I'll add it to the docs soon, but in the meantime you can find it in the name of the closing issue.


What should I do if, from time to time, I want to start fresh? Use


@piojanu Actually, all this option does is set the `persist` flag inside the stage file. So if you want to change the behaviour later, just change this flag in
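To make "just change this flag" concrete, here is a sketch that flips the flag on an already-parsed stage file. It assumes the stage file has been loaded into a plain dict (e.g. with a YAML parser) and that each entry under `outs` is a mapping with a `path` key plus the `persist` flag mentioned above; the helper `set_persist` is hypothetical and not part of DVC's API.

```python
def set_persist(stage, out_path, persist=True):
    """Set the persist flag on one output of a parsed stage file (a dict).

    Assumes stage["outs"] is a list of dicts, each with at least a "path" key.
    This is an illustrative helper, not a DVC function.
    """
    for out in stage.get("outs", []):
        if out.get("path") == out_path:
            out["persist"] = persist
            return stage
    raise KeyError(f"no output named {out_path!r} in stage")


# Example: turn persistence off to "start fresh" on the next reproduction.
stage = {"cmd": "python train.py", "outs": [{"path": "model.ckpt", "persist": True}]}
set_persist(stage, "model.ckpt", persist=False)
```

Editing the file by hand has the same effect; the point is that persistence is per-output state recorded in the stage file, not something fixed at the time `run` was invoked.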


@piojanu It is also worth noting that this behaviour is only useful if the user actually appends data to the output. If, for example, our
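A small sketch of why appending matters: keeping an output around only carries state forward if the command reads or extends it. Here `log_run` and `count_lines` are hypothetical helpers; opening with mode `"a"` keeps previous contents, while mode `"w"` truncates the file, so a persisted output written that way ends up identical to a freshly created one.

```python
import os


def log_run(path, line, append=True):
    """Write one result line to `path`.

    append=True keeps earlier lines, so a persisted output accumulates history.
    append=False truncates the file first, so persisting it gains nothing.
    """
    mode = "a" if append else "w"
    with open(path, mode) as f:
        f.write(line + "\n")


def count_lines(path):
    """Number of lines in `path`, or 0 if the file does not exist yet."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return sum(1 for _ in f)
```

Two appending runs leave two lines in the file; a subsequent overwriting run leaves only one, regardless of whether the file was persisted.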


Yeah, I understand that :) But maybe it is worth including in the docs. Also, should


@piojanu It should work already, since the patch has been merged into master. It was my mistake: I didn't test whether `remove` works for persistent outputs.


Very good, guys! This is a very important feature; I have already started using it to great effect instead of my workaround above: https://dagshub.com/Guy/fairseq/src/dvc/dvc-example/train.dvc

I vote for having a short flag for it. I think it will be commonly used by anyone who wants to train with checkpoints, or for scenarios like this: https://discuss.dvc.org/t/version-checking-with-dvc/168/5

Maybe


@guysmoilov Thanks a lot for your input on …! Would you mind creating a feature request for it? 🙂


@AlJohri Quick question about the "warm start" and "reusing previous results": are you referring to reusing the previous model (trained with a different set of params) and continuing to train it with a new set? Or is it something different in your case? We would really appreciate your input here! Thanks!


Hey there, sorry, I'm actually not 100% sure what I meant before. It was related to more efficient hyperparameter searching, but I can't remember the particular use case. I had a job where the parameter search took a very long time, but I don't recall how I thought a warm start might solve that in this scenario. "Warm start machine learning" has a lot of hits on Google, so perhaps you can read more about the general use case there.


Hello, @guysmoilov, @AlJohri, @pared, @efiop! The idea of resuming a process from a certain point could be handled by the script itself (writing to a temporary file not specified on the …). It would be great if you could come up with an example of how you are using this feature today 😃
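A sketch of the script-side alternative suggested here, assuming progress is kept in a scratch file that is deliberately *not* declared as a stage output, so nothing outside the script touches it. The function `process`, the scratch path, and the `fail_at` parameter (used only to simulate a crash) are hypothetical.

```python
import json
import os


def process(items, scratch_path, fail_at=None):
    """Square each item, resuming after the last completed index.

    Progress lives in `scratch_path`, a temporary file that is not a declared
    output; the script removes it itself once the whole job has finished.
    `fail_at` simulates a crash at that index, for demonstration only.
    """
    state = {"next": 0, "results": []}
    if os.path.exists(scratch_path):
        with open(scratch_path) as f:
            state = json.load(f)
    for i in range(state["next"], len(items)):
        if fail_at is not None and i == fail_at:
            raise RuntimeError(f"simulated crash at item {i}")
        state["results"].append(items[i] ** 2)
        state["next"] = i + 1
        # Save progress after every item so a crash loses at most one step.
        with open(scratch_path, "w") as f:
            json.dump(state, f)
    if os.path.exists(scratch_path):
        os.remove(scratch_path)
    return state["results"]
```

If the first call crashes at item 2, a second call picks up at item 2 and returns the full result list. The trade-off versus a persistent output is that the intermediate state is invisible to DVC, so it is not versioned or cached.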

https://discordapp.com/channels/485586884165107732/485596304961962003/540232443769258014

https://discordapp.com/channels/485586884165107732/485586884165107734/547430257821483008

https://discordapp.com/channels/485586884165107732/485596304961962003/557823591379369994
