A GitHub Action for running Lighthouse audits automatically in a workflow, with a rich set of extra features. Use it with a simple configuration or customize it with Slack notifications, AWS S3 uploads of HTML reports, and more!

This project provides two ways of running audits: "locally" (the default) in a dockerized GitHub environment, or remotely via the Automated Lighthouse Check API. For basic usage, running locally will suffice, but if you'd like to maintain a historical record of Lighthouse audits and use other features, follow the steps and examples in the Automated Lighthouse Check API Usage section.


Use a simple configuration or choose from the variety of features below. See the example Lighthouse Check action implementation.

- 💛 Run Lighthouse audits on multiple URLs or just one.

- 💛 Save a record of all your audits via Automated Lighthouse Check.

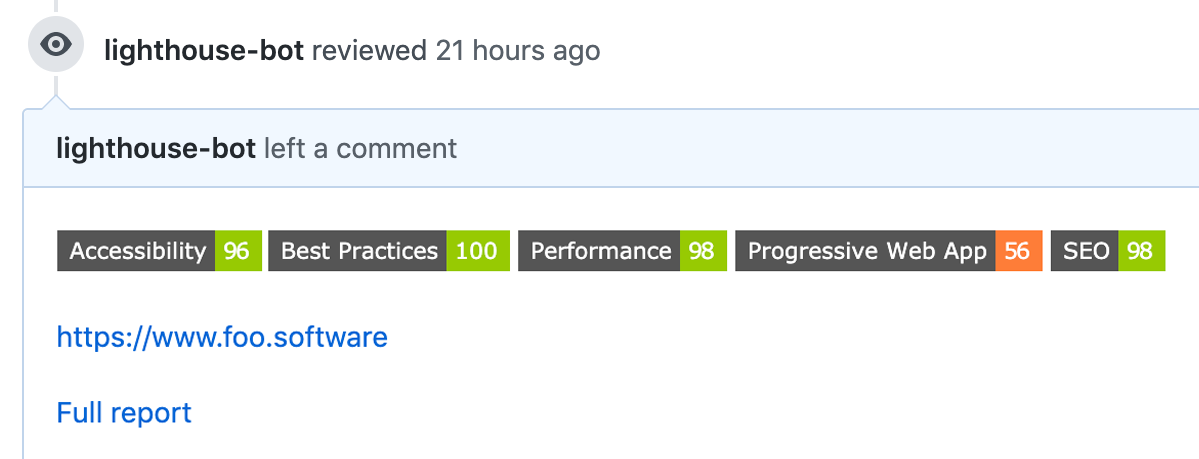

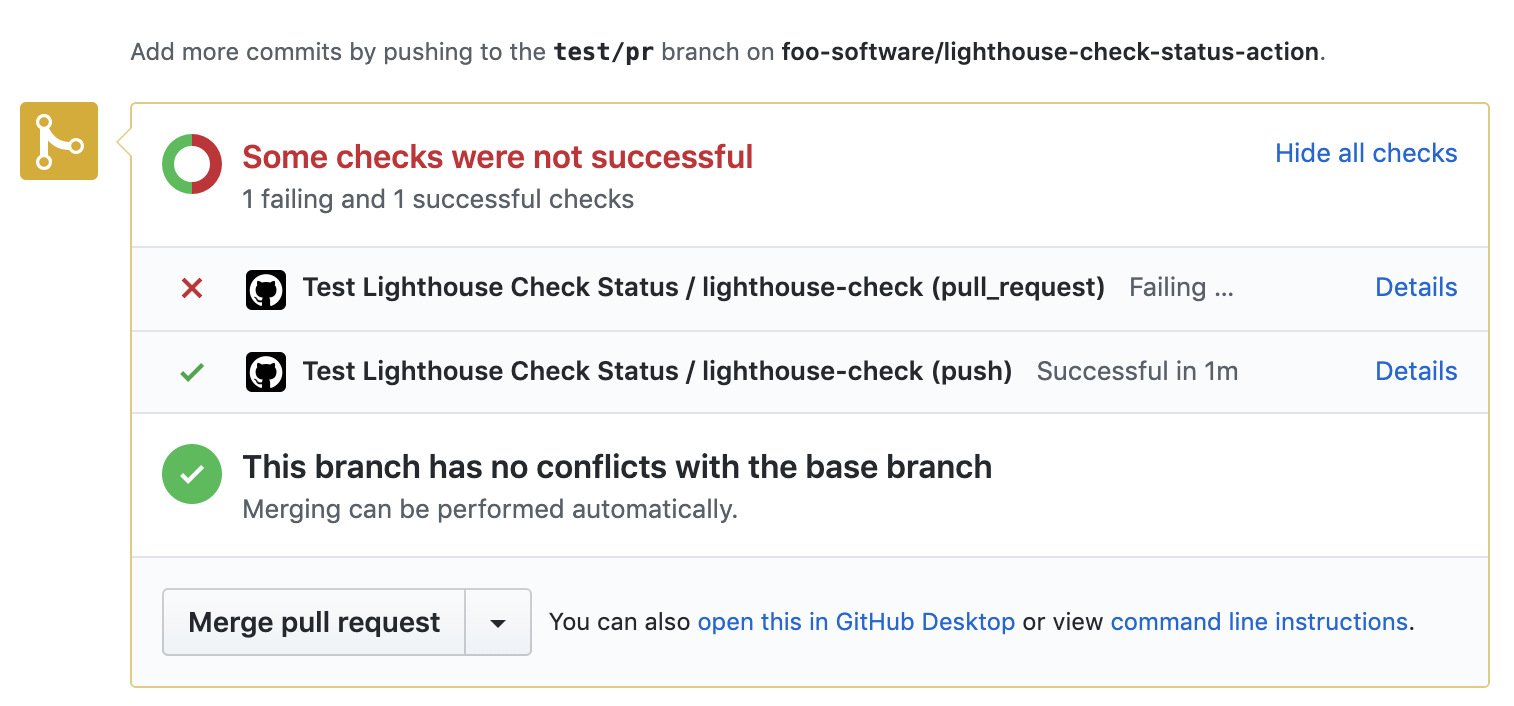

- 💗 PR comments of audit scores.

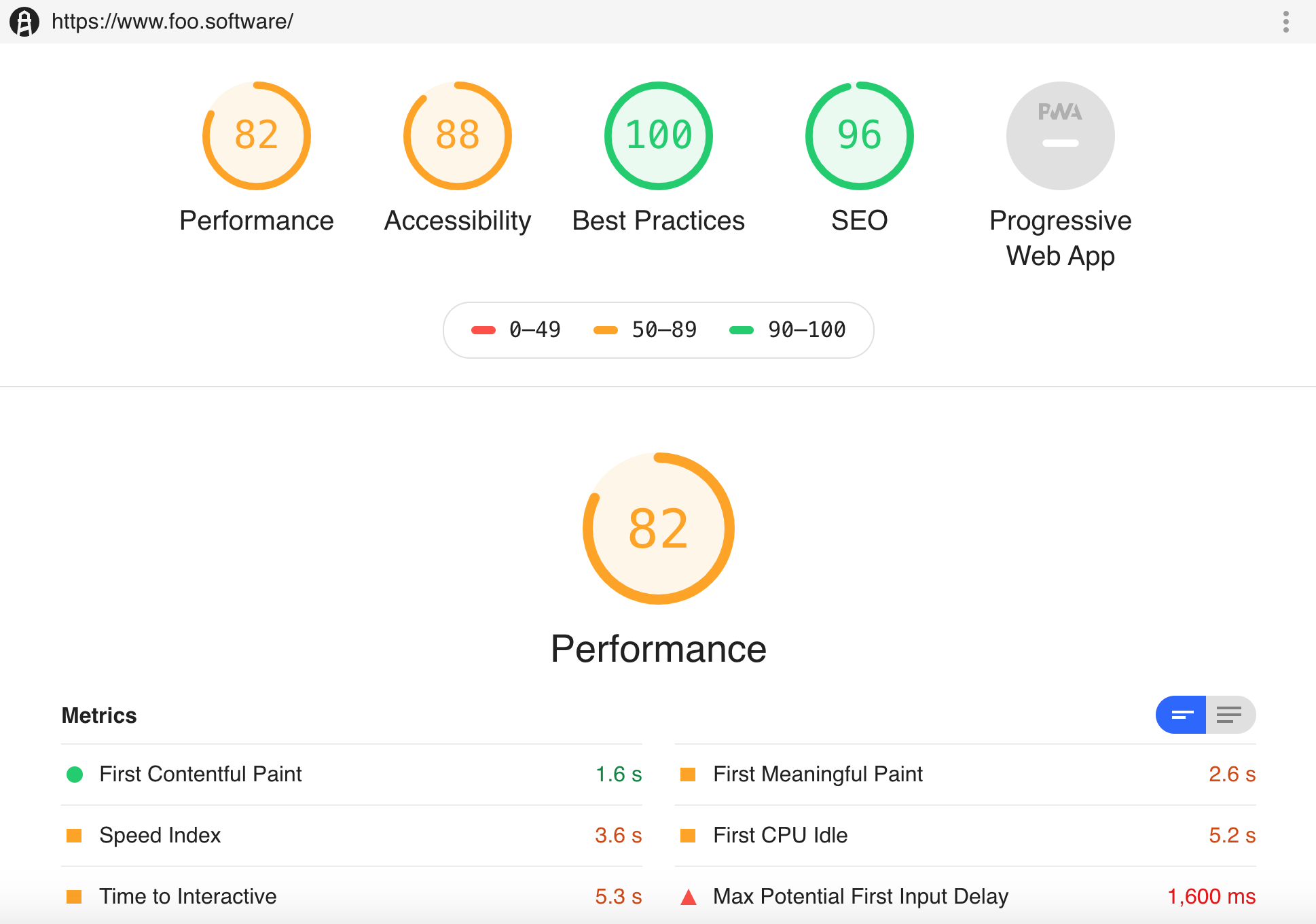

- 💗 Save HTML reports locally.

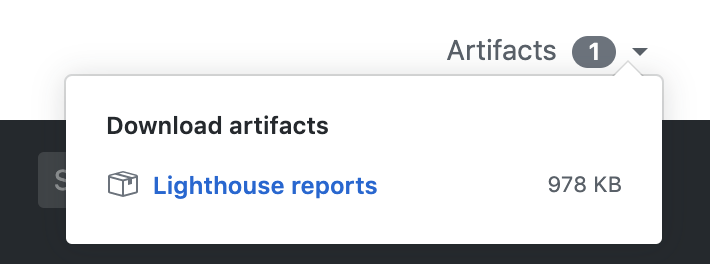

- 💚 Upload HTML reports as artifacts.

- 💙 Upload HTML reports to AWS S3.

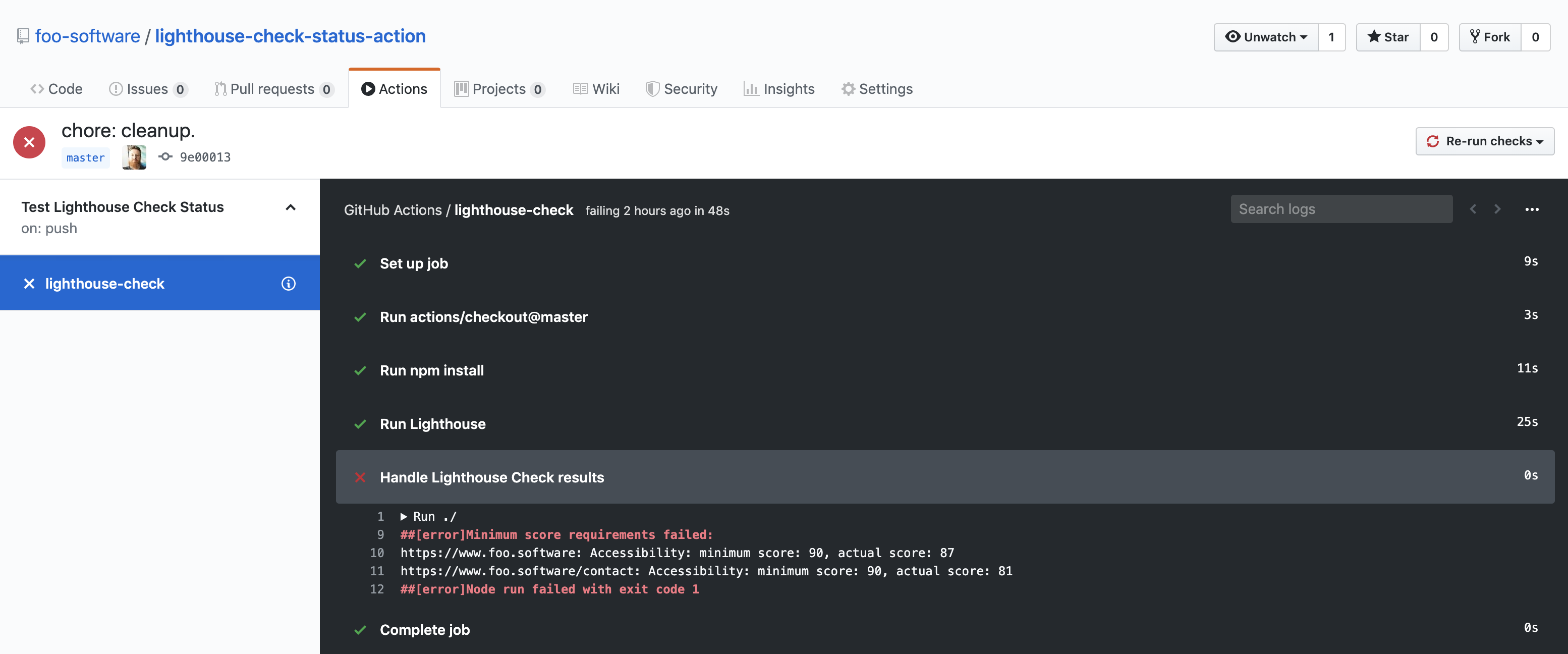

- ❤️ Fail a workflow when minimum scores aren't met. See the minimum score enforcement example below.

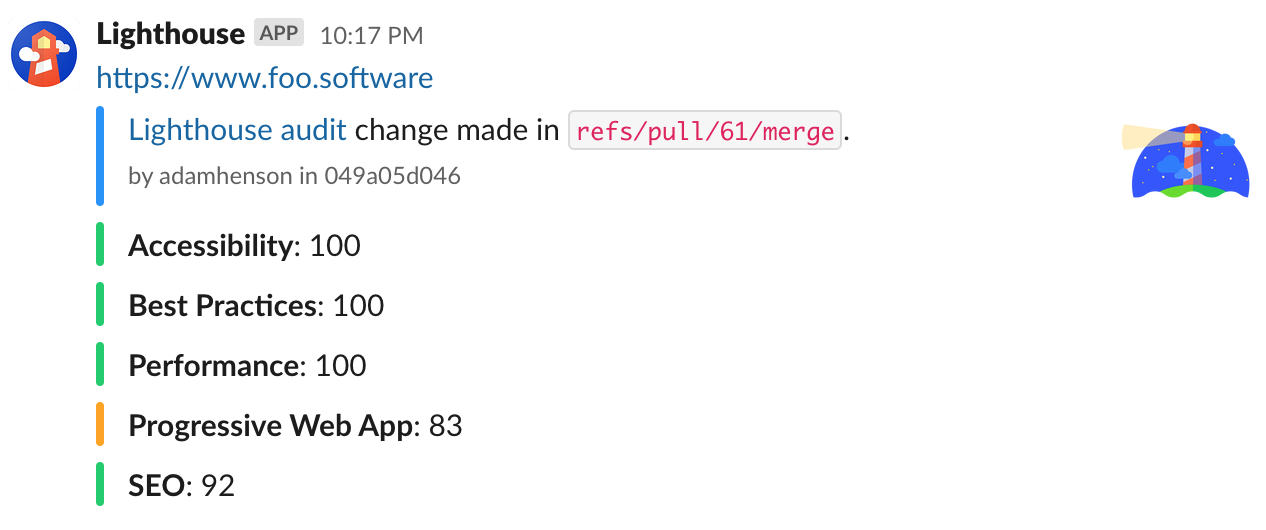

- 💜 Slack notifications.

- 💖 Slack notifications with Git info (author, branch, PR, etc.).

The screenshots above give a visual look at the things you can do.

You can choose from two ways of running audits: "locally" in a dockerized GitHub environment (the default) or remotely via the Automated Lighthouse Check API. For directions on running remotely, see the Automated Lighthouse Check API Usage section. The Run Type column in the table below indicates whether each option is available to local runs, remote runs, or both.
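As a minimal sketch of the simplest local run, the workflow below passes only the `urls` input. The workflow name, trigger, and URL are placeholders; remote runs additionally use the `apiToken` input, as shown in the API usage examples further down.

```yaml
name: Lighthouse Check

on: [push]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        with:
          # Local run (the default): only the URL(s) to audit are required.
          urls: 'https://www.foo.software'
```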

| Name | Description | Type | Run Type | Default |
|---|---|---|---|---|
| accessToken | Access token of a user, used for things like posting PR comments. | string | both | undefined |
| apiToken | The automated-lighthouse-check.com account API token found in the dashboard. | string | remote | undefined |
| author | For Slack notifications: a user handle, typically from GitHub. | string | both | undefined |
| awsAccessKeyId | The AWS accessKeyId for an S3 bucket. | string | local | undefined |
| awsBucket | The name of the AWS S3 bucket. | string | local | undefined |
| awsRegion | The AWS region for an S3 bucket. | string | local | undefined |
| awsSecretAccessKey | The AWS secretAccessKey for an S3 bucket. | string | local | undefined |
| branch | For Slack notifications: a version control branch, typically from GitHub. | string | both | undefined |
| configFile | The path to a JSON configuration file that holds the options defined here. The path is relative to the file being interpreted, which in this case will most likely be the root of the repo (`./`). | string | both | undefined |
| emulatedFormFactor | Lighthouse setting only used for local audits. See lighthouse-check comments for details. | oneOf(['mobile', 'desktop']) | local | mobile |
| extraHeaders | Stringified HTTP header object of key/value pairs to send in requests. Example: `'{ "x-hello-world": "foobar", "x-some-other-thing": "hi" }'` | string | local | undefined |
| locale | A locale for Lighthouse reports. Example: `ja` | string | local | undefined |
| outputDirectory | An absolute directory path to write reports to. You can use this as an alternative to, or in combination with, an S3 upload. | string | local | undefined |
| overridesJsonFile | A JSON file with `config` and `options` fields to override defaults. See the notes on overriding config and option defaults below. | string | local | undefined |
| prCommentEnabled | If true and accessToken is set, scores will be posted as PR comments. | boolean | both | true |
| prCommentSaveOld | If true and PR comment options are set, a new comment is posted on every change instead of updating a single comment with the most recent scores. | boolean | both | false |
| sha | For Slack notifications: a version control SHA, typically from GitHub. | string | both | undefined |
| slackWebhookUrl | A Slack Incoming Webhook URL to send notifications to. | string | both | undefined |
| tag | An optional tag or name (example: build #2 or v0.0.2). | string | remote | undefined |
| throttlingMethod | Lighthouse setting only used for local audits. See lighthouse-check comments for details. | oneOf(['simulate', 'devtools', 'provided']) | local | simulate |
| throttling | Lighthouse setting only used for local audits. See lighthouse-check comments for details. | oneOf(['mobileSlow4G', 'mobileRegular3G']) | local | mobileSlow4G |
| timeout | Minutes to timeout. If wait is true (it is by default), we wait for results. If this timeout is reached before results are received, an error is thrown. | number | local | 10 |
| urls | A comma-separated list of URLs to be audited. | string | both | undefined |
| verbose | If true, print out steps and results to the console. | boolean | both | true |
| wait | If true, waits for all audit results to be returned; otherwise URLs are only enqueued. | boolean | remote | true |
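For example, the local-only Lighthouse settings in the table above might be combined as in the step below. The specific values are illustrative picks from the allowed values listed in the table, not recommendations; note that `extraHeaders` is passed as a string containing JSON rather than as a YAML mapping.

```yaml
steps:
  - name: Run Lighthouse
    uses: foo-software/lighthouse-check-action@master
    with:
      urls: 'https://www.foo.software,https://www.foo.software/contact'
      # Local-only Lighthouse settings; values are taken from the allowed options above.
      emulatedFormFactor: desktop
      throttlingMethod: devtools
      locale: ja
      # A JSON object serialized as a string, hence the surrounding single quotes.
      extraHeaders: '{ "x-hello-world": "foobar" }'
```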

The action's lighthouseCheckResults output is an object of the shape below.

| Name | Description | Type |
|---|---|---|
| code | A code set by lighthouse-check to represent success or failure. Success will be `SUCCESS`, while errors will look something like `ERROR_${reason}`. | string |
| data | An array of results with the payload illustrated below. | array |

Each item in data is an object of the shape below. Only applicable fields are populated, depending on the inputs.

| Name | Description | Type |
|---|---|---|
| url | The corresponding URL of the Lighthouse audit. | string |
| report | An AWS S3 URL of the report if S3 inputs were specified and the upload succeeded. | string |
| scores | An object of Lighthouse scores. See details below. | object |

An object of scores. Each value is a number, and each name represents the score of the corresponding Lighthouse audit category.

| Name |
|---|
| accessibility |
| bestPractices |
| performance |
| progressiveWebApp |
| seo |
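These results can also be read in a later step of the same job. The sketch below assumes the step running this action has `id: lighthouseCheck` (as in the minimum score example) and that the `lighthouseCheckResults` output holds the object above serialized as JSON, which GitHub's `fromJSON` expression function can parse. Indexing `data[0]` assumes at least one URL was audited.

```yaml
steps:
  - name: Run Lighthouse
    uses: foo-software/lighthouse-check-action@master
    id: lighthouseCheck
    with:
      urls: 'https://www.foo.software'
  - name: Print Lighthouse Check results
    # Assumption: lighthouseCheckResults is a JSON string of the output object described above.
    run: |
      echo "Code: ${{ fromJSON(steps.lighthouseCheck.outputs.lighthouseCheckResults).code }}"
      echo "URL: ${{ fromJSON(steps.lighthouseCheck.outputs.lighthouseCheckResults).data[0].url }}"
      echo "Performance: ${{ fromJSON(steps.lighthouseCheck.outputs.lighthouseCheckResults).data[0].scores.performance }}"
```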

Below are example combinations of ways to use this GitHub Action.

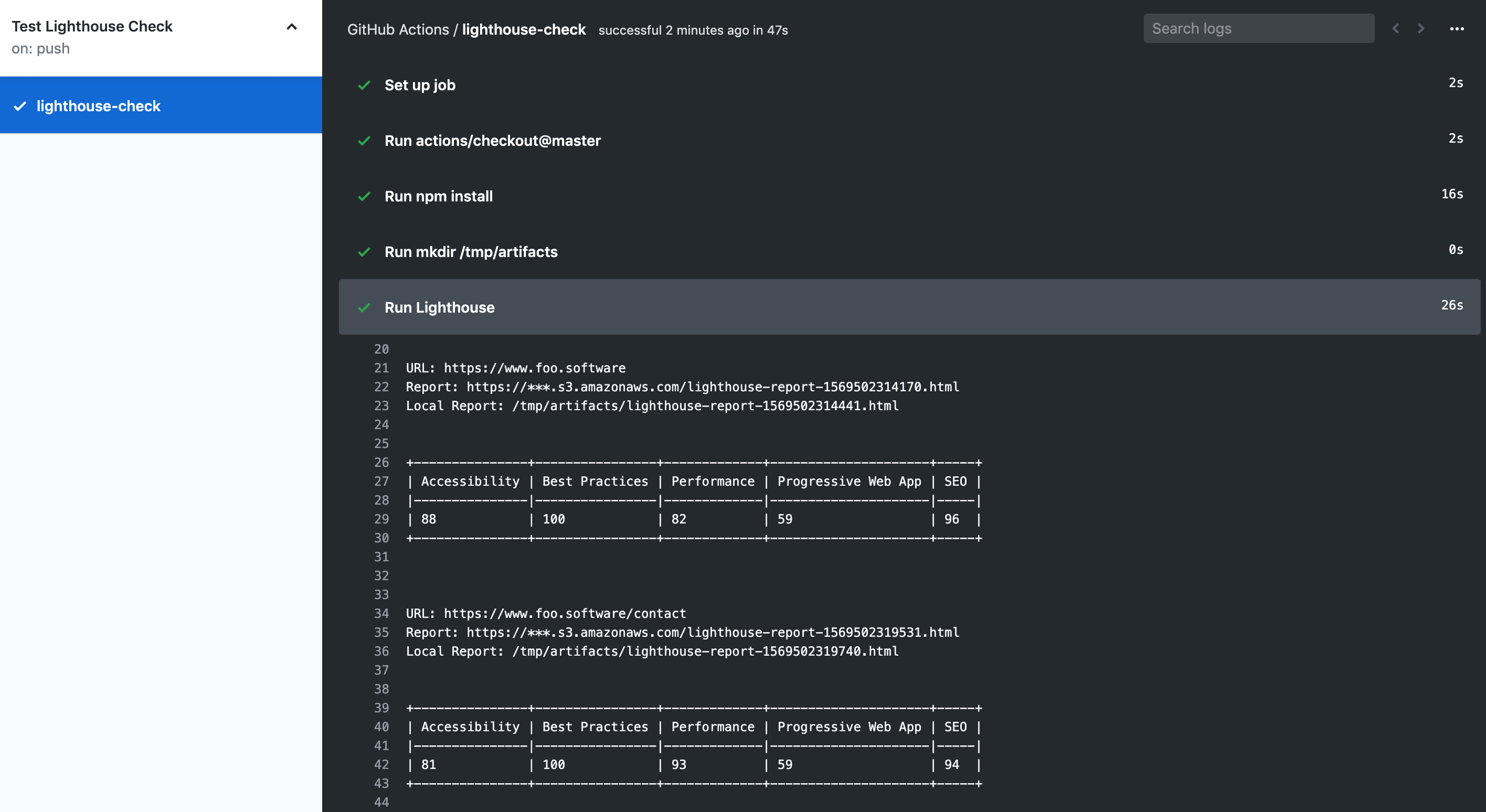

In the example below, we run Lighthouse on two URLs, log scores, save the HTML reports as artifacts, upload reports to AWS S3, and send a Slack notification with details about the change from Git data. Because we specify the pull_request trigger and accessToken, audit results are automatically posted as comments on the corresponding PR by the token's user.

```yaml
name: Test Lighthouse Check

on: [pull_request]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - run: mkdir /tmp/artifacts
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        with:
          accessToken: ${{ secrets.LIGHTHOUSE_CHECK_GITHUB_ACCESS_TOKEN }}
          author: ${{ github.actor }}
          awsAccessKeyId: ${{ secrets.LIGHTHOUSE_CHECK_AWS_ACCESS_KEY_ID }}
          awsBucket: ${{ secrets.LIGHTHOUSE_CHECK_AWS_BUCKET }}
          awsRegion: ${{ secrets.LIGHTHOUSE_CHECK_AWS_REGION }}
          awsSecretAccessKey: ${{ secrets.LIGHTHOUSE_CHECK_AWS_SECRET_ACCESS_KEY }}
          branch: ${{ github.ref }}
          outputDirectory: /tmp/artifacts
          urls: 'https://www.foo.software,https://www.foo.software/contact'
          sha: ${{ github.sha }}
          slackWebhookUrl: ${{ secrets.LIGHTHOUSE_CHECK_WEBHOOK_URL }}
      - name: Upload artifacts
        uses: actions/upload-artifact@master
        with:
          name: Lighthouse reports
          path: /tmp/artifacts
```

We can expand on the example above by optionally failing a workflow if minimum scores aren't met. We do this using foo-software/lighthouse-check-status-action.

```yaml
name: Test Lighthouse Check with Minimum Score Enforcement

on: [push]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - run: npm install
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        id: lighthouseCheck
        with:
          urls: 'https://www.foo.software,https://www.foo.software/contact'
          # ... all your other inputs
      - name: Handle Lighthouse Check results
        uses: foo-software/lighthouse-check-status-action@master
        with:
          lighthouseCheckResults: ${{ steps.lighthouseCheck.outputs.lighthouseCheckResults }}
          minAccessibilityScore: "90"
          minBestPracticesScore: "50"
          minPerformanceScore: "50"
          minProgressiveWebAppScore: "50"
          minSeoScore: "50"
```

Automated Lighthouse Check can monitor your website's quality by running audits automatically! It provides a historical record of audits over time so you can track improvements and regressions in website quality. Create a free account to get started. With an account, you get both the automatic audits and any audits you trigger yourself. Below are the steps to trigger audits on URLs you've created in your account.

- Navigate to your account details, click into "Account Management" and make note of the "API Token".
- Use the account token as the `apiToken` input.

Basic example

```yaml
name: Lighthouse Check

on: [push]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - run: npm install
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        id: lighthouseCheck
        with:
          apiToken: 'myaccountapitoken'
          # ... all your other inputs
```

To trigger audits on only certain pages in an account, follow these steps instead:

- Navigate to your account details, click into "Account Management" and make note of the "API Token".
- Navigate to your dashboard and, once you've created URLs to monitor, click the "More" link of the URL you'd like to use. From the URL details screen, click the "Edit" link at the top of the page. You should see an "API Token" on this page. It is the token for this specific page (not to be confused with the account API token).
- Use the account token as the `apiToken` input and the page token (or a comma-separated group of page tokens) as the `urls` input.

Basic example

```yaml
name: Lighthouse Check

on: [push]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - run: npm install
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        id: lighthouseCheck
        with:
          apiToken: 'myaccountapitoken'
          urls: 'mypagetoken1,mypagetoken2'
          # ... all your other inputs
```

You can combine usage with other options for a more advanced setup. Example below.

Runs audits remotely and posts results as comments in a PR

```yaml
name: Lighthouse Check

on: [pull_request]

jobs:
  lighthouse-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - run: npm install
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        id: lighthouseCheck
        with:
          accessToken: ${{ secrets.LIGHTHOUSE_CHECK_GITHUB_ACCESS_TOKEN }}
          apiToken: 'myaccountapitoken'
          urls: 'mypagetoken1,mypagetoken2'
          # ... all your other inputs
```

Runs audits on a ZEIT Now ephemeral instance, posts results as comments in a PR and saves results on Automated Lighthouse Check

This example triggers on pushes to master and on pull requests whose base branch is master. The urls input works as described above for triggering audits on only certain pages in an account, but each entry can be extended with two colons to pair a page token with a custom URL (which can be different from the one specified in the account): `{page token}::{custom url}`.

```yaml
name: Lighthouse

on:
  push:
    branches:
      - master
  pull_request:
    branches:
      - master

jobs:
  lighthouse:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@master
      - uses: amondnet/now-deployment@master
        id: now
        with:
          zeit-token: ${{ secrets.ZEIT_NOW_TOKEN }}
          now-org-id: ${{ secrets.ZEIT_NOW_ORG_ID }}
          now-project-id: ${{ secrets.ZEIT_NOW_PROJECT_ID }}
      - name: Run Lighthouse
        uses: foo-software/lighthouse-check-action@master
        with:
          accessToken: ${{ secrets.LIGHTHOUSE_CHECK_GITHUB_ACCESS_TOKEN }}
          apiToken: ${{ secrets.LIGHTHOUSE_CHECK_API_TOKEN }}
          tag: GitHub Action
          urls: ${{ secrets.LIGHTHOUSE_CHECK_URL_TOKEN }}::${{ steps.now.outputs.preview-url }}
```

Note: overriding config and option defaults is only available when running "locally" (not when using the REST API).

You can override the default config and options by specifying the overridesJsonFile option, which is consumed by `path.resolve(overridesJsonFile)`. The overrides JSON file can contain two fields: `options` and `config`. Lighthouse eventually uses these fields to populate its `opts` and `config` arguments, respectively, as illustrated in Using programmatically. Both objects are merged shallowly with the default config and options.

Example content of `overridesJsonFile`:

```json
{
  "config": {
    "settings": {
      "onlyCategories": ["performance"]
    }
  },
  "options": {
    "disableStorageReset": true
  }
}
```
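To use such a file in a workflow, one approach is to check the repository out first so the file exists, then pass its path through `overridesJsonFile`. The file name `lighthouse-overrides.json` is hypothetical, and this sketch assumes the relative path resolves against the checked-out repository root (the action's working directory).

```yaml
steps:
  - uses: actions/checkout@master
  - name: Run Lighthouse
    uses: foo-software/lighthouse-check-action@master
    with:
      urls: 'https://www.foo.software'
      # Hypothetical file holding the "config" and "options" overrides shown above.
      overridesJsonFile: ./lighthouse-overrides.json
```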

This package was brought to you by Foo - a website performance monitoring tool. Create a free account with standard performance testing. Automatic website performance testing, uptime checks, charts showing performance metrics by day, month, and year. Foo also provides real time notifications. Users can integrate email, Slack and PagerDuty notifications.