-

Notifications

You must be signed in to change notification settings - Fork 4.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

grpc: reduce allocations on Codec.{Unm,M}arshal codepaths #1478

Conversation

|

With The misuse of proto.Buffer is indicative here: Where |

|

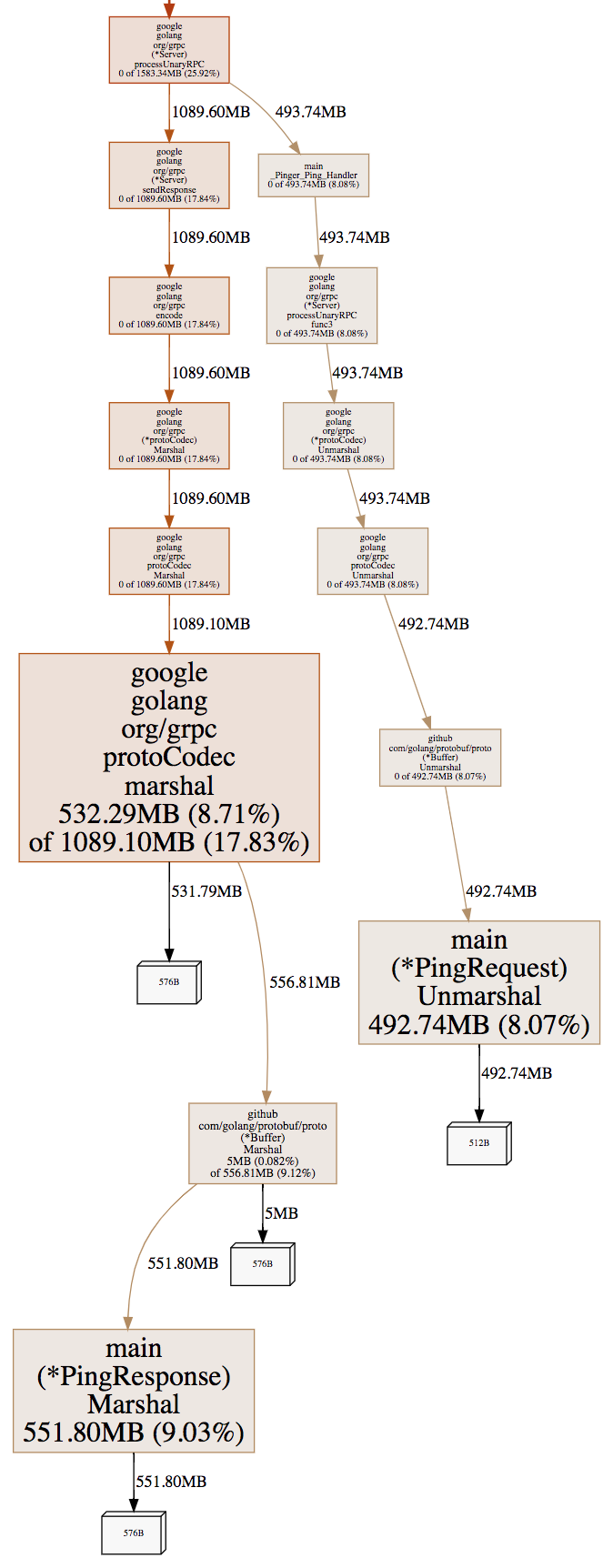

This is the svg view of the same showing the dual allocation above. |

|

What benchmark are these measurements from? Id' note the goal of the change that started using cached Measuring total allocations by the benchmark in https://performance-dot-grpc-testing.appspot.com/explore?dashboard=5636470266134528&widget=952298825&container=846542667 (using small messages), over a roughly 30 second perioud, showed about 16GB of total allocations on the server, with a little more than 8GB due to allocation of One thing I'm wondering is if the protos generated from an updated generator would have an effect here though. |

Included above but it's running the simplest ping server https://github.com/irfansharif/pinger for a 30s duration on my local macbook-pro. Note for this run |

Lower GC pressure for whenever temporary Buffer types are allocated. Evidently it's an issue grpc/grpc-go ran into in the past. +cc grpc/grpc-go#1478. Additionally remove TODOs left regarding exactly this.

Lower GC pressure for whenever temporary Buffer types are allocated, evidently it's an issue grpc/grpc-go ran into in the past. Additionally remove the TODOs left regarding exactly this. +cc grpc/grpc-go#1478.

|

@apolcyn: I wrote up golang/protobuf#418, which I think is a more appropriate fix for the issue you mentioned above ( EDIT: gah, I only just realized that generated proto messages don't always have |

Yes those are ran about daily. Running manually is slightly involved but there are some docs on it in https://github.com/grpc/grpc/blob/master/tools/run_tests/performance/README.md.

I see, I think that change in protobuf can make sense. Also yes that is what I meant to say - current |

|

golang/protobuf#418 was unfortunately rejected due to what seems to be them exporting their internal version and wanting to avoid merge conflicts. This PR still benefits the group of users providing In any case it's worth noting that with the current state of things across here and golang/protobuf, for every marshaled byte through grpc we allocate twice as much as necessary (within these codepaths) and explicitly copy once more than necessary (within these codepaths). |

|

@irfansharif Thanks for reading through the code, understanding it and finding optimization hot-spots. However, we do wish you had talked to us a little, before writing out all the code that you have in all your 3 PRs. We're working on optimization quite actively and are aware of these optimizations. Some of your changes albeit good, introduce issues. For instance, in your buffer re-use PR you're making a copy of data to add it to headers. Also, some of these changes will be rendered obsolete once we're done with the code restructuring that's underway currently. |

|

@MakMukhi: it's no issue haha, like I mentioned in #1482 I was just chomping through some of our grpc bottlenecks within cockroachdb/cockroach and these seemed like easy enough pickings to upstream. Nothing ventured, nothing gained!

I'm glad to hear, I should have consulted earlier but wanted to see how far I could get over the weekend. I have a host of other PRs on a similar vein that I'll hold off on in the interim.

Are plans for this publicly visible? I did look for indication of this (mailing lists and here) but did not come across any. In all cases if there's anything here I can help with, do let me know. Looking to upstream as many of our results as possible. Feel free to close this PR if needed. |

The previous use of proto.Buffer was slightly incorrect. proto.Buffer is

only useful when {un,}marshalling proto messages into and from the same

buffer. The current grpc.Codec interface does not have enough

specificity to make good use of this pattern.

In the previous usage we retrieved a cachedProtoBuffer/proto.Buffer from

a sync.Pool pool for every {Unm,M}arshal call, initialized/allocated/set

a completely new buffer and {un,}marshalled into and from it. There was

effectively no re-use of an allocated buffer across invocations of

{Unm,M}arshal. Moreover by simply having used proto.Buffer as an

intermediary between all proto.{Unm,M}arshal calls, we ended up

effectively allocating 2x bytes when only 1x was sufficient. This is due

to the internal implementation details of proto.Buffer where for every

proto.(Marshaler).Marshal() call that returned a byte slice,

proto.Buffer copies it over into it's internal buffer (which we

explicitly initialize/set ourselves).

For types that satisfy the {Unm,M}arshaler interface however we can

simply fall back to {Unm,M}arshaling it directly, without needing to go

through a proto.Buffer. This is what the default proto.{Unm,M}arshal

does with:

// Marshal takes the protocol buffer

// and encodes it into the wire format, returning the data.

func Marshal(pb Message) ([]byte, error) {

// Can the object marshal itself?

if m, ok := pb.(Marshaler); ok {

return m.Marshal()

}

p := NewBuffer(nil)

err := p.Marshal(pb)

// ...

Falling back to the proto.Buffer for when {Unm,M}arshaler is not

satisfied. The "right" fix for our use of sync.Pool for proto.Buffers at

this level should probably exist in golang/protobuf instead. This patch

wasn't accepted upstream in light of a reworked internal version they're

looking to export.

9f22559 to

aaffef0

Compare

|

@MakMukhi, @apolcyn: PTAL here, reworked it in light of golang/protobuf#418 not getting in. We should should have the best of both worlds, no extra proto.Buffer allocs and no extra copying for users providing {Unm,M}arshal helpers. Commit message updated accordingly. |

|

@irfansharif This PR looks good. Can you run our benchmarks on this to see that it doesn't have any detrimental effects on the normal case(proto object doesn't implement Marshal or Unmarshal). |

|

Ping. |

|

Thank you for your pull request. Before we can look at your contribution, we need to ensure all contributors are covered by a Contributor License Agreement. After the following items are addressed, please respond with a new comment here, and the automated system will re-verify.

Regards, |

The previous use of

proto.Bufferwas slightly incorrect.proto.Bufferisonly useful when {un,}marshalling proto messages into and from the same

buffer. The current

grpc.Codecinterface does not have enoughspecificity to make good use of this pattern.

In the previous usage we retrieved a

cachedProtoBuffer/proto.Bufferfroma

sync.Poolpool for every{Unm,M}arshalcall, initialized/allocated/seta completely new buffer and {un,}marshalled into and from it. There was

effectively no re-use of an allocated buffer across invocations of

{Unm,M}arshal. Moreover by simply having usedproto.Bufferas anintermediary between all

proto.{Unm,M}arshalcalls, we ended upeffectively allocating 2x bytes when only 1x was sufficient. This is due

to the internal implementation details of

proto.Bufferwhere for everyproto.(Marshaler).Marshal()call that returned a byte slice,proto.Buffercopies it over into it's internal buffer (which weexplicitly initialize/set ourselves). This is shown below and not included in

the commit message.

For types that satisfy the {Unm,M}arshaler interface however we can

simply fall back to {Unm,M}arshaling it directly, without needing to go

through a proto.Buffer. This is what the default proto.{Unm,M}arshal

does with:

Falling back to the proto.Buffer for when {Unm,M}arshaler is not

satisfied. The "right" fix for our use of sync.Pool for proto.Buffers at

this level should probably exist in golang/protobuf instead. This patch

wasn't accepted upstream in light of a reworked internal version they're

looking to export.

+cc @bdarnell / @tamird