
x/build: frequent timeouts running js-wasm TryBots #35170


One change was that the builder was recently bumped to Node 13.

I think that's unrelated. When I've seen this, the error has been the one Bryan quoted above: a timeout on the gRPC call to maintner that asks whether we should even do this build. We used to need to skip js/wasm builds if the git history of the rev being built didn't include js/wasm support. But js/wasm has now been supported for a number of releases (since Go 1.11), and we no longer support Go 1.10 (or even Go 1.11), so we can just remove that condition from that builder. Separately, maintner shouldn't be so slow at that query.


Change https://golang.org/cl/203757 mentions this issue:

…js/wasm

It's been supported for ages. We no longer support or build branches that didn't have js/wasm support.

See golang/go#35170 (comment)

Updates golang/go#35170 (still need a maintner fix, ideally)

Change-Id: If5489dd266dea9a3a48bbc29f83528c0f939d23b
Reviewed-on: https://go-review.googlesource.com/c/build/+/203757
Reviewed-by: Bryan C. Mills <[email protected]>

Not very scientific, but the has-ancestor RPCs are taking only 100ms for me (including TCP+TLS setup, etc.), nowhere near 30 seconds.


@dmitshur Would you be able to check whether this is still happening?

@dmitshur Do you know if this is still an issue?

I feel like I've seen some discussion quite recently about this still happening occasionally, more so than the comments in this issue would suggest. Perhaps it was in another thread. I'll keep an eye out for this.

Yes, it is still happening. Last week I caught the js-wasm TryBot taking 25 minutes when most were done in 15. Any advice on what to do when js-wasm looks stuck or very slow, and on how to debug further, would be greatly appreciated.

I suggest trying to determine whether there is a specific place where the build progresses more slowly than elsewhere. For example: is the time to the first test delayed, with everything fast afterwards? Is one package's tests very slow while the rest are fast? Or is the slowness spread evenly across all packages? I'll also try to look into the logs for the two occurrences you've shared and see if I can determine it from those.
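One way to answer the "is one package slow, or is the slowness spread evenly?" question is to pull the per-package summary lines out of a test log and sort them. The sketch below assumes the log contains standard `go test` summary lines of the form `ok  \t<pkg>\t<duration>` (or `FAIL\t<pkg>\t<duration>`); `slowestPackages` is a hypothetical helper, not part of x/build. It does not measure time to first test, which would need timestamps that the summary lines don't carry.

```go
package main

import (
	"bufio"
	"fmt"
	"sort"
	"strings"
	"time"
)

// pkgTime is one package's test duration as reported in a summary line.
type pkgTime struct {
	Pkg string
	Dur time.Duration
}

// slowestPackages scans test output for per-package summary lines such as
// "ok  \tarchive/tar\t1.234s" and returns them sorted slowest first.
// Lines it does not recognize, including "(cached)" results, are skipped.
func slowestPackages(log string) []pkgTime {
	var out []pkgTime
	sc := bufio.NewScanner(strings.NewReader(log))
	for sc.Scan() {
		f := strings.Fields(sc.Text())
		if len(f) < 3 || (f[0] != "ok" && f[0] != "FAIL") {
			continue
		}
		d, err := time.ParseDuration(f[2])
		if err != nil {
			continue // e.g. "(cached)"
		}
		out = append(out, pkgTime{Pkg: f[1], Dur: d})
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Dur > out[j].Dur })
	return out
}

func main() {
	log := "ok  \tarchive/tar\t1.5s\nok  \tnet/http\t42.0s\nFAIL\tos\t3.2s\n"
	for _, p := range slowestPackages(log) {
		fmt.Printf("%-12s %v\n", p.Pkg, p.Dur)
	}
}
```

If one package dominates the top of the list, the slowness is localized; if durations are uniformly inflated compared with a healthy run, it points to something affecting the whole builder.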


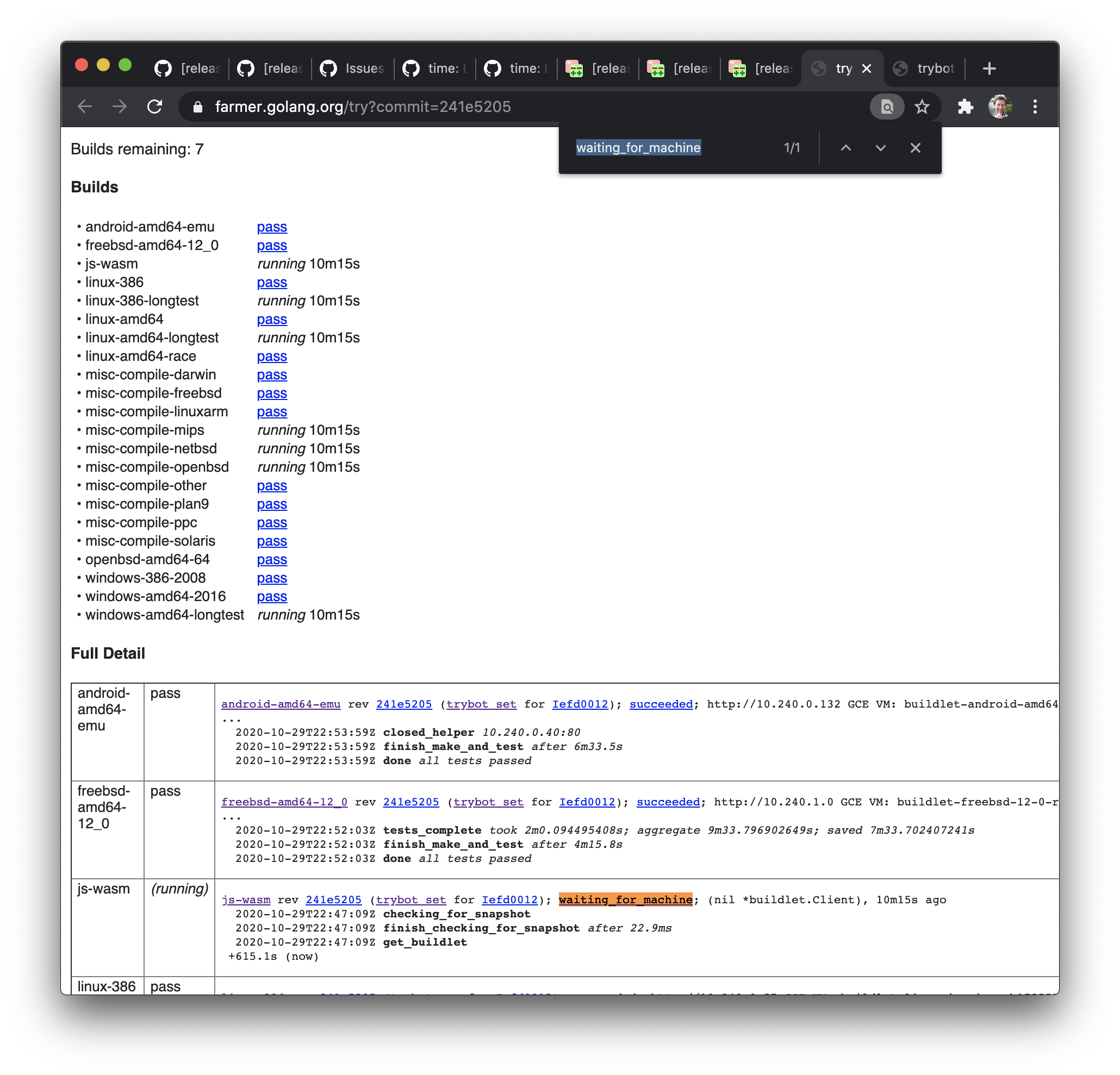

From CL 266374, where I recently started TryBots: in this instance, the js-wasm builder was in the waiting_for_machine state for over 10 minutes. That is very unexpected: it's a GCP builder that we should be able to spin up without running out of physical machines. Perhaps we're running out of some quota. I'm not sure why only js-wasm is affected and not other GCP builders, but this is a lead. Edit: Here's the scheduler state at the time:

In several occurrences that I saw, the … If we don't have the bandwidth to diagnose this at the moment, perhaps we should temporarily demote …

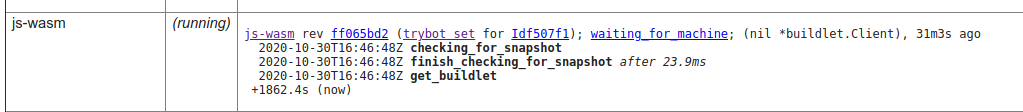

I'm also experiencing js-wasm slowdowns in my TryBot runs. Here is an example: it seems as though it is not getting past "get buildlet". [Edit: it did finally make progress after a 40-minute wait.]

I don't know much about farmer, but on https://farmer.golang.org/#sched it seems to me as if multiple TryBot runs are waiting on the js-wasm tests, yet I can see only very few buildlets with the prefix …

It seems so. I don't yet have an idea of what it might be. It may be related to #42285. I'm going to look more later today.

I extracted some raw data from some Wasm TryBot runs, which I was going to analyze further and post here, but that work got pre-empted by other work, and by now I've lost it to a forced browser update. From memory, the high-level summary was that the Wasm TryBot runs are generally quick, with two components that contribute a fairly significant proportion of the overall time:

There's a commonly used dist-test adjust policy …

However, it seems the root problem here was with scheduling the Wasm builders and starting the TryBot runs, not with the length of TryBot test execution itself in a typical "happy" run.

There are many possible places to look next. As @bcmills also pointed out in #42699, stalls sometimes result in extended test executions that eventually … instead of what should be failures. Stalls could be due to something Wasm-specific (e.g., #31282) or something more general. We also have some possible blind spots on the coordinator side (e.g., #39349, #39665). As I recently experienced during #42379 (comment), stalls can happen when a test execution is sharded and not happen during a sequential all.bash test run.

All this is to say that more work is needed to find and resolve the root issue(s) here. I think we have confirmation that this problem is intermittent, so skipping a deterministically slow test is not an option.

Change https://golang.org/cl/347295 mentions this issue:

The reboot test verifies that the modified version of Go can be used for bootstrapping purposes. The js-wasm builder is configured with GOHOSTOS=linux and GOHOSTARCH=amd64 so that it builds packages and commands for the host, linux/amd64, which is used during bootstrapping in the reboot test. Since this doesn't provide additional coverage beyond what we get from linux-amd64's execution of the reboot test, disable this test on the js-wasm builder.

Also remove some accidental duplicate code.

For golang/go#35170.

Change-Id: Ic770d04f6f5adee7cb836b1a8440dc818fdc5e5b
Reviewed-on: https://go-review.googlesource.com/c/build/+/347295
Trust: Dmitri Shuralyov <[email protected]>
Run-TryBot: Dmitri Shuralyov <[email protected]>
Reviewed-by: Bryan C. Mills <[email protected]>
Reviewed-by: Alexander Rakoczy <[email protected]>
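The per-builder exclusion described in this commit can be modeled as a lookup table consulted before each dist test runs. This is an illustrative sketch only: `skipTests` and `shouldRunTest` are hypothetical names and do not reflect how x/build's builder configuration actually expresses test policies.

```go
package main

import "fmt"

// skipTests is a hypothetical per-builder exclusion table; it is not the
// real x/build dashboard configuration. js-wasm skips "reboot" because its
// host toolchain is linux/amd64, which the linux-amd64 builder already
// exercises with the same test.
var skipTests = map[string]map[string]bool{
	"js-wasm": {"reboot": true},
}

// shouldRunTest reports whether builder should run the named dist test.
// Indexing a missing (nil) inner map yields false, so builders with no
// entry run every test.
func shouldRunTest(builder, test string) bool {
	return !skipTests[builder][test]
}

func main() {
	fmt.Println(shouldRunTest("js-wasm", "reboot"))
	fmt.Println(shouldRunTest("linux-amd64", "reboot"))
}
```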


Is anyone still observing this issue?

A newer idea is that it might have been hitting the GCE VM quota. Some of those quotas are visible in the first line of https://farmer.golang.org/#pools. If frequent timeouts are no longer affecting this builder, perhaps we should close this.
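To illustrate why a shared quota could leave a builder in waiting_for_machine even when its machine type is otherwise plentiful, here is a toy counting-semaphore model. It assumes a single quota shared by all VM creations; `vmQuota`, `newVMQuota`, `tryCreate`, and `release` are hypothetical and are not the coordinator's pool implementation.

```go
package main

import "fmt"

// vmQuota is a hypothetical model of a VM quota: at most cap(slots) VMs
// may exist at once across all builders sharing it.
type vmQuota struct{ slots chan struct{} }

func newVMQuota(n int) *vmQuota { return &vmQuota{slots: make(chan struct{}, n)} }

// tryCreate reserves a slot if one is free. A false result models a
// request that would sit waiting for a machine until release is called.
func (q *vmQuota) tryCreate() bool {
	select {
	case q.slots <- struct{}{}:
		return true
	default:
		return false
	}
}

// release frees a slot when a VM is destroyed.
func (q *vmQuota) release() { <-q.slots }

func main() {
	q := newVMQuota(2)
	// Two VMs (say, from other builders) use up the quota; a third must wait.
	fmt.Println(q.tryCreate(), q.tryCreate(), q.tryCreate())
	q.release()
	fmt.Println(q.tryCreate())
}
```

In this model nothing is wrong with the requesting builder itself; it simply can't get a slot until someone else releases one, which from the scheduler's point of view looks like a long wait for a machine.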


I haven't seen any of these recently, but I also haven't been looking for them. I suspect that the “failure being buried by retry logic” that I mentioned in #35170 (comment) may have been the one you fixed in CL 407555. Since we can identify a specific change that plausibly could have fixed this issue, I'd be ok with closing it until / unless we observe it again. |

I've noticed that the js-wasm TryBots on my recent CLs seem to start with one or more spurious failures before finally running successfully. I suspect that this increases load on the TryBots generally, and may increase overall TryBot latency in some circumstances.

A log from a recent example is here (https://farmer.golang.org/temporarylogs?name=js-wasm&rev=9516a47489b92b64e9cf5633e3ab7e37def3353f&st=0xc00ab39b80):

CC @dmitshur @toothrot @bradfitz
