-

Notifications

You must be signed in to change notification settings - Fork 4.9k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

admin: Streaming /stats implementation #19693

Conversation

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

|

Drive-by comment to start: I think it's important that by default, all the stats are sampled as close together in time as possible. If the stats are sampled over a long period of time (if there's a slow client reading them), it's hard to reason about the relative values. From a quick glance through this code, I think it would still work fine if by default it uses a ChunkSize of infinity (or UINT64_MAX). |

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

|

Thanks @ggreenway that's a good point. But sampling all the stats quickly requires storing them in a large map, which requires visiting all of them, which with a large # of clusters can take a pretty significant chunk of CPU time wall-clock time, and memory. E.g. with 10k clusters it takes 2 seconds to wget the /stats endpoint without this change, and about 0.5 seconds with it. So you might not be getting that good a correlation even now, and you are also potentially impacting other uses of the main thread. I also tried 100k clusters and with this PR it's 15 seconds and without it it's about 3.5 minutes. It gets non-linear because currently we have to do a flat sort across the entire set of stats. Moreover there's a memory burst measured in gigabytes, whereas when we chunk we get to bound the memory we use. I'll try to capture that data also. I'll see if I can get some timing though for how long it takes to sample all the stats today. It's not optimal even now as we store the stats in a std::map<string,int> which means we are paying for keeping it sorted while sampling. We could sample into a hash_map<CounterSharedPtr,int> which would collect faster. Then we could sort and stream the data out. WDYT of having Is skew between different stats you collect an issue for you right now? Also would a more sophisticated filter mechanism help, beyond a simple regex? If you could express (say) 1000 stat of interest those would all come out in one chunk anyway. |

|

@ggreenway I measured the time it takes to run block of code where we sample the values with 10k clusters at HEAD -- it takes 2 seconds which is 4x as long as the new system takes to do the entire request. So I think the proposed changes here improve skew, unless there is a slow client, relative to the current system. However I think skew could be improved in the current system by capturing the values in an array first and then sorting it. One possible direction is to have a query-param to disable chunked encoding so a user can minimize skew at the cost of memory, and we can make the default chunk size pretty high so we will get minimal skew within each chunk, and typical servers will only get one chunk. WDYT? |

Signed-off-by: Joshua Marantz <[email protected]>

|

Capturing in an array and then sorting makes a lot of sense; latching all the values as quickly as possible results in better stats consistency. I think it's undesirable for the consistency of the stats to change depending on admin-client's network conditions, even if it's faster when on a fast network. Possible example (although I'm not sure if this works as I think it might): |

|

Greg: WDYT of initially going forward with this approach, which improves skew relative to the current code because:

As a follow-up, if we think the skew needs to be improved further at the expense of peak memory and overall speed, we can go back to collecting a huge array, maybe with a query-param. |

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

This reverts commit 0613f53. Signed-off-by: Joshua Marantz <[email protected]>

|

With the current state of this PR, no flow-control happens, so I'm not sure where that 100M gets buffered, but there is nothing in the current code to block it from capturing all the data and streaming it. It all completes in the (ugly) loop I added to AdminFilter::onComplete().. The thing that's ugly about it (and what IMO prevents this PR from being reviewable) is that it keeps calling the handler callback until it returns something other than HTTP 100. The 100 never makes it to the wire. This should instead be a handler class with a constructor that fills the response-headers, and nextChunk() API that returns true/false, rather than the 100-hack. I think from a memory usage perspective this should ultimately allow flow-controlled chunks but that could be a separate PR with its own debate, and maybe a flag, as you'd trade off skew vs memory consumption. |

Signed-off-by: Joshua Marantz <[email protected]>

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks great, aside from the (probably accidental?) bazel change.

/wait

.bazelversion

Outdated

| @@ -1 +1 @@ | |||

| 5.0.0 | |||

| 5.1.0 | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This shouldn't be part of this PR

Signed-off-by: Joshua Marantz <[email protected]>

Signed-off-by: Joshua Marantz <[email protected]>

removed the accidental modification to the bazel version file.

|

/retest |

|

Retrying Azure Pipelines: |

Creates a JSON sanitizer that delegates to the nlohmann library when there's any escapes required, or characters with the 8th bit set, but otherwise just returns its input. This PR supersedes #20428 which has a faster hand-rolled sanitizer, but the speed penalty for nlohmann is not too bad, compared with the protobuf sanitizer, and will generally be rarely needed anyway. -------------------------------------------------------------------- Benchmark Time CPU Iterations -------------------------------------------------------------------- BM_ProtoEncoderNoEscape 1445 ns 1444 ns 455727 BM_NlohmannNoEscape 9.79 ns 9.79 ns 71449511 BM_ProtoEncoderWithEscape 1521 ns 1521 ns 462697 BM_NlohmannWithEscape 215 ns 215 ns 3264218 Additional Description: This is intended to be adapted into the JSON renderer introduced in #19693 Risk Level: low -- this is layered on top of an already well-trusted library. Testing: //test/... Docs Changes: n/a Release Notes: n/a Platform Specific Features: n/a Signed-off-by: Joshua Marantz <[email protected]>

…yproxy#20170) * test: adding a multi-envoy test (envoyproxy#20016) Functionally this handles the multi-envoy signal handler crash skips instantiating a runtime singleton (off by default, must stay off until remove global runtime: rebase runtime features on ABSL_flags envoyproxy#19847 is closed) Multi-envoy does not correctly support runtime flags or deprecation stats due to envoyproxy#19847 being incomplete. It can still handle proxy traffic client - L1 - L2 - upstream as shown in test. Risk Level: low Testing: yes Docs Changes: n/a Release Notes: n/a Part of envoyproxy/envoy-mobile#2003 Signed-off-by: Alyssa Wilk <[email protected]> Signed-off-by: Rex Chang <[email protected]> * Add a congestionWindowInBytes method to Envoy::Network::Connection (envoyproxy#20105) Signed-off-by: Bin Wu <[email protected]> Signed-off-by: Rex Chang <[email protected]> * Update QUICHE from 50f15e7a5 to cf1588207 (envoyproxy#20154) https://github.com/google/quiche/compare/50f15e7a5..cf1588207 $ git log 50f15e7a5..cf1588207 --date=short --no-merges --format="%ad %al %s" 2022-02-28 wub Deprecate --gfe2_reloadable_flag_quic_crypto_noop_if_disconnected_after_process_chlo. 2022-02-27 vasilvv Remove QuicheMemSlice(QuicUniqueBufferPtr, size_t) constructor. 2022-02-26 fayang Use std::string instead of absl::string_view in CryptoBufferMap. 2022-02-25 bnc Ignore incoming HTTP/3 MAX_PUSH_ID frames. 2022-02-25 bnc Remove Http3DebugVisitor::OnMaxPushIdFrameSent(). 2022-02-25 bnc Remove QuicSpdySession::CanCreatePushStreamWithId(). 2022-02-25 fayang Deprecate gfe2_reloadable_flag_quic_single_ack_in_packet2. Signed-off-by: Alyssa Wilk <[email protected]> Signed-off-by: Rex Chang <[email protected]> * build(deps): bump actions/stale from 4.1.0 to 5 (envoyproxy#20159) Bumps [actions/stale](https://github.com/actions/stale) from 4.1.0 to 5. - [Release notes](https://github.com/actions/stale/releases) - [Changelog](https://github.com/actions/stale/blob/main/CHANGELOG.md) - [Commits](actions/stale@v4.1.0...v5) --- updated-dependencies: - dependency-name: actions/stale dependency-type: direct:production update-type: version-update:semver-major ... Signed-off-by: dependabot[bot] <[email protected]> Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com> Signed-off-by: Rex Chang <[email protected]> * admin: improve test coverage and increase the coverage-percent threshold (envoyproxy#20025) Adds a missing test for recent lookups now that there are no more fake symbol tables. Adds tests for a variety of override methods defined in admin.h that were previously hard to hit. Adds a benchmark test to establish a baseline for the speedups in envoyproxy#19693 Signed-off-by: Joshua Marantz <[email protected]> Signed-off-by: Rex Chang <[email protected]> * test: removing a bunch of direct runtime singleton access (envoyproxy#19993) Signed-off-by: Alyssa Wilk <[email protected]> Signed-off-by: Rex Chang <[email protected]> * build(deps): bump grpcio-tools in /examples/grpc-bridge/client (envoyproxy#20040) Bumps [grpcio-tools](https://github.com/grpc/grpc) from 1.43.0 to 1.44.0. - [Release notes](https://github.com/grpc/grpc/releases) - [Changelog](https://github.com/grpc/grpc/blob/master/doc/grpc_release_schedule.md) - [Commits](grpc/grpc@v1.43.0...v1.44.0) --- updated-dependencies: - dependency-name: grpcio-tools dependency-type: direct:production update-type: version-update:semver-minor ... Signed-off-by: dependabot[bot] <[email protected]> Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com> Signed-off-by: Rex Chang <[email protected]> * adds to spellcheck Signed-off-by: Rex Chang <[email protected]> * xray tracer: set subsegment type for child spans (#2) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> * Xray subsegment (#3) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> * updates xray subsegment name to use operation name (instead of parent's span name) Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * Xray subsegment (#4) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> * updates xray subsegment name to use operation name (instead of parent's span name) Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * adds to spell check dictionary Signed-off-by: Rex Chang <[email protected]> * fixes spellcheck Signed-off-by: Rex Chang <[email protected]> * adds to spellcheck Signed-off-by: Rex Chang <[email protected]> xray tracer: set subsegment type for child spans (#2) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> Xray subsegment (#3) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> * updates xray subsegment name to use operation name (instead of parent's span name) Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> Xray subsegment (#4) * xray tracer: set subsegment type for child spans Signed-off-by: Rex Chang <[email protected]> * adds test coverage Signed-off-by: Rex Chang <[email protected]> * updates xray subsegment name to use operation name (instead of parent's span name) Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * updates doc Signed-off-by: Rex Chang <[email protected]> * adds to spell check dictionary Signed-off-by: Rex Chang <[email protected]> fixes spellcheck Signed-off-by: Rex Chang <[email protected]> * fixes spell check Signed-off-by: Rex Chang <[email protected]> Co-authored-by: alyssawilk <[email protected]> Co-authored-by: Bin Wu <[email protected]> Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com> Co-authored-by: Joshua Marantz <[email protected]>

Commit Message: Implements streaming /stats API for JSON and Text. Prometheus can be done in a follow-up. Additional Description: The speed up with this algorithm is dramatic for the "/stats" endpoint on 10k clusters, improving a 1.76 second CPU/memory burst into less than half a second, and speeding up JSON delivery from 5.2 seconds to 2.5 seconds. There is still a bottleneck is the JSON serialization library, which is needed to ensure we have proper escaping of stat-names with special characters, and a larger bottleneck whenever regex filers are used because std::regex has poor performance. In the future, better mechanisms for filtering can be added, such as an HTML UI for navigating stats by name hierarchy (envoyproxy#18670) or prefix/suffix matching or using RE2. This PR fully resolves envoyproxy#16981 but does not address envoyproxy#16139 at all. It might be possible to generalize the solution here to cover Prometheus by keeping our statss_map_ indexed by tagExtractedStatName, but that would be a whole separate effort, and we'd have to figure out if related-stats could span scopes. This PR partially resolves envoyproxy#18675 but there's still a non-trivial burst of CPU especially when there's a regex filter. envoyproxy#18670 would (arguably) fully resolve envoyproxy#18675. BEFORE: ``` ------------------------------------------------------------------ Benchmark Time CPU Iterations ------------------------------------------------------------------ BM_AllCountersText 1760 ms 1760 ms 1 BM_UsedCountersText 118 ms 118 ms 6 BM_FilteredCountersText 4290 ms 4289 ms 1 BM_AllCountersJson 5221 ms 5218 ms 1 BM_UsedCountersJson 152 ms 152 ms 5 BM_FilteredCountersJson 4162 ms 4161 ms 1 ``` AFTER ``` ------------------------------------------------------------------ Benchmark Time CPU Iterations ------------------------------------------------------------------ BM_AllCountersText 454 ms 454 ms 2 BM_UsedCountersText 37.7 ms 37.7 ms 19 BM_FilteredCountersText 4056 ms 4055 ms 1 BM_AllCountersJson 2707 ms 2706 ms 1 BM_UsedCountersJson 63.4 ms 63.4 ms 11 BM_FilteredCountersJson 4008 ms 4008 ms 1 ``` I also ran a manual test with 100k clusters sending `/stats` to the admin port with output to /dev/null. Before this change, this takes 35 seconds and after this change it takes 7 seconds, and consumes about 2G less memory. These were measured with `time wget ...` and observing the process with `top`. To run that I had to set up the clusters to reference static IP addresses and make the stats-sink interval 299 seconds, as others DNS resolution and stats sinks dominate the CPU and make other measurements difficult. Risk Level: medium -- the stats generation code in admin is largely rewritten and is important in some flows. Testing: //test/... Docs Changes: n/a -- no functional changes in this PR -- just perf improvements Release Notes: n/a Platform Specific Features: n/a Fixes: envoyproxy#16981 Signed-off-by: Joshua Marantz <[email protected]>

Creates a JSON sanitizer that delegates to the nlohmann library when there's any escapes required, or characters with the 8th bit set, but otherwise just returns its input. This PR supersedes envoyproxy#20428 which has a faster hand-rolled sanitizer, but the speed penalty for nlohmann is not too bad, compared with the protobuf sanitizer, and will generally be rarely needed anyway. -------------------------------------------------------------------- Benchmark Time CPU Iterations -------------------------------------------------------------------- BM_ProtoEncoderNoEscape 1445 ns 1444 ns 455727 BM_NlohmannNoEscape 9.79 ns 9.79 ns 71449511 BM_ProtoEncoderWithEscape 1521 ns 1521 ns 462697 BM_NlohmannWithEscape 215 ns 215 ns 3264218 Additional Description: This is intended to be adapted into the JSON renderer introduced in envoyproxy#19693 Risk Level: low -- this is layered on top of an already well-trusted library. Testing: //test/... Docs Changes: n/a Release Notes: n/a Platform Specific Features: n/a Signed-off-by: Joshua Marantz <[email protected]>

Add support for streaming/chunking of prometheus stats - basically adapts the algorithm introduced in #19693 for prometheus stats (while keeping common parts of the algorithm in a shared class to avoid code duplication). With this patch we should be able to improve the issue described here #16139 Signed-off-by: rulex123 <[email protected]>

Commit Message: Reproduces a scenario where it's difficult to use a streaming optimization for Prometheus stats based on scopes without introducing a bug. Context: Issue that /stats/prometheus endpoint takes too much much memory: Prometheus stats handler used too much memory. #16139 Solution for non-Prometheus endpoints to use less memory for /stats and run faster: admin: Streaming /stats implementation #19693 which I believe is working well. This solution mapped to Prometheus: Prom stats perf improvements #24998 A case where this solution has a duplicate# TYPE line due to two stats with the same tag-extracted-stat name from two different scopes: stats: prometheus scrape failed #27173 Note that the existing unit tests did not exercise that scenario so this PR adds a testcase. Signed-off-by: Joshua Marantz <[email protected]>

Add support for streaming/chunking of prometheus stats - basically adapts the algorithm introduced in envoyproxy#19693 for prometheus stats (while keeping common parts of the algorithm in a shared class to avoid code duplication). With this patch we should be able to improve the issue described here envoyproxy#16139 Signed-off-by: rulex123 <[email protected]> Signed-off-by: Ryan Eskin <[email protected]>

…xy#27239) Commit Message: Reproduces a scenario where it's difficult to use a streaming optimization for Prometheus stats based on scopes without introducing a bug. Context: Issue that /stats/prometheus endpoint takes too much much memory: Prometheus stats handler used too much memory. envoyproxy#16139 Solution for non-Prometheus endpoints to use less memory for /stats and run faster: admin: Streaming /stats implementation envoyproxy#19693 which I believe is working well. This solution mapped to Prometheus: Prom stats perf improvements envoyproxy#24998 A case where this solution has a duplicate# TYPE line due to two stats with the same tag-extracted-stat name from two different scopes: stats: prometheus scrape failed envoyproxy#27173 Note that the existing unit tests did not exercise that scenario so this PR adds a testcase. Signed-off-by: Joshua Marantz <[email protected]> Signed-off-by: Ryan Eskin <[email protected]>

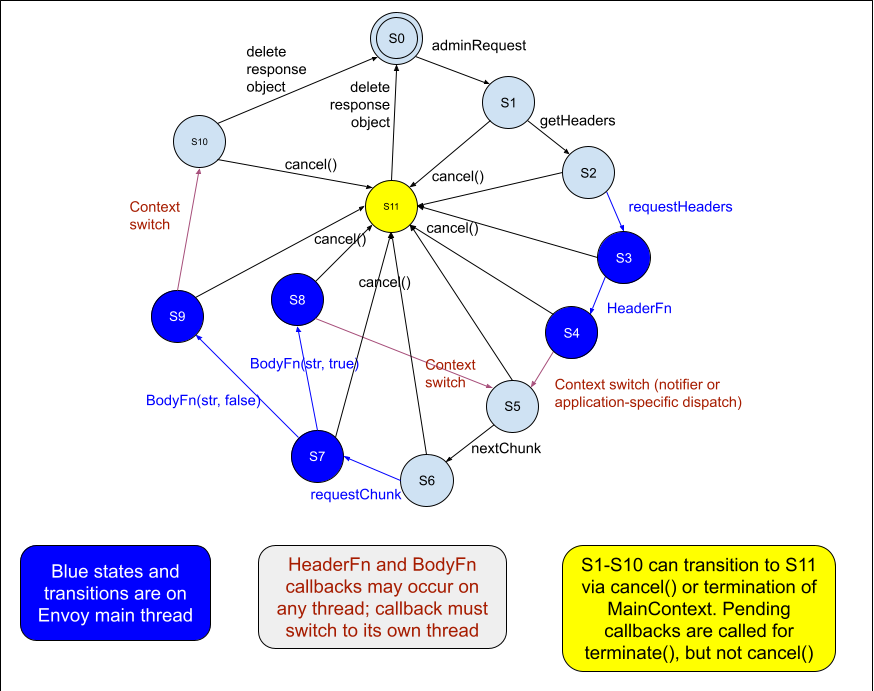

Commit Message: In #19693 a streaming stats implementation was introduced, dramatically reducing the amount of time and memory it takes to generate an admin http response. /config_dump (#32054) and /clusters_ (#32054) can also be improved in this way. However, in some environments we do not want to expose an HTTP port for admin requests, and we must use the C++ API. However that API is not streaming: it buffers the entire content. This PR adds a new streaming API. Mechanically this new functionality was easy to add as an externally callble C++ API as the Admin class already supported this model on behalf of /stats. However there's a fair amount of complexity managing potential races between active streaming requests, server exit, and explicit cancellation of an in-progress request. So much of the effort and complexity in this PR is due to making that thread-safe and testing it. Additional Description:  Note to reviewers: if this PR is too big it can be broken into 2. The significant additions to main_common.cc, main_common.h, and main_common_test.cc need to stay together in one PR, the rest of the changes are non-functional refactors which are needed for the larger changes to work; they could be reviewed and merged first. Risk Level: medium -- this is a new API but it does refactor some existing functionality. Using the new functionality will add some risk also as there is some careful thought required to believe we have protected against all possibilities of shutdown/cancel/admin-request races. Testing: //test/..., and lots of tsan repeated tests for the new streaming tests. Lots of iteration to ensure every new line of main_common.cc is hit by coverage tests. Docs Changes: n/a Release Notes: n/a Platform Specific Features: n/a Fixes: #31755 Signed-off-by: Joshua Marantz <[email protected]>

Commit Message: Implements streaming /stats API for JSON and Text. Prometheus can be done in a follow-up.

Additional Description:

The speed up with this algorithm is dramatic for the "/stats" endpoint on 10k clusters, improving a 1.76 second CPU/memory burst into less than half a second, and speeding up JSON delivery from 5.2 seconds to 2.5 seconds. There is still a bottleneck is the JSON serialization library, which is needed to ensure we have proper escaping of stat-names with special characters, and a larger bottleneck whenever regex filers are used because std::regex has poor performance.

In the future, better mechanisms for filtering can be added, such as an HTML UI for navigating stats by name hierarchy (#18670) or prefix/suffix matching or using RE2.

This PR fully resolves #16981 but does not address #16139 at all. It might be possible to generalize the solution here to cover Prometheus by keeping our statss_map_ indexed by tagExtractedStatName, but that would be a whole separate effort, and we'd have to figure out if related-stats could span scopes.

This PR partially resolves #18675 but there's still a non-trivial burst of CPU especially when there's a regex filter. #18670 would (arguably) fully resolve #18675.

BEFORE:

AFTER

I also ran a manual test with 100k clusters sending

/statsto the admin port with output to /dev/null. Before this change, this takes 35 seconds and after this change it takes 7 seconds, and consumes about 2G less memory. These were measured withtime wget ...and observing the process withtop. To run that I had to set up the clusters to reference static IP addresses and make the stats-sink interval 299 seconds, as others DNS resolution and stats sinks dominate the CPU and make other measurements difficult.Risk Level: medium -- the stats generation code in admin is largely rewritten and is important in some flows.

Testing: //test/...

Docs Changes: n/a -- no functional changes in this PR -- just perf improvements

Release Notes: n/a

Platform Specific Features: n/a

Fixes: #16981