-

Notifications

You must be signed in to change notification settings - Fork 4.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Enabled high-performance Automatic Tensor Parallelism (auto TP) for the MoE models on multiple GPUs/HPUs #6964

base: master

Are you sure you want to change the base?

Conversation

|

Hi @gyou2021 , There is another PR for DeepSeek autotp in this link #6937 What is relationship of your PR to this previous PR regarding on functionality? @Yejing-Lai can you take a look at this PR? |

The difference lies in how the results of the weighted sum of routed experts per layer in the MoE are gathered. In my understanding, each selected routed expert per layer was gathered individually in #6937, meaning the gathering time was proportional to the number of selected routed experts per layer. In this PR, the results are gathered once per layer, regardless of the number of selected routed experts. |

|

Hi @gyou2021 , thanks for this optimization. As we reviewed internally, need to figure out a way to turn on this optimization through knobs in model config.json file. In that way this optimization won't break workloads that does not utilize this optimization. Plus: I think it will be even more user friendly if the colasced allredcuce could be injected by AutoTP. But as I reviewed with @gyou2021 , this seems tricky because no proper injection point can be captured by process nn.module. @tohtana @loadams @tjruwase @Yejing-Lai if you have an idea we can discuss in this thread. |

Added support for the environment variable DS_MOE_TP_SINGLE_ALLREDUCE, which indicates whether the MoE expert all-reduce once optimization was enabled. If the value of DS_MOE_TP_SINGLE_ALLREDUCE is true, that is the optimization was enabled, the Auto_TP will execute corresponding operations to some linear layers. |

|

Hi @loadams, this PR is now ready to review. This PR allows user to explicitly turns off allreduce in experts for AutoTP tensor parallel inference, inorder to delay the allreduce until all experts are reduced locally. Thanks! |

…MoE and DeepSeek-V2 models. Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

…peedai#6750) FYI @loadams a view operation was missing in some updates compared to the original version https://github.com/microsoft/DeepSpeed/blob/17ed7c77c58611a923a6c8d2a3d21d359cd046e8/deepspeed/sequence/layer.py#L56 add missing view operation. The shape required for the view cannot be easily obtained in the current function, so refactor layout params code. --------- Co-authored-by: Logan Adams <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…orm` (deepspeedai#6931) - [x] Update PR since `torch.norm` and `torch.linalg.norm` have [different function signatures](https://pytorch.org/docs/stable/generated/torch.linalg.norm.html#torch.linalg.norm). - [x] Check if there are any numeric differences between the functions. - [x] Determine why there appear to be performance differences from others [here](pytorch/pytorch#136360). - [x] Update to `torch.linalg.vectornorm` Follow up PR handles these in the comm folder: deepspeedai#6960 Signed-off-by: gyou2021 <[email protected]>

Following discussion in [PR-6670](deepspeedai#6670), the explict upcast is much more efficient than implicit upcast, this PR is to replace implicit upcast with explict one. The results on 3B model are shown below: | Option | BWD (ms) | Speed up | |------------|-----|------| | Before PR-6670 | 25603.30 | 1x | | After PR-6670 | 1174.31 | 21.8X | | After this PR| 309.2 | 82.8X | Signed-off-by: gyou2021 <[email protected]>

**Auto-generated PR to update version.txt after a DeepSpeed release** Released version - 0.16.3 Author - @loadams Co-authored-by: loadams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Fix deepspeedai#4998 Signed-off-by: gyou2021 <[email protected]>

…ort. (deepspeedai#6971) Nvidia GDS [does not support Windows](https://developer.nvidia.com/gpudirect-storage). Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

…epspeedai#6932) Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

…ead of Raising Error (deepspeedai#6979) This pull request addresses an issue in setup_env_ranks where, under certain conditions, the function raises an error instead of setting the necessary MPI-related environment variables (LOCAL_RANK, RANK, and WORLD_SIZE). The intended behavior is to properly map Open MPI variables (OMPI_COMM_WORLD_*) to the variables expected by DeepSpeed/PyTorch, but the code currently raises an EnvironmentError if these Open MPI variables are not found. With this fix, setup_env_ranks will: - Correctly detect and map the required Open MPI environment variables. - Only raise an error if there is genuinely no valid way to obtain rank information from the environment (e.g., both Open MPI variables and any fallback mechanism are unavailable). Fix deepspeedai#6895 Co-authored-by: Logan Adams <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…h 2.6) (deepspeedai#6982) Fixes deepspeedai#6984. The workflow was pulling the updated torch 2.6, which caused CI failures. This keeps us on torch 2.5 for now, since installing torchvision as a dependency later on was pulling torch 2.6 with it which was unintended. This PR also unsets NCCL_DEBUG to avoid a large print out in the case of any errors. Signed-off-by: gyou2021 <[email protected]>

…6974) As discussed in [PR-6918](deepspeedai#6918), padding can occur on multiple ranks with large DP degrees. For example, with: - Flattened tensor size: 266240 - DP degree: 768 - Alignment: 1536 - Required padding: 1024 (1536 * 174 - 266240) - Per-rank partition size: 348 (1536 * 174 / 768) - The padding occurs on last three ranks. This PR removes the single-rank padding assumption for more general cases. --------- Co-authored-by: Sam Foreman <[email protected]> Co-authored-by: Logan Adams <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Fix deepspeedai#6772 --------- Co-authored-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…deepspeedai#6967) - Issues with nv-sd updates, will follow up with a subsequent PR Signed-off-by: gyou2021 <[email protected]>

NVIDIA Blackwell GPU generation has number 10. The SM code and architecture should be `100`, but the current code generates `1.`, because it expects a 2 characters string. This change modifies the logic to consider it as a string that contains a `.`, hence splits the string and uses the array of strings. Signed-off-by: Fabien Dupont <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: Olatunji Ruwase <[email protected]> Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Fabien Dupont <[email protected]> Co-authored-by: Fabien Dupont <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

bf16 with moe refresh optimizer state from bf16 ckpt will raise IndexError: list index out of range Signed-off-by: shaomin <[email protected]> Co-authored-by: shaomin <[email protected]> Co-authored-by: Hongwei Chen <[email protected]> Signed-off-by: gyou2021 <[email protected]>

**Auto-generated PR to update version.txt after a DeepSpeed release** Released version - 0.16.4 Author - @loadams Co-authored-by: loadams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

@jeffra and I fixed this many years ago, so bringing this doc to a correct state. --------- Signed-off-by: Stas Bekman <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Description This PR includes Tecorigin SDAA accelerator support. With this PR, DeepSpeed supports SDAA as backend for training tasks. --------- Signed-off-by: siqi <[email protected]> Co-authored-by: siqi <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

More information on libuv in pytorch: https://pytorch.org/tutorials/intermediate/TCPStore_libuv_backend.html Issue tracking the prevalence of the error on Windows (unresolved at the time of this PR): pytorch/pytorch#139990 LibUV github: https://github.com/libuv/libuv Windows error: ``` File "C:\hostedtoolcache\windows\Python\3.12.7\x64\Lib\site-packages\torch\distributed\rendezvous.py", line 189, in _create_c10d_store return TCPStore( ^^^^^^^^^ RuntimeError: use_libuv was requested but PyTorch was build without libuv support ``` use_libuv isn't well supported on Windows in pytorch <2.4, so we need to guard around this case. --------- Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

@fukun07 and I discovered a bug when using the `offload_states` and `reload_states` APIs of the Zero3 optimizer. When using grouped parameters (for example, in weight decay or grouped lr scenarios), the order of the parameters mapping in `reload_states` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L2953)) does not correspond with the initialization of `self.lp_param_buffer` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L731)), which leads to misaligned parameter loading. This issue was overlooked by the corresponding unit tests ([here](https://github.com/deepspeedai/DeepSpeed/blob/master/tests/unit/runtime/zero/test_offload_states.py)), so we fixed the bug in our PR and added the corresponding unit tests. --------- Signed-off-by: Wei Wu <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Following changes in Pytorch trace rules , my previous PR to avoid graph breaks caused by logger is no longer relevant. So instead I've added this functionality to torch dynamo - pytorch/pytorch@16ea0dd This commit allows the user to config torch to ignore logger methods and avoid associated graph breaks. To enable ignore logger methods - os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1" To ignore logger methods except for a specific method / methods (for example, info and isEnabledFor) - os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1" and os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = "info, isEnabledFor" Signed-off-by: ShellyNR <[email protected]> Co-authored-by: snahir <[email protected]> Signed-off-by: gyou2021 <[email protected]>

The partition tensor doesn't need to move to the current device when meta load is used. Signed-off-by: Lai, Yejing <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: gyou2021 <[email protected]>

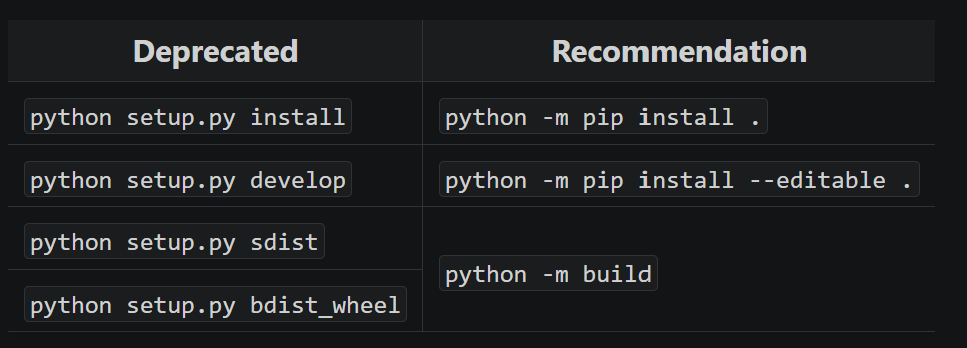

…t` (deepspeedai#7069) With future changes coming to pip/python/etc, we need to modify to no longer call `python setup.py ...` and replace it instead: https://packaging.python.org/en/latest/guides/modernize-setup-py-project/#should-setup-py-be-deleted  This means we need to install the build package which is added here as well. Additionally, we pass the `--sdist` flag to only build the sdist rather than the wheel as well here. --------- Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…eepspeedai#7076) This reverts commit 8577bd2. Fixes: deepspeedai#7072 Signed-off-by: gyou2021 <[email protected]>

Add deepseekv3 autotp. Signed-off-by: Lai, Yejing <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Fixes: deepspeedai#7082 --------- Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…, which indicates whether moe expert all-reduce once optimization was used. Signed-off-by: gyou2021 <[email protected]>

Signed-off-by: gyou2021 <[email protected]>

Latest transformers causes failures when cpu-torch-latest test, so we pin it for now to unblock other PRs. --------- Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

Co-authored-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

…/runner (deepspeedai#7086) Signed-off-by: Logan Adams <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: gyou2021 <[email protected]>

These jobs haven't been run in a long time and were originally used when compatibility with torch <2 was more important. Signed-off-by: Logan Adams <[email protected]> Signed-off-by: gyou2021 <[email protected]>

f3c6b43 to

b3c64dd

Compare

Signed-off-by: gyou2021 <[email protected]>

Cleaned the code.

Reduced the routed experts' AllReduce operation times per MoE layer to ONCE for the MoE models such as Qwen2-MoE and DeepSeek-V2. The results of all selected routed experts per layer on GPU/HPU cards will be gathered ONCE using the AllReduce operation, instead of gathering each selected routed expert individually or by the number of selected routed experts. This change will greatly increase performance.

In addition to modifying auto_tp.py, the following files should be updated: modeling_qwen2_moe.py and modeling_deepseek_v2.py. Add the following code after the weighted sum of the output of the selected experts per MoE layer.

if is_deepspeed_available():

from deepspeed import comm as dist

if dist.is_initialized():

dist.all_reduce(final_hidden_states, op=dist.ReduceOp.SUM)

Notes: final_hidden_states is the result of the weighted sum of the output of the selected experts per MoE layer.