-

Notifications

You must be signed in to change notification settings - Fork 3.8k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

kvserver: add average cpu nanos per replica #92858

Conversation

|

Will link the issue. The extended ci appears like a flake. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is there a reason you didn't add timing to store_raft.processRequestQueue? It seems like some work happens there as well.

Reviewed 10 of 10 files at r1, all commit messages.

Reviewable status:complete! 0 of 0 LGTMs obtained (waiting on @irfansharif, @kvoli, and @nvanbenschoten)

pkg/kv/kvserver/metrics.go line 357 at r1 (raw file):

Unit: metric.Unit_BYTES, } metaAverageNanosPerSecond = metric.Metadata{

Nit: I don't really like this variable name (nanos/second is always 1 billion). Maybe something like metaNanosProcessingPerSecond or metaNanosRunningPerSecond or metaCPUNanosPerSecond.

pkg/kv/kvserver/replica_load.go line 27 at r1 (raw file):

writeBytes *replicastats.ReplicaStats readBytes *replicastats.ReplicaStats nanos *replicastats.ReplicaStats

Nit: Don't necessarily change in this PR, but the fact that the same operations need to be repeated in 4 different places (construction, split counts, merge counts, and reset) for 6 different stats feels a little like a code smell. Maybe structure these into an array so they can just be looped over once?

pkg/kv/kvserver/replica_metrics.go line 339 at r1 (raw file):

func (r *Replica) RequestLocality() *replicastats.RatedSummary { return r.loadStats.batchRequests.SnapshotRatedSummary() }

What is this method for - it doesn't seem to be called or used anywhere. Should this be in a different PR?

pkg/kv/kvserver/store.go line 3215 at r1 (raw file):

averageReadBytesPerSecond float64 averageWriteBytesPerSecond float64 averageNanosPerSecond float64

Nit: Similar naming issue as above.

7588441 to

2dca625

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

TYFTR - replied to comments.

Reviewable status:

complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @nvanbenschoten)

pkg/kv/kvserver/metrics.go line 357 at r1 (raw file):

Previously, andrewbaptist (Andrew Baptist) wrote…

Nit: I don't really like this variable name (nanos/second is always 1 billion). Maybe something like metaNanosProcessingPerSecond or metaNanosRunningPerSecond or metaCPUNanosPerSecond.

Updated to be consistently ...CPUNanosPerSecond across the patch.

pkg/kv/kvserver/replica_load.go line 27 at r1 (raw file):

Previously, andrewbaptist (Andrew Baptist) wrote…

Nit: Don't necessarily change in this PR, but the fact that the same operations need to be repeated in 4 different places (construction, split counts, merge counts, and reset) for 6 different stats feels a little like a code smell. Maybe structure these into an array so they can just be looped over once?

I agree that this structure isn't ideal. Overall this area needs a bit of a refactor. A similar vector structure to #91593 is the likely outcome eventually.

This is tracked in #87187.

pkg/kv/kvserver/replica_metrics.go line 339 at r1 (raw file):

Previously, andrewbaptist (Andrew Baptist) wrote…

What is this method for - it doesn't seem to be called or used anywhere. Should this be in a different PR?

Removed.

pkg/kv/kvserver/store.go line 3215 at r1 (raw file):

Previously, andrewbaptist (Andrew Baptist) wrote…

Nit: Similar naming issue as above.

Updated.

abfdded to

2063297

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is there a reason you didn't add timing to store_raft.processRequestQueue? It seems like some work happens there as well.

Initially it wasn't included simply because we didn't include it in the cputime prototype. I've added it, good suggestion.

Reviewable status:

complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @nvanbenschoten)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's cool to see this.

What is the plan for tracking and balancing on load in cases where grunning is disabled?

Also, do we intend to split the CPU attribution across work that a replica performs in the leaseholder role vs. work that it performs in the follower replica role? Now that we can attribute work with precision, it seems valuable to measure exactly the amount of work a lease transfer can shed, and then also exactly the amount of work a replica rebalance can shed.

Reviewed 4 of 10 files at r1, 6 of 6 files at r2, all commit messages.

Reviewable status:complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @kvoli)

pkg/kv/kvserver/store_raft.go line 353 at r2 (raw file):

// Record the CPU time processing the request for this replica. This is // recorded regardless of errors that are encountered. grunningStart := grunning.Time()

We have this same logic in a number of places. Consider pushing more of it into a library so that it's easier to use (harder to misuse) and less redundant.

For instance, we use the following pattern elsewhere. As long as it doesn't heap allocate (please check if you go with it), it feels like an improvement:

func (r *Replica) MeasureNanosRunning() func() {

start := grunning.Time()

return func() {

end := grunning.Time()

dur := grunning.Difference(start, end).Nanoseconds()

r.loadStats.cpuNanos.RecordCount(float64(dur), 0 /* nodeID */)

}

}

...

// to use

defer r.MeasureNanosRunning()()pkg/kv/kvserver/store_raft.go line 643 at r2 (raw file):

stats, err := r.handleRaftReady(ctx, noSnap) maybeFatalOnRaftReadyErr(ctx, err) grunningStart := grunning.Time()

This is starting the timer after the handleRaftReady call, so we're missing most of the CPU time inside of Raft. Fixing this should improve the accuracy of the CPU attribution.

pkg/kv/kvserver/store_send.go line 173 at r2 (raw file):

startGrunning := grunning.Time() defer func() { repl, err := s.GetReplica(ba.RangeID)

Can we find a way to record this without passing back through the replicasByRangeID sync map? We have the Replica down below and it's probably safe to assume that all of the work was done by the last Replica touched in that loop.

pkg/ts/catalog/chart_catalog.go line 689 at r2 (raw file):

}, {

nit: stray line

2e6a3dd to

bbc219e

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What is the plan for tracking and balancing on load in cases where grunning is disabled?

tracking: It will record 0.

balancing on load: call to https://github.com/kvoli/cockroach/blob/bbc219e2b6863aa82b27751deba67b6aea0d27b8/pkg/util/grunning/grunning.go#L43-L43 and set the cluster setting to QPS rebalancing or "legacy" in that case, if Supported returns false.

do we intend to split the CPU attribution across work that a replica performs in the leaseholder role vs. work that it performs in the follower replica role?

That is the intention. I was concerned on the memory usage of adding two different replica stats objects at once. They need to be refactored but I was intending on doing that after merging #91593. I don't see why that should block this so I've added both.

I have opted not to include two separate metrics yet. Keeping the same metric as before.

Reviewable status:

complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @nvanbenschoten)

pkg/kv/kvserver/store_raft.go line 353 at r2 (raw file):

Previously, nvanbenschoten (Nathan VanBenschoten) wrote…

We have this same logic in a number of places. Consider pushing more of it into a library so that it's easier to use (harder to misuse) and less redundant.

For instance, we use the following pattern elsewhere. As long as it doesn't heap allocate (please check if you go with it), it feels like an improvement:

func (r *Replica) MeasureNanosRunning() func() { start := grunning.Time() return func() { end := grunning.Time() dur := grunning.Difference(start, end).Nanoseconds() r.loadStats.cpuNanos.RecordCount(float64(dur), 0 /* nodeID */) } } ... // to use defer r.MeasureNanosRunning()()

Adopted this method instead.

pkg/kv/kvserver/store_raft.go line 643 at r2 (raw file):

Previously, nvanbenschoten (Nathan VanBenschoten) wrote…

This is starting the timer after the

handleRaftReadycall, so we're missing most of the CPU time inside of Raft. Fixing this should improve the accuracy of the CPU attribution.

That's an error. Updated to be above.

pkg/ts/catalog/chart_catalog.go line 689 at r2 (raw file):

Previously, nvanbenschoten (Nathan VanBenschoten) wrote…

nit: stray line

Updated.

pkg/kv/kvserver/store_send.go line 173 at r2 (raw file):

Previously, nvanbenschoten (Nathan VanBenschoten) wrote…

Can we find a way to record this without passing back through the

replicasByRangeIDsync map? We have theReplicadown below and it's probably safe to assume that all of the work was done by the last Replica touched in that loop.

Moved to repl.SendWithWriteBytes() for now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status:

complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @nvanbenschoten)

pkg/kv/kvserver/store_raft.go line 353 at r2 (raw file):

Previously, kvoli (Austen) wrote…

Adopted this method instead.

It looks like it does heap alloc w/ that approach.

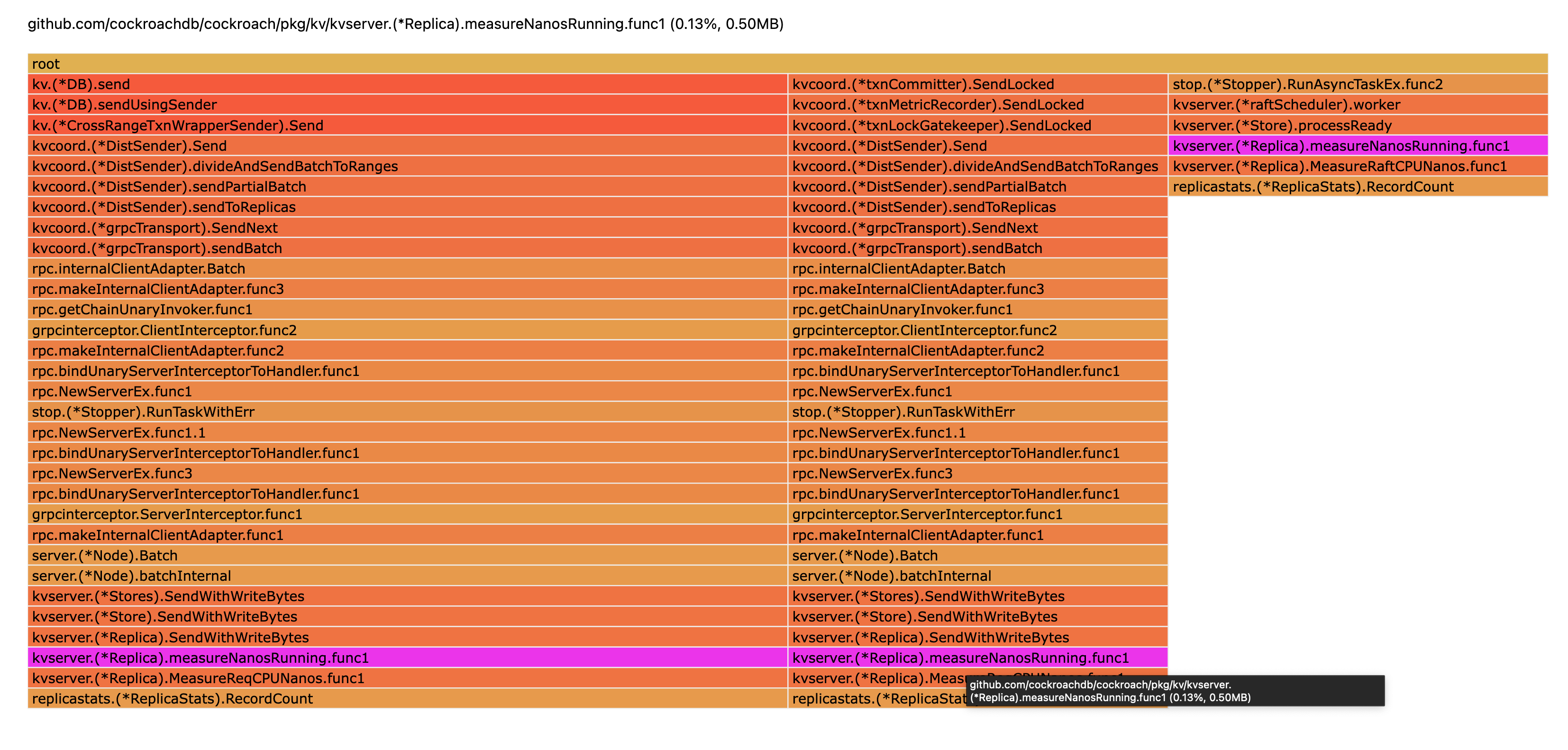

This is recent allocs running kv max-rate=10k.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewed 7 of 8 files at r4, all commit messages.

Reviewable status:complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @kvoli)

pkg/kv/kvserver/store_raft.go line 353 at r2 (raw file):

Previously, kvoli (Austen) wrote…

It looks like it does heap alloc w/ that approach.

Albeit a relatively tiny amount (kv max-rate=1k running).

I took a look at this and was also unable to find a way to avoid the heap allocation. It's unfortunate, but the heap allocation is probably not worth paying for. A slightly worse version ergonomically that avoids the closure allocation is:

// MeasureReqCPUNanos measures the cpu time spent on this replica processing

// requests.

func (r *Replica) MeasureReqCPUNanos(start time.Duration) {

r.measureNanosRunning(start, func(dur float64) {

r.loadStats.reqCPUNanos.RecordCount(dur, 0 /* nodeID */)

})

}

// measureNanosRunning measures the difference in cpu time from when this

// method is called, to when the returned function is called. This difference

// is recorded against the replica's cpu time attribution.

func (r *Replica) measureNanosRunning(start time.Duration, f func(float64)) {

end := grunning.Time()

dur := grunning.Difference(start, end).Nanoseconds()

f(float64(dur))

}

...

func (r *Replica) SendWithWriteBytes(

ctx context.Context, ba *roachpb.BatchRequest,

) (*roachpb.BatchResponse, *kvadmission.StoreWriteBytes, *roachpb.Error) {

...

defer r.MeasureReqCPUNanos(grunning.Time())

...

}Consider switching to an approach along these lines.

pkg/kv/kvserver/store_send.go line 173 at r2 (raw file):

Previously, kvoli (Austen) wrote…

Moved to

repl.SendWithWriteBytes()for now.

That seems like a better place for it. Thanks.

This patch adds partial accounting for the time spent on processing requests, for a specific replica. The time spent is recorded in `ReplicaStats` and allows for observing the aggregate cpu time spent on replicas via an exported metric `rebalancing.cpunanospersecond`. resolves cockroachdb#90589 Release note: None

bbc219e to

88c86eb

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status:

complete! 0 of 0 LGTMs obtained (waiting on @andrewbaptist, @irfansharif, and @nvanbenschoten)

pkg/kv/kvserver/store_raft.go line 353 at r2 (raw file):

Previously, nvanbenschoten (Nathan VanBenschoten) wrote…

I took a look at this and was also unable to find a way to avoid the heap allocation. It's unfortunate, but the heap allocation is probably not worth paying for. A slightly worse version ergonomically that avoids the closure allocation is:

// MeasureReqCPUNanos measures the cpu time spent on this replica processing // requests. func (r *Replica) MeasureReqCPUNanos(start time.Duration) { r.measureNanosRunning(start, func(dur float64) { r.loadStats.reqCPUNanos.RecordCount(dur, 0 /* nodeID */) }) } // measureNanosRunning measures the difference in cpu time from when this // method is called, to when the returned function is called. This difference // is recorded against the replica's cpu time attribution. func (r *Replica) measureNanosRunning(start time.Duration, f func(float64)) { end := grunning.Time() dur := grunning.Difference(start, end).Nanoseconds() f(float64(dur)) } ... func (r *Replica) SendWithWriteBytes( ctx context.Context, ba *roachpb.BatchRequest, ) (*roachpb.BatchResponse, *kvadmission.StoreWriteBytes, *roachpb.Error) { ... defer r.MeasureReqCPUNanos(grunning.Time()) ... }Consider switching to an approach along these lines.

looks good, adopted.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewed 1 of 6 files at r3, 4 of 4 files at r5, all commit messages.

Reviewable status:complete! 1 of 0 LGTMs obtained (waiting on @andrewbaptist and @irfansharif)

|

bors r=nvanbenschoten |

|

👎 Rejected by code reviews |

|

bors r=nvanbenschoten |

|

Build failed (retrying...): |

|

Build failed (retrying...): |

|

Build failed (retrying...): |

|

Build failed (retrying...): |

|

Build succeeded: |

This patch adds partial accounting for the time spent on processing requests, for a specific replica. The time spent is recorded in

ReplicaStatsand allows for observing the aggregate cpu time spent on replicas via an exported metricrebalancing.cpunanospersecond.Release note: None