Render Engine

Dream Textures lets you use Stable Diffusion as a render engine, with a powerful node-based system.

Below are the available guides on this page. Project files are provided for each of them.

Learn how to set up the render engine and create your first node tree.

Learn how to use multiple ControlNet models to influence the result.

Turn simple sketches into photorealistic images with Grease Pencil and ControlNet.

Some of the official demo scenes found on blender.org have been converted to use the Dream Textures render engine. They provide a good basis for comparing the output of this engine against Cycles.

| Segmentation | Depth | Result | Cycles Baseline |

|---|---|---|---|

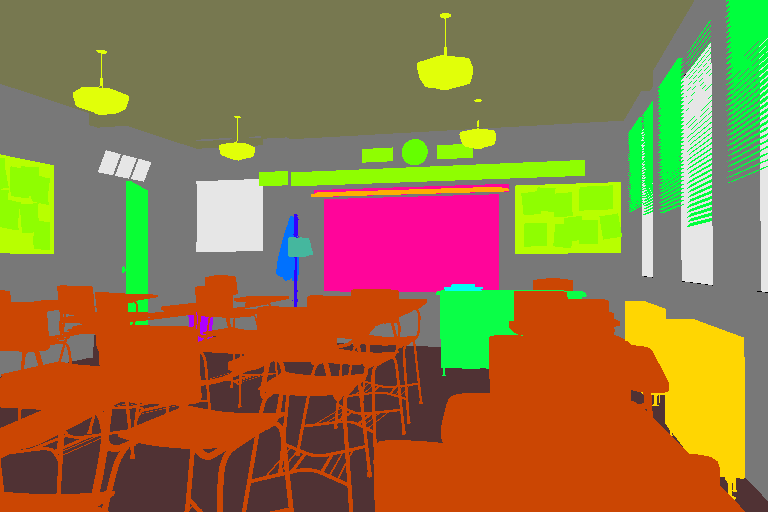

|

|

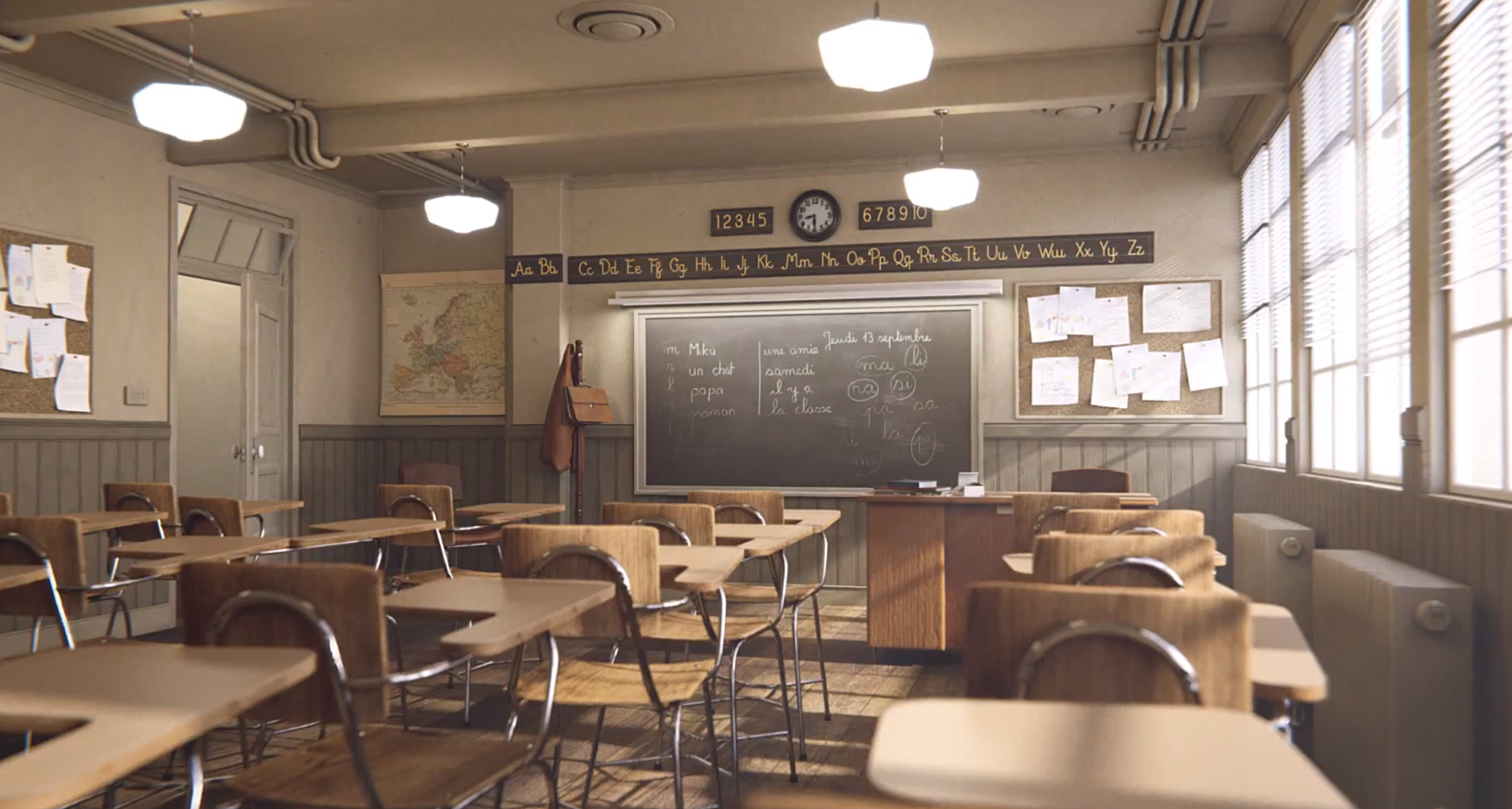

|

|

|

| Depth | Result | Cycles Baseline |

|---|---|---|

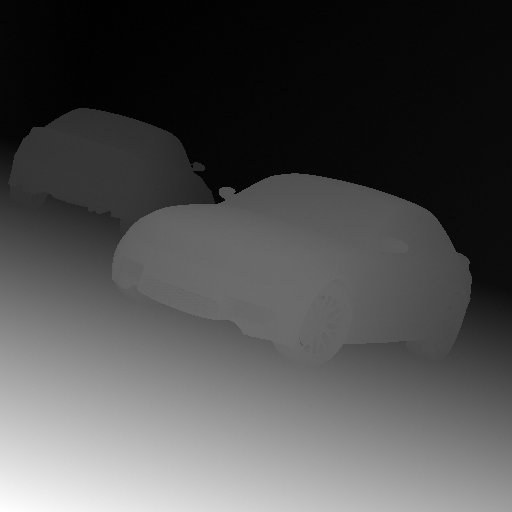

|

|

|

|

The available nodes are grouped into the following categories.

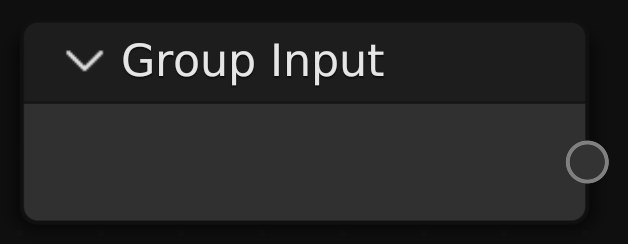

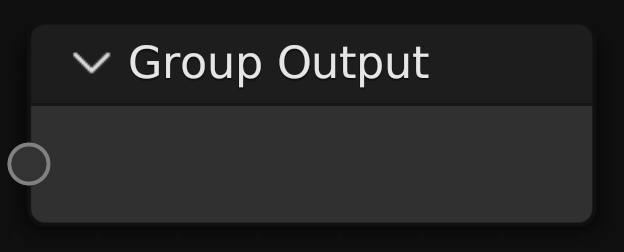

Nodes for managing inputs and outputs.

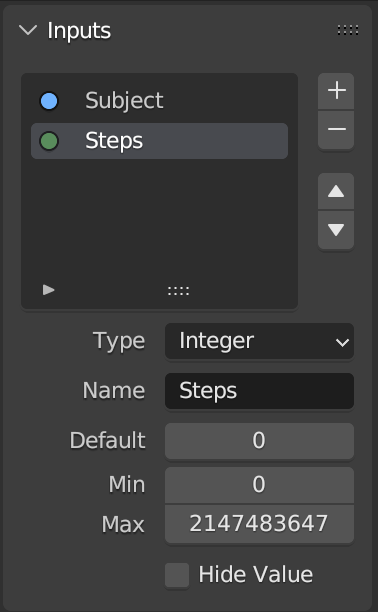

Provides access to the input values for the node tree. Configure the inputs in the sidebar. Values can be provided to the inputs from the Render Properties panel.

The first output must be an image that matches the Output Properties resolution. For example, if your scene is set to render 512x512, the first socket must provide a 512x512 image.
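The resolution rule above can be checked before an image reaches the first output. The following is a minimal sketch in plain Python, not part of Dream Textures: the helper name `matches_resolution` and the nested-list image layout (a list of rows, each a list of pixels) are assumptions made for illustration.

```python
def matches_resolution(image, resolution_x, resolution_y):
    """Return True if `image` (a list of rows of pixels) is
    resolution_x pixels wide and resolution_y pixels tall."""
    if len(image) != resolution_y:
        return False
    return all(len(row) == resolution_x for row in image)


# A scene set to render 512x512 needs a 512x512 image on the first socket.
image = [[(0.0, 0.0, 0.0, 1.0)] * 512 for _ in range(512)]
print(matches_resolution(image, 512, 512))  # True
print(matches_resolution(image, 768, 512))  # False
```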

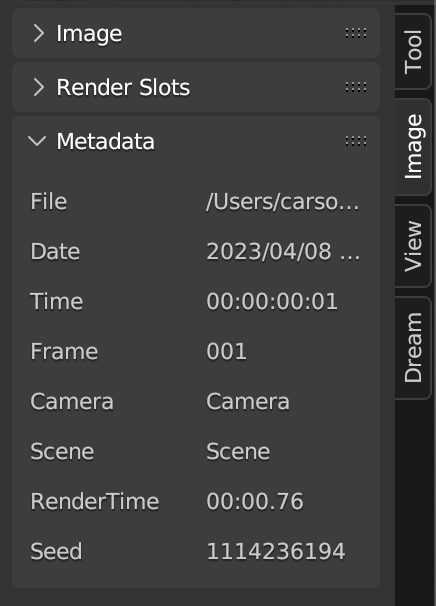

Any other outputs will be included as extra metadata. For example, the output of a Random Seed node could be connected as a second output so the seed can be found after a render.

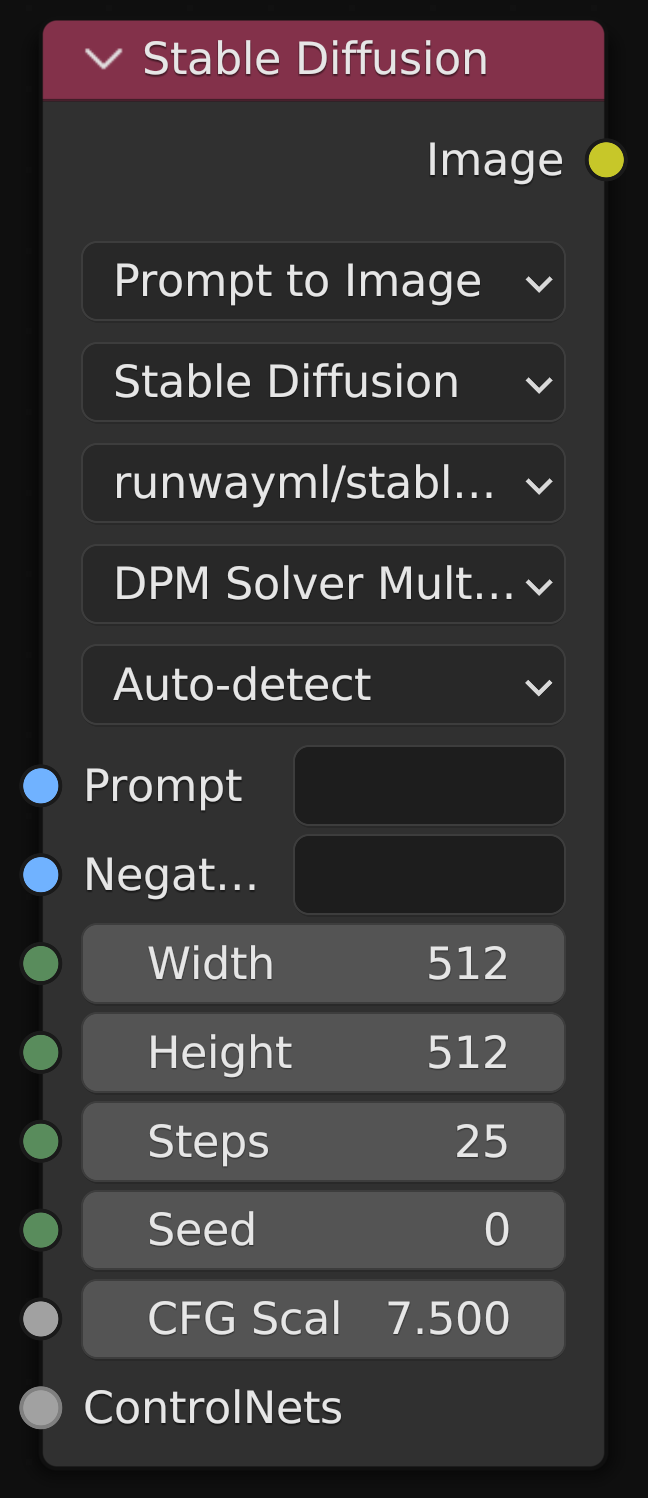

Nodes for executing ML tasks.

Generate an image with a particular model. Multiple ControlNet node outputs can be connected to the ControlNets input. All connected controls will influence the image.

For further information on the options available, see Image Generation.

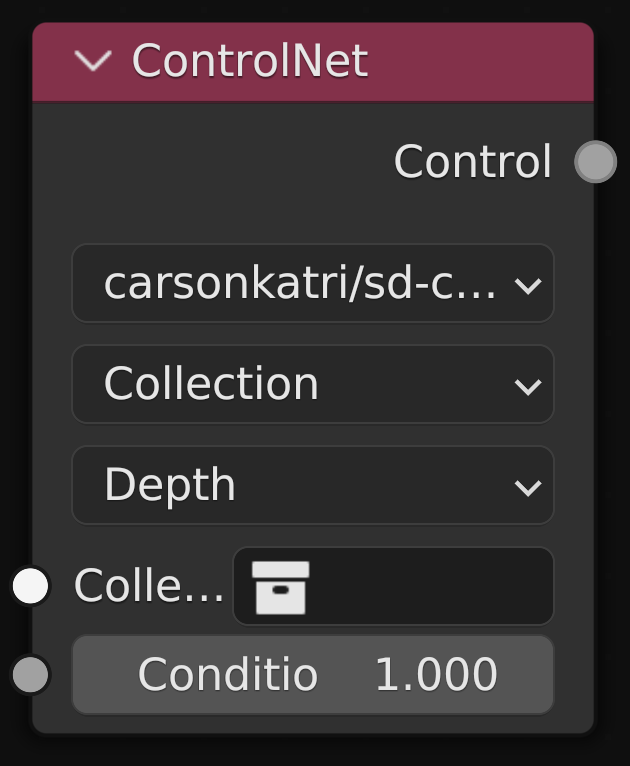

Creates a ControlNet model to use with a Stable Diffusion node.

- Model - The ControlNet model to use

- Control Source - Either a Collection or an Image

- Annotation Type - When using a Collection as the source, specify the type of control image this model expects

- Collection - The collection to render the annotation for

- Image - A pre-rendered image to use as the control, see Annotation Nodes

- Conditioning Scale - The strength of this control

- Control - The ControlNet configuration, which can be passed to a Stable Diffusion node

Generate images that can be used as input to ControlNet nodes.

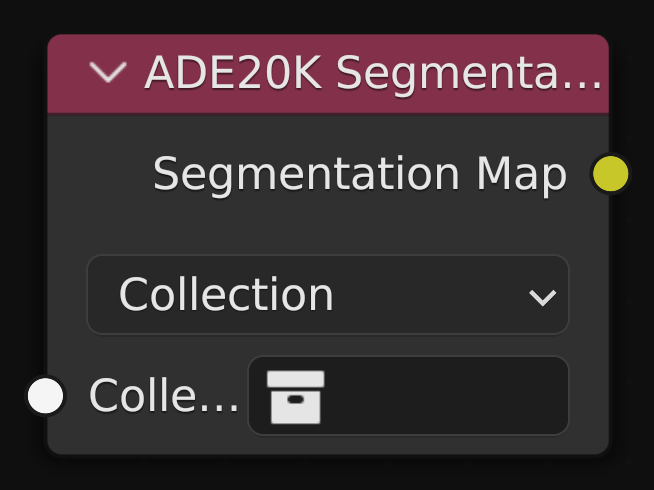

Generates a segmentation map from the given collection or the entire scene.

Intended to be used with the lllyasviel/sd-controlnet-seg model.

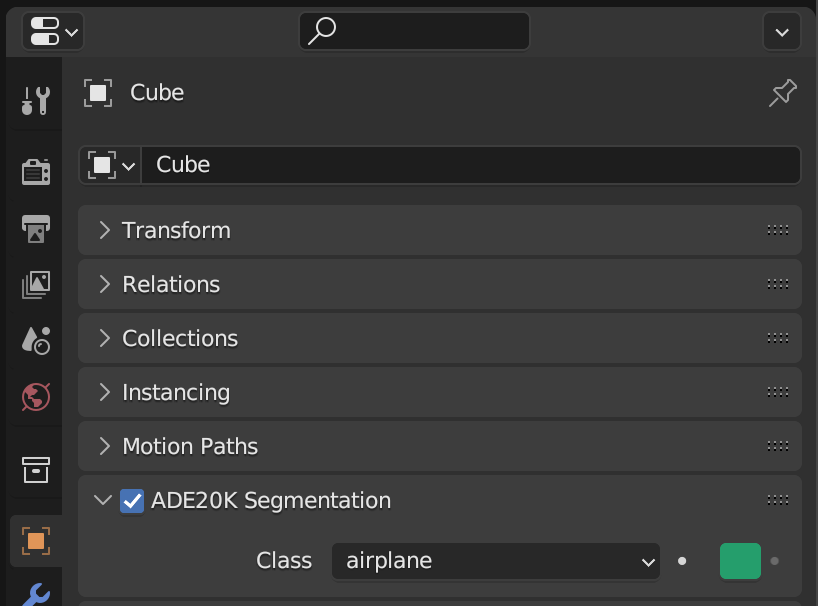

The class to use for a particular object can be set from the Object Properties panel.

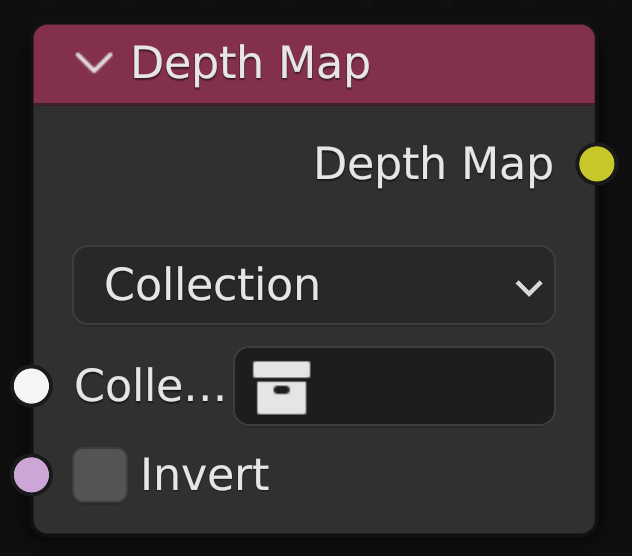

Generates a depth map from the given collection or the entire scene.

Intended to be used with the lllyasviel/sd-controlnet-depth model.

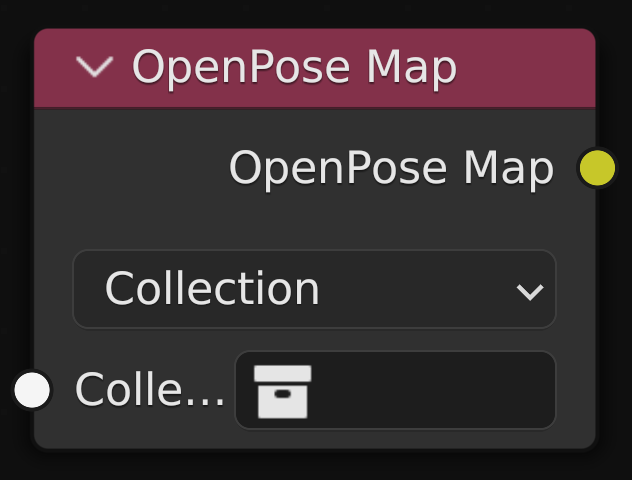

Generates an OpenPose map from the given collection or the entire scene.

Intended to be used with the lllyasviel/sd-controlnet-openpose model.

Bone types can be detected from their name. If the wrong bone is being detected, or a bone is missing, set the type in the Bone Properties panel when in Pose Mode.

Renders the scene as seen from the 3D Viewport.

Warning: A 3D Viewport editor must be open for this node to succeed.

Nodes for creating and accessing input values.

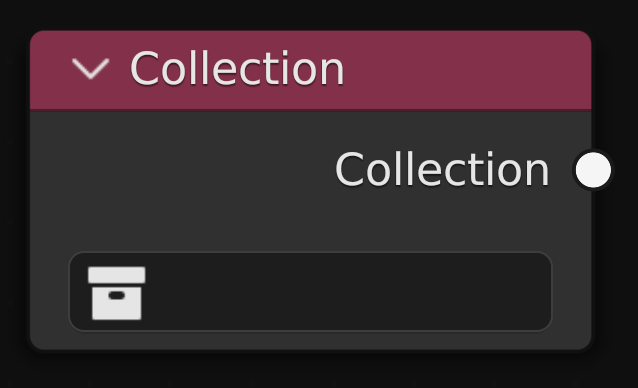

References a collection in the scene.

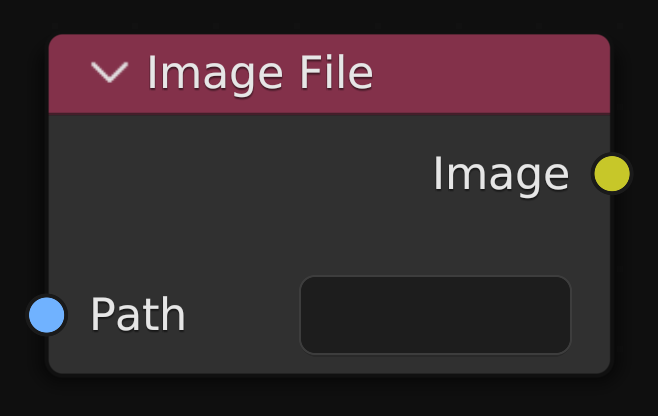

Opens the image at Path using OpenImageIO.

Note: This node is only available in Blender 3.5+.

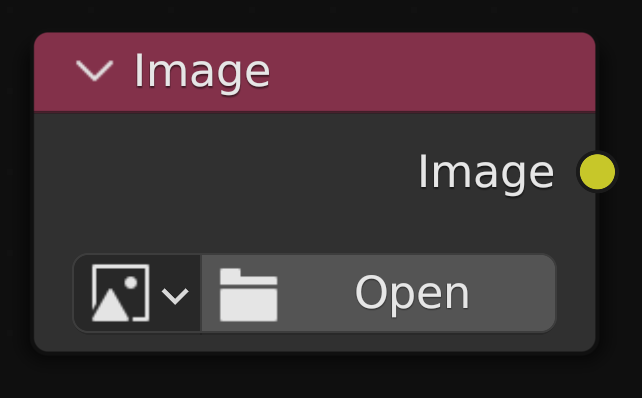

Passes the pixels of the selected image datablock.

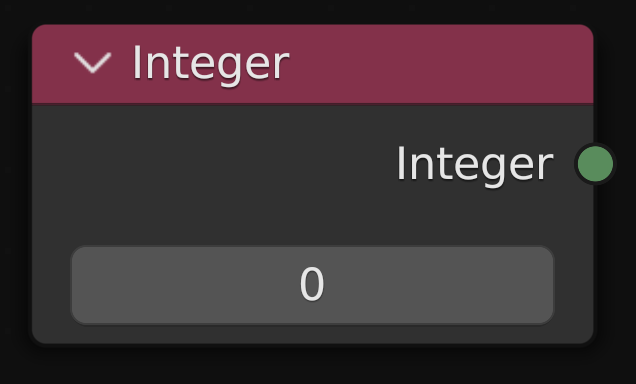

Passes the selected integer value to the next node.

Provides access to information about the render settings.

- Resolution X - The output width

- Resolution Y - The output height

- Output Filepath - The path to the folder where frames are saved

- Frame - The current frame number

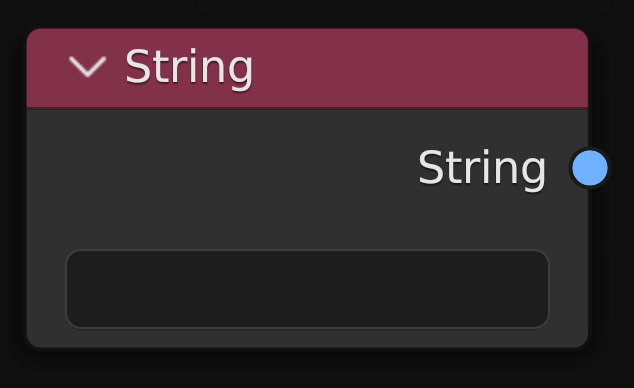

Passes the input string value to the next node.

Provides nodes for helpful operations like math, image manipulation, etc.

Restricts a value to be within certain bounds.

- Value - A number or image channel to clamp

- Min - The lowest allowed value

- Max - The highest allowed value

- Result - The Value clamped between the Min and Max
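As a rough illustration of the clamp described above, the sketch below handles both a single number and an image channel. It is plain Python written for this page, not the add-on's code; the function name and the nested-list representation of a channel are assumptions.

```python
def clamp(value, minimum, maximum):
    """Clamp a number, or every number in a nested list
    (standing in for an image channel), to [minimum, maximum]."""
    if isinstance(value, list):
        return [clamp(item, minimum, maximum) for item in value]
    return max(minimum, min(maximum, value))


print(clamp(1.5, 0.0, 1.0))                           # 1.0
print(clamp([[-0.5, 0.25], [2.0, 1.0]], 0.0, 1.0))    # [[0.0, 0.25], [1.0, 1.0]]
```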

Creates a full color image from the RGBA channels.

- Red - The red channel of the image

- Green - The green channel of the image

- Blue - The blue channel of the image

- Alpha - The alpha channel of the image

- Color - The final image with the channels combined
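Combining channels amounts to zipping four single-channel images into one image of RGBA pixels. The sketch below shows the idea in plain Python; `combine_color` and the list-of-rows layout are hypothetical and do not reflect how the node stores pixels internally.

```python
def combine_color(red, green, blue, alpha):
    """Zip four single-channel images (lists of rows) into one
    image whose pixels are (r, g, b, a) tuples."""
    return [
        list(zip(r_row, g_row, b_row, a_row))
        for r_row, g_row, b_row, a_row in zip(red, green, blue, alpha)
    ]


# One row, two pixels: an opaque red pixel and a half-transparent blue pixel.
color = combine_color([[1.0, 0.0]], [[0.0, 0.0]], [[0.0, 1.0]], [[1.0, 0.5]])
print(color)  # [[(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 1.0, 0.5)]]
```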

Checks a value against a condition.

- Operation - The comparison to make, such as Less Than, Equal, etc.

- A - The left side of the comparison

- B - The right side of the comparison

- Result - A boolean value indicating whether the value matched the condition
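The comparison can be pictured as a lookup from the Operation name to a two-argument predicate. This is a sketch only: "Less Than" and "Equal" come from the description above, while "Greater Than" and "Not Equal" are assumed names that may not match the node's actual options.

```python
import operator

# "Less Than" and "Equal" are named in the docs; the other two are assumptions.
OPERATIONS = {
    "Less Than": operator.lt,
    "Equal": operator.eq,
    "Greater Than": operator.gt,
    "Not Equal": operator.ne,
}


def compare(operation, a, b):
    """Return a boolean: does `a <operation> b` hold?"""
    return OPERATIONS[operation](a, b)


print(compare("Less Than", 3, 5))  # True
print(compare("Equal", 3, 5))      # False
```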

Extracts part of an image given a bounding box.

- Image - The image to crop

- X - The left edge of the crop area

- Y - The top edge of the crop area

- Width - The width of the crop area

- Height - The height of the crop area

- Cropped Image - The image cropped to the bounding box
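The bounding-box crop can be sketched with two slices. This example assumes the image is a list of rows with row 0 at the top, so that Y counts down from the top edge as described above; the real node may store pixels differently, and `crop_image` is a hypothetical name.

```python
def crop_image(image, x, y, width, height):
    """Return the `width` x `height` region whose top-left corner is (x, y).
    `image` is a list of rows; row 0 is assumed to be the top row."""
    return [row[x:x + width] for row in image[y:y + height]]


# A 4x4 test image where each pixel records its (row, column) position.
image = [[(r, c) for c in range(4)] for r in range(4)]
print(crop_image(image, 1, 2, 2, 1))  # [[(2, 1), (2, 2)]]
```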

Finds the output path for a given frame.

- Frame - The frame number to get

- Frame Path - The path to the output image at that frame

Stacks images across the given axis.

- Axis - Whether to stack Horizontal or Vertical

- A - The left/top image

- B - The right/bottom image

- Joined Images - The combined image, with the combined size of A and B
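Joining can be sketched as row-wise concatenation for Horizontal and list concatenation for Vertical. In this illustration, images are lists of rows with row 0 at the top, and mismatched sizes raise an error; how the node itself handles mismatched sizes is not documented here, so treat that behavior as an assumption.

```python
def join_images(a, b, axis):
    """Stack image `b` to the right of (Horizontal) or below (Vertical) image `a`.
    Images are lists of rows; row 0 is assumed to be the top row."""
    if axis == "Horizontal":
        if len(a) != len(b):
            raise ValueError("Horizontal join needs images of equal height")
        return [row_a + row_b for row_a, row_b in zip(a, b)]
    if axis == "Vertical":
        if a and b and len(a[0]) != len(b[0]):
            raise ValueError("Vertical join needs images of equal width")
        return a + b
    raise ValueError(f"Unknown axis: {axis}")


left = [[1, 2], [3, 4]]
right = [[5], [6]]
print(join_images(left, right, "Horizontal"))  # [[1, 2, 5], [3, 4, 6]]
```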

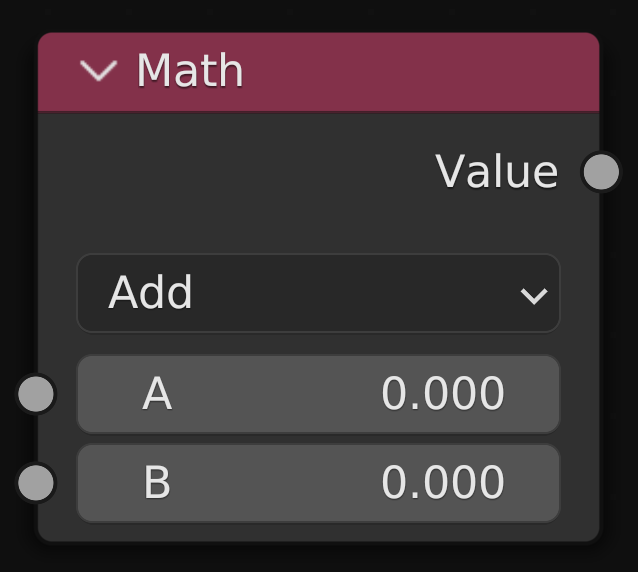

Perform common math operations.

Note: Math is not restricted to just numbers. Use the Math node to combine strings, images, and other data types.

- Operation - The math operation to perform

- A - The left operand

- B - The right operand

- Value - The result of the math operation
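To make the note about non-numeric operands concrete, the sketch below applies one operation to numbers, strings, and images. It is an assumption-laden illustration: `apply_math` is a hypothetical helper, images are modeled as nested lists combined element-wise, and the node's actual operation names and semantics may differ.

```python
import operator


def apply_math(op, a, b):
    """Apply `op` directly to numbers or strings, and element-wise
    to nested lists (standing in for images)."""
    if isinstance(a, list) and isinstance(b, list):
        return [apply_math(op, x, y) for x, y in zip(a, b)]
    return op(a, b)


print(apply_math(operator.add, 2, 3))                          # 5
print(apply_math(operator.add, "frame_", "0001"))              # frame_0001
print(apply_math(operator.add, [[0.25, 0.5]], [[0.25, 0.25]])) # [[0.5, 0.75]]
```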

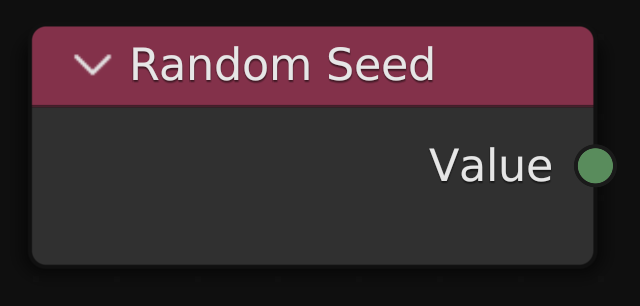

Gets a random positive integer to seed Stable Diffusion.

Note: Prefer this node over Random Value for generating seeds, as Random Value can only cover half of the possible values this node can produce.
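One plausible reading of the "half of the possible values" remark is that seeds span an unsigned 32-bit range while a general integer value is limited to the non-negative half of a signed 32-bit range. The sketch below works through that arithmetic; both bounds are assumptions, not values confirmed by the source.

```python
import random

MAX_SEED = 2**32 - 1        # assumed upper bound of the seed range
MAX_SIGNED_INT = 2**31 - 1  # largest value a signed 32-bit integer can hold


def random_seed(rng=random):
    """Return a random non-negative integer from the full assumed seed range."""
    return rng.randint(0, MAX_SEED)


# Fraction of the seed range reachable with a signed 32-bit maximum.
coverage = (MAX_SIGNED_INT + 1) / (MAX_SEED + 1)
print(coverage)  # 0.5
```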

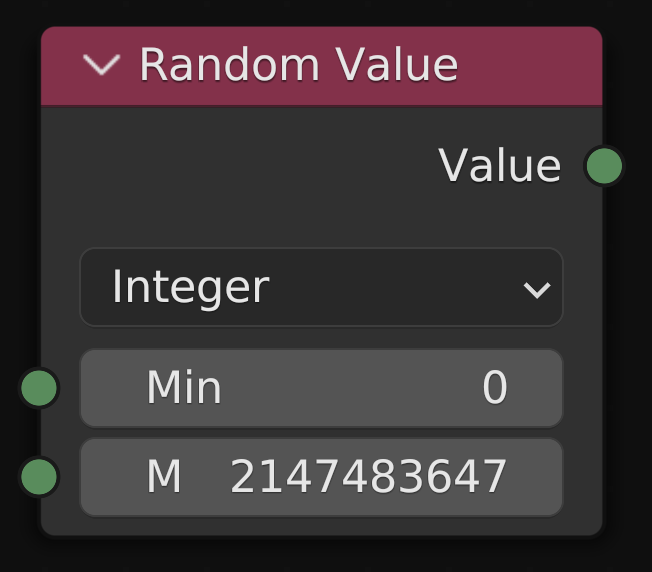

Gets a random value within the provided bounds.

Note: Prefer Random Seed for generating seed values, as this node can only produce half of the possible seed values.

- Data Type - The type of number to generate

- Min - The lowest allowed value

- Max - The highest allowed value

- Value - The randomly generated value

Performs a find and replace on the given string.
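A find and replace of this kind behaves like Python's built-in `str.replace`. The sketch below uses hypothetical parameter names (`string`, `find`, `replace`), which are not taken from the node's sockets, to show how a prompt template could be filled in.

```python
def replace_string(string, find, replace):
    """Replace every occurrence of `find` in `string` with `replace`."""
    return string.replace(find, replace)


prompt = replace_string("a photo of a {subject}", "{subject}", "red barn")
print(prompt)  # a photo of a red barn
```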
