This repo contains a very simple k8s deployment for:

- MLflow
- Argo CD
- Argo Workflow
- Argo Events
- Jupyter notebook
- TF Operator
- Seldon
- Istio
- MinIO
- Registry
- Git

Requirements:

- Access to Docker Hub, logged in via the console
- Access to Git and a deploy key with write access (https://github.com/{{account}}/{{repo}}/settings/keys/new)
- Access to S3 (the AWS CLI credentials will be injected)
- make
- DVC version 0.80.0 (brew)
- istioctl version 1.4.3 (brew)
- helm version 2.16.1, not 3.x (brew)
- Kubernetes version > 1.12 (Docker for Mac recommended)
- Docker version > 19.03.5 (Docker for Mac recommended)
- Kubernetes requirements: 10 CPU and 14 GB memory
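The tool requirements above can be checked before going further. This is a minimal sketch that only tests whether each command is on the PATH; it does not verify versions. `missing_tools` is a hypothetical helper, not part of this repo.

```shell
# Print the name of every given tool that is not found on the PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tools named in the requirements, plus the aws and git CLIs used later on.
missing=$(missing_tools make dvc istioctl helm kubectl docker aws git)
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
fi
```

An empty result means every command was found; version constraints such as helm 2.16.1 still need to be checked by hand.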

Check that you are logged in to the Docker registry via the CLI:

$ docker login

If you're already authenticated, it will show:

Authenticating with existing credentials...

Login Succeeded

Check that the domain docker.me has been added to your hosts file:

$ cat /etc/hosts

127.0.0.1 localhost docker.me
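Instead of reading the file by eye, the hosts check can be scripted. This is a minimal sketch, assuming the usual hosts format of an address followed by one or more names; `has_host` is a hypothetical helper.

```shell
# Succeed (exit 0) if the hostname appears on a non-comment line of the hosts file.
has_host() {
  host="$1"
  file="${2:-/etc/hosts}"
  # Strip comments, then compare each name field (field 1 is the address).
  sed 's/#.*//' "$file" |
    awk -v h="$host" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }'
}
```

For example, `has_host docker.me || echo "docker.me is missing from /etc/hosts"`.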

Check that your credentials are set via the AWS CLI:

$ aws configure get aws_access_key_id --profile {profile}

Verify the profile you want to use

$ cat ~/.aws/credentials

Most likely, at least the default profile will be present.
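To see only the profile names rather than the full credentials file, the section headers can be listed. This is a minimal sketch, assuming the standard INI layout with one `[profile]` header per section; `list_profiles` is a hypothetical helper, not an AWS CLI command.

```shell
# Print the profile names found in an AWS credentials file, one per line.
list_profiles() {
  file="${1:-$HOME/.aws/credentials}"
  # Keep only lines of the form [name] and print the name inside the brackets.
  sed -n 's/^\[\(.*\)\][[:space:]]*$/\1/p' "$file"
}
```

Any name it prints can be passed as `{profile}` to the `aws configure get` command above.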

Adding all data to a remote versioned environment

In your code repository, run:

$ dvc init

$ dvc remote add -d {name_remote} s3://{bucket}/{folder}

The -d flag makes {name_remote} the default remote.
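Once the remote is configured, data still has to be tracked and uploaded. The sketch below strings the two commands above together with `dvc add` and `dvc push`. The function name, its arguments, and the `DRY_RUN` switch are assumptions made for illustration, not part of this repo.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Initialise DVC, register the default S3 remote, track a data path and upload it.
# Arguments: remote name, bucket, folder, path of the data to track (all placeholders).
track_and_push() {
  remote="$1"; bucket="$2"; folder="$3"; data="$4"
  run dvc init
  run dvc remote add -d "$remote" "s3://$bucket/$folder"
  run dvc add "$data"   # writes a .dvc file that should be committed to git
  run dvc push          # uploads the tracked data to the default remote
}
```

Running `DRY_RUN=1 track_and_push myremote bucket folder data` prints the four commands without touching the repository.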

- Set your profile by ENV var for the right AWS account: `$ AWS_PROFILE=personal`
- Create an S3 bucket of your choice
- `$ S3_BUCKET=bucket HUB_ACCOUNT=account make build` should build all images needed for the project
- `$ HUB_ACCOUNT=account make push` should push the images to your Docker Hub
- `$ S3_BUCKET=bucket AWS_PROFILE=aws_profile GIT_REPO=account/repo HUB_ACCOUNT=account make create` should create the cluster
- `$ S3_BUCKET=bucket AWS_PROFILE=aws_profile GIT_REPO=account/repo HUB_ACCOUNT=account make delete` should delete the cluster
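Because each make target depends on several environment variables, it can help to fail early when one is missing. This is a minimal sketch; `require_vars` is a hypothetical helper and is not defined in the Makefile.

```shell
# Succeed only if every named environment variable is set and non-empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

With the variables exported, a guarded call would look like `require_vars S3_BUCKET AWS_PROFILE GIT_REPO HUB_ACCOUNT && make create`.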

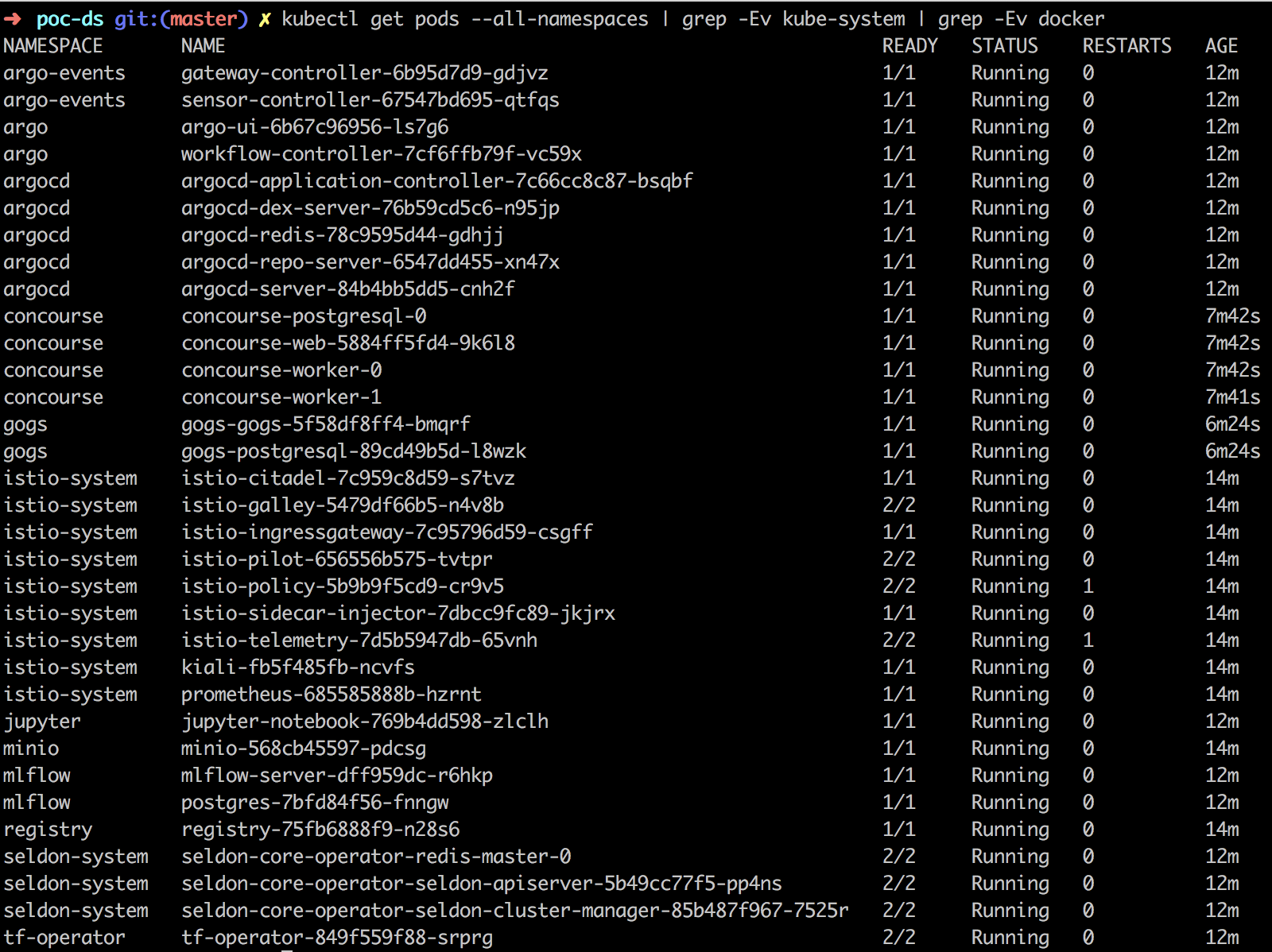

When everything has been executed, following pods should be shown:

TODO: when deleting the cluster it will raise errors on the istio removal, not sure if I want to fix it

TODO: The following might be needed for proper publishing via the MLflow server: S3_ENDPOINT=s3-eu-west-1.amazonaws.com AWS_REGION=eu-west-1

TODO: Change everything to MinIO, registry and Gogs for development purposes

Log in to Argo CD with the username admin; the password is the name of the argocd-server pod:

$ kubectl get pods -n argocd | grep argocd-server | awk '{print $1}'
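The same lookup can be wrapped in a function so the password is easy to capture in a variable. This is a minimal sketch, assuming a single argocd-server pod in the argocd namespace; `argocd_password` is a hypothetical helper name.

```shell
# Print the name of the first argocd-server pod, which doubles as the admin password.
argocd_password() {
  kubectl get pods -n argocd --no-headers | awk '/argocd-server/ { print $1; exit }'
}
```

For example, `ARGOCD_PASSWORD=$(argocd_password)` stores the value for later use.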

The Kiali login uses the default username and password: admin / admin