Cache readable item keystroke representations #97

|

I checked the behavior, especially to see how the UX is improved. I even merged the two solutions (this PR and #98) and made them switchable by command so I could compare them quickly, many times over. My impression is that the UX is improved by this PR, but #98 is far snappier for the initial keystroke on initial open.

I also notice a strange (not snappy) feeling while typing rapidly. I hope you will compare the two approaches in a realistic situation (invoking a real command immediately after the Atom window opens). |

|

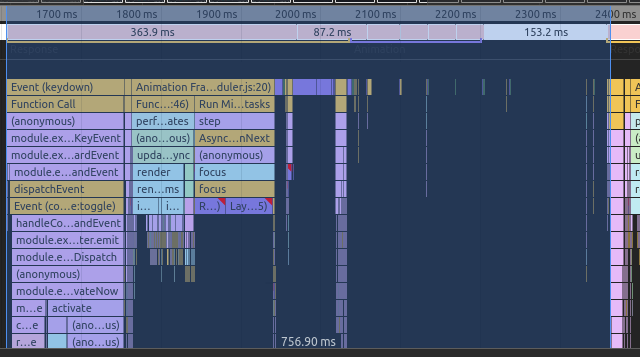

Wow, nice work! I love seeing these kinds of performance improvements. I think @t9md has a point. By deferring the rendering of the additional commands, we potentially interfere with keystrokes that may follow. It's easy to see in the timeline tab. Expand the interaction events and I bet you'll see rendering overlapping with key press events. |

|

Thanks for the tip @leroix! I have tested while looking at the interactions, and there is some overlap, but based on my comparisons to the master branch the overlap does not seem to be introduced by this PR. This makes sense because the time from when the palette becomes visible until all items are loaded is initially around 200ms (around average human reaction time), which is about as fast as I can move my hands from The typing experience from this PR should be on par with the master branch, but typing in #98 surpasses both because of the low number of items. There are some issues however, most notably the delay when pressing the |

|

@t9md @nathansobo I have tracked the cause of the weird feeling when typing down to the generation of humanized keybindings (the blue boxes to the right in the palette). I've fixed it in this PR, and I now believe this PR is the best solution to #80. I've made a recording that shows the performance across several scenarios. There are 700 commands registered (it starts with a total refresh to flush the cache): https://i.imgur.com/NAMx0ME.gifv |

|

@jarle I checked out your branch and compared it to the current master. While your solution does cause the command palette to appear faster, it actually increases the time to fully load the list of commands by about 50-60ms on the initial load. This is mostly due to the rather large reflow in |

|

@leroix The time to fully load the list increases, but I would argue that the perceived delay from the user's perspective becomes much shorter. This is because, after the initial loading of the palette, the user will be able to reach for the keys they want to press in parallel with the loading of the rest of the items. I measured how long it took for me to start typing in both master and this branch: Because the palette appears earlier, I start typing earlier. But I'm unable to move my hands into position fast enough to interfere with the loading of the last items. And I like to think I'm not a 🐢 with the keyboard 😄 The reflow caused by |

|

Let me ask. As I understand it, your caching PR #94 is meant to avoid recreating item elements on keyboard navigation( So I also want to know whether this PR introduces a noticeable performance improvement as well? |

|

@t9md #94 causes subsequent calls to These changes provide very noticeable improvements on the current version of command palette, but I understand that the improvements probably won't be as obvious after #101 and atom/atom-select-list#22 are merged. With that said, I don't see any downsides in keeping the cache for now. |

|

@jarle can you merge or rebase this with the latest master just to make sure the tests still pass? |

c20d09a to 034b30e

|

@leroix Squashed and rebased! |

|

Thanks! |

This PR implements caching of the "humanized" keystrokes for each item. Previously the keystroke representation would be recalculated whenever the query changed, which would cause a slight delay when typing.
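For readers who want a concrete picture of the idea, here is a minimal sketch of that kind of cache. It is not the code from this PR: `humanizeKeystrokes` and `KeystrokeLabelCache` are hypothetical names, and the label format is made up for illustration. It only shows the general technique of computing a readable label once per keystroke string and reusing it on every later query change.

```typescript
// Hypothetical stand-in for the real humanizing step. The actual function
// used by the package, and its output format, are not shown in this thread.
function humanizeKeystrokes(keystrokes: string): string {
  return keystrokes
    .split(" ") // separate the chords of a multi-chord binding
    .map(chord =>
      chord
        .split("-") // separate modifiers from the key
        .map(key => key.charAt(0).toUpperCase() + key.slice(1))
        .join("+")
    )
    .join(" ");
}

// Caches the readable label per raw keystroke string, so filtering the list
// on every query change does not recompute labels that were already built.
class KeystrokeLabelCache {
  private readonly labels = new Map<string, string>();
  private readonly humanize: (keystrokes: string) => string;

  constructor(humanize: (keystrokes: string) => string) {
    this.humanize = humanize;
  }

  get(keystrokes: string): string {
    let label = this.labels.get(keystrokes);
    if (label === undefined) {
      // First request for this binding: compute once and remember it.
      label = this.humanize(keystrokes);
      this.labels.set(keystrokes, label);
    }
    return label;
  }

  // Assumption: the cache is dropped whenever keybindings may have changed,
  // for example on a total refresh of the item list.
  clear(): void {
    this.labels.clear();
  }
}

const labelCache = new KeystrokeLabelCache(humanizeKeystrokes);
const label = labelCache.get("ctrl-shift-p"); // computed on first use
const again = labelCache.get("ctrl-shift-p"); // served from the cache
```

The trade-off is a small amount of memory per distinct binding, plus the need to clear the cache when keymaps change. When to invalidate is an assumption here and depends on how the package tracks keybinding updates.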