
Support Task Flow and enhance dynamic task mapping (#314)

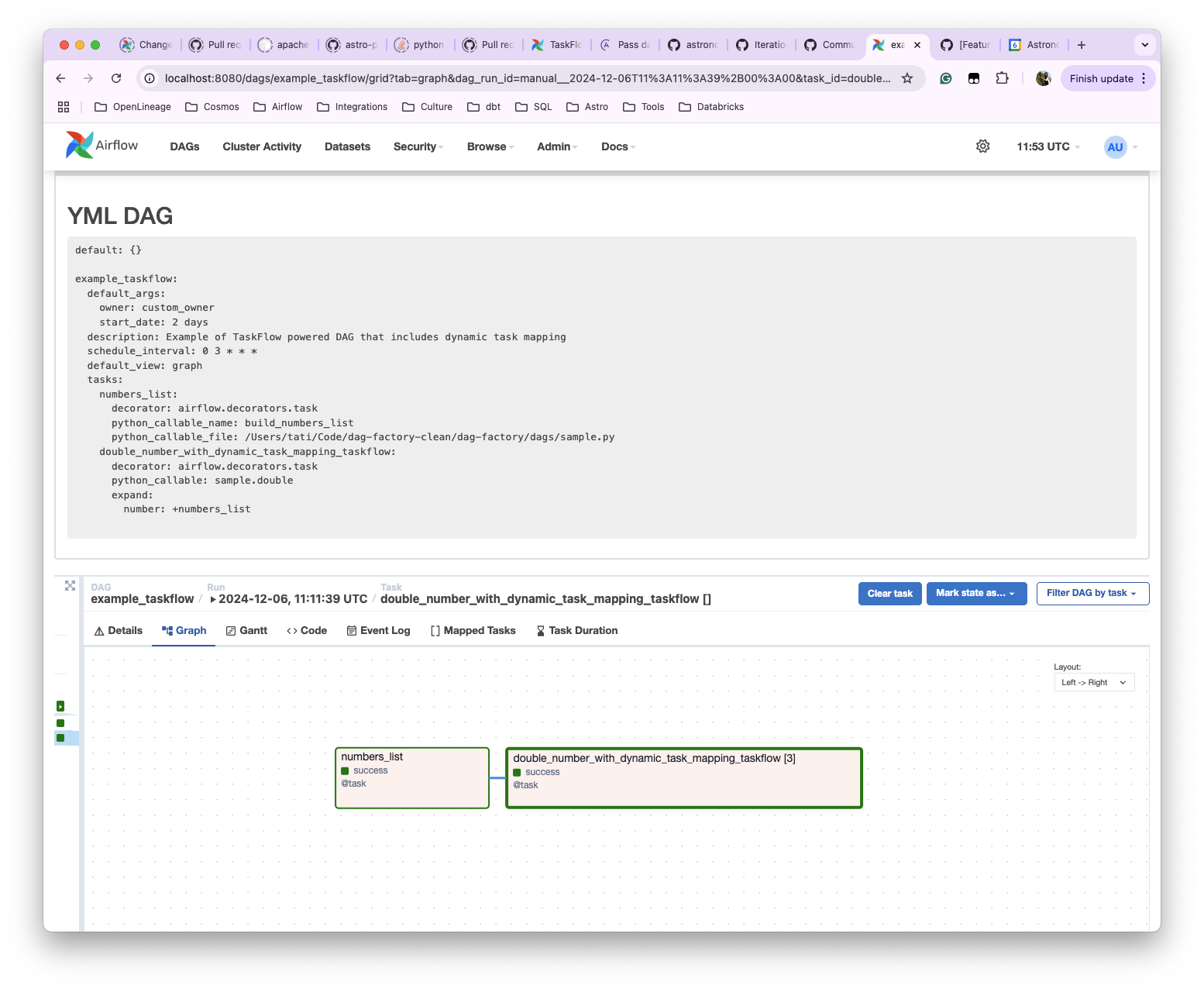

Implement support for [Airflow TaskFlow](https://airflow.apache.org/docs/apache-airflow/stable/core-concepts/taskflow.html), available since Airflow 2.0.

# How to test

The following example defines a task that generates a list of numbers and a second task that consumes this list. Using Airflow dynamic task mapping, the second task creates one independent task instance per element of the list, each of which doubles its number.

```yaml
example_taskflow:
  default_args:
    owner: "custom_owner"
    start_date: 2 days
  description: "Example of TaskFlow powered DAG that includes dynamic task mapping."
  schedule_interval: "0 3 * * *"
  default_view: "graph"
  tasks:
    numbers_list:
      decorator: airflow.decorators.task
      python_callable: sample.build_numbers_list
    double_number_with_dynamic_task_mapping_taskflow:
      decorator: airflow.decorators.task
      python_callable: sample.double
      expand:
        number: +numbers_list # the prefix + tells DagFactory to resolve this value as the task `numbers_list`, previously defined
```

With the following `sample.py` file:

```python
def build_numbers_list():
    return [2, 4, 6]


def double(number: int):
    result = 2 * number
    print(result)
    return result
```

In the UI, the resulting DAG is shown in two screenshots (not preserved here).

# Scope

This PR covers several use cases of [dynamic task mapping](https://airflow.apache.org/docs/apache-airflow/2.10.3/authoring-and-scheduling/dynamic-task-mapping.html):

1. Simple mapping
2. Task-generated mapping
3. Repeated mapping
4. Adding parameters that do not expand (`partial`)
5. Mapping over multiple parameters
6. Named mapping (`map_index_template`)

The following dynamic task mapping cases were not tested but are expected to work:

* Mapping with non-TaskFlow operators
* Mapping over the result of classic operators
* Filtering items from a mapped task

The following dynamic task mapping cases were not tested and are not expected to work (they were considered out of scope for the current ticket):

* Assigning multiple parameters to a non-TaskFlow operator
* Mapping over a task group
* Transforming expanding data
* Combining upstream data (aka "zipping")

# Tests

The feature is tested by running the example DAGs introduced in this PR, which validate various TaskFlow and dynamic task mapping scenarios and also serve as documentation. As with other parts of DAG Factory, we can and should improve the overall unit test coverage.

Two example DAG files were added, containing multiple examples of TaskFlow and dynamic task mapping. This is how they are displayed in the Airflow UI:

<img width="1501" alt="Screenshot 2024-12-06 at 16 11 10" src="https://github.com/user-attachments/assets/c4d12520-31f5-4b9d-b191-dd37523299e1">
<img width="1500" alt="Screenshot 2024-12-06 at 16 11 42" src="https://github.com/user-attachments/assets/ab08749f-aedb-4c8f-9df1-8f0d0451477d">
<img width="1510" alt="Screenshot 2024-12-06 at 16 11 32" src="https://github.com/user-attachments/assets/591e949a-49da-49f6-8d4d-1458fbb88d7f">

# Docs

This PR does not contain user-facing docs other than the README. We'll address this as part of #278.

# Related issues

This PR closes two open tickets:

Closes: #302 (support named mapping via the `map_index_template` argument)

Example usage of `map_index_template`:

```yaml
dynamic_task_with_named_mapping:
  decorator: airflow.decorators.task
  python_callable: sample.extract_last_name
  map_index_template: "{{ custom_mapping_key }}"
  expand:
    full_name:
      - Lucy Black
      - Vera Santos
      - Marks Spencer
```

Closes: #301 (mapping over multiple parameters)

Example of mapping over multiple parameters:

```yaml
multiply_with_multiple_parameters:
  decorator: airflow.decorators.task
  python_callable: sample.multiply
  expand:
    a: +numbers_list # the prefix + tells DagFactory to resolve this value as the task `numbers_list`, previously defined
    b: +another_numbers_list # the prefix + tells DagFactory to resolve this value as the task `another_numbers_list`, previously defined
```
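Mapping over several parameters, as in `multiply_with_multiple_parameters`, follows Airflow's cross-product semantics: every combination of the expanded values becomes its own mapped task instance, while `partial` arguments are passed unchanged to all of them. The pure-Python sketch below emulates that behaviour locally, without Airflow, so the expected results of the example can be checked by hand. `simulate_mapping` is a hypothetical helper (not part of DagFactory or Airflow), and the body of `sample.multiply` is an assumption because the PR does not show it.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Optional


def simulate_mapping(
    func: Callable[..., Any],
    expand: Dict[str, List[Any]],
    partial: Optional[Dict[str, Any]] = None,
) -> List[Any]:
    """Call `func` once per combination of the `expand` values (hypothetical helper).

    Emulates Airflow's behaviour for mapping over multiple parameters: one call
    per element of the cross product of the expanded lists, with `partial`
    arguments shared by every call.
    """
    fixed = dict(partial or {})
    names = list(expand)
    results = []
    for values in product(*(expand[name] for name in names)):
        kwargs = {**fixed, **dict(zip(names, values))}
        results.append(func(**kwargs))
    return results


def multiply(a: int, b: int) -> int:
    # Hypothetical body for sample.multiply, which the PR does not show.
    return a * b


numbers_list = [2, 4, 6]          # output of sample.build_numbers_list
another_numbers_list = [2, 4, 6]  # example_taskflow.yml uses the same callable

products = simulate_mapping(multiply, {"a": numbers_list, "b": another_numbers_list})
print(len(products))  # 3 values of a x 3 values of b
print(products)
```

With both upstream tasks returning `[2, 4, 6]`, the multiply task yields nine mapped instances rather than three; pairing values element-wise ("zipping") is listed above as out of scope for this PR.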


Showing 7 changed files with 316 additions and 79 deletions.


`@@ -0,0 +1,16 @@`

```python
import os
from pathlib import Path

# The following import is here so Airflow parses this file
# from airflow import DAG
import dagfactory

DEFAULT_CONFIG_ROOT_DIR = "/usr/local/airflow/dags/"
CONFIG_ROOT_DIR = Path(os.getenv("CONFIG_ROOT_DIR", DEFAULT_CONFIG_ROOT_DIR))

config_file = str(CONFIG_ROOT_DIR / "example_map_index_template.yml")
example_dag_factory = dagfactory.DagFactory(config_file)

# Creating task dependencies
example_dag_factory.clean_dags(globals())
example_dag_factory.generate_dags(globals())
```


`@@ -0,0 +1,18 @@`

```yaml
# Requires Airflow 2.7 or higher
example_map_index_template:
  default_args:
    owner: "custom_owner"
    start_date: 2 days
  description: "Example of TaskFlow powered DAG that includes dynamic task mapping"
  schedule_interval: "0 3 * * *"
  default_view: "graph"
  tasks:
    dynamic_task_with_named_mapping:
      decorator: airflow.decorators.task
      python_callable: sample.extract_last_name
      map_index_template: "{{ custom_mapping_key }}"
      expand:
        full_name:
          - Lucy Black
          - Vera Santos
          - Marks Spencer
```
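With `map_index_template`, Airflow labels each mapped task instance by rendering the template against that instance's context after it runs, so `sample.extract_last_name` has to store `custom_mapping_key` in the context. The PR does not show that callable; the sketch below is a hypothetical version that emulates the flow locally, using a plain dict in place of the Airflow context and a small regex in place of Jinja rendering. In a real task, the context would instead come from `airflow.operators.python.get_current_context()`.

```python
import re

# Stand-in for Airflow's Jinja rendering: only supports bare "{{ name }}" placeholders.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, context: dict) -> str:
    """Substitute each {{ name }} placeholder with context[name]."""
    return _PLACEHOLDER.sub(lambda match: str(context[match.group(1)]), template)


def extract_last_name(full_name: str, context: dict) -> str:
    """Hypothetical body for sample.extract_last_name.

    In Airflow the callable would take only `full_name` and fetch the context
    with get_current_context() rather than receiving it as an argument.
    """
    last_name = full_name.split()[-1]
    context["custom_mapping_key"] = last_name  # read later by map_index_template
    return last_name


def run_named_mapping(full_names, template="{{ custom_mapping_key }}"):
    """Return the UI label each mapped instance would get (local emulation)."""
    labels = []
    for full_name in full_names:
        context = {}  # every mapped task instance has its own context
        extract_last_name(full_name, context)
        labels.append(render(template, context))
    return labels


print(run_named_mapping(["Lucy Black", "Vera Santos", "Marks Spencer"]))
```

Without a template, the mapped instances would appear in the UI only by their numeric map index; with it, they appear as `Black`, `Santos` and `Spencer`.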


`@@ -0,0 +1,16 @@`

```python
import os
from pathlib import Path

# The following import is here so Airflow parses this file
# from airflow import DAG
import dagfactory

DEFAULT_CONFIG_ROOT_DIR = "/usr/local/airflow/dags/"
CONFIG_ROOT_DIR = Path(os.getenv("CONFIG_ROOT_DIR", DEFAULT_CONFIG_ROOT_DIR))

config_file = str(CONFIG_ROOT_DIR / "example_taskflow.yml")
example_dag_factory = dagfactory.DagFactory(config_file)

# Creating task dependencies
example_dag_factory.clean_dags(globals())
example_dag_factory.generate_dags(globals())
```


`@@ -0,0 +1,52 @@`

```yaml
example_taskflow:
  default_args:
    owner: "custom_owner"
    start_date: 2 days
  description: "Example of TaskFlow powered DAG that includes dynamic task mapping"
  schedule_interval: "0 3 * * *"
  default_view: "graph"
  tasks:
    some_number:
      decorator: airflow.decorators.task
      python_callable: sample.some_number
    numbers_list:
      decorator: airflow.decorators.task
      python_callable_name: build_numbers_list
      python_callable_file: $CONFIG_ROOT_DIR/sample.py
    another_numbers_list:
      decorator: airflow.decorators.task
      python_callable: sample.build_numbers_list
    double_number_from_arg:
      decorator: airflow.decorators.task
      python_callable: sample.double
      number: 2
    double_number_from_task:
      decorator: airflow.decorators.task
      python_callable: sample.double
      number: +some_number # the prefix + leads to resolving this value as the task `some_number`, previously defined
    double_number_with_dynamic_task_mapping_static:
      decorator: airflow.decorators.task
      python_callable: sample.double
      expand:
        number:
          - 1
          - 3
          - 5
    double_number_with_dynamic_task_mapping_taskflow:
      decorator: airflow.decorators.task
      python_callable: sample.double
      expand:
        number: +numbers_list # the prefix + tells DagFactory to resolve this value as the task `numbers_list`, previously defined
    multiply_with_multiple_parameters:
      decorator: airflow.decorators.task
      python_callable: sample.multiply
      expand:
        a: +numbers_list # the prefix + tells DagFactory to resolve this value as the task `numbers_list`, previously defined
        b: +another_numbers_list # the prefix + tells DagFactory to resolve this value as the task `another_numbers_list`, previously defined
    double_number_with_dynamic_task_and_partial:
      decorator: airflow.decorators.task
      python_callable: sample.double_with_label
      expand:
        number: +numbers_list # the prefix + tells DagFactory to resolve this value as the task `numbers_list`, previously defined
      partial:
        label: True
```
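`example_taskflow.yml` references five callables from `sample.py`, but the PR only shows `build_numbers_list` and `double`. The sketch below fills in hypothetical bodies for `some_number`, `multiply` and `double_with_label` (inferred only from their names and arguments) and adds a hypothetical `resolve_refs` helper that illustrates the `+` prefix: a `+task_id` value is replaced by the output of a previously defined task. This is a local, Airflow-free emulation, not DagFactory's implementation; in Airflow the resolved value would be the upstream task's output reference rather than an already computed value.

```python
from typing import Any, Dict


def some_number() -> int:
    # Hypothetical body: the PR does not show sample.some_number.
    return 1


def build_numbers_list():
    # From the PR description.
    return [2, 4, 6]


def double(number: int):
    # From the PR description.
    result = 2 * number
    print(result)
    return result


def multiply(a: int, b: int) -> int:
    # Hypothetical body inferred from the task name and its a/b parameters.
    return a * b


def double_with_label(number: int, label: bool = False):
    # Hypothetical body inferred from the `partial: label: True` argument.
    result = 2 * number
    return f"{number} x 2 = {result}" if label else result


def resolve_refs(kwargs: Dict[str, Any], outputs: Dict[str, Any]) -> Dict[str, Any]:
    """Replace "+task_id" strings with the output of an earlier task (hypothetical)."""
    resolved = {}
    for name, value in kwargs.items():
        if isinstance(value, str) and value.startswith("+"):
            task_id = value[1:]
            if task_id not in outputs:
                # Mirrors the "previously defined" requirement in the YAML comments.
                raise KeyError(f"task {task_id!r} must be defined before it is referenced")
            resolved[name] = outputs[task_id]
        else:
            resolved[name] = value
    return resolved


# Run some tasks of example_taskflow.yml in declaration order.
outputs: Dict[str, Any] = {}
outputs["some_number"] = some_number()
outputs["numbers_list"] = build_numbers_list()
outputs["double_number_from_arg"] = double(**resolve_refs({"number": 2}, outputs))
outputs["double_number_from_task"] = double(**resolve_refs({"number": "+some_number"}, outputs))

# A mapped task receives the resolved list and runs once per element,
# with the `partial` argument applied to every run.
numbers = resolve_refs({"number": "+numbers_list"}, outputs)["number"]
labelled = [double_with_label(number=n, label=True) for n in numbers]
print(labelled)
```

Raising on an unknown reference keeps a typo such as `+number_list` from silently passing the literal string to the callable.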
