Semantic search and workflows for medical/scientific papers

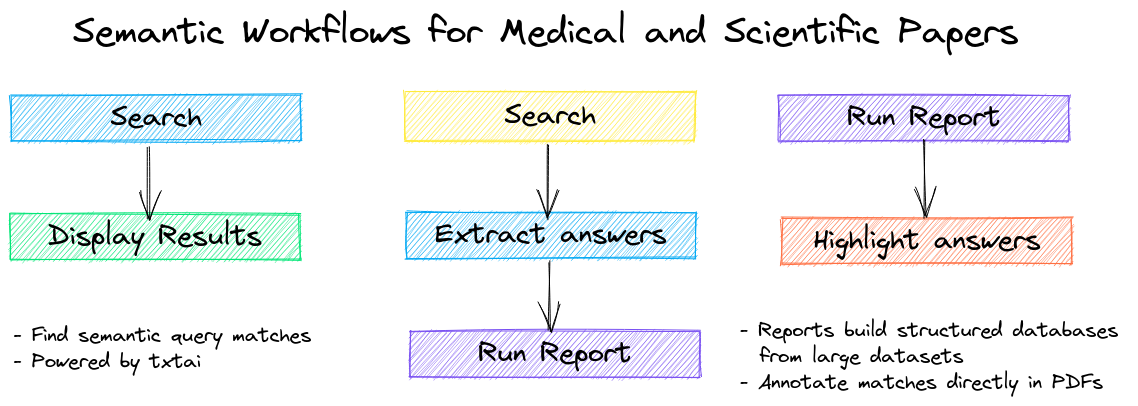

paperai is a semantic search and workflow application for medical/scientific papers.

Applications range from semantic search indexes that find matches for medical/scientific queries to full-fledged reporting applications powered by machine learning.

paperai and/or NeuML has been recognized in the following articles:

- Machine-Learning Experts Delve Into 47,000 Papers on Coronavirus Family

- Data scientists assist medical researchers in the fight against COVID-19

- CORD-19 Kaggle Challenge Awards

The easiest way to install is via pip and PyPI:

pip install paperai

Python 3.8+ is supported. Using a Python virtual environment is recommended.

paperai can also be installed directly from GitHub to access the latest, unreleased features.

pip install git+https://github.com/neuml/paperai

See this link to help resolve environment-specific install issues.
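As a quick sanity check after installing, the sketch below confirms that the interpreter meets the Python 3.8+ requirement and reports whether a `paperai` distribution is visible to it. The helper names `python_supported` and `installed_version` are illustrative and not part of paperai.

```python
import sys
from importlib import metadata


def python_supported(version_info=sys.version_info):
    """Return True when the interpreter meets the Python 3.8+ requirement."""
    return tuple(version_info[:2]) >= (3, 8)


def installed_version(package="paperai"):
    """Return the installed version string, or None when the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("Python supported:", python_supported())
    print("paperai version:", installed_version() or "not installed")
```

Running this inside the virtual environment used for the install shows whether pip placed the package where that interpreter can find it.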

Run the steps below to build a docker image with paperai and all dependencies.

wget https://raw.githubusercontent.com/neuml/paperai/master/docker/Dockerfile

docker build -t paperai .

docker run --name paperai --rm -it paperai

paperetl can be added to produce a single image that both indexes and queries content. Follow the instructions to build a paperetl docker image and then run the following.

docker build -t paperai --build-arg BASE_IMAGE=paperetl --build-arg START=/scripts/start.sh .

docker run --name paperai --rm -it paperai

The following notebooks and applications demonstrate the capabilities provided by paperai.

| Notebook | Description |
|---|---|
| Introducing paperai | Overview of the functionality provided by paperai |

| Application | Description |
|---|---|
| Search | Search a paperai index. Set query parameters, execute searches and display results. |

paperai indexes databases previously built with paperetl. The following shows how to create a new paperai index.

- (Optional) Create an index.yml file

  paperai uses the default txtai embeddings configuration when no configuration is specified. Alternatively, an index.yml file can be specified that takes all the same options as a txtai embeddings instance. See the txtai documentation for more on the possible options. A simple example is shown below.

      path: sentence-transformers/all-MiniLM-L6-v2
      content: True

- Build embeddings index

python -m paperai.index <path to input data> <optional index configuration>

The paperai.index process requires an input data path and optionally takes index configuration. This configuration can either be a vector model path or an index.yml configuration file.
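The two steps above can also be scripted. The following is a minimal sketch, not part of paperai: it writes the example index.yml and assembles the `python -m paperai.index` command line shown earlier. The helper names and the placeholder data path are assumptions for illustration.

```python
import sys
from pathlib import Path

# Same two settings as the index.yml example in this section
INDEX_YML = "path: sentence-transformers/all-MiniLM-L6-v2\ncontent: True\n"


def write_index_config(directory):
    """Write index.yml into the given directory and return its path."""
    path = Path(directory) / "index.yml"
    path.write_text(INDEX_YML, encoding="utf-8")
    return path


def index_command(data_path, config=None):
    """Build the argument list for `python -m paperai.index`."""
    command = [sys.executable, "-m", "paperai.index", str(data_path)]
    if config is not None:
        # Either a vector model path or an index.yml configuration file
        command.append(str(config))
    return command


# Placeholder path; replace with a database previously built with paperetl
print(index_command("path/to/input/data", "index.yml"))
```

The returned list can be passed to `subprocess.run(..., check=True)` once paperai is installed and the input data path points at a real paperetl database.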

The fastest way to run queries is to start a paperai shell:

paperai <path to model directory>

A prompt will come up. Queries can be typed directly into the console.

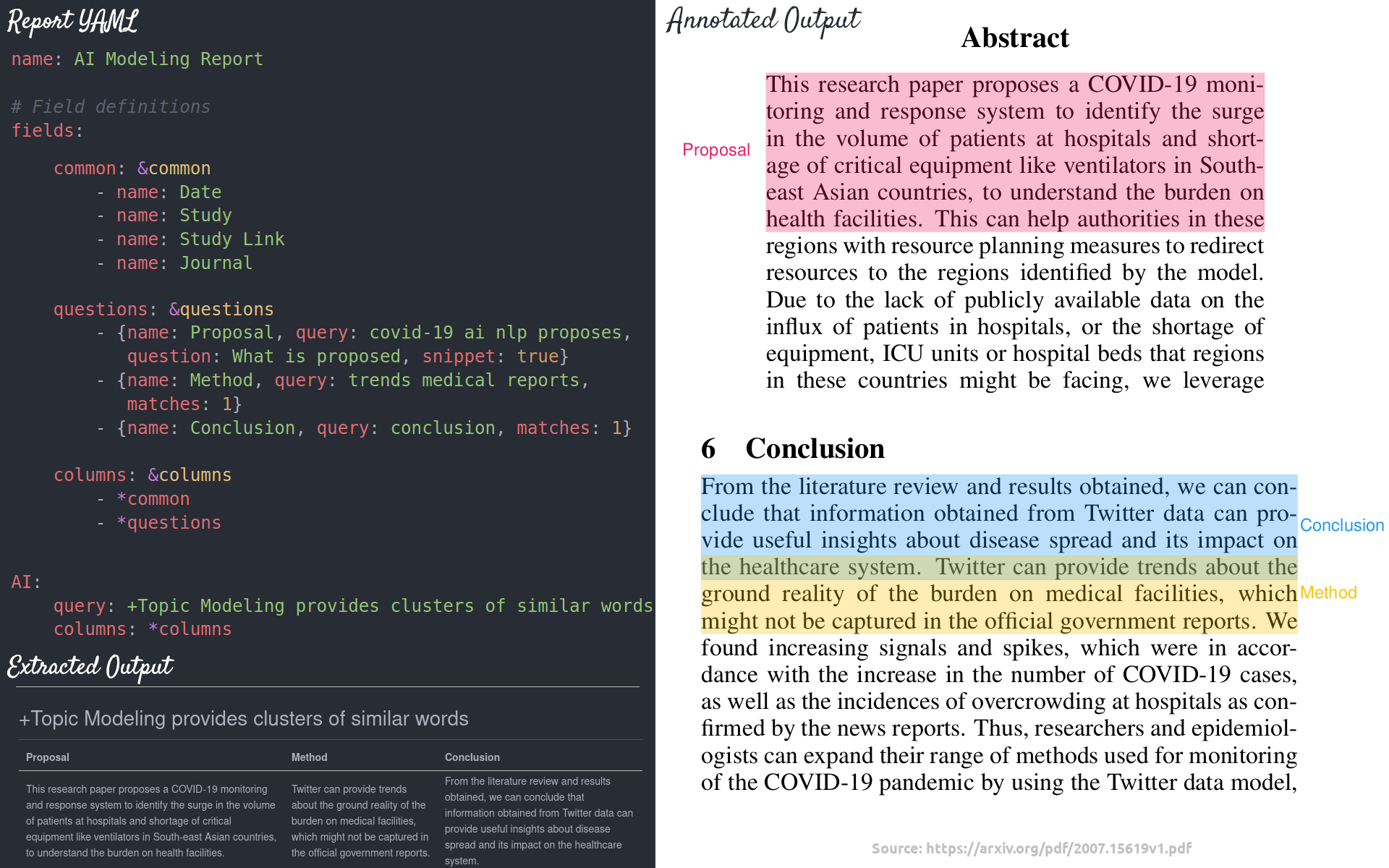

Reports support generating output in multiple formats. An example report call:

python -m paperai.report report.yml 50 md <path to model directory>

The following report formats are supported:

- Markdown (Default) - Renders a Markdown report. Columns and answers are extracted from articles with the results stored in a Markdown file.

- CSV - Renders a CSV report. Columns and answers are extracted from articles with the results stored in a CSV file.

- Annotation - Columns and answers are extracted from articles with the results annotated over the original PDF files. Requires passing in a path with the original PDF files.

In the example above, a file named report.md will be created. Example report configuration files can be found here.
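The report call above can be assembled from Python when reports are generated in batches. This is a sketch under assumptions: the argument order mirrors the example command, the second argument (50 in the example) is assumed to be the number of results per query, and the output name is assumed to be the report configuration name with the format as its extension, as with report.md. The helper names are hypothetical.

```python
import sys
from pathlib import Path


def report_command(report_yml, topn, fmt, model_path):
    """Build the argument list for `python -m paperai.report`."""
    return [
        sys.executable, "-m", "paperai.report",
        str(report_yml), str(topn), fmt, str(model_path),
    ]


def expected_output(report_yml, fmt):
    """Assumed output file name: the configuration name with the format as extension."""
    return Path(report_yml).with_suffix("." + fmt).name


print(expected_output("report.yml", "md"))  # report.md
```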

paperai is a combination of a txtai embeddings index and a SQLite database with the articles. Each article is parsed into sentences and stored in SQLite along with the article metadata. Embeddings are built over the full corpus.
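To make the storage side of this design concrete, here is a minimal sketch of articles split into sentences and stored in SQLite next to their metadata. The schema, the naive sentence splitter and the sample article are made up for illustration and are not the actual paperetl/paperai tables. The embeddings index, which txtai would build over the corpus, is left out.

```python
import re
import sqlite3

# Hypothetical schema for illustration only
SCHEMA = """
CREATE TABLE articles (id TEXT PRIMARY KEY, title TEXT, published TEXT);
CREATE TABLE sentences (
    id INTEGER PRIMARY KEY,
    article TEXT REFERENCES articles(id),
    text TEXT
);
"""


def split_sentences(text):
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def store_article(db, uid, title, published, body):
    """Insert the article metadata plus one row per sentence."""
    db.execute("INSERT INTO articles VALUES (?, ?, ?)", (uid, title, published))
    db.executemany(
        "INSERT INTO sentences (article, text) VALUES (?, ?)",
        [(uid, sentence) for sentence in split_sentences(body)],
    )
    db.commit()


db = sqlite3.connect(":memory:")
db.executescript(SCHEMA)

# Made-up sample article
store_article(db, "a1", "Example study", "2020-03-01",
              "Fever was common. Cough was less frequent.")

# Each sentence row links back to its article metadata
rows = db.execute(
    "SELECT a.title, s.text FROM sentences s "
    "JOIN articles a ON a.id = s.article ORDER BY s.id"
).fetchall()
print(rows)
```

Keeping sentences in their own table means a sentence-level search hit can be joined back to its article title and date with a single query.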

Multiple entry points exist to interact with the model.

- paperai.report - Builds a report for a series of queries. For each query, the top scoring articles are shown along with matches from those articles. There is also a highlights section showing the most relevant results.

- paperai.query - Runs a single query from the terminal

- paperai.shell - Allows running multiple queries from the terminal
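The grouping described for paperai.report, top scoring articles shown with their matches, can be sketched in plain Python. This is an illustration only: ranking articles by their best match score is a simplification chosen for the sketch, not paperai's actual scoring, and the matches are made-up data.

```python
from collections import defaultdict


def top_articles(matches, topn):
    """Group (article, text, score) matches by article and rank the articles.

    Articles are ranked by their best match score; matches within an
    article are listed from highest to lowest score.
    """
    grouped = defaultdict(list)
    for article, text, score in matches:
        grouped[article].append((score, text))

    ranked = sorted(
        grouped.items(),
        key=lambda item: max(score for score, _ in item[1]),
        reverse=True,
    )
    return [
        (article, [text for _, text in sorted(hits, key=lambda hit: hit[0], reverse=True)])
        for article, hits in ranked[:topn]
    ]


# Made-up matches: (article id, matching sentence, similarity score)
matches = [
    ("a1", "Fever was common.", 0.82),
    ("a2", "Masks reduced transmission.", 0.91),
    ("a1", "Cough was less frequent.", 0.64),
]
print(top_articles(matches, 1))
```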