Releases: appl-team/appl

v0.2.1

[v0.2.1] Change default server settings (add small and large), enable environment variables to configure APPL, update cursorrules

- Default small and large models can now be configured, unifying the interface (server name) for developers and users.

- Enable using environment variables to update APPL configs (requires `jsonargparse`).

- Update cursorrules to make better use of Cursor when composing code.

- Update docs for setting up observability platforms.

- Support caching requests whose `response_format` is a Pydantic model.

- Add `.env.example` to help set up new projects.

- Add a hint when Langfuse or Lunary is not configured.
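The idea of updating configs from environment variables can be pictured with a small stand-alone sketch. It assumes a hypothetical naming scheme (an `APPL_` prefix, with `__` separating nested keys) and does not reproduce how APPL or `jsonargparse` actually name or parse these variables; `config_from_env` is an illustrative helper only.

```python
import os


def config_from_env(prefix="APPL_", sep="__", environ=None):
    """Build a nested config dict from environment variables.

    Hypothetical convention used only in this sketch:
    APPL_SERVERS__DEFAULT=gpt-4o-mini -> {"servers": {"default": "gpt-4o-mini"}}
    """
    environ = os.environ if environ is None else environ
    config = {}
    for key, value in environ.items():
        if not key.startswith(prefix):
            continue  # ignore unrelated variables such as PATH
        path = key[len(prefix):].lower().split(sep)
        node = config
        for part in path[:-1]:
            child = node.get(part)
            if not isinstance(child, dict):
                # Create the nested section (replacing a scalar if one was set).
                child = node[part] = {}
            node = child
        node[path[-1]] = value
    return config
```

Values stay as strings here; a real loader such as `jsonargparse` would typically also convert them to the types declared in the config schema.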

APPL v0.2.0: Major Improvements Compared to v0.1.2

🚀 Key Updates

1. Automatic Initialization and Enhanced Configuration

- Auto Initialization: No need to call `appl.init()` to initialize APPL.

- Configuration Updates:

- Using `appl.yaml` to overwrite the configs still works, with warnings on items not in the config (useful for catching wrong configs).

- Use `appl.init(**kwargs)` or the command line for runtime updates.

- Introduced Pydantic models for stricter type checking of configs and `global_vars`.

- Command-line support via `jsonargparse` (see the cmd args example).

- Use

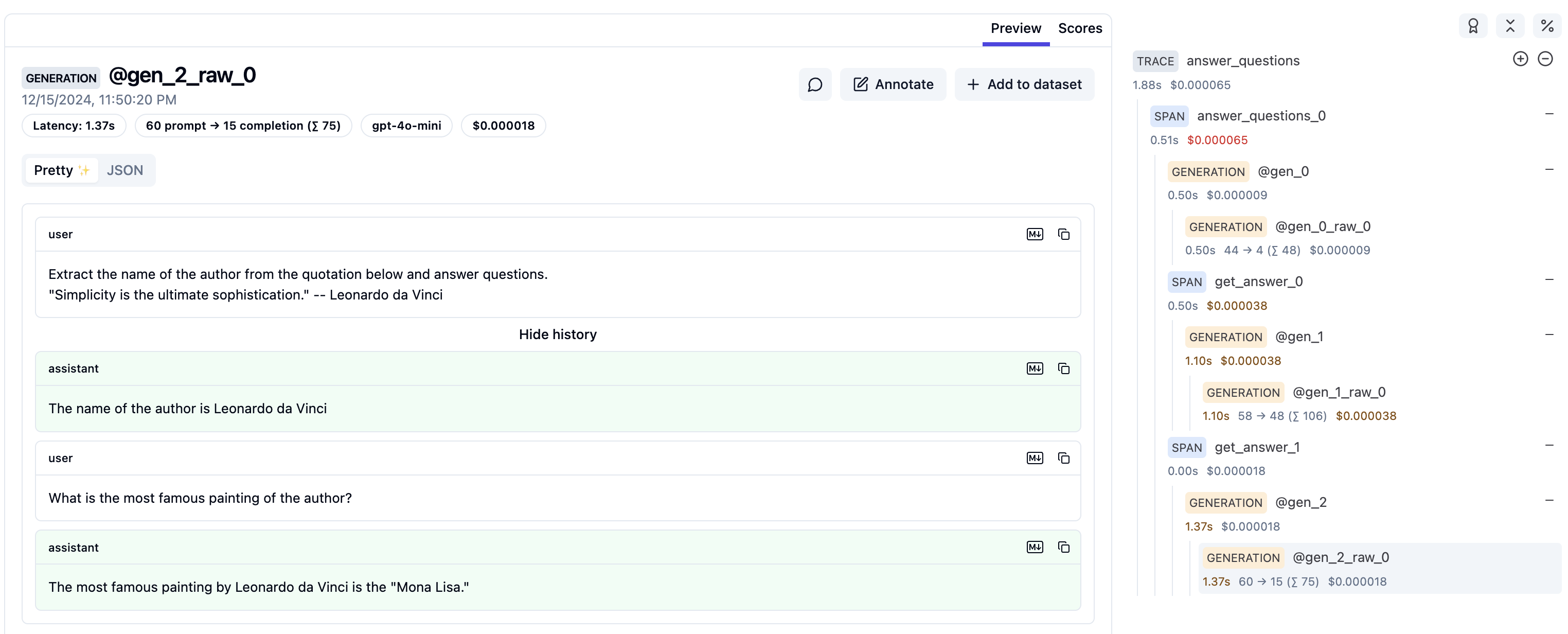

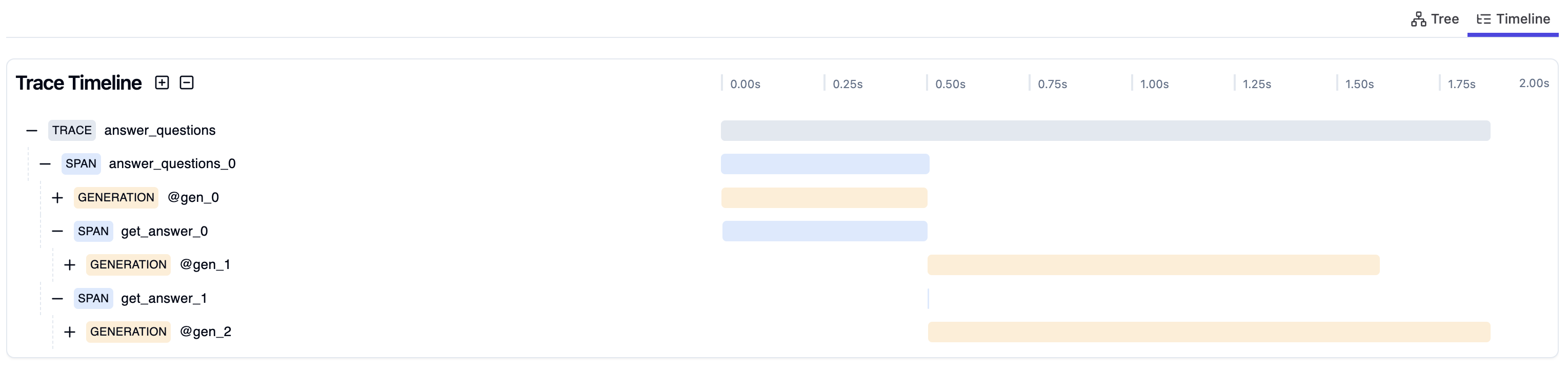
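To illustrate the "warning on items not in the config" behavior of `appl.yaml`, here is a minimal sketch of a recursive merge that warns about unknown keys. The function name `merge_config` and the example keys are hypothetical; APPL itself type-checks configs with Pydantic models, which this sketch does not attempt to mirror.

```python
import warnings


def merge_config(defaults, overrides, path=""):
    """Recursively merge `overrides` into a copy of `defaults`.

    Keys that do not exist in `defaults` are still applied, but trigger a
    warning so that typos in a user config file are easy to spot.
    """
    merged = dict(defaults)  # shallow copy: `defaults` itself is never mutated
    for key, value in overrides.items():
        full_key = f"{path}.{key}" if path else key
        if key not in defaults:
            warnings.warn(f"Config key '{full_key}' is not in the default config")
            merged[key] = value
        elif isinstance(defaults[key], dict) and isinstance(value, dict):
            merged[key] = merge_config(defaults[key], value, full_key)
        else:
            merged[key] = value
    return merged
```

Warning instead of raising keeps unexpected keys usable while still surfacing likely mistakes.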

2. Advanced Tracing and Visualization

- Langfuse and Lunary Integration:

- Visualize traces with metadata such as git info and command-line arguments.

- Store the code of `ppl` and `@traceable` functions in the trace for viewing in Langfuse.

- New `print_trace` function to send traces; it can be called at the end of a program.

- `appltrace` Command: Export traces to multiple formats, including Langfuse (recommended), Lunary, HTML, and Chrome tracing.
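Chrome tracing is one of the export targets. As a rough sketch of what such an export involves, the code below converts finished spans into "complete" events (`"ph": "X"`) of the Trace Event Format, with timestamps and durations in microseconds; the resulting JSON can be opened in `chrome://tracing` or Perfetto. The `Span` record and `to_chrome_trace` function are hypothetical and are not the `appltrace` implementation.

```python
import json
from dataclasses import dataclass


@dataclass
class Span:
    """Hypothetical finished span: a name plus start/end times in seconds."""
    name: str
    start: float
    end: float
    thread: str = "main"


def to_chrome_trace(spans):
    """Serialize spans as Trace Event Format 'complete' events (ph='X')."""
    thread_ids = {}
    events = []
    for span in spans:
        # Give each distinct thread name a small integer id for the viewer.
        tid = thread_ids.setdefault(span.thread, len(thread_ids))
        events.append({
            "name": span.name,
            "ph": "X",
            "ts": round(span.start * 1e6),                 # microseconds
            "dur": round((span.end - span.start) * 1e6),   # microseconds
            "pid": 0,
            "tid": tid,
        })
    return json.dumps({"traceEvents": events})
```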

3. New Features

- Explicit Growing Prompts:

- Use `grow` to explicitly grow the prompt.

- Independent `gen` Calls: `appl.gen` (or `appl.completion`) can be called outside of `ppl` functions with messages, behaving like a wrapper around `litellm.completion`.

- Auto Continuation:

- Automatically continue generations that stop due to the length limit: the model is asked to repeat the last line, and the outputs are concatenated at their overlap to achieve a reliable continuation.

- LLM Call Caching:

- Add a persistent database for caching LLM calls with `temperature=0`.

- Concurrency:

- Add a global executor pool with configurable `max_workers` to limit the number of parallel LLM calls.

- Better Image and Audio Support:

- Support Pillow `Image` objects as part of the prompt.

- Support audio as part of the prompt.
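The overlap-based concatenation behind auto continuation can be sketched as a string merge: because the model is asked to repeat the last line, the continuation should begin with text that already ends the previous output, so the longest such overlap is dropped before joining. `merge_with_overlap` is a hypothetical helper, not APPL's code.

```python
def merge_with_overlap(prev: str, cont: str, min_overlap: int = 1) -> str:
    """Join two generations, dropping the longest prefix of `cont`
    that repeats the end of `prev`."""
    max_len = min(len(prev), len(cont))
    # Try the largest possible overlap first, shrinking down to `min_overlap`.
    for size in range(max_len, min_overlap - 1, -1):
        if prev.endswith(cont[:size]):
            return prev + cont[size:]
    # No overlap found: fall back to plain concatenation.
    return prev + cont
```

With `min_overlap=1`, a one-character coincidence also counts as overlap; raising the threshold trades that risk for occasionally missing a very short repeated line.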
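A minimal sketch of persistent caching restricted to `temperature=0` calls, assuming SQLite as the store and a SHA-256 hash of the canonical JSON request as the key. `CompletionCache` and `get_or_call` are hypothetical names; APPL's actual database schema and key derivation may differ, and this sketch requires requests and responses to be JSON-serializable.

```python
import hashlib
import json
import sqlite3


class CompletionCache:
    """Persistent cache for deterministic (temperature=0) LLM calls."""

    def __init__(self, path: str = ":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS cache (key TEXT PRIMARY KEY, value TEXT)"
        )

    @staticmethod
    def _key(args: dict) -> str:
        # Canonical JSON so that keyword order does not change the key.
        return hashlib.sha256(json.dumps(args, sort_keys=True).encode()).hexdigest()

    def get_or_call(self, args: dict, call):
        # Sampling with temperature > 0 is non-deterministic, so skip the cache.
        if args.get("temperature") != 0:
            return call(**args)
        key = self._key(args)
        row = self.conn.execute("SELECT value FROM cache WHERE key = ?", (key,)).fetchone()
        if row is not None:
            return json.loads(row[0])
        result = call(**args)
        self.conn.execute(
            "INSERT OR REPLACE INTO cache (key, value) VALUES (?, ?)",
            (key, json.dumps(result)),
        )
        self.conn.commit()
        return result
```

Passing a file path instead of `":memory:"` makes the cache survive across runs.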
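The global executor pool can be approximated with a lazily created, process-wide `ThreadPoolExecutor` whose `max_workers` bounds how many LLM calls are in flight. `get_executor` is a hypothetical helper; APPL's own pool and the name of its configuration key may differ.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical module-level pool shared by every LLM call in the process.
_executor = None
_executor_lock = threading.Lock()


def get_executor(max_workers: int = 4) -> ThreadPoolExecutor:
    """Return the shared pool, creating it on first use.

    `max_workers` caps how many calls run in parallel; it only takes effect
    on the first call, since later calls reuse the existing pool.
    """
    global _executor
    with _executor_lock:
        if _executor is None:
            _executor = ThreadPoolExecutor(max_workers=max_workers)
        return _executor
```

A caller would then submit work with something like `get_executor().submit(call_llm, prompt)`, where `call_llm` stands in for whatever function performs the request. Because the pool is shared, the limit applies across all callers rather than per function.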

4. More Examples

- Tree-of-Thought:

- Add a tree-of-thought example that needs much less code and enables parallelization, with about a 6x speedup.

- Virtual Tool:

- Add an example that uses an LLM to emulate tool execution.

- Streamlit:

- Add an example that uses Streamlit to build a chat web app with APPL.

- Command Args:

- Add an example that uses command-line arguments to update configs.

- Cursor Usage [Experimental]:

- Add example Cursor rules for making better use of Cursor to generate APPL code.

5. Updated Default Configs

- Defaults:

- Default server is now `None` (explicit setup required via `appl.yaml`, the command line, or `appl.init(servers=...)`). You can use a model name as the server name without setting up the server configs.

- Logging: File logging is enabled by default, but logging of LLM call arguments is disabled by default.

- Streaming: Defaults to `print` for the streaming output (previously `live`, using `rich.Live`).

6. Miscellaneous Fixes

- Fixed minor bugs and improved code readability.

- Code refactored for better maintainability.

Full Changelog: v0.1.2...v0.2.0

v0.1.2

Changelog

Multi-threading support, auto-dedent for multiline strings, `response_format` support, and some fixes.

- Add support for multi-threaded APPL functions.

- Thread-specific log files, filtered by the prefix of the thread name.

- Allow assigning a prefix to LLM generations, used for tracing and caching.

- Add auto-dedent for multiline f-strings.

- Use `rich` to show streaming messages.

- Add support for `response_format` in LLM completion.

- Remove the `langsmith` dependency.

- Fix ruff and mypy checks.

- Remove special exception handling inside APPL functions (it no longer appears necessary).
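Thread-specific log files filtered by thread-name prefix can be sketched with the standard `logging` module: a `logging.Filter` checks `record.threadName`, so each file handler only keeps records from matching threads. `ThreadPrefixFilter` and `thread_log_handler` are hypothetical names for this illustration, not APPL's API.

```python
import logging


class ThreadPrefixFilter(logging.Filter):
    """Keep only records emitted by threads whose name starts with `prefix`."""

    def __init__(self, prefix: str):
        super().__init__()
        self.prefix = prefix

    def filter(self, record: logging.LogRecord) -> bool:
        # `threadName` is filled in by logging when the record is created.
        return record.threadName.startswith(self.prefix)


def thread_log_handler(path: str, prefix: str) -> logging.FileHandler:
    """Create a file handler that only writes records from threads named `prefix...`."""
    handler = logging.FileHandler(path)
    handler.addFilter(ThreadPrefixFilter(prefix))
    return handler
```

Filtering on a prefix rather than an exact name lets threads spawned with names such as `worker-1-sub` share their parent's log file.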
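The effect of auto-dedent on an indented multiline f-string can be reproduced with the standard library's `textwrap.dedent`. APPL applies the dedent automatically, whereas this hypothetical `build_prompt` function does it by hand.

```python
import textwrap


def build_prompt(topic: str) -> str:
    # An indented multiline f-string, as it would appear inside a function body.
    prompt = f"""
        You are a helpful assistant.
        Write a short poem about {topic}.
        """
    # Remove the common leading indentation, then the surrounding blank lines.
    return textwrap.dedent(prompt).strip()
```

Without the dedent, every line of the prompt would carry the eight spaces of source-code indentation into the LLM request.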

v0.1.0

Changelog

- Change the default LLM from 'gpt-3.5-turbo' to 'gpt-4o-mini'.

- Docstrings (`"""docstring"""`) are now excluded from the prompt by default; they can be included with `include_docstring=True`.

- Change the default structured output (`instructor`) wrapper mode to `JSON`.

- Add a `reset_context` function to reset the context of functions with `ctx="resume"`.

- Some fixes to examples, docs, mypy checks, and tests.