

isolation at collection level #17

Merged: ansible-zuul merged 1 commit into ansible-collections:main from goneri:use-a-socket-per-collection_289 on Oct 23, 2020.



Include the collection name in the socket path. This way, the modules of two different collections cannot access the same cache.
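A minimal sketch of the idea, assuming the socket naming pattern used by the manual server command in the documentation (`turbo_mode.<collection>.socket` under `~/.ansible/tmp`). `socket_path_for` is a hypothetical helper for illustration, not the collection's actual function:

```python
import os


def socket_path_for(collection_name, base_dir=None):
    """Return a per-collection socket path (hypothetical helper).

    Because the collection name is part of the file name, two collections
    never connect to the same daemon, so they never share a cache.
    """
    if base_dir is None:
        base_dir = os.path.expanduser("~/.ansible/tmp")
    return os.path.join(base_dir, f"turbo_mode.{collection_name}.socket")


openstack = socket_path_for("openstack.cloud", base_dir="/tmp")
vmware = socket_path_for("community.vmware", base_dir="/tmp")
print(openstack)
print(vmware)
```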


goneri added a commit to goneri/ansible that referenced this pull request on Oct 21, 2021:

The traditional execution flow of an Ansible module includes the following steps:

- Upload of a ZIP archive with the module and its dependencies

- Execution of the module, which is just a Python script

- Ansible collects the results once the script is finished

These steps happen for each task of a playbook, and on every host.

Most of the time, the execution of a module is fast enough for the user. However, sometimes the module needs a significant amount of time just to initialize itself. This is a common situation with API-based modules. A classic initialization involves the following steps:

- Load a Python library to access the remote resource (via SDK)

- Open a client

- Load a bunch of Python modules

- Request a new TCP connection

- Create a session

- Authenticate the client

All these steps are time-consuming, and the same operations are run again and again.

For instance, with the OpenStack SDK:

- `import openstack`: takes 0.569s

- `client = openstack.connect()`: takes 0.065s

- `client.authorize()`: takes 1.360s

These numbers come from a test run against the VexxHost public cloud.

In this case, the overhead is roughly 2s per task. If the playbook has 10 tasks, the execution time cannot go below 20s.
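The arithmetic behind this estimate, using the three timings measured above and the 10-task playbook from the text:

```python
# Per-task initialization timings measured against VexxHost (seconds).
timings = {
    "import openstack": 0.569,
    "openstack.connect()": 0.065,
    "client.authorize()": 1.360,
}

per_task = sum(timings.values())  # about 1.994 s, i.e. roughly 2 s
tasks = 10
lower_bound = per_task * tasks    # about 19.94 s, i.e. roughly 20 s

print(f"{per_task:.3f} s per task, {lower_bound:.2f} s for {tasks} tasks")
```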

`AnsibleTurboModule` is a class that inherits from the standard `AnsibleModule` class that your modules probably already use.

The big difference is that when a module starts, it also spawns a small Python daemon. If a daemon already exists, the module reuses it.

All the module logic runs inside this Python daemon. This means:

- Python modules are loaded only once.

- Ansible modules can reuse an existing authenticated session.
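Why loading happens only once: Python caches every imported module in `sys.modules`, so inside a long-lived process a second `import` is a dictionary lookup rather than a fresh load. A small illustration, with the standard-library `json` module standing in for a heavy SDK:

```python
import importlib
import sys

# First import in this process: the module is loaded and cached.
first = importlib.import_module("json")

# Later imports (e.g. from the next task handled by the same daemon)
# return the cached object from sys.modules instead of reloading it.
second = importlib.import_module("json")

print(second is first)               # True
print(sys.modules["json"] is first)  # True
```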

If you are a collection maintainer and want to enable `AnsibleTurboModule`, you can follow these steps.

Your module should inherit from `AnsibleTurboModule`, instead of `AnsibleModule`.

```python
# Aliasing the import keeps the rest of the module code unchanged.
from ansible_module.turbo.module import AnsibleTurboModule as AnsibleModule
```

You can also use the `functools.lru_cache()` decorator to ask Python to cache the result of an operation, such as creating a network session.
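A minimal sketch, assuming a hypothetical `create_session()` that stands in for an expensive SDK login. The `calls` counter exists only to show how often the function body actually runs:

```python
import functools

calls = 0


@functools.lru_cache(maxsize=None)
def create_session(endpoint):
    """Hypothetical stand-in for an expensive connect/authenticate call."""
    global calls
    calls += 1
    return {"endpoint": endpoint, "token": f"token-{calls}"}


# In a long-lived daemon, every task after the first gets the cached session.
s1 = create_session("https://cloud.example.com")
s2 = create_session("https://cloud.example.com")
print(s1 is s2, calls)  # True 1
```

In a regular (non-turbo) module, the cache would be discarded when the process exits after each task, so the decorator only pays off because the daemon outlives individual tasks.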

Finally, if some of the libraries you depend on are large, it may be worth deferring your module imports and loading them after the `AnsibleTurboModule` instance is created.
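A sketch of the deferred-import pattern. `FakeModule` is a hypothetical placeholder for `AnsibleTurboModule` (so the snippet runs without Ansible installed), and the standard-library `decimal` module stands in for a large SDK:

```python
class FakeModule:
    """Hypothetical placeholder for AnsibleTurboModule in this sketch."""

    def __init__(self, argument_spec):
        self.params = {name: spec.get("default") for name, spec in argument_spec.items()}


def main():
    # Create the module object first, before any heavy dependency is touched.
    module = FakeModule(argument_spec={"precision": {"type": "int", "default": 4}})

    # Deferred import: the large library is only loaded at this point.
    import decimal

    ctx = decimal.Context(prec=module.params["precision"])
    return str(ctx.divide(decimal.Decimal(1), decimal.Decimal(3)))


print(main())  # 0.3333
```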

An Ansible module looks slightly different when it uses `AnsibleTurboModule`. Here are some examples with OpenStack, VMware, and Kubernetes.

These examples use `functools.lru_cache`, which has been part of the Python standard library since 3.2. The `lru_cache()` decorator manages the cache and uses the function arguments as the cache key.
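A short illustration of the "arguments as cache key" behavior, using a hypothetical `connect(cloud, region)` with made-up cloud and region names. `cache_info()` is part of the standard `functools.lru_cache` API:

```python
import functools


@functools.lru_cache(maxsize=32)
def connect(cloud, region):
    """Hypothetical connection factory; (cloud, region) forms the cache key."""
    return object()  # stands in for a client object


a = connect("example-cloud", "region-a")
b = connect("example-cloud", "region-a")  # same arguments: cache hit
c = connect("example-cloud", "region-b")  # different region: new entry

print(a is b, a is c)        # True False
print(connect.cache_info())  # CacheInfo(hits=1, misses=2, maxsize=32, currsize=2)
```

Arguments must be hashable, and positional and keyword calls (`connect("x", "y")` versus `connect(cloud="x", region="y")`) are cached as separate entries.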

- Integration with OpenStack Collection: goneri/ansible-collections-openstack@53ce986

- Integration with VMware Collection: https://github.com/goneri/vmware/commit/d1c02b93cbf899fde3a4665e6bcb4d7531f683a3

- Integration with Kubernetes Collection: ansible-collections/kubernetes.core#68

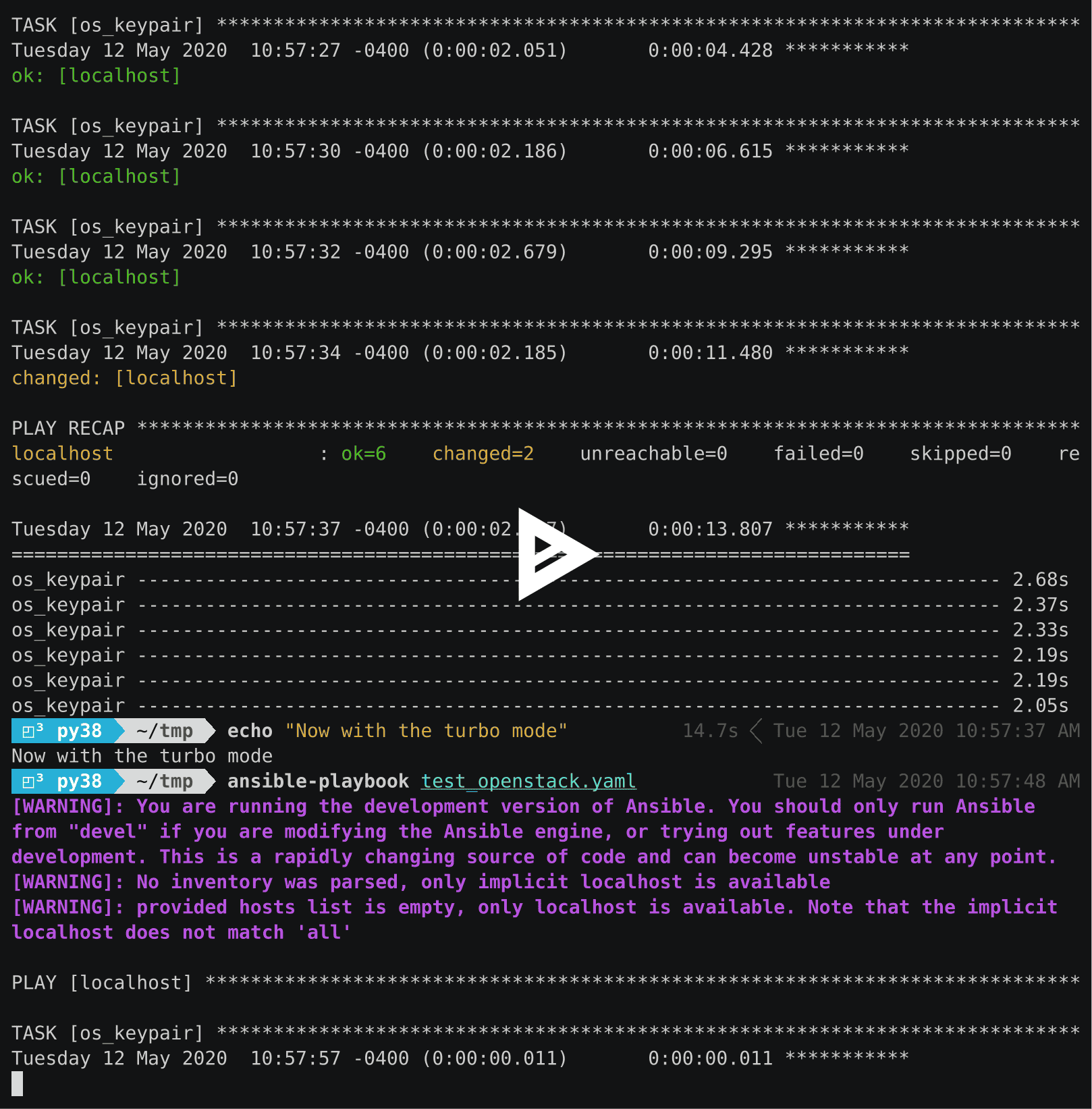

In this demo, we run a playbook that makes several `os_keypair` calls. The first time, we run the regular Ansible module. The second time, we run the same playbook with the modified version.

[asciinema recording of the demo](https://asciinema.org/a/329481)

The daemon kills itself after 15s, and communication is done through a Unix socket.

It runs in a single process and uses `asyncio` internally. Consequently, you can use the `async` keyword in your Ansible module. This is handy if you interact with many remote systems at the same time.
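A minimal sketch of what `async` allows, assuming a hypothetical `get_status()` coroutine in which `asyncio.sleep()` stands in for a network round trip. The three calls overlap instead of running one after another. `asyncio.run()` is only there to make the snippet standalone; inside the daemon, an event loop is already running:

```python
import asyncio
import time


async def get_status(host):
    """Hypothetical remote API call; the sleep simulates network latency."""
    await asyncio.sleep(0.2)
    return {"host": host, "status": "ok"}


async def main(hosts):
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(get_status(h) for h in hosts))


start = time.perf_counter()
results = asyncio.run(main(["h1", "h2", "h3"]))
elapsed = time.perf_counter() - start
print(len(results), f"{elapsed:.1f}s")  # about 0.2s rather than 0.6s
```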

`ansible_module.turbo` opens a Unix socket to interact with the background service. We use this service to open the connections to the different target systems. This is similar to how SSH shares connections through control sockets.
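A minimal request/response round trip over a Unix socket with `asyncio`, assuming a hypothetical one-line JSON protocol. It illustrates the mechanism only and is not the wire format `ansible_module.turbo` actually uses. Unix sockets are POSIX-only:

```python
import asyncio
import json
import os
import tempfile


async def handle(reader, writer):
    # Daemon side: read one newline-terminated JSON request and reply.
    request = json.loads(await reader.readline())
    reply = {"changed": False, "echo": request["args"]}
    writer.write(json.dumps(reply).encode() + b"\n")
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def call(socket_path, args):
    # Module side: connect, send the task arguments, wait for the result.
    reader, writer = await asyncio.open_unix_connection(socket_path)
    writer.write(json.dumps({"args": args}).encode() + b"\n")
    await writer.drain()
    reply = json.loads(await reader.readline())
    writer.close()
    await writer.wait_closed()
    return reply


async def main():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "turbo_mode.foo.bar.socket")
        server = await asyncio.start_unix_server(handle, path=path)
        async with server:
            return await call(path, {"name": "demo"})


result = asyncio.run(main())
print(result)  # {'changed': False, 'echo': {'name': 'demo'}}
```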

Keep in mind that:

- All the modules can access the same cache. Isolation at the collection level is coming soon (ansible-collections/cloud.common#17).

- A task can load a different version of a library and impact the following tasks.

- If the same user runs two `ansible-playbook` commands at the same time, they share the same cache.

When a module stores a session in a cache, it's a good idea to use a hash of the authentication information to identify the session.
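A minimal sketch of that advice, assuming a hypothetical dict-based session store. Serializing with `sort_keys=True` makes the digest independent of dict key order, and the resulting string is hashable, unlike the credentials dict itself (which `lru_cache` would reject):

```python
import hashlib
import json


def auth_fingerprint(auth):
    """SHA-256 digest of the authentication parameters, used as a cache key."""
    canonical = json.dumps(auth, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


_sessions = {}


def get_session(auth):
    """Hypothetical session lookup keyed by the auth fingerprint."""
    key = auth_fingerprint(auth)
    if key not in _sessions:
        _sessions[key] = {"user": auth["username"]}  # stands in for a real login
    return _sessions[key]


alice = {"auth_url": "https://keystone.example.com", "username": "alice", "password": "s3cret"}
same = dict(reversed(list(alice.items())))  # same data, different key order
bob = dict(alice, username="bob")

print(get_session(alice) is get_session(same))  # True
print(get_session(alice) is get_session(bob))   # False
```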

.. note:: You may want to isolate your Ansible environment in a container. In this case, consider https://github.com/ansible/ansible-builder

`ansible_module.turbo` uses exceptions to communicate a result back to the module.

- `EmbeddedModuleFailure` is raised when `fail_json()` is called.

- `EmbeddedModuleSuccess` is raised in case of success and returns the result to the originating module process.

These exceptions are defined in `ansible_collections.cloud.common.plugins.module_utils.turbo.exceptions`.

You can raise the `EmbeddedModuleFailure` exception yourself, for instance from a module in `module_utils`.

Be careful with catch-all exception handlers (`except Exception:`). Not only are they bad practice, they may also interfere with this mechanism.
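To see why a catch-all handler is risky, here is a sketch with local stand-ins: `EmbeddedModuleSuccess` below is a hypothetical substitute for the real class in `ansible_collections.cloud.common.plugins.module_utils.turbo.exceptions`, and `exit_json`, `bad_module`, and `good_module` are illustrative functions, not library code. Because the success signal travels as an exception, `except Exception:` intercepts it:

```python
class EmbeddedModuleSuccess(Exception):
    """Local stand-in: carries a module result back to the daemon."""

    def __init__(self, **result):
        super().__init__()
        self.result = result


def exit_json(**result):
    # Hypothetical: in this sketch, "exiting" means raising the result.
    raise EmbeddedModuleSuccess(**result)


def bad_module():
    try:
        exit_json(changed=True)
    except Exception:  # accidentally swallows the success signal
        return "lost"


def good_module():
    try:
        exit_json(changed=True)
    except EmbeddedModuleSuccess:
        raise  # let the control-flow exception reach the daemon
    except Exception:
        return "real error"


print(bad_module())  # lost
try:
    good_module()
except EmbeddedModuleSuccess as exc:
    captured = exc.result
print(captured)  # {'changed': True}
```

Catching only the specific exceptions a library documents, around the smallest possible block, avoids the problem without needing the explicit re-raise.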

You may want to start the server manually. This can be done with the following command:

.. code-block:: shell

    PYTHONPATH=$HOME/.ansible/collections python -m ansible.module_utils.turbo.server --socket-path $HOME/.ansible/tmp/turbo_mode.foo.bar.socket

Replace `foo.bar` with the name of the collection.

You can use the `--help` argument to get a list of the optional parameters.

