
Home

Welcome to the shared_autonomy_perception wiki!

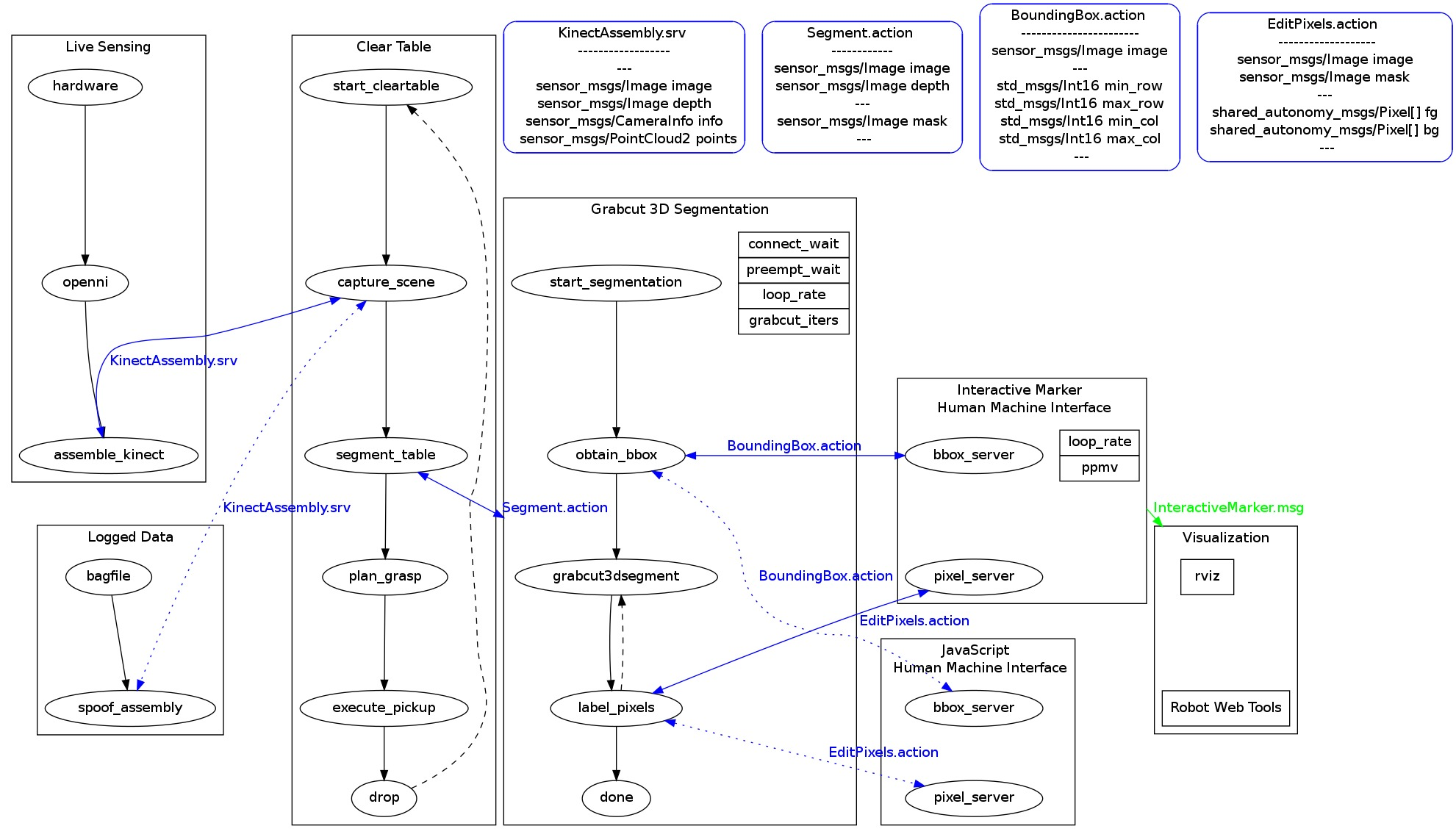

This repo provides a framework for testing shared autonomy for perception in the context of manipulation. In addition to an implementation of Grabcut3D and human-machine interfaces, it includes a state machine that performs a simple table-clearing task (using MoveIt! for the manipulation parts). It also provides the tools needed to compare fully autonomous ORK cluster-based segmentation with grabcut's human-assisted result.

The following diagram provides an overview of how the different pieces fit together.

These instructions assume a PR2, but the setup should be easily configurable for any robot controlled by MoveIt! with a Kinect for perception. Do all of the following on the desktop unless otherwise specified.

- Install MoveIt!: `sudo apt-get install ros-hydro-moveit*`

- Install PR2-specific packages: `sudo apt-get install ros-hydro-pr2*`

- Install web packages: `sudo apt-get install ros-hydro-mjpeg-server ros-hydro-rosbridge-server*`

- The rest assumes that your hydro workspace is `~/catkin_hydro`.

- Download the shared_autonomy_perception repo (master branch) to both the robot and the desktop: `cd ~/catkin_hydro/src; git clone https://github.com/SharedAutonomyToolkit/shared_autonomy_perception.git`

- Download Bosch's roslibjs fork into `~/catkin_hydro/src`: `git clone https://github.com/bosch-ros-pkg/roslibjs.git; cd roslibjs/utils`, then follow the install directions in the README. (On shelob, you'll probably only need to do `npm install .; grunt build`.)

- Build the `js_hmi` interface (JS doesn't use catkin): `cd ~/catkin_hydro/src/shared_autonomy_perception/js_hmi/utils; npm install .; grunt build`

- Download the cluster_grasp_planner (extracted from arm_navigation and released as a single package): `cd ~/catkin_hydro/src; git clone https://github.com/bosch-ros-pkg/cluster_grasp_planner.git`. We need the hydro-devel branch: `cd cluster_grasp_planner; git checkout hydro-devel`

- Install ORK, following the directions here.
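Before launching anything, it can help to confirm that the source checkouts landed where the rest of this page expects them. The following is a minimal sketch, not part of the repo: `check_workspace` is a hypothetical helper that assumes the `~/catkin_hydro` layout used above, checks only the three cloned repositories (not ORK or the `js_hmi` build), and verifies that cluster_grasp_planner is on the hydro-devel branch.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not shipped with shared_autonomy_perception):
# sanity-check the source checkouts described in the install steps.
# Usage: check_workspace [workspace]   (defaults to ~/catkin_hydro)
check_workspace() {
  local ws="${1:-$HOME/catkin_hydro}"
  local status=0
  local pkg branch

  # Repositories cloned into <workspace>/src in the steps above.
  for pkg in shared_autonomy_perception roslibjs cluster_grasp_planner; do
    if [ ! -d "$ws/src/$pkg" ]; then
      echo "missing: $ws/src/$pkg"
      status=1
    fi
  done

  # cluster_grasp_planner has to be on the hydro-devel branch.
  if [ -d "$ws/src/cluster_grasp_planner/.git" ]; then
    # symbolic-ref prints the current branch name; it fails on a detached HEAD.
    branch="$(git -C "$ws/src/cluster_grasp_planner" symbolic-ref --short HEAD 2>/dev/null)"
    if [ "$branch" != "hydro-devel" ]; then
      echo "cluster_grasp_planner is on '${branch:-detached HEAD}', expected hydro-devel"
      status=1
    fi
  fi

  return "$status"
}
```

For example, `check_workspace && echo "workspace looks complete"` prints a line for each missing checkout or wrong branch and returns non-zero if anything is off.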

- (on robot) `roslaunch shared_autonomy_launch robot_hydro.launch`. This is a slightly modified version of the `/etc/ros/robot.launch` file that starts the head-mounted Kinect, but not any of the other cameras or the EKF localization.

- `roslaunch shared_autonomy_launch move_group.launch`

- `roslaunch shared_autonomy_launch moveit_rviz.launch config:=true`

- `roslaunch shared_autonomy_launch clear_table_unified.launch use_grabcut:=true use_im:=false`. This launches the rest of the nodes required for clearing the table. Use the command-line arguments to switch between shared autonomy using grabcut (`use_grabcut:=true`) and fully autonomous operation using ORK (`use_grabcut:=false`). You can also switch between the Interactive Marker interface to grabcut (`use_im:=false`) and the JavaScript one (`use_im:=true`).

- If using Interactive Markers, be sure to add them to your rviz display.

- If using JavaScript, open this file in your browser: `shared_autonomy_perception/js_hmi/pages/interactive_segmentation_interfaces.html`
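Since `clear_table_unified.launch` is steered by two boolean arguments, a small wrapper can make the mode explicit. This is a sketch only: `clear_table_cmd` is a hypothetical helper, and its `use_im` values simply follow the mapping stated on this page (Interactive Markers with `use_im:=false`, JavaScript with `use_im:=true`); check the launch file itself if the interface that comes up is not the one you expected.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the clear_table_unified.launch command for a mode.
#   $1 segmentation: grabcut (shared autonomy) | ork (fully autonomous)
#   $2 interface:    im (Interactive Markers)  | js (JavaScript HMI), default im
# The use_im values mirror the mapping described on this wiki page.
clear_table_cmd() {
  local seg="$1" ui="${2:-im}"
  local use_grabcut use_im

  case "$seg" in
    grabcut) use_grabcut=true ;;
    ork)     use_grabcut=false ;;
    *) echo "unknown segmentation mode: $seg" >&2; return 1 ;;
  esac

  case "$ui" in
    im) use_im=false ;;  # Interactive Marker interface, per this page
    js) use_im=true ;;   # JavaScript interface, per this page
    *) echo "unknown interface: $ui" >&2; return 1 ;;
  esac

  echo "roslaunch shared_autonomy_launch clear_table_unified.launch use_grabcut:=$use_grabcut use_im:=$use_im"
}
```

For instance, `eval "$(clear_table_cmd grabcut js)"` would start the grabcut pipeline with the JavaScript interface, while `clear_table_cmd ork` just prints the fully autonomous command so you can inspect it first.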

We had trouble with MoveIt!'s internal octomap. More information here.

For information on creating and using logs, see the assemble_kinect package.

Several of the packages are obsolete:

- augmented_object_selection

- bosch_object_segmentation

- image_segmentation_demo

Others just contain example code:

- random_snippets

- webtools