
Performance comparison: AMD with ROCm vs NVIDIA with cuDNN? #173

Comments

|

If you happen to have access to some AMD GPUs that are supported by the ROCm stack, consider running some benchmarks from the TensorFlow benchmarks repository. The README in the Those scripts were used for the benchmarks shown on TensorFlow's website. I've run the following on a Vega FE ( This yields the following. For comparison, the same command being run on a Tesla P100-PCIE-16GB ( Bear in mind, I haven't done anything to try and optimize performance on the Vega FE. These are essentially "out-of-the-box" results. |

|

@pricebenjamin when I try to run that same script ( I cloned the repo ) I get an import error: ImportError: No module named 'tensorflow.python.data.experimental' |

|

@Mandrewoid, if you haven't already, I'd recommend checking out the branch corresponding to your version of TensorFlow, e.g.

cd /path/to/benchmarks

git checkout cnn_tf_v1.11_compatible |
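The branch name above appears to follow a `cnn_tf_v<major>.<minor>_compatible` pattern. A minimal sketch of deriving it from a version string, assuming that pattern holds for the release in question; the helper name `benchmark_branch` is hypothetical, and not every TensorFlow release necessarily has a matching branch:

```python
def benchmark_branch(tf_version: str) -> str:
    """Return the benchmarks branch name assumed to match a TensorFlow version.

    Assumption: branches are named cnn_tf_v<major>.<minor>_compatible,
    as in the cnn_tf_v1.11_compatible example from this thread.
    """
    parts = tf_version.split(".")
    if len(parts) < 2 or not (parts[0].isdigit() and parts[1].isdigit()):
        raise ValueError(f"unexpected version string: {tf_version!r}")
    return f"cnn_tf_v{parts[0]}.{parts[1]}_compatible"


if __name__ == "__main__":
    # In a real environment, pass tf.__version__ instead of a literal.
    print(benchmark_branch("1.11.0"))
```

The result can then be passed to `git checkout` after confirming with `git branch -r` that the branch exists.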

|

Nice, that seems to have done it. I did not realize mainline TF had already advanced to 1.12. Rookie mistake. |

|

I have tried running benchmarks in my environment (Kernel 4.15, ROCm 1.9.2, TF 1.12 with RX 580). The results are as follows: In my environment, VGG16 does not run well. |

|

I have tested with a Vega 64, Ubuntu 18.04, ROCm 1.9.2, TF 1.12: 4 mobilenet: python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=64 --model=mobilenet The NVIDIA GTX 1080 Ti was tested on another machine with CUDA 10, Ubuntu 18.04. Two values didn't add up:

Considering that the Vega 64 supports native half precision, FP16 should be a good selling point for AMD Vega. How is it slower when using FP16? I guess this is probably due to software support, especially ROCm. Can anyone please test it with --use_fp16 and see whether they get similar results? @kazulittlefox my Vega runs smoothly with vgg16 at 105 images/sec |

|

@fshi98 that might be because of |

|

@Mandrewoid Thanks. That may be the reason. However, my rocblas version is 0.14.3.0. This may not be the same bug as #143, but it may be a performance issue |

|

#288 FP16: This one made the GPU sound like a jet engine: FP 16: |

|

Hi @sebpuetz , maybe you can also try to enable fusion support :-) Doc is as following: |

|

Improvements across the board with |

|

Hi @sebpuetz , thanks for the update! |

|

|

Radeon RX Vega 64

For some frameworks, the option 'TF_ROCM_FUSION_ENABLE=1' doesn't change much, so I'm not giving the FUSION = 1 results. Due to lack of memory, some frameworks can't run at batch_size=128.

|

|

Hi @sebpuetz , could you try to refresh your performance numbers using our official docker image? To run the benchmarks inside docker image: Thanks for your attention, and looking forward to your updates :-) |

|

6-core Intel i7 8700 with 16GB RAM, and 400GB SSD disk.

TC_ROCM_FUSION_ENABLE=1 python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=64 --model=resnet50

total images/sec: 248.34

TC_ROCM_FUSION_ENABLE=1 python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=128 --model=resnet50

total images/sec: 263.65

With a batch size of 256, I get out of memory errors.

TC_ROCM_FUSION_ENABLE=1 python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=155 --model=resnet50

Step Img/sec total_loss

total images/sec: 194.04 |

|

Leaving out TC_ROCM_FUSION_ENABLE does not make any difference. |

|

According to this blog, https://www.pugetsystems.com/labs/hpc/NVIDIA-RTX-2080-Ti-vs-2080-vs-1080-Ti-vs-Titan-V-TensorFlow-Performance-with-CUDA-10-0-1247/, the 2080Ti gets 280 images/sec and the 1080Ti gets 207 images/sec for FP32 training. |

|

One more: total images/sec: 377.79 |

|

@jimdowling that's some impressive perf ! |

|

@jimdowling these numbers seem substantially higher than the ones I got, what OS and kernel are you on? |

|

Hi, and @sunway513 these numbers are still pretty far away from what @jimdowling got, do you see a reason for this to happen? |

|

Ubuntu 18.04. Python 2.7. Kernel is 4.15. |

|

Hi @jimdowling , Thanks for your posting! However, it seems there's a typo in your script, therefore TF fusion is not really enabled there. Could you try the following command again? |
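A misspelled environment variable fails silently, because the process simply never reads it. As a rough illustration (not the command originally posted here), the following sketch flags variable names that look like near-misses of `TF_ROCM_FUSION_ENABLE`, the name used elsewhere in this thread. The helper `find_suspect_vars` and the similarity cutoff are assumptions made for the example:

```python
import difflib
import os

# Variable name taken from this thread; extend the list as needed.
KNOWN_VARS = ["TF_ROCM_FUSION_ENABLE"]


def find_suspect_vars(env_names, known=KNOWN_VARS, cutoff=0.85):
    """Map each likely-misspelled variable name to the known name it resembles."""
    suspects = {}
    for name in env_names:
        if name in known:
            continue  # exact match, nothing to report
        match = difflib.get_close_matches(name, known, n=1, cutoff=cutoff)
        if match:
            suspects[name] = match[0]
    return suspects


if __name__ == "__main__":
    # Check the variables set in the current shell environment.
    for wrong, right in find_suspect_vars(os.environ).items():
        print(f"{wrong} is set; did you mean {right}?")
```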

|

Hi @sebpuetz , thanks for your updated numbers with docker! |

|

Hi @sunway513 , Linux Mint 19.1 is based on Ubuntu 18.04, so this looks like a mismatch here? |

|

I am also using 2019-02-21 14:26:23.732074: I tensorflow/core/kernels/conv_grad_filter_ops.cc:975] running auto-tune for Backward-Filter

warning: <unknown>:0:0: loop not unrolled: the optimizer was unable to perform the requested transformation; the transformation might be disabled or specified as part of an unsupported transformation ordering

2019-02-21 14:26:27.702436: I tensorflow/core/kernels/conv_grad_input_ops.cc:1023] running auto-tune for Backward-Data

2019-02-21 14:26:29.084753: I tensorflow/core/kernels/conv_grad_filter_ops.cc:975] running auto-tune for Backward-Filter

warning: <unknown>:0:0: loop not unrolled: the optimizer was unable to perform the requested transformation; the transformation might be disabled or specified as part of an unsupported transformation ordering

2019-02-21 14:26:33.818470: I tensorflow/core/kernels/conv_grad_input_ops.cc:1023] running auto-tune for Backward-Data

2019-02-21 14:26:33.839322: I tensorflow/core/kernels/conv_grad_filter_ops.cc:975] running auto-tune for Backward-Filter

And the performance is ~10% lower than in others' benchmarks:

Step Img/sec total_loss

1 images/sec: 182.8 +/- 0.0 (jitter = 0.0) 8.217

10 images/sec: 187.2 +/- 0.9 (jitter = 0.7) 8.122

20 images/sec: 187.3 +/- 0.5 (jitter = 0.7) 8.229

30 images/sec: 187.1 +/- 0.4 (jitter = 0.9) 8.264

40 images/sec: 187.0 +/- 0.4 (jitter = 0.9) 8.347

50 images/sec: 187.0 +/- 0.3 (jitter = 1.1) 8.014

60 images/sec: 187.0 +/- 0.3 (jitter = 1.0) 8.264

70 images/sec: 186.8 +/- 0.3 (jitter = 1.1) 8.316

80 images/sec: 186.7 +/- 0.3 (jitter = 1.1) 8.231

90 images/sec: 186.7 +/- 0.2 (jitter = 1.2) 8.305

But I expected about 207 images/sec. |
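The "~10%" figure can be checked directly from the step lines above. A minimal sketch, assuming the log keeps the `<step> images/sec: <rate> +/- <err> (jitter = <j>) <loss>` layout shown in this comment; the function names are illustrative only:

```python
import re

# Matches lines such as: "90 images/sec: 186.7 +/- 0.2 (jitter = 1.2) 8.305"
STEP_RE = re.compile(
    r"^\s*(\d+)\s+images/sec:\s+([\d.]+)\s+\+/-\s+([\d.]+)"
    r"\s+\(jitter = ([\d.]+)\)\s+([\d.]+)"
)


def parse_steps(log_text):
    """Return (step, images_per_sec) pairs for every step line in the log."""
    rows = []
    for line in log_text.splitlines():
        m = STEP_RE.match(line)
        if m:
            rows.append((int(m.group(1)), float(m.group(2))))
    return rows


def shortfall(measured, expected):
    """Fraction by which the measured throughput falls short of the expected one."""
    return (expected - measured) / expected


if __name__ == "__main__":
    sample = (
        "1 images/sec: 182.8 +/- 0.0 (jitter = 0.0) 8.217\n"
        "90 images/sec: 186.7 +/- 0.2 (jitter = 1.2) 8.305\n"
    )
    last_step, last_rate = parse_steps(sample)[-1]
    print(f"step {last_step}: {shortfall(last_rate, 207.0):.1%} below 207 images/sec")
```

Non-matching lines, such as the auto-tune messages and compiler warnings, are skipped by the parser.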

@mwrnd The performance regression on gfx803 has been fixed in ROCm v3.3. The issue was that assembly kernels were all disabled on gfx803 (see ROCm/MIOpen#134). |

|

GPU: MSI Radeon RX 580 Armor 8GB OC Benchmark dump. Command-line permutations were generated with cmds.py and log output processed with parse.py. Comparing ROCm 3.3.19 imagenet dataset total images/sec: cifar10 dataset total images/sec: DeepSpeech worked with a batch size of 16: CPU (Ryzen 5 3600X) total images/sec: |
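The `cmds.py` script mentioned above is not shown in this thread. As a rough idea of how such command-line permutations could be generated, here is a sketch using `itertools.product` with the `tf_cnn_benchmarks.py` flags that appear in earlier comments; the function name `build_commands` and the chosen value lists are assumptions, not the author's script:

```python
import itertools


def build_commands(models, batch_sizes, fp16_options=(False,)):
    """Build one tf_cnn_benchmarks.py argument list per parameter combination."""
    commands = []
    for model, batch, fp16 in itertools.product(models, batch_sizes, fp16_options):
        cmd = [
            "python",
            "tf_cnn_benchmarks.py",
            "--num_gpus=1",
            f"--batch_size={batch}",
            f"--model={model}",
        ]
        if fp16:
            cmd.append("--use_fp16")
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    # Example values only; pick the models and batch sizes you want to compare.
    for cmd in build_commands(["resnet50", "vgg16"], [32, 64], (False, True)):
        print(" ".join(cmd))
```

Each argument list can be handed to `subprocess.run` as is, which avoids shell quoting issues.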

|

Anyone else still fighting the AMD/ROCm drivers on a laptop? Even with the latest (Rev 20.10) and/or the latest ROCm, I have the following persistent bugs related to (https://bugzilla.kernel.org/show_bug.cgi?id=203035):

Specs and results: |

|

@qixiang109 , MIOpen released pre-compiled kernels in ROCm3.5 release, aiming to reduce the overheads on startup. For more details, you can refer to the following document: |

|

I guess the following numbers are a bit problematic. Any ideas? Could it be the kernel? GPU: Radeon VII |

|

@papadako Can you try to set |

I get even worse results with the above settings. I will try to use a rocm-dkms supported kernel (i.e., 5.4.0) and report back.

|

@papadako , @huanzhang12 , I have the same (or a similar) performance issue. I use Vega 7nm, RHEL 8.2, dkms drivers, ROCm 3.5, TensorFlow 2.2.0 (it works fine on 2.1.0). |

|

Running inside a Singularity container (v3.5.2) on host Ubuntu 18.04.

GPU: Asus Radeon RX Vega 56 ROG Strix OC 8GB

python3.7 tf_cnn_benchmarks.py --model=resnet50 --batch_size=64

Step Img/sec total_loss

1 images/sec: 132.0 +/- 0.0 (jitter = 0.0) 7.608

10 images/sec: 131.7 +/- 0.4 (jitter = 0.7) 7.849

20 images/sec: 131.4 +/- 0.3 (jitter = 0.8) 8.013

30 images/sec: 131.5 +/- 0.2 (jitter = 0.8) 7.940

40 images/sec: 131.4 +/- 0.2 (jitter = 0.8) 8.136

50 images/sec: 131.2 +/- 0.2 (jitter = 1.1) 8.052

60 images/sec: 131.2 +/- 0.1 (jitter = 1.0) 7.782

70 images/sec: 131.1 +/- 0.1 (jitter = 1.1) 7.853

80 images/sec: 131.2 +/- 0.1 (jitter = 1.1) 8.012

90 images/sec: 131.1 +/- 0.1 (jitter = 1.1) 7.843

100 images/sec: 131.0 +/- 0.1 (jitter = 1.3) 8.088

total images/sec: 130.97 |

|

Radeon VII Seems not as good as other Radeon VII posts. Got similar overhead mentioned in qixiang109's post |

|

I have a similar issue, with lower than expected performance. The memory bandwidth is slow, and I don't know why. CPU: AMD Ryzen 7 3700X

|

|

Hi @nickdon2007 @webber26232 , thanks for reporting your observations. |

|

Ubuntu 20.04 Radeon VII rocm==3.7 |

|

Here are some RTX 3080 10GB results. (Obs.: When you see some low scores at higher batch sizes with Ryzen 9 5950X

|

|

Any 6900 XT benchmarks? |

|

@EmilPi 6900 XT would be very interesting indeed |

|

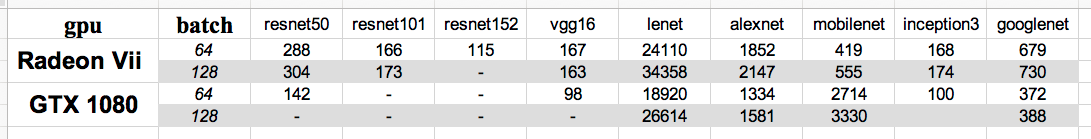

@dcominottim my GTX1080 and Radeon vii, training examples / second |

|

@Daniel451 @EmilPi @qixiang109 Unfortunately, without ROCm support for RDNA*, we can't test ROCm performance yet. However, I've managed to test a 6800 XT with tensorflow-directml (1.15.4, the latest version as of now) on W10! That's at least a little light for RDNA owners who are interested in ML. Here are the numbers: Ryzen 9 5950X

|

|

I have MI50 and V100 available which I can use for benchmarking. What would be the best benchmarks to run? I see the original benchmarks seem outdated |

|

Has anyone tried the benchmark on ROCm 4.5? It seems ROCm now supports gfx1030 (6800 XT/6900 XT). Someone has tested a 6700 XT: Link: https://www.zhihu.com/question/469674526/answer/2189926640

Linux-5.10, nvidia driver 460.91.03, cuda 11.2, pytorch-1.9.1, Tesla A100: running benchmark for framework pytorch

Linux-5.14.5, ROCm-4.3.0, pytorch-1.9.1, Radeon 6700XT: running benchmark for framework pytorch |

With unofficial support on ROCm 4.5: ;) |

|

My previous Radeon VII results are here: #173 (comment) Hopefully AMD's next generation consumer GPUs include Matrix cores if they are actually bringing support to RDNA. Titan RTX: |

|

CDNA and later compute cards (MI100 ...) already have Matrix cores ... but yes, they are not "consumer" cards. Compared with the RTX 3080 ... the old Titan is as fast... This benchmark is not optimised and is no longer updated for TensorFlow 2... Maybe we can find a more up-to-date benchmark... |

|

The Titan RTX is slightly faster than a RTX 3080 since its FP32 accumulation runs at full throughput like Nvidia's professional/data center cards when using Tensor cores. Geforce RTX cards are limited to 0.5x throughput when using Tensor cores, it's a way to segment their lineup similar to how AMD/Nvidia limit FP64 throughput. https://lambdalabs.com/gpu-benchmarks I used this benchmark to test my MacBook M1 Pro and my Titan RTX: https://github.com/tlkh/tf-metal-experiments Model | GPU | BatchSize | Throughput |

|

If it's alright I'd like to post here the benchmark results I got with my rx 6800 using ROCm 4.5.2 and amdgpu-dkms version 1:5.11.32.40502-1350682 (from the latest amdgpu-pro driver stack): I guess these results are somewhat in line with @Djip007's results, as at least according to userbenchmark.com the 6900 XT is around 40% faster than the 6800. However, what I don't understand is how is the Radeon VII able to basically match the 6900 XT and beat the rx 6800 with the above set of benchmarks which I ran, when both the 6900 XT and 6800 are clearly the faster GPUs at least according to Geekbench's OpenCL benchmarks (note how the 6900 XT scores 167460, the 6800 scores 129251, and the Vega 20 i.e. Radeon VII only scores 96073). Could this performance discrepancy be explained by the Radeon VII's massive memory bandwidth, or the fact that the RDNA series of GPUs are not as optimized for compute (microarch-wise) as Radeon VII, or is it just that ROCm and the amdgpu-pro drivers haven't been optimized for performing compute tasks on RDNA cards? |
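To put the Geekbench OpenCL scores quoted above on a common scale, the following sketch normalises them to the Radeon VII. It uses only the three numbers from this comment and says nothing about why the training results differ; the function name `relative_scores` is illustrative:

```python
# Geekbench OpenCL scores as quoted in the comment above.
GEEKBENCH_OPENCL = {
    "6900 XT": 167460,
    "6800": 129251,
    "Radeon VII": 96073,
}


def relative_scores(scores, baseline):
    """Return each score divided by the baseline card's score."""
    base = scores[baseline]
    return {name: value / base for name, value in scores.items()}


if __name__ == "__main__":
    for name, ratio in relative_scores(GEEKBENCH_OPENCL, "Radeon VII").items():
        print(f"{name}: {ratio:.2f}x Radeon VII")
```

A synthetic OpenCL score and a training benchmark stress different parts of the hardware and software stack, so the two rankings need not agree.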

|

Ran some benchmarks on my RX6800XT (Quiet Mode Switch) in the Docker ROCm5.2.0-TF2.8-dev container: I would try it in Power Mode, but that would involve opening my case and flipping the BIOS switch on the card, which is too much work at this time. |

It would be very useful to compare real training performance on AMD and NVIDIA cards.

For NVIDIA cards we have a lot of graphs and tests, for example:

https://github.com/u39kun/deep-learning-benchmark

But for AMD cards there are no performance metrics.

It would be great to make a direct comparison between AMD and NVIDIA with the latest cuDNN.
