-

-

Notifications

You must be signed in to change notification settings - Fork 5.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

ReentrantLock: wakeup a single task on unlock and add a short spin #56814

ReentrantLock: wakeup a single task on unlock and add a short spin #56814

Conversation

base/lock.jl

Outdated

| # Instead, the backoff is simply capped at a maximum value. This can be | ||

| # used to improve throughput in `compare_exchange` loops that have high | ||

| # contention. | ||

| @inline function spin(iteration::Int) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

is this better than just calling yield?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In this case we do not want to leave the core but instead just busy wait in-core for a small number of iterations before we attempt the compare_exchange again, this way if the critical section of the lock is small enough we have a chance to acquire the lock without paying for a OS thread context switch (or a Julia scheduler task switch if you mean Base.yield).

This is the same strategy employed by Rust here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I meant Base.yield. I think we're in a different situation than Rust since we have M:N threading and a user mode scheduler which Rust doesn't.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I am aware of the differences but the one that matters most in this case is that Julia has a cooperative scheduler. This means we have a tradeoff between micro-contention throughput (where we want to stay in-core) and being nice to the other tasks (by calling Base.yield).

So I wrote a benchmark that measures the total amount of forward progress that can be made both by the tasks participating in locking as well as other unrelated tasks to see where we reach a good balance in this tradeoff. It turns out yielding to the Julia scheduler up to a limited amount seems to work great and does not suffer from the pathological case of always spinning in the scheduler (the first example in the PR description).

I will update the code and post benchmark results soon-ish (my daughter arrived yesterday!).

Thanks, @gbaraldi for working on benchmarks also, maybe I can contribute this new benchmark to your LockBench.jl

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@andrebsguedes It would be lovely to add more benchmarks to it. Having a suite of benchmarks that stress locks in different ways would be great.

|

(this PR is fantastically written! professional, comprehensive, and easy to follow 👏👏) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks! Very well written PR.

|

Another point of comparison could be https://github.com/kprotty/usync which is just a "normal" lock |

|

I wrote https://github.com/gbaraldi/LockBench.jl and there it seems to be a good upgrade |

|

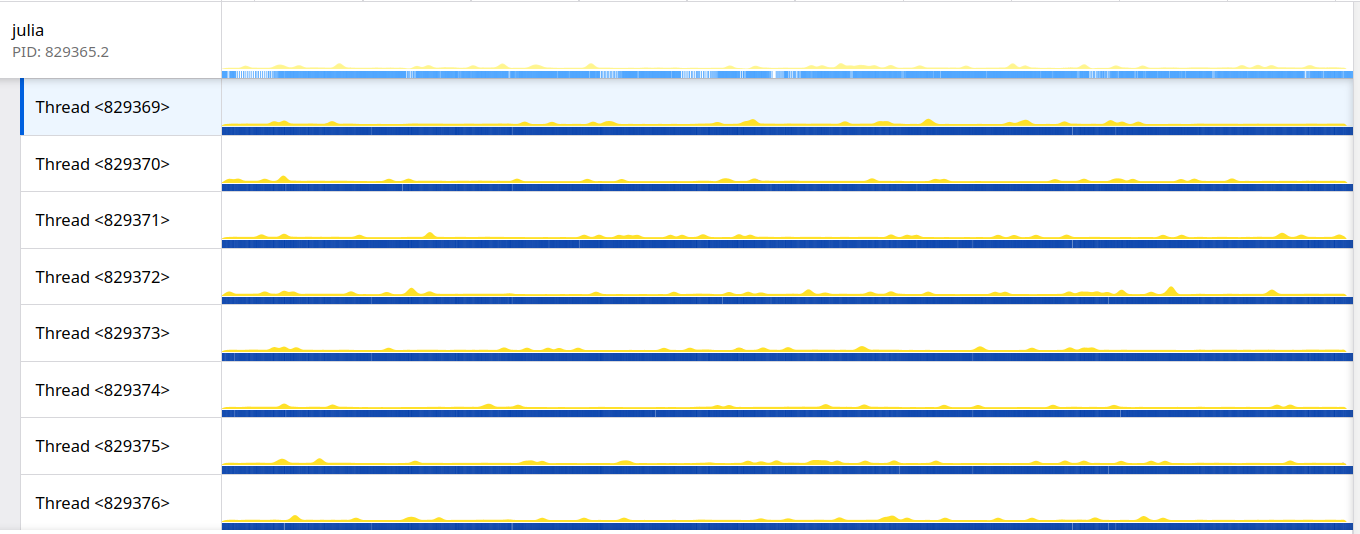

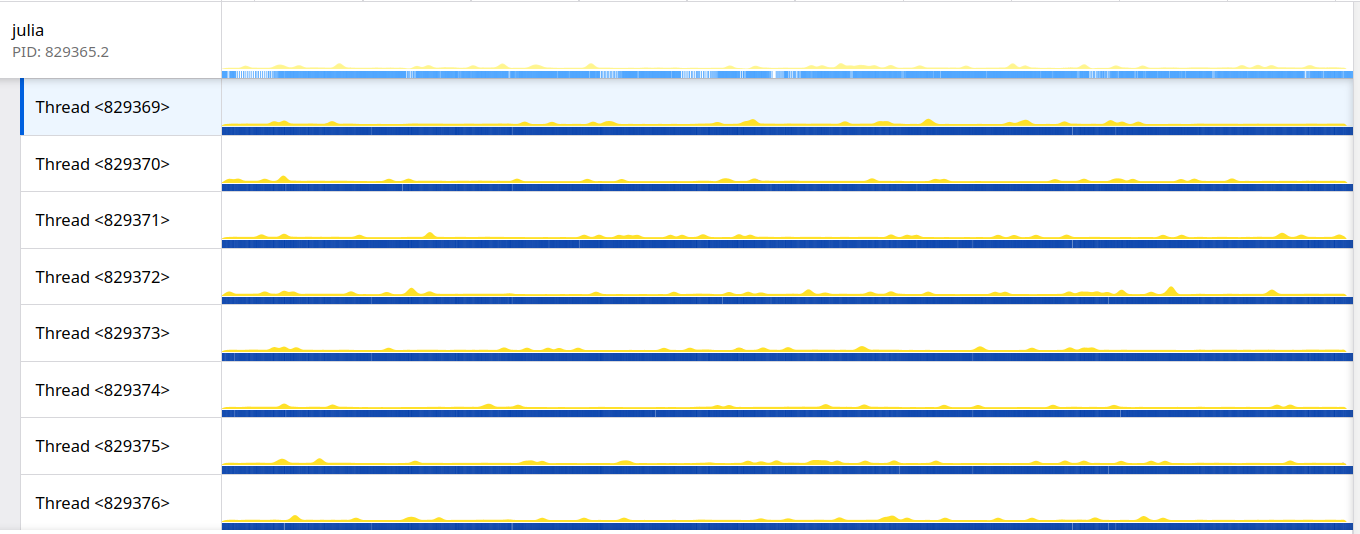

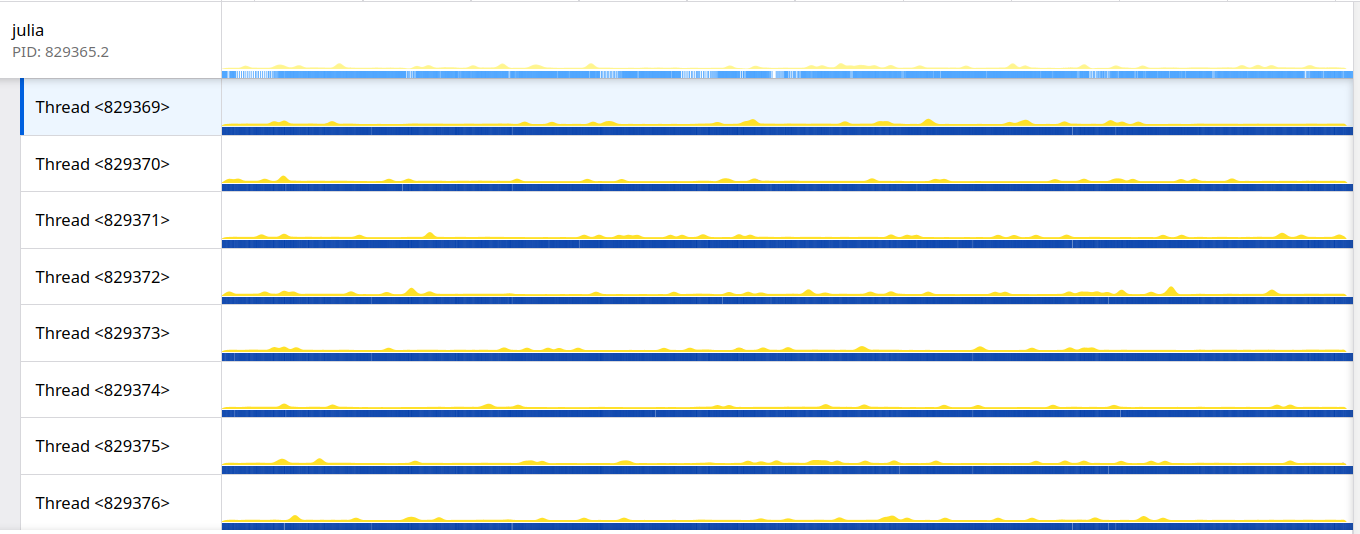

I updated the proposal to reflect the changes from tuning for fairness to non-locking tasks also. The following benchmark introduces competing tasks that perform a unit of work and call Current implementation (thundering heard, 8 threads, 8 tasks locking, 8 task yielding, short critical section)Initial proposal (limited CPU spin, 8 threads, 8 tasks locking, 8 tasks yielding, short critical section)New proposal (limited scheduler spin, 8 threads, 8 tasks locking, 8 tasks yielding, short critical section)Some observations:

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks good to me. The new benchmark is a good idea. @andrebsguedes: ifi you don't have enough time to add it to the lock benchmark suite, please share it with @gbaraldi to do so if/when he gets time!

Nice work!

|

@andrebsguedes: CI failures seem related: first, second. |

|

CI shows only upload failures. @vtjnash: this is good to go IMO. Can you take a look? |

|

We run Julia on very high core count machines and I'm pleased to see progress on scalability issues like this. Would the same kind of pattern be applicable to Channel condition notifications? Channels currently wake all waiters on put, which can lead to massive scheduler churn (at the OS level) to wake threads that have no work available. |

…uliaLang#56814) I propose a change in the implementation of the `ReentrantLock` to improve its overall throughput for short critical sections and fix the quadratic wake-up behavior where each unlock schedules **all** waiting tasks on the lock's wait queue. This implementation follows the same principles of the `Mutex` in the [parking_lot](https://github.com/Amanieu/parking_lot/tree/master) Rust crate which is based on the Webkit [WTF::ParkingLot](https://webkit.org/blog/6161/locking-in-webkit/) class. Only the basic working principle is implemented here, further improvements such as eventual fairness will be proposed separately. The gist of the change is that we add one extra state to the lock, essentially going from: ``` 0x0 => The lock is not locked 0x1 => The lock is locked by exactly one task. No other task is waiting for it. 0x2 => The lock is locked and some other task tried to lock but failed (conflict) ``` To: ``` ``` In the current implementation we must schedule all tasks to cause a conflict (state 0x2) because on unlock we only notify any task if the lock is in the conflict state. This behavior means that with high contention and a short critical section the tasks will be effectively spinning in the scheduler queue. With the extra state the proposed implementation has enough information to know if there are other tasks to be notified or not, which means we can always notify one task at a time while preserving the optimized path of not notifying if there are no tasks waiting. To improve throughput for short critical sections we also introduce a bounded amount of spinning before attempting to park. Not spinning on the scheduler queue greatly reduces the CPU utilization of the following example: ```julia function example() lock = ReentrantLock() @sync begin for i in 1:10000 Threads.@Spawn begin @lock lock begin sleep(0.001) end end end end end @time example() ``` Current: ``` 28.890623 seconds (101.65 k allocations: 7.646 MiB, 0.25% compilation time) ```  Proposed: ``` 22.806669 seconds (101.65 k allocations: 7.814 MiB, 0.35% compilation time) ```  In a micro-benchmark where 8 threads contend for a single lock with a very short critical section we see a ~2x improvement. Current: ``` 8-element Vector{Int64}: 6258688 5373952 6651904 6389760 6586368 3899392 5177344 5505024 Total iterations: 45842432 ``` Proposed: ``` 8-element Vector{Int64}: 12320768 12976128 10354688 12845056 7503872 13598720 13860864 11993088 Total iterations: 95453184 ``` ~~In the uncontended scenario the extra bookkeeping causes a 10% throughput reduction:~~ EDIT: I reverted _trylock to the simple case to recover the uncontended throughput and now both implementations are on the same ballpark (without hurting the above numbers). In the uncontended scenario: Current: ``` Total iterations: 236748800 ``` Proposed: ``` Total iterations: 237699072 ``` Closes JuliaLang#56182

|

|

…56814) I propose a change in the implementation of the `ReentrantLock` to improve its overall throughput for short critical sections and fix the quadratic wake-up behavior where each unlock schedules **all** waiting tasks on the lock's wait queue. This implementation follows the same principles of the `Mutex` in the [parking_lot](https://github.com/Amanieu/parking_lot/tree/master) Rust crate which is based on the Webkit [WTF::ParkingLot](https://webkit.org/blog/6161/locking-in-webkit/) class. Only the basic working principle is implemented here, further improvements such as eventual fairness will be proposed separately. The gist of the change is that we add one extra state to the lock, essentially going from: ``` 0x0 => The lock is not locked 0x1 => The lock is locked by exactly one task. No other task is waiting for it. 0x2 => The lock is locked and some other task tried to lock but failed (conflict) ``` To: ``` # PARKED_BIT | LOCKED_BIT | Description # 0 | 0 | The lock is not locked, nor is anyone waiting for it. # -----------+------------+------------------------------------------------------------------ # 0 | 1 | The lock is locked by exactly one task. No other task is # | | waiting for it. # -----------+------------+------------------------------------------------------------------ # 1 | 0 | The lock is not locked. One or more tasks are parked. # -----------+------------+------------------------------------------------------------------ # 1 | 1 | The lock is locked by exactly one task. One or more tasks are # | | parked waiting for the lock to become available. # | | In this state, PARKED_BIT is only ever cleared when the cond_wait lock # | | is held (i.e. on unlock). This ensures that # | | we never end up in a situation where there are parked tasks but # | | PARKED_BIT is not set (which would result in those tasks # | | potentially never getting woken up). ``` In the current implementation we must schedule all tasks to cause a conflict (state 0x2) because on unlock we only notify any task if the lock is in the conflict state. This behavior means that with high contention and a short critical section the tasks will be effectively spinning in the scheduler queue. With the extra state the proposed implementation has enough information to know if there are other tasks to be notified or not, which means we can always notify one task at a time while preserving the optimized path of not notifying if there are no tasks waiting. To improve throughput for short critical sections we also introduce a bounded amount of spinning before attempting to park. ### Results Not spinning on the scheduler queue greatly reduces the CPU utilization of the following example: ```julia function example() lock = ReentrantLock() @sync begin for i in 1:10000 Threads.@Spawn begin @lock lock begin sleep(0.001) end end end end end @time example() ``` Current: ``` 28.890623 seconds (101.65 k allocations: 7.646 MiB, 0.25% compilation time) ```  Proposed: ``` 22.806669 seconds (101.65 k allocations: 7.814 MiB, 0.35% compilation time) ```  In a micro-benchmark where 8 threads contend for a single lock with a very short critical section we see a ~2x improvement. Current: ``` 8-element Vector{Int64}: 6258688 5373952 6651904 6389760 6586368 3899392 5177344 5505024 Total iterations: 45842432 ``` Proposed: ``` 8-element Vector{Int64}: 12320768 12976128 10354688 12845056 7503872 13598720 13860864 11993088 Total iterations: 95453184 ``` ~~In the uncontended scenario the extra bookkeeping causes a 10% throughput reduction:~~ EDIT: I reverted _trylock to the simple case to recover the uncontended throughput and now both implementations are on the same ballpark (without hurting the above numbers). In the uncontended scenario: Current: ``` Total iterations: 236748800 ``` Proposed: ``` Total iterations: 237699072 ``` Closes #56182

I'm not following how, could you explain, please? |

|

Channel uses a ReentrantLock, fixing this fixes channel put. |

For the lock, yes, but it notifies all waiters of the condition variable https://github.com/JuliaLang/julia/blob/v1.11.2/base/channels.jl#L386: # notify all, since some of the waiters may be on a "fetch" call.

notify(c.cond_take, nothing, true, false) |

|

wait, what the hell is this doing? why would we notify waiters of the lock before we unlock? Isn't that just going to cause a bunch of contention for no reason? |

https://en.wikipedia.org/wiki/Monitor_(synchronization)#Condition_variables_2 |

…uliaLang#56814) (#200) I propose a change in the implementation of the `ReentrantLock` to improve its overall throughput for short critical sections and fix the quadratic wake-up behavior where each unlock schedules **all** waiting tasks on the lock's wait queue. This implementation follows the same principles of the `Mutex` in the [parking_lot](https://github.com/Amanieu/parking_lot/tree/master) Rust crate which is based on the Webkit [WTF::ParkingLot](https://webkit.org/blog/6161/locking-in-webkit/) class. Only the basic working principle is implemented here, further improvements such as eventual fairness will be proposed separately. The gist of the change is that we add one extra state to the lock, essentially going from: ``` 0x0 => The lock is not locked 0x1 => The lock is locked by exactly one task. No other task is waiting for it. 0x2 => The lock is locked and some other task tried to lock but failed (conflict) ``` To: ``` ``` In the current implementation we must schedule all tasks to cause a conflict (state 0x2) because on unlock we only notify any task if the lock is in the conflict state. This behavior means that with high contention and a short critical section the tasks will be effectively spinning in the scheduler queue. With the extra state the proposed implementation has enough information to know if there are other tasks to be notified or not, which means we can always notify one task at a time while preserving the optimized path of not notifying if there are no tasks waiting. To improve throughput for short critical sections we also introduce a bounded amount of spinning before attempting to park. Not spinning on the scheduler queue greatly reduces the CPU utilization of the following example: ```julia function example() lock = ReentrantLock() @sync begin for i in 1:10000 Threads.@Spawn begin @lock lock begin sleep(0.001) end end end end end @time example() ``` Current: ``` 28.890623 seconds (101.65 k allocations: 7.646 MiB, 0.25% compilation time) ```  Proposed: ``` 22.806669 seconds (101.65 k allocations: 7.814 MiB, 0.35% compilation time) ```  In a micro-benchmark where 8 threads contend for a single lock with a very short critical section we see a ~2x improvement. Current: ``` 8-element Vector{Int64}: 6258688 5373952 6651904 6389760 6586368 3899392 5177344 5505024 Total iterations: 45842432 ``` Proposed: ``` 8-element Vector{Int64}: 12320768 12976128 10354688 12845056 7503872 13598720 13860864 11993088 Total iterations: 95453184 ``` ~~In the uncontended scenario the extra bookkeeping causes a 10% throughput reduction:~~ EDIT: I reverted _trylock to the simple case to recover the uncontended throughput and now both implementations are on the same ballpark (without hurting the above numbers). In the uncontended scenario: Current: ``` Total iterations: 236748800 ``` Proposed: ``` Total iterations: 237699072 ``` Closes JuliaLang#56182 Co-authored-by: André Guedes <[email protected]>

I propose a change in the implementation of the

ReentrantLockto improve its overall throughput for short critical sections and fix the quadratic wake-up behavior where each unlock schedules all waiting tasks on the lock's wait queue.This implementation follows the same principles of the

Mutexin the parking_lot Rust crate which is based on the Webkit WTF::ParkingLot class. Only the basic working principle is implemented here, further improvements such as eventual fairness will be proposed separately.The gist of the change is that we add one extra state to the lock, essentially going from:

To:

In the current implementation we must schedule all tasks to cause a conflict (state 0x2) because on unlock we only notify any task if the lock is in the conflict state. This behavior means that with high contention and a short critical section the tasks will be effectively spinning in the scheduler queue.

With the extra state the proposed implementation has enough information to know if there are other tasks to be notified or not, which means we can always notify one task at a time while preserving the optimized path of not notifying if there are no tasks waiting. To improve throughput for short critical sections we also introduce a bounded amount of spinning before attempting to park.

Results

Not spinning on the scheduler queue greatly reduces the CPU utilization of the following example:

Current:

Proposed:

In a micro-benchmark where 8 threads contend for a single lock with a very short critical section we see a ~2x improvement.

Current:

Proposed:

In the uncontended scenario the extra bookkeeping causes a 10% throughput reduction:EDIT: I reverted _trylock to the simple case to recover the uncontended throughput and now both implementations are on the same ballpark (without hurting the above numbers).

In the uncontended scenario:

Current:

Proposed:

Closes #56182