merge in local branch for v3.0 release #64

Avoid shell=True security issues with Popen

…eepspeedai#6488) * Handles an edge case when building `gds` where `CUDA_HOME` is not defined on ROCm systems

**Auto-generated PR to update version.txt after a DeepSpeed release** Released version - 0.15.1 Author - @loadams Co-authored-by: loadams <[email protected]>

Co-authored-by: Olatunji Ruwase <[email protected]>

Set the default value of `verbose` in op_builder/xxx.py/is_compatible() to False to quiet warnings. Add a verbose check before op_builder/xxx.py/is_compatible()/self.warning(...); otherwise the verbose arg has no effect. --------- Co-authored-by: Logan Adams <[email protected]>

…dai#6484) Co-authored-by: Logan Adams <[email protected]>

Simple changes to fix the Intel cpu example link and add more xpu examples. Signed-off-by: roger feng <[email protected]>

…5878) In some multi-node environments such as SLURM, some environment variables contain special characters and can trigger errors when exported. For example, SLURM sets `SLURM_JOB_CPUS_PER_NODE=64(x2)` when two nodes with 64 CPUs each are requested. Exporting this variable with `runner.add_export` adds the command `export SLURM_JOB_CPUS_PER_NODE=64(x2)` when launching subprocesses, which causes a bash error because `(` is a special character in bash:

```
[2024-08-07 16:56:24,651] [INFO] [runner.py:568:main] cmd = pdsh -S -f 1024 -w server22,server27 export PYTHONPATH=/public/home/grzhang/code/CLIP-2; export SLURM_JOB_CPUS_PER_NODE=64(x2); ...
server22: bash: -c: line 0: syntax error near unexpected token `('
```

This PR wraps the environment variables in a pair of `"` so that they are treated as strings. Co-authored-by: Logan Adams <[email protected]>
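The quoting fix can be sketched as follows. This is a minimal illustration, not the code in the DeepSpeed runner: both helper names are hypothetical, the first assumes the double quotes go around the value, and the second uses `shlex.quote` from the standard library, a stricter alternative that the PR does not claim to use.

```python
import shlex


def export_double_quoted(name: str, value: str) -> str:
    # Approach described in the PR (assumed form): wrap the value in double quotes.
    return f'export {name}="{value}"'


def export_shell_quoted(name: str, value: str) -> str:
    # Assumption, not from the PR: shlex.quote single-quotes any value that
    # contains characters the shell would otherwise interpret.
    return f"export {name}={shlex.quote(value)}"


print(export_double_quoted("SLURM_JOB_CPUS_PER_NODE", "64(x2)"))
print(export_shell_quoted("SLURM_JOB_CPUS_PER_NODE", "64(x2)"))
```

Double quotes are enough for `(` and `)`, but the shell still expands `$` and backticks inside them, which is why the second variant may be worth considering for arbitrary values.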

…k" (deepspeedai#6508) Reverts deepspeedai#5328. After offline discussion with @YangQun1, we agreed that there is no memory effect, as the clear_lp_grads flag triggers zero_() ops, which just zero buffers and do not free any memory. The only outcome is compute overhead.

Avoid security issues of `shell=True` in subprocess --------- Co-authored-by: Logan Adams <[email protected]>
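As a general illustration of the pattern (not the code changed in this PR), passing an argument list to `subprocess` avoids shell interpretation of untrusted input. The helper name `run_without_shell` is hypothetical.

```python
import subprocess
import sys


def run_without_shell(args: list) -> str:
    # With a list and the default shell=False, each element reaches the
    # child process as one literal argument; no shell parses it.
    completed = subprocess.run(args, capture_output=True, text=True, check=True)
    return completed.stdout


# A value that would run a second command if it were pasted into a shell string.
untrusted = "hello; echo injected"
output = run_without_shell(
    [sys.executable, "-c", "import sys; print(sys.argv[1])", untrusted]
)
print(output)
```

Here the `;` is printed back as ordinary text instead of terminating the command.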

…epspeedai#6517) Changes from deepspeedai#4724 broke support for torch<2.0 in the flops profiler as the scaled_dot_product_attention [wasn't added](https://pytorch.org/docs/2.0/generated/torch.nn.functional.scaled_dot_product_attention.html#torch.nn.functional.scaled_dot_product_attention) until a beta version in torch 2.0 Resolved: deepspeedai#5534 Todo: - [ ] Test this - [ ] Issue resolution with users.

Added Intel Gaudi to the list of accelerators in the setup guide. Co-authored-by: sakell <[email protected]> Co-authored-by: Logan Adams <[email protected]>

Adding the new tests in huggingface/accelerate#3097 caused the nv-accelerate-v100 tests to fail. Due to other CI issues we didn't notice this at first. This just skips the problematic test for now. cc: @stas00 / @muellerzr

Use msgpack for P2P communication in pipeline engine. Co-authored-by: Logan Adams <[email protected]>

Add performance tuning utilities: `ds_nvme_tune` and `ds_io`. Update tutorial with tuning section. --------- Co-authored-by: Ubuntu <[email protected]> Co-authored-by: Joe Mayer <[email protected]>

### Description This PR includes Cambricon MLU accelerator support. With this PR, DeepSpeed supports MLU as backend for training and inference tasks. --------- Co-authored-by: Logan Adams <[email protected]>

The ZeRO 1/2 optimizer performs incorrect gradient accumulation in the path for ZeRO2 + Offloading. This issue is caused by two main reasons:

1) The micro_step_id in the ZeRO 1/2 optimizer is:
- Initialized to 0 in the constructor.
- Reset to -1 during the backward pass.

For example, given a gradient accumulation step of 4, the micro_step_id changes as follows:
- For the first global step: 1, 2, 3, 4.
- Subsequently: 0, 1, 2, 3.

2) Gradients are copied to the buffer on the first micro step and accumulated in the buffer during the following micro steps. However, the current code incorrectly copies gradients at steps that are not at the accumulation boundary.

This PR aligns the micro_step_id initialization in both the constructor and the backward pass, and corrects the condition for copying and accumulating gradients. Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]>
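The counter mismatch described above can be reproduced with a small simulation. This is a toy model, not DeepSpeed code: `micro_step_ids` is a hypothetical helper, and it assumes the id is incremented once per micro step and reset once per global step.

```python
def micro_step_ids(initial: int, reset_value: int,
                   accumulation_steps: int, global_steps: int) -> list:
    """Return the micro_step_id observed at each micro step, grouped by global step."""
    micro_step_id = initial
    history = []
    for _ in range(global_steps):
        seen = []
        for _ in range(accumulation_steps):
            micro_step_id += 1          # assumed: one increment per micro step
            seen.append(micro_step_id)
        history.append(seen)
        micro_step_id = reset_value     # assumed: reset once per global step
    return history


# Mismatched start values (0 in the constructor, -1 on reset), as described above.
print(micro_step_ids(initial=0, reset_value=-1, accumulation_steps=4, global_steps=2))
# Aligned start values: every global step sees the same ids.
print(micro_step_ids(initial=-1, reset_value=-1, accumulation_steps=4, global_steps=2))
```

With mismatched values the first global step never observes id 0, so any "first micro step" check keyed on that value behaves differently in the first global step than in later ones.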

…eedai#6564) When setting zero3 leaf modules to a higher-level module and running with torch.compile, ZeROOrderedDict raises a few errors. First, it doesn't support deep copy because it has no constructor that can be called without parameters. Second, it doesn't check that the ds_status attribute exists on a param before accessing it. Change contributed by Haifeng Chen. Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]>
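The deep-copy failure can be reproduced with plain `OrderedDict` subclasses. The classes below are hypothetical stand-ins, not ZeROOrderedDict itself; the sketch relies on `copy.deepcopy` rebuilding an `OrderedDict` subclass by calling its class with no arguments.

```python
import copy
from collections import OrderedDict


class RequiresParent(OrderedDict):
    # Stand-in for a dict whose constructor has a required parameter.
    def __init__(self, parent_module, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self._parent_module = parent_module


class OptionalParent(OrderedDict):
    # Same dict, but constructible with no arguments.
    def __init__(self, parent_module=None, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self._parent_module = parent_module


try:
    copy.deepcopy(RequiresParent("module"))
    strict_copy_failed = False
except TypeError:
    strict_copy_failed = True  # deepcopy could not call RequiresParent()

relaxed = OptionalParent("module")
relaxed["weight"] = [1, 2, 3]
relaxed_copy = copy.deepcopy(relaxed)
print(strict_copy_failed, relaxed_copy["weight"], relaxed_copy._parent_module)
```

For the second error, a guard such as `hasattr(param, "ds_status")` before reading the attribute is the usual shape of the check.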

In the DeepNVMe GDS update, many functions were made more abstract and some files were added. These changes break zero-infinity on XPU. To bring this feature back, this PR: 1. Modifies the aio opbuilder for the new files. 2. Adds a custom cpu_op_desc_t for XPU users. (XPU doesn't handle buffer alignment here.) --------- Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]>

…ai#6011) This PR adds the following APIs to offload model, optimizer, and engine states.

```python
def offload_states(self,
                   include: Container[OffloadStateTypeEnum] = None,
                   device: OffloadDeviceEnum = OffloadDeviceEnum.cpu,
                   pin_memory: bool = True,
                   non_blocking: bool = False) -> None:
    """Move the ZeRO optimizer buffers to the specified device.

    Arguments:
        include: Optional. The set of states to offload. If not provided, all states are offloaded.
        device: Optional. The device to move the ZeRO optimizer buffers to.
        pin_memory: Optional. Whether to pin the memory of the offloaded states.
        non_blocking: Optional. Whether to offload the states asynchronously.
    """
    ...

def offload_states_back(self, non_blocking: bool = False) -> None:
    ...
```

Here is the typical usage.

```python
# Offload after forward, backward, and step
model.offload_states()
# Do something requiring a lot of device memory
...
# Load states back to device memory
model.offload_states_back()
```

You can selectively offload states to balance the offloading overhead and memory saving.

```python
model.offload_states(include=set([OffloadStateTypeEnum.hp_params, OffloadStateTypeEnum.opt_states]),
                     device=OffloadDeviceEnum.cpu)
```

Performance (4.3B parameters / 4x A100)

- Environment (4x A100, [benchmark script](https://gist.github.com/tohtana/05d5faba5068cf839abfc7b1e38b85e4))
  - Average Device to Host transfer: 2.45 GB/s, aggregated: 9.79 GB/s
  - Average Host to Device transfer: 11.05 GB/s, aggregated: 44.19 GB/s
- Mem (allocated by PyTorch)
  - Before offload 18.2GB
  - After offloading 17.7MB
- Time ([benchmark script](https://github.com/microsoft/DeepSpeedExamples/tree/tohtana/offload_states/training/offload_states), offloading time/loading time)

python output_table.py

| |pin_memory=0 non_blocking=0|pin_memory=0 non_blocking=1|pin_memory=1 non_blocking=0|pin_memory=1 non_blocking=1|
|--:|---------------------------|---------------------------|---------------------------|---------------------------|
| 1|4.34 / 3.42 |4.99 / 2.37 |6.5 / 2.42 |6.0 / 2.39 |
| 2|9.9 / 3.28 |5.1 / 2.34 |6.21 / 2.42 |6.25 / 2.45 |
| 3|9.92 / 3.19 |6.71 / 2.35 |6.33 / 2.38 |5.93 / 2.42 |
| 4|9.55 / 2.82 |7.11 / 2.39 |6.9 / 2.38 |6.5 / 2.43 |
| 5|4.4 / 3.35 |6.04 / 2.41 |6.26 / 2.41 |6.32 / 2.47 |
| 6|4.4 / 3.57 |6.58 / 2.42 |6.88 / 2.4 |6.35 / 2.43 |
| 7|9.51 / 3.12 |6.9 / 2.39 |6.9 / 2.39 |6.46 / 2.4 |
| 8|4.77 / 3.64 |6.69 / 2.39 |7.39 / 2.42 |6.56 / 2.46 |
| 9|9.5 / 3.07 |7.18 / 2.42 |6.67 / 2.39 |7.38 / 2.46 |

TODO:
- Enable offloading to a NVMe storage -> NVMe support is non-trivial. I suggest adding the support in another PR
- [DONE] Discard buffer (and recreate it) instead of offloading. We don't need to restore the contiguous buffer for reduce.
- [DONE] Check pin_memory improves performance or not

---------

Co-authored-by: Logan Adams <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]>

to allow running inference tasks using bfloat16 --------- Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]> Co-authored-by: Logan Adams <[email protected]>

We use a simple model + deepspeed zero 3 + torch.compile and count the number of graph breaks to demonstrate the current status of combining deepspeed + torch.compile. --------- Co-authored-by: Masahiro Tanaka <[email protected]>

…eepspeedai#6583) HF accelerate implemented fixes here: huggingface/accelerate#3131 This means we can revert the changes from deepspeedai#6574

…ch workflow triggers (deepspeedai#6584) Changes from deepspeedai#6472 caused the no-torch workflow that is an example of how we build the DeepSpeed release package to fail (so we caught this before a release, see more in deepspeedai#6402). These changes also copy the style used to include torch in other accelerator op_builder implementations, such as npu [here](https://github.com/microsoft/DeepSpeed/blob/master/op_builder/npu/fused_adam.py#L8) and hpu [here](https://github.com/microsoft/DeepSpeed/blob/828ddfbbda2482412fffc89f5fcd3b0d0eba9a62/op_builder/hpu/fused_adam.py#L15). This also updates the no-torch workflow to run on all changes to the op_builder directory. The test runs quickly and shouldn't add any additional testing burden there. Resolves: deepspeedai#6576

Fixes deepspeedai#6585 Use shell=True for subprocess.check_output() in case of ROCm commands. Do not use shlex.split() since command string has wildcard expansion. Signed-off-by: Jagadish Krishnamoorthy <[email protected]>
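The reason `shlex.split()` alone does not work here is that it only tokenizes; wildcard expansion is done by the shell. The sketch below shows that, plus one shell-free alternative using `glob`. The alternative is an assumption for illustration and is not what this PR does (the PR keeps `shell=True`); `expand_wildcards` is a hypothetical helper and assumes POSIX-style paths.

```python
import glob
import os
import shlex
import tempfile


def expand_wildcards(command: str) -> list:
    # Tokenize like a POSIX shell, then expand wildcard tokens with glob.
    args = []
    for token in shlex.split(command):
        matches = sorted(glob.glob(token)) if any(c in token for c in "*?[") else []
        args.extend(matches or [token])  # keep the token if nothing matched
    return args


with tempfile.TemporaryDirectory() as workdir:
    for name in ("a.txt", "b.txt", "c.log"):
        open(os.path.join(workdir, name), "w").close()
    pattern = os.path.join(workdir, "*.txt")
    literal_args = shlex.split(f"ls {pattern}")        # '*' stays literal
    expanded_args = expand_wildcards(f"ls {pattern}")  # '*' replaced by matches
    expanded_names = [os.path.basename(p) for p in expanded_args[1:]]

print(literal_args[1].endswith("*.txt"), expanded_names)
```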

Work in progress to ensure we meet SSF best practices: https://www.bestpractices.dev/en/projects/9530

The SD workflow needed updates for the move to pydantic 2 that had never been made. Passing nv-sd workflow [here](https://github.com/microsoft/DeepSpeed/actions/runs/11239699283)

**Auto-generated PR to update version.txt after a DeepSpeed release** Released version - 0.16.4 Author - @loadams Co-authored-by: loadams <[email protected]>

@jeffra and I fixed this many years ago, so bringing this doc to a correct state. --------- Signed-off-by: Stas Bekman <[email protected]>

Description This PR includes Tecorigin SDAA accelerator support. With this PR, DeepSpeed supports SDAA as backend for training tasks. --------- Signed-off-by: siqi <[email protected]> Co-authored-by: siqi <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]>

More information on libuv in pytorch: https://pytorch.org/tutorials/intermediate/TCPStore_libuv_backend.html

Issue tracking the prevalence of the error on Windows (unresolved at the time of this PR): pytorch/pytorch#139990

LibUV github: https://github.com/libuv/libuv

Windows error:

```
  File "C:\hostedtoolcache\windows\Python\3.12.7\x64\Lib\site-packages\torch\distributed\rendezvous.py", line 189, in _create_c10d_store
    return TCPStore(
           ^^^^^^^^^
RuntimeError: use_libuv was requested but PyTorch was build without libuv support
```

use_libuv isn't well supported on Windows in pytorch <2.4, so we need to guard around this case.

---------

Signed-off-by: Logan Adams <[email protected]>
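The condition being guarded (Windows together with pytorch <2.4) can be expressed as a small predicate. This is only a sketch of the condition: both function names are hypothetical, and what the PR actually does when the condition holds is not shown here.

```python
import sys


def parse_major_minor(version: str) -> tuple:
    # "2.3.1+cu121" -> (2, 3); only the first two components are needed.
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def libuv_needs_guard(platform_name: str, torch_version: str) -> bool:
    # True for the case described above: Windows with pytorch older than 2.4.
    return platform_name == "win32" and parse_major_minor(torch_version) < (2, 4)


print(libuv_needs_guard("win32", "2.3.1+cu121"))
print(libuv_needs_guard("win32", "2.4.0"))
print(libuv_needs_guard(sys.platform, "2.3.0"))
```

In real use the second argument would come from `torch.__version__`; it is passed in as a string here so the sketch runs without PyTorch installed.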

Signed-off-by: Logan Adams <[email protected]>

@fukun07 and I discovered a bug when using the `offload_states` and `reload_states` APIs of the Zero3 optimizer. When using grouped parameters (for example, in weight decay or grouped lr scenarios), the order of the parameters mapping in `reload_states` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L2953)) does not correspond with the initialization of `self.lp_param_buffer` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L731)), which leads to misaligned parameter loading. This issue was overlooked by the corresponding unit tests ([here](https://github.com/deepspeedai/DeepSpeed/blob/master/tests/unit/runtime/zero/test_offload_states.py)), so we fixed the bug in our PR and added the corresponding unit tests. --------- Signed-off-by: Wei Wu <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]>

Signed-off-by: Logan Adams <[email protected]>

Following changes in PyTorch trace rules, my previous PR to avoid graph breaks caused by the logger is no longer relevant. Instead, I've added this functionality to torch dynamo - pytorch/pytorch@16ea0dd. This commit allows the user to configure torch to ignore logger methods and avoid the associated graph breaks. To ignore logger methods, set `os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1"`. To ignore logger methods except for specific methods (for example, info and isEnabledFor), set `os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1"` and `os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = "info, isEnabledFor"`. Signed-off-by: ShellyNR <[email protected]> Co-authored-by: snahir <[email protected]>
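The two settings can be wrapped in a small helper. The environment variable names and values are the ones given above; the helper name `disable_logs_while_compiling` is hypothetical, and the sketch assumes the variables need to be set before compilation starts.

```python
import os


def disable_logs_while_compiling(keep_methods: tuple = ()) -> None:
    # Ask torch dynamo to skip logger methods during compilation.
    os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1"
    if keep_methods:
        # Methods listed here stay enabled, e.g. "info, isEnabledFor".
        os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = ", ".join(keep_methods)
    else:
        os.environ.pop("LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE", None)


disable_logs_while_compiling(keep_methods=("info", "isEnabledFor"))
print(os.environ["DISABLE_LOGS_WHILE_COMPILING"],
      os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"])
```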

The partition tensor doesn't need to move to the current device when meta load is used. Signed-off-by: Lai, Yejing <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]>

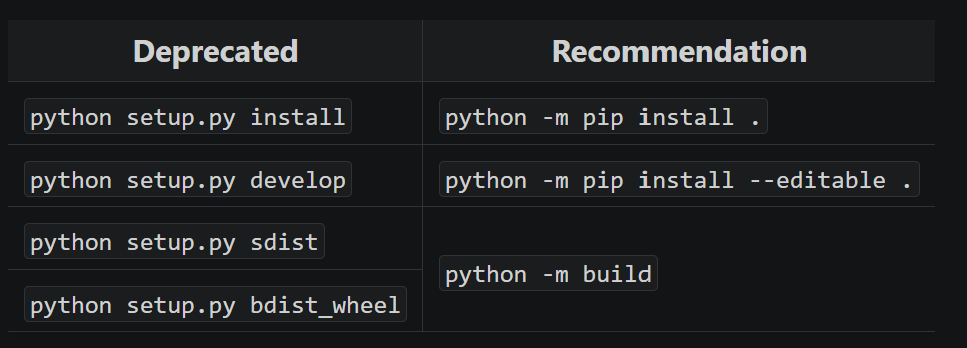

…t` (deepspeedai#7069) With future changes coming to pip/python/etc, we need to modify to no longer call `python setup.py ...` and replace it instead: https://packaging.python.org/en/latest/guides/modernize-setup-py-project/#should-setup-py-be-deleted  This means we need to install the build package which is added here as well. Additionally, we pass the `--sdist` flag to only build the sdist rather than the wheel as well here. --------- Signed-off-by: Logan Adams <[email protected]>

…eepspeedai#7076) This reverts commit 8577bd2. Fixes: deepspeedai#7072

Add deepseekv3 autotp. Signed-off-by: Lai, Yejing <[email protected]>

Fixes: deepspeedai#7082 --------- Signed-off-by: Logan Adams <[email protected]>

The latest transformers release causes failures in the cpu-torch-latest test, so we pin it for now to unblock other PRs. --------- Signed-off-by: Logan Adams <[email protected]>

Co-authored-by: Logan Adams <[email protected]>

…/runner (deepspeedai#7086) Signed-off-by: Logan Adams <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]>

These jobs haven't been run in a long time and were originally used when compatibility with torch <2 was more important. Signed-off-by: Logan Adams <[email protected]>

Fix CI issues by using new dlpack [api](https://pytorch.org/docs/stable/_modules/torch/utils/dlpack.html#from_dlpack) Minor pre-commit fixes. Signed-off-by: Olatunji Ruwase <[email protected]>

…deepspeedai#7081) This PR is a continuation of the efforts to improve Deepspeed performance when using PyTorch compile. The `instrument_w_nvtx` decorator is used to instrument code with NVIDIA Tools Extension (NVTX) markers for profiling and visualizing code execution on GPUs. Along with executing the function itself, `instrument_w_nvtx` makes calls to `nvtx.range_push` and `nvtx.range_pop` which can't be traced by Dynamo. That's why this decorator causes a graph break. The impact on performance can be significant due to numerous uses of the decorator throughout the code. We propose a simple solution: Don't invoke the sourceless functions when torch is compiling. --------- Signed-off-by: Max Kovalenko <[email protected]> Co-authored-by: Logan Adams <[email protected]>
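The proposed behaviour can be sketched as a decorator that skips the range calls while compiling. This is not the DeepSpeed `instrument_w_nvtx` implementation: the factory name is hypothetical, and the push, pop, and "is compiling" callables are injected so the sketch runs without PyTorch or NVTX.

```python
import functools


def skip_ranges_when_compiling(range_push, range_pop, is_compiling):
    """Build a decorator that wraps calls in push/pop markers unless compiling."""
    def decorator(func):
        @functools.wraps(func)
        def wrapped(*args, **kwargs):
            if is_compiling():
                # Call the function directly so the tracer never sees the markers.
                return func(*args, **kwargs)
            range_push(func.__qualname__)
            try:
                return func(*args, **kwargs)
            finally:
                range_pop()
        return wrapped
    return decorator


events = []
state = {"compiling": False}
instrument = skip_ranges_when_compiling(
    range_push=lambda name: events.append(("push", name)),
    range_pop=lambda: events.append(("pop",)),
    is_compiling=lambda: state["compiling"],
)


@instrument
def add(a, b):
    return a + b


eager_result = add(1, 2)        # markers recorded
state["compiling"] = True
compiled_result = add(3, 4)     # markers skipped
print(eager_result, compiled_result, events)
```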

…ckward hooks (deepspeedai#7062) This PR is part of the effort to improve DeepSpeed performance when using PyTorch compile. There is a known [bug](pytorch/pytorch#128942) in torch.compile which causes a graph break when an inner class is defined within a method that is being compiled. The following would then appear in the log: `[__graph_breaks] torch._dynamo.exc.Unsupported: missing: LOAD_BUILD_CLASS` This is the case with the inner classes `PreBackwardFunctionForModule` and `PostBackwardFunctionModule`. While there is an open PyTorch [PR#133805](pytorch/pytorch#133805) for this, we can solve the issue by moving the inner classes into the initialization code. The graph breaks and the corresponding log messages no longer occur. --------- Signed-off-by: Max Kovalenko <[email protected]> Signed-off-by: Olatunji Ruwase <[email protected]> Signed-off-by: inkcherry <[email protected]> Signed-off-by: shaomin <[email protected]> Signed-off-by: Stas Bekman <[email protected]> Signed-off-by: siqi <[email protected]> Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Wei Wu <[email protected]> Signed-off-by: ShellyNR <[email protected]> Signed-off-by: Lai, Yejing <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: inkcherry <[email protected]> Co-authored-by: wukong1992 <[email protected]> Co-authored-by: shaomin <[email protected]> Co-authored-by: Hongwei Chen <[email protected]> Co-authored-by: Logan Adams <[email protected]> Co-authored-by: loadams <[email protected]> Co-authored-by: Stas Bekman <[email protected]> Co-authored-by: siqi654321 <[email protected]> Co-authored-by: siqi <[email protected]> Co-authored-by: Wei Wu <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]> Co-authored-by: Shelly Nahir <[email protected]> Co-authored-by: snahir <[email protected]> Co-authored-by: Yejing-Lai <[email protected]>
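The restructuring can be illustrated in plain Python. The classes below are hypothetical stand-ins rather than the DeepSpeed hook classes: a class statement inside a hot method runs again on every call, while one placed in initialization code runs once and is then only looked up.

```python
class InnerClassPerCall:
    def hook(self):
        # The class statement executes on each call, creating a new class
        # object every time (the pattern reported as a graph break above).
        class PreBackward:
            pass
        return PreBackward


class InnerClassAtInit:
    def __init__(self):
        # The class statement executes once, during initialization.
        class PreBackward:
            pass
        self._pre_backward_cls = PreBackward

    def hook(self):
        # The hot path only reads an attribute.
        return self._pre_backward_cls


per_call = InnerClassPerCall()
at_init = InnerClassAtInit()
print(per_call.hook() is per_call.hook())  # distinct class objects per call
print(at_init.hook() is at_init.hook())    # same class object every call
```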

Running pre-commit on all files may change files that are not part of the current branch. The updated script only runs pre-commit on the files that have been changed in the current branch. Signed-off-by: Hongwei <[email protected]>

This PR is a continuation of the efforts to improve Deepspeed performance when using PyTorch compile. The `fetch_sub_module()` routine makes use of the `frozenset` which is problematic because: 1. `iter_params` returns an iterable over model parameters 2. `frozenset` wraps this iterable, making it unmodifiable 3. PyTorch’s compilation process cannot infer how `frozenset` interacts with tensors, leading to a graph break. If we replace the `frozenset` with a modifiable `set`, then there is no longer such graph break. Signed-off-by: Max Kovalenko <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]>

Suppose qkv_linear_weight_shape = [in_features, out_features]. With the fused_qkv gemm optimization, the qkv linear weight shape is [3, in_features, out_features]. This causes a "ValueError: too many values to unpack (expected 2)" error when printing the model. Solution: take the last two dimensions of the weight shape as in_features and out_features. Signed-off-by: Lai, Yejing <[email protected]> Co-authored-by: Hongwei Chen <[email protected]> Co-authored-by: Logan Adams <[email protected]>
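A minimal sketch of the fix, using plain tuples in place of tensor shapes. The function name `linear_features` is hypothetical and the sizes are made up for illustration.

```python
def linear_features(weight_shape: tuple) -> tuple:
    # Slicing the last two dimensions works for both [in, out] and [3, in, out].
    in_features, out_features = weight_shape[-2:]
    return in_features, out_features


plain_shape = (1024, 4096)
fused_shape = (3, 1024, 4096)

try:
    in_features, out_features = fused_shape  # the failing pattern
    unpack_failed = False
except ValueError:
    unpack_failed = True  # too many values to unpack (expected 2)

print(unpack_failed, linear_features(plain_shape), linear_features(fused_shape))
```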

As a part of joining the Linux Foundation AI&Data it makes sense to rename the X/Twitter accounts associated with DeepSpeed. --------- Signed-off-by: Logan Adams <[email protected]>

…o deepspeedai-master

Tested and working for DP/MP/PP, along with TE on latest neox main

No description provided.