- Python 3.8.5
- Ubuntu 22.04
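Once an environment is created, a quick check can confirm that the active interpreter matches the listed Python version and that PyTorch can see a GPU. This is a minimal sketch that is not part of the repository. The helper names `python_matches` and `torch_status` are hypothetical.

```python
import importlib.util
import sys

# Hypothetical default: 3.8 is the version listed above for the main environment.
EXPECTED_PYTHON = (3, 8)


def python_matches(expected=EXPECTED_PYTHON, actual=None):
    """Return True if major.minor of `actual` (default: running interpreter) equals `expected`."""
    if actual is None:
        actual = sys.version_info
    return tuple(actual[:2]) == tuple(expected[:2])


def torch_status():
    """Describe whether PyTorch is importable and, if so, whether CUDA is visible."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed in this environment"
    import torch  # imported lazily so the check also runs before PyTorch is installed

    return f"torch {torch.__version__}, CUDA available: {torch.cuda.is_available()}"


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "matches 3.8:", python_matches())
    print(torch_status())
```

For the biomedical environment, which uses Python 3.10, call `python_matches((3, 10))` instead.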

To set up the environment for the main models (under `models`), follow the steps below:

Step 1: Create a Python environment (optional). If you wish to use a dedicated Python environment, you can create one as follows:

`conda create -n pyt1.11 python=3.8.5`

Step 2: Install PyTorch with CUDA (optional). If you want PyTorch with CUDA support, you can install it as follows:

`conda install pytorch==1.11 torchvision torchaudio cudatoolkit=11.3 -c pytorch`

Step 3: Install the Python dependencies by running the following command:

`pip install -r requirements.txt`

To set up the environment for the biomedical models (under `biomedical_models`), follow the steps below:

Step 1: Create a Python environment (optional). If you wish to use a dedicated Python environment, you can create one as follows:

`conda create -n pyt2.2 python=3.10`

Step 2: Install PyTorch with CUDA (optional). If you want PyTorch with CUDA support, you can install it as follows:

`conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia`

Step 3: Install the Python dependencies by running the following command:

`pip install -r requirements1.txt`

Next, prepare the data:

- Unzip all the zip files located in the `data` folder, including those in its subfolders.
- Place the `kg`, `ct`, and `gold_subset` folders, extracted from their respective zip files, under the `data` folder.
- Locate the `local_context_dataset` folder unzipped from `data/local_context_dataset.zip` and move it to `models/T5`.
- Copy the file `e2t.json` into the following folders: `models\GPT*\`, `models\Iterative\`, and `preprocess\`.
- Locate the `og`, `sim`, `kg`, and `ct` folders under the `biomedical` folder and copy them to the corresponding folder under `biomedical_models\*\data`.
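The unzip and `e2t.json` copy steps can also be scripted. The sketch below is hypothetical and is not shipped with the repository. It assumes that each archive should be extracted into the folder that contains it. It also requires you to pass the location of `e2t.json`, because the README does not say where that file lives. Moving `local_context_dataset` and the biomedical folders is left as a manual step.

```python
import shutil
import zipfile
from pathlib import Path


def unzip_all(data_dir):
    """Extract every .zip under data_dir (including subfolders) next to the archive.

    Assumption: extracting into the archive's own folder yields the expected layout.
    Returns the sorted list of archives that were extracted.
    """
    archives = sorted(Path(data_dir).rglob("*.zip"))
    for archive in archives:
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(archive.parent)
    return archives


def copy_e2t(e2t_path, repo_root):
    """Copy e2t.json into models/GPT*/, models/Iterative/ and preprocess/.

    Only folders that already exist are used, so a mistyped folder is never created.
    Returns the paths of the copied files.
    """
    root = Path(repo_root)
    targets = sorted(p for p in root.glob("models/GPT*") if p.is_dir())
    targets += [root / "models" / "Iterative", root / "preprocess"]
    copied = []
    for target in targets:
        if target.is_dir():
            copied.append(Path(shutil.copy2(e2t_path, target)))
    return copied
```

From the repository root, for example, run `unzip_all("data")` followed by `copy_e2t("path/to/e2t.json", ".")`, where the `e2t.json` path is a placeholder.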

Next, run the preprocessing steps:

- Navigate to `preprocess` and run `bash preprocess.sh`.
- Navigate to `models\GPTFS` and run `process.py`.
- Navigate to `biomedical_models\*` and run `preprocess.py`.
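The three preprocessing steps can be driven from a single script. This is a hypothetical sketch that is not part of the repository. `preprocessing_steps` and `run_steps` are illustrative names. The sketch runs the Python steps with the current interpreter (`sys.executable`) and includes only the biomedical folders that contain a `preprocess.py`. The biomedical step may need to be run from the `pyt2.2` environment instead.

```python
import subprocess
import sys
from pathlib import Path


def preprocessing_steps(repo_root):
    """Return (working_directory, command) pairs for the preprocessing steps."""
    root = Path(repo_root)
    steps = [
        (root / "preprocess", ["bash", "preprocess.sh"]),
        (root / "models" / "GPTFS", [sys.executable, "process.py"]),
    ]
    # One entry per biomedical_models/* folder that actually has a preprocess.py.
    for model_dir in sorted(root.glob("biomedical_models/*")):
        if (model_dir / "preprocess.py").is_file():
            steps.append((model_dir, [sys.executable, "preprocess.py"]))
    return steps


def run_steps(steps, dry_run=True):
    """Print each step and, unless dry_run, execute it, stopping at the first failure."""
    for cwd, cmd in steps:
        print(f"[{cwd}] $ {' '.join(cmd)}")
        if not dry_run:
            subprocess.run(cmd, cwd=cwd, check=True)
```

First call `run_steps(preprocessing_steps("."))` to review the commands, then call it again with `dry_run=False`. Because `check=True` is set, a failing step raises `CalledProcessError` and the later steps do not run.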

The project data includes the following components:

- `data/local_context_dataset.zip`: the training, validation, and testing files for our task.
- `data/kg/*.json`: the original Information Extraction (IE) results for all paper abstracts.
- `data/ct/*.csv`: the citation network for all papers.
- `data/gold_subset`: our gold annotation subsets.
- `data/biomedical.zip`: our biochemical datasets.
- `evaluation`: sample evaluation code.
- `result/sentence_generation.zip`: GPT-3.5/GPT-4 results for the initial round.
- `result/iterative_novelty_boosting.zip`: GPT-3.5/GPT-4 results for iterative novelty boosting.
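Before training, you may want to check what the `kg` and `ct` files contain. The sketch below is hypothetical and does not rely on any particular schema. It assumes that each `data/kg/*.json` file holds a single JSON document (not JSON Lines) and that each `data/ct/*.csv` file starts with a header row. The README does not document either format.

```python
import csv
import json
from pathlib import Path


def summarize_kg(kg_dir):
    """Map each *.json file name to (top-level type name, length or None)."""
    summary = {}
    for path in sorted(Path(kg_dir).glob("*.json")):
        with path.open(encoding="utf-8") as f:
            obj = json.load(f)  # assumes one JSON document per file
        size = len(obj) if hasattr(obj, "__len__") else None
        summary[path.name] = (type(obj).__name__, size)
    return summary


def summarize_ct(ct_dir):
    """Map each *.csv file name to (header row, number of data rows)."""
    summary = {}
    for path in sorted(Path(ct_dir).glob("*.csv")):
        with path.open(newline="", encoding="utf-8") as f:
            reader = csv.reader(f)
            header = next(reader, [])  # assumes the first row is a header
            summary[path.name] = (header, sum(1 for _ in reader))
    return summary
```

For example, `summarize_ct("data/ct")` returns the header and row count of every citation-network file.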

To run the main models, set up the environment first:

`conda activate pyt1.11`

To train the T5 model under `models\T5*`, run the following command:

`bash finetune_*.sh`

To test the T5 model under `models\T5*`, run the following command:

`bash eval_*.sh`

To test the GPT-3.5 model under `models\GPT*`, run the following command:

`bash eval3.sh`

After getting the GPT-3.5 results, you can also get GPT-4 results from the same input by running the following command:

`python gpt4.py`

After getting the GPT-4 results, first copy all of them into the iterative folder. You can then run the first two iterations of iterative novelty boosting with the following commands:

`python calculate_sim.py`

`python gpt4_iter1.py`

`python calculate_sim1.py`

`python gpt4_iter2.py`

For the biomedical models, set up the environment first:

`conda activate pyt2.2`

Download the Meditron-7b model from Hugging Face and put it under `biomedical_models\model`.
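You can also do the download from Python with `huggingface_hub.snapshot_download`. This sketch rests on several assumptions. This README does not give the repository id `epfl-llm/meditron-7b`. The checkpoint may be gated, in which case you must accept its terms and pass an access token. The README also does not say whether the scripts expect the files directly in `biomedical_models\model` or in a subfolder. `meditron_target` and `download_meditron` are hypothetical helpers.

```python
from pathlib import Path

# Assumption: the Hugging Face repository id of Meditron-7B (not stated in this README).
MEDITRON_REPO = "epfl-llm/meditron-7b"


def meditron_target(repo_root):
    """Folder the README asks for: biomedical_models/model."""
    return Path(repo_root) / "biomedical_models" / "model"


def download_meditron(repo_root, token=None):
    """Download a snapshot of the checkpoint into biomedical_models/model."""
    # Imported here so meditron_target works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = meditron_target(repo_root)
    target.mkdir(parents=True, exist_ok=True)
    return snapshot_download(repo_id=MEDITRON_REPO, local_dir=str(target), token=token)
```

Call `download_meditron(".", token="...")` from the repository root, supplying your own access token if the model is gated.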

To train the T5 model under `biomedical_models\*\`, run the following command:

`bash train.sh`

To test the trained model under `biomedical_models\*\`, run the following command:

`python inf_generator.py`

@article{wang2023learning,

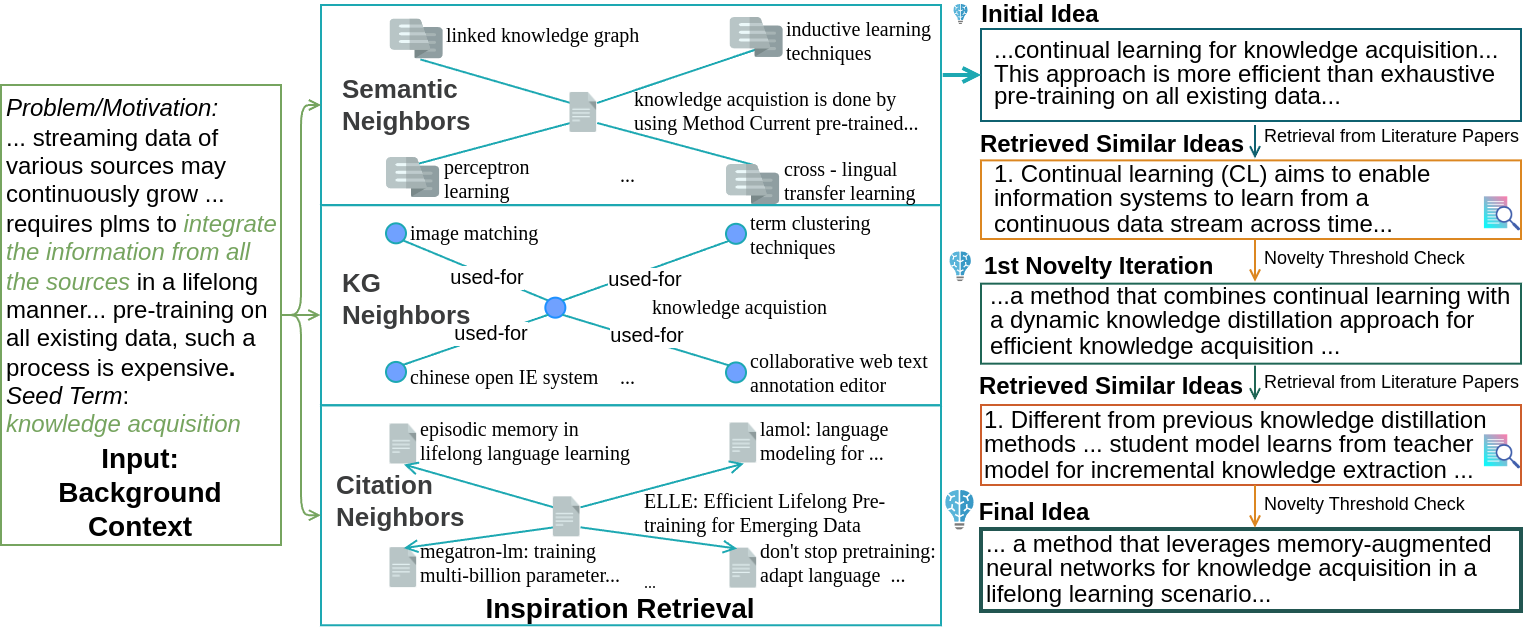

title={SciMON: Scientific Inspiration Machines Optimized for Novelty},

author={Wang, Qingyun and Downey, Doug and Ji, Heng and Hope, Tom},

journal={arXiv preprint arXiv:2305.14259},

year={2023}

}