
fix: Use throw instead of reject in broker facade (#10735)

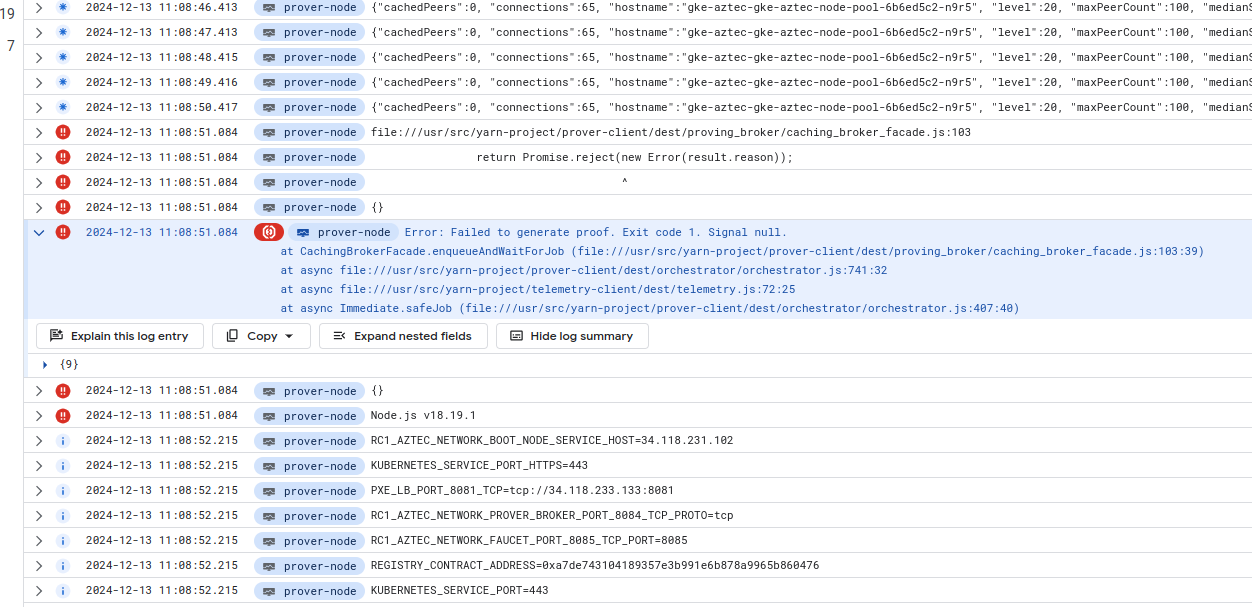

We were seeing proving failures for the initial epoch in rc1. In Loki, it looked as if the proof was ongoing and then the node suddenly restarted (see the "Logger initialized" line at 11:08:54). Looking at the same time range in Google Cloud, which fetches logs from stderr instead of from the OTEL collector, showed an error that was not in Loki and was not JSON-formatted, meaning it didn't come from pino. This is an error emitted by the node process directly.

I was able to reproduce this in a local setup by spawning the prover node, agent, and broker as separate processes (I could not reproduce it if the node and broker were in the _same_ process) and causing the bb-prover to fail on AVM proofs. The errors in all caps are temporary log lines I added for testing:

```
[18:44:22.950] VERBOSE: prover-client:orchestrator Updating archive tree with block 5 header 0x1e2aefd32ac7a3f31826e996e637ca6c8a053bb69c9087318f63c65311fd804a
[18:44:22.954] VERBOSE: prover-client:orchestrator Orchestrator finalised block 5
[18:44:23.194] ERROR: prover-client:caching-prover-broker PROVING JOB FAILED id=PUBLIC_VM:7e3848db3c57254c1d8875839cdaa9d330c517d0bba84a3c805bc3d03962d3f9: FAILING AVM
file:///home/santiago/Projects/aztec3-packages-3/yarn-project/prover-client/dest/proving_broker/caching_broker_facade.js:104
return Promise.reject(new Error(result.reason));
                      ^

Error: FAILING AVM
    at CachingBrokerFacade.enqueueAndWaitForJob (file:///home/santiago/Projects/aztec3-packages-3/yarn-project/prover-client/dest/proving_broker/caching_broker_facade.js:104:39)
    at async file:///home/santiago/Projects/aztec3-packages-3/yarn-project/prover-client/dest/orchestrator/orchestrator.js:742:37
    at async file:///home/santiago/Projects/aztec3-packages-3/yarn-project/telemetry-client/dest/telemetry.js:72:25
    at async Immediate.safeJob (file:///home/santiago/Projects/aztec3-packages-3/yarn-project/prover-client/dest/orchestrator/orchestrator.js:407:40)

Node.js v18.19.1
~/Projects/aztec3-packages-3/yarn-project/aztec (master)$
```

This killed the prover node process itself, from inside `CachingBrokerFacade.enqueueAndWaitForJob`. Changing the `return Promise.reject(new Error(result.reason));` line in the facade to `throw new Error(result.reason);` fixed the issue:

```
[18:52:04.239] VERBOSE: simulator:public-processor Processed tx 0x22f9872451dcd3b5ea4157d3d5b210b48fc19122d97204803bbf74b9e365398b with 1 public calls {"txHash":"0x22f9872451dcd3b5ea4157d3d5b210b48fc19122d97204803bbf74b9e365398b","txFee":25842656343661200,"revertCode":0,"gasUsed":{"totalGas":{"daGas":15360,"l2Gas":476820},"teardownGas":{"daGas":0,"l2Gas":0}},"publicDataWriteCount":8,"nullifierCount":3,"noteHashCount":0,"contractClassLogCount":0,"unencryptedLogCount":0,"privateLogCount":1,"l2ToL1MessageCount":0}
[18:52:04.239] INFO: prover-client:orchestrator Received transaction: 0x22f9872451dcd3b5ea4157d3d5b210b48fc19122d97204803bbf74b9e365398b
[18:52:04.261] ERROR: prover-client:orchestrator GETTING AVM PROOF
[18:52:04.287] VERBOSE: prover-node:epoch-proving-job Processed all 1 txs for block 2 {"blockNumber":2,"blockHash":"0x11c03a9cf30308de9e0c30f5c08db6029db2f9196e86f2b92cc7141d9de33254","uuid":"2aa2de51-ce77-4d0f-a4e6-58e593ec0eca"}
[18:52:04.297] VERBOSE: prover-client:orchestrator Block 2 completed. Assembling header.
[18:52:04.301] VERBOSE: prover-client:orchestrator Updating archive tree with block 2 header 0x11c03a9cf30308de9e0c30f5c08db6029db2f9196e86f2b92cc7141d9de33254
[18:52:04.304] VERBOSE: prover-client:orchestrator Orchestrator finalised block 2
[18:52:05.186] ERROR: prover-client:caching-prover-broker PROVING JOB FAILED id=PUBLIC_VM:9fed284ba66a64c2237419640ed5af6e4f466b17a42b36ffd0039542d7cb8443: FAILING AVM
[18:52:05.188] ERROR: prover-client:orchestrator ERROR IN AVM PROOF
[18:52:05.188] WARN: prover-client:orchestrator Error thrown when proving AVM circuit, but AVM_PROVING_STRICT is off, so faking AVM proof and carrying on. Error: Error: FAILING AVM.
[18:52:06.925] VERBOSE: prover-client:orchestrator Orchestrator completed root rollup for epoch 2
[18:52:06.925] INFO: prover-node:epoch-proving-job Finalised proof for epoch 2 {"epochNumber":2,"uuid":"2aa2de51-ce77-4d0f-a4e6-58e593ec0eca","duration":3014.239808999002}
[18:52:06.932] INFO: sequencer:publisher SubmitEpochProof proofSize=38 bytes
[18:52:06.958] VERBOSE: sequencer:publisher Sent L1 transaction 0x4ee81acf36558c1b0945b6bf75201853a00c0d22123e278c1b3cad303d064c32 {"gasLimit":296230,"maxFeePerGas":"1.500241486","maxPriorityFeePerGas":"1.44"}
[18:52:07.949] INFO: archiver Updated proven chain to block 2 (epoch 2) {"provenBlockNumber":2,"provenEpochNumber":2}
[18:52:07.998] VERBOSE: world_state Finalized chain is now at block 2
[18:52:11.967] INFO: archiver Updated proven chain to block 2 (epoch 2) {"provenBlockNumber":2,"provenEpochNumber":2}
[18:52:15.994] INFO: archiver Updated proven chain to block 2 (epoch 2) {"provenBlockNumber":2,"provenEpochNumber":2}
```

This PR also tweaks a few logs along the way.
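The description does not spell out why `throw` behaves differently from `return Promise.reject(...)`, since both normally reject an async function's promise. One plausible mechanism is shown in the TypeScript sketch that follows. It assumes (this is not confirmed here) that the `return` sits inside a `try`/`finally` whose `finally` awaits something slow, such as cleanup over the network when the broker runs in another process. `return Promise.reject(...)` creates a rejected promise immediately, but the async function only adopts it once `finally` completes. If Node's end-of-tick rejection check runs in the meantime, it reports an unhandled rejection, and since Node.js 15 an unhandled rejection with no listener terminates the process. `throw` creates no separate promise, so the only rejection lands on the function's own promise, which the caller is already awaiting. `slowCleanup`, `withReturnReject`, `withThrow`, and `countUnhandled` are hypothetical names used only for illustration.

```typescript
// Hypothetical stand-in for cleanup that waits on real I/O, e.g. a call to a
// broker in another process. The name and the 20 ms delay are assumptions.
function slowCleanup(ms = 20): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

// Pattern before the fix: the rejected promise exists as soon as the `return`
// expression is evaluated, but nothing handles it until `finally` finishes.
async function withReturnReject(): Promise<string> {
  try {
    return Promise.reject(new Error('FAILING AVM'));
  } finally {
    await slowCleanup();
  }
}

// Pattern after the fix: `throw` creates no promise of its own; the error only
// rejects this function's promise, which the caller is already awaiting.
async function withThrow(): Promise<string> {
  try {
    throw new Error('FAILING AVM');
  } finally {
    await slowCleanup();
  }
}

// Runs `fn`, catches its error as an awaiting caller would, and returns how many
// 'unhandledRejection' events Node emitted while it ran.
async function countUnhandled(fn: () => Promise<unknown>): Promise<number> {
  let count = 0;
  const onUnhandled = (): void => {
    count += 1;
  };
  // While a listener is installed, Node emits the event instead of crashing.
  process.on('unhandledRejection', onUnhandled);
  try {
    await fn();
  } catch {
    // The caller still receives the error in both variants.
  } finally {
    process.off('unhandledRejection', onUnhandled);
  }
  return count;
}
```

Under these assumptions, `countUnhandled(withReturnReject)` should resolve to 1 and `countUnhandled(withThrow)` to 0, even though the caller catches the error in both cases. If the cleanup instead settled within the same microtask turn, the handler would be attached before Node's check ran, which may be why the crash did not reproduce with the node and broker in one process.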
