This repository contains samples of WebGL compute shaders. For details, see Intent to Implement: WebGL 2.0 Compute and WebGL 2.0 Compute Specification - Draft.

As of May 2019, WebGL compute shaders run only in Google Chrome on Windows or Linux, or in Microsoft Edge Insider Channels on Windows, launched with the command-line flags below.

- `--enable-webgl2-compute-context`

  Enables WebGL compute shaders and `WebGL2ComputeRenderingContext`.

  Alternatively, if your browser version supports it, enable this flag via about://flags/ by choosing "WebGL 2.0 Compute".

- `--use-angle=gl`

  Runs ANGLE with the OpenGL backend, because Shader Storage Buffer Object support is still being implemented and some use cases do not yet work well on the D3D backend.

  Some demos on this page need this flag.

  Alternatively, enable this flag via about://flags/ by choosing "Choose ANGLE graphics backend".

- `--use-cmd-decoder=passthrough`

  In some environments, the demos run correctly only after adding this flag. Try it if the two flags above are not enough.

- `--use-gl=angle`

  Runs OpenGL through ANGLE.

  On Linux, you must specify this flag explicitly. On Windows, it is not needed because ANGLE is used by default.
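
One way to confirm that the flags took effect is to request the compute context and check for `null`. The sketch below assumes the context ID is `webgl2-compute`; `getComputeContext` is a hypothetical helper name used only for illustration.

```javascript
// Hypothetical helper: returns a compute-capable context, or null when the
// browser was not launched with the required flags or the GPU/driver is
// unsupported.
// Assumption: the context ID string is 'webgl2-compute'.
function getComputeContext(canvas) {
  const gl = canvas.getContext('webgl2-compute');
  return gl || null;
}

// Browser usage (not executed here):
// const gl = getComputeContext(document.createElement('canvas'));
// if (!gl) console.log('WebGL 2.0 Compute is not available');
```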

Note that some environments cannot run WebGL compute shaders, especially those with an older GPU or display driver.

The WebGL 2.0 Compute API is still being implemented. For development, Chrome Canary is recommended because it receives the latest features and bug fixes nightly.

Interaction calculations such as a Boids simulation are well suited to compute shaders because they can run in parallel. This demo calculates the coordinates and velocity of each boid in a compute shader and draws the boids using instancing. The CPU does nothing except call the graphics API.
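
To see why this workload parallelizes, here is a simplified CPU-side sketch of one simulation step. It is not the demo's shader: it applies only a cohesion rule, and `stepBoids` and its parameters are hypothetical names. The point is that each boid reads the previous state and writes only its own slot, which maps to one compute shader invocation per boid.

```javascript
// Simplified sketch (cohesion rule only), assuming 2D positions and
// velocities stored interleaved as [x0, y0, x1, y1, ...].
function stepBoids(pos, vel, dt, cohesion) {
  const n = pos.length / 2;
  // Write into fresh arrays so every boid sees the same previous state.
  const nextPos = new Float32Array(pos.length);
  const nextVel = new Float32Array(vel.length);
  for (let i = 0; i < n; i++) {          // on the GPU: one invocation per i
    let cx = pos[2 * i];
    let cy = pos[2 * i + 1];
    if (n > 1) {
      // Centroid of all other boids.
      cx = 0;
      cy = 0;
      for (let j = 0; j < n; j++) {
        if (j === i) continue;
        cx += pos[2 * j];
        cy += pos[2 * j + 1];
      }
      cx /= n - 1;
      cy /= n - 1;
    }
    // Steer toward the centroid, then integrate the position.
    nextVel[2 * i] = vel[2 * i] + (cx - pos[2 * i]) * cohesion * dt;
    nextVel[2 * i + 1] = vel[2 * i + 1] + (cy - pos[2 * i + 1]) * cohesion * dt;
    nextPos[2 * i] = pos[2 * i] + nextVel[2 * i] * dt;
    nextPos[2 * i + 1] = pos[2 * i + 1] + nextVel[2 * i + 1] * dt;
  }
  return { pos: nextPos, vel: nextVel };
}
```

The inner loop over `j` is the interaction cost: every boid inspects every other boid, so the work grows quadratically with the boid count.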

Bitonic sort is a sorting algorithm that can be executed in parallel. This demo sorts an array with a selected number of elements on the CPU (JavaScript built-in sort()) and on the GPU (compute shader), then compares the elapsed times.

For the CPU sort, the demo simply measures the time to execute sort(). For the GPU sort, it measures the total time to copy the array to the GPU, execute bitonic sort in the compute shader, and copy the result back to the CPU.

Note that WebGL initialization time, such as shader compilation, is not included.
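
As a reference for the algorithm itself, the following is a CPU sketch of the bitonic sorting network, not the demo's shader code. It assumes the array length is a power of two; `bitonicSort` is a hypothetical name. For a fixed `(k, j)` pair every index `i` is independent, so a GPU version can plausibly run the innermost loop as one dispatch with one invocation per element.

```javascript
// CPU reference of the bitonic sorting network (in-place, ascending).
// Assumption: data.length is a power of two.
function bitonicSort(data) {
  const n = data.length;
  if (n === 0 || (n & (n - 1)) !== 0) {
    throw new Error('length must be a power of two');
  }
  for (let k = 2; k <= n; k *= 2) {          // size of the bitonic sequences
    for (let j = k >> 1; j > 0; j >>= 1) {   // compare distance within a stage
      for (let i = 0; i < n; i++) {          // independent: parallel on a GPU
        const partner = i ^ j;
        if (partner > i) {
          const ascending = (i & k) === 0;
          if ((data[i] > data[partner]) === ascending) {
            const tmp = data[i];
            data[i] = data[partner];
            data[partner] = tmp;
          }
        }
      }
    }
  }
  return data;
}

// Compare against the built-in sort and time the reference implementation.
// Typed arrays sort numerically without a comparator.
const input = Float32Array.from({ length: 1 << 12 }, () => Math.random());
const expected = input.slice().sort();
const t0 = performance.now();
const sorted = bitonicSort(input.slice());
const elapsedMs = performance.now() - t0;
console.log(`bitonic sort of ${input.length} elements: ${elapsedMs.toFixed(2)} ms`);
```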

This demo shows how to do ray tracing in a web browser using compute shaders. The ray tracer uses progressive rendering to obtain interactive, real-time frame rates.

Photorealistic rendering is hard to obtain using the regular WebGL pipeline. But thanks to WebGL Compute, we can now implement expensive rendering algorithms in the browser.

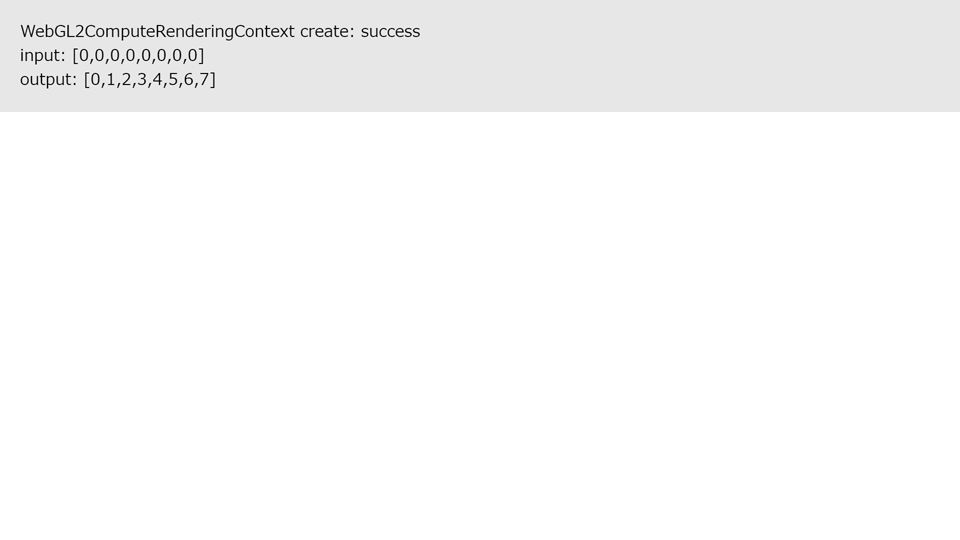

This sample is a first step toward implementing a compute shader. It copies an array defined on the CPU to the GPU as input data, writes each thread's index to the array through a Shader Storage Buffer Object in the compute shader, then copies the result back to the CPU and reads it.
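
The round trip described above can be sketched as follows. This is a minimal illustration rather than the sample's actual source: `runThreadIndexSample` is a hypothetical name, `gl` is assumed to be a compute-capable context, and the method and constant names (`COMPUTE_SHADER`, `SHADER_STORAGE_BUFFER`, `dispatchCompute`, `memoryBarrier`) are assumed to mirror OpenGL ES 3.1 as in the draft specification.

```javascript
// GLSL ES 3.10 compute shader: each of the 8 invocations writes its own index.
const computeShaderSource = `#version 310 es
layout (local_size_x = 8, local_size_y = 1, local_size_z = 1) in;
layout (std430, binding = 0) buffer SSBO {
  float data[];
} ssbo;
void main() {
  uint threadIndex = gl_GlobalInvocationID.x;
  ssbo.data[threadIndex] = float(threadIndex);
}`;

// Assumption: gl exposes the ES 3.1-style compute entry points.
function runThreadIndexSample(gl) {
  const shader = gl.createShader(gl.COMPUTE_SHADER);
  gl.shaderSource(shader, computeShaderSource);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    throw new Error(gl.getShaderInfoLog(shader));
  }
  const program = gl.createProgram();
  gl.attachShader(program, shader);
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    throw new Error(gl.getProgramInfoLog(program));
  }

  // Copy the CPU array into an SSBO attached to binding point 0.
  const input = new Float32Array(8);
  const ssbo = gl.createBuffer();
  gl.bindBuffer(gl.SHADER_STORAGE_BUFFER, ssbo);
  gl.bufferData(gl.SHADER_STORAGE_BUFFER, input, gl.DYNAMIC_COPY);
  gl.bindBufferBase(gl.SHADER_STORAGE_BUFFER, 0, ssbo);

  // Run one work group of 8 invocations.
  gl.useProgram(program);
  gl.dispatchCompute(1, 1, 1);
  // Make shader writes visible to the buffer read-back below.
  gl.memoryBarrier(gl.BUFFER_UPDATE_BARRIER_BIT);

  // Copy the result back to the CPU.
  const result = new Float32Array(8);
  gl.getBufferSubData(gl.SHADER_STORAGE_BUFFER, 0, result);
  return result;
}
```

In a browser, calling `runThreadIndexSample(canvas.getContext('webgl2-compute'))` would be the intended usage, assuming that context ID.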

We can bind a Shader Storage Buffer Object as ARRAY_BUFFER and use it as a vertex attribute in the vertex shader. The result calculated in the compute shader can therefore be used in the vertex shader without going through the CPU, which means there is no memory copy between CPU and GPU. This sample calculates particle coordinates in the compute shader, writes the result to a Shader Storage Buffer Object, then renders the particles using that buffer as an attribute in the vertex shader.
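
A minimal sketch of that compute-then-draw sequence follows. It is not the sample's code: `drawParticlesFromSsbo` and all of its parameters are hypothetical, the programs and buffer are assumed to be created elsewhere, each particle is assumed to be one `vec4` read at attribute location 0, and the compute entry points are assumed to mirror OpenGL ES 3.1.

```javascript
// Assumptions: gl is a compute-capable context; computeProgram writes one
// vec4 per particle into the SSBO at binding 0; renderProgram reads the
// position from attribute location 0; localSize matches local_size_x.
function drawParticlesFromSsbo(gl, computeProgram, renderProgram, ssbo, particleCount, localSize) {
  // 1. Compute pass: update particle coordinates in the SSBO.
  gl.bindBufferBase(gl.SHADER_STORAGE_BUFFER, 0, ssbo);
  gl.useProgram(computeProgram);
  gl.dispatchCompute(Math.ceil(particleCount / localSize), 1, 1);

  // 2. Make the shader writes visible to vertex attribute fetches.
  gl.memoryBarrier(gl.VERTEX_ATTRIB_ARRAY_BARRIER_BIT);

  // 3. Render pass: bind the same buffer object as ARRAY_BUFFER.
  //    No data is copied; only the binding target changes.
  gl.bindBuffer(gl.ARRAY_BUFFER, ssbo);
  gl.enableVertexAttribArray(0);
  gl.vertexAttribPointer(0, 4, gl.FLOAT, false, 0, 0);
  gl.useProgram(renderProgram);
  gl.drawArrays(gl.POINTS, 0, particleCount);
}
```

Using `vec4` rather than `vec3` per particle is a deliberate choice in this sketch: it keeps the buffer tightly packed under `std430`, so the same bytes can be read as a four-component float attribute without padding surprises.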

There are two ways to output results from a compute shader. One is to use an SSBO, as mentioned above; the other is to use a texture. A texture bound from the JavaScript side with bindImageTexture(), a new API added by WebGL 2.0 Compute, can be read and written by the compute shader through imageLoad()/imageStore(). Note that the texture passed to bindImageTexture() must be immutable, meaning it was allocated with texStorageXX() rather than texImageXX(). With this, we can create procedural textures or run image processing without any vertices.
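
The texture path can be sketched as below. This is an illustration under assumptions, not the sample's source: `createStorageTexture` and `imageShaderSource` are hypothetical names, and the argument order of `bindImageTexture` is assumed to follow `glBindImageTexture` from OpenGL ES 3.1 (unit, texture, level, layered, layer, access, format).

```javascript
// GLSL ES 3.10 compute shader that writes a gradient with imageStore().
// Image uniforms have no default precision, hence the explicit highp.
const imageShaderSource = `#version 310 es
layout (local_size_x = 16, local_size_y = 16, local_size_z = 1) in;
layout (rgba8, binding = 0) writeonly uniform highp image2D destTex;
void main() {
  ivec2 pixel = ivec2(gl_GlobalInvocationID.xy);
  vec2 uv = vec2(pixel) / vec2(imageSize(destTex));
  imageStore(destTex, pixel, vec4(uv, 0.0, 1.0));
}`;

// Assumption: gl is a compute-capable context.
function createStorageTexture(gl, width, height) {
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // texStorage2D allocates immutable storage, which image binding requires;
  // a texture allocated with texImage2D would not qualify.
  gl.texStorage2D(gl.TEXTURE_2D, 1, gl.RGBA8, width, height);
  // Expose mip level 0 of the texture on image unit 0 for shader writes.
  gl.bindImageTexture(0, texture, 0, false, 0, gl.WRITE_ONLY, gl.RGBA8);
  return texture;
}
```

With a program built from `imageShaderSource`, a dispatch of `Math.ceil(width / 16)` by `Math.ceil(height / 16)` work groups would cover the whole texture. If the dimensions are not multiples of 16, the shader would also need a bounds check on `pixel`.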