diff --git a/.github/pull_request_template.md b/.github/pull_request_template.md

index ff239510f..52a0aa5b4 100644

--- a/.github/pull_request_template.md

+++ b/.github/pull_request_template.md

@@ -22,7 +22,7 @@

If you need to run benchmark experiments for a performance-impacting changes:

- [ ] I have contacted @vwxyzjn to obtain access to the [openrlbenchmark W&B team](https://wandb.ai/openrlbenchmark).

-- [ ] I have used the [benchmark utility](/get-started/benchmark-utility/) to submit the tracked experiments to the [openrlbenchmark/cleanrl](https://wandb.ai/openrlbenchmark/cleanrl) W&B project, optionally with `--capture-video`.

+- [ ] I have used the [benchmark utility](/get-started/benchmark-utility/) to submit the tracked experiments to the [openrlbenchmark/cleanrl](https://wandb.ai/openrlbenchmark/cleanrl) W&B project, optionally with `--capture_video`.

- [ ] I have performed RLops with `python -m openrlbenchmark.rlops`.

- For new feature or bug fix:

- [ ] I have used the RLops utility to understand the performance impact of the changes and confirmed there is no regression.

diff --git a/.github/workflows/tests.yaml b/.github/workflows/tests.yaml

index ae4bc1540..ebdd57e4c 100644

--- a/.github/workflows/tests.yaml

+++ b/.github/workflows/tests.yaml

@@ -1,10 +1,5 @@

name: tests

on:

- push:

- paths-ignore:

- - '**/README.md'

- - 'docs/**/*'

- - 'cloud/**/*'

pull_request:

paths-ignore:

- '**/README.md'

@@ -15,8 +10,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04, macos-latest, windows-latest]

runs-on: ${{ matrix.os }}

steps:

@@ -58,8 +53,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04, macos-latest, windows-latest]

runs-on: ${{ matrix.os }}

steps:

@@ -94,8 +89,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04, macos-latest, windows-latest]

runs-on: ${{ matrix.os }}

steps:

@@ -120,8 +115,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

@@ -180,8 +175,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

@@ -194,29 +189,12 @@ jobs:

with:

poetry-version: ${{ matrix.poetry-version }}

- # mujoco_py tests

- - name: Install dependencies

- run: poetry install -E "pytest mujoco_py mujoco jax"

- - name: Run gymnasium migration dependencies

- run: poetry run pip install "stable_baselines3==2.0.0a1"

- - name: Downgrade setuptools

- run: poetry run pip install setuptools==59.5.0

- - name: install mujoco_py dependencies

- run: |

- sudo apt-get update && sudo apt-get -y install wget unzip software-properties-common \

- libgl1-mesa-dev \

- libgl1-mesa-glx \

- libglew-dev \

- libosmesa6-dev patchelf

- - name: Run mujoco_py tests

- run: poetry run pytest tests/test_mujoco_py.py

-

test-envpool-envs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

@@ -241,8 +219,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

@@ -267,8 +245,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

diff --git a/.github/workflows/utils_test.yaml b/.github/workflows/utils_test.yaml

index 8b1929503..cd668166f 100644

--- a/.github/workflows/utils_test.yaml

+++ b/.github/workflows/utils_test.yaml

@@ -15,8 +15,8 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: [3.8]

- poetry-version: [1.3.1]

+ python-version: ["3.8", "3.9", "3.10"]

+ poetry-version: ["1.7"]

os: [ubuntu-22.04]

runs-on: ${{ matrix.os }}

steps:

diff --git a/.gitignore b/.gitignore

index 4784f1086..1d4cfa0e4 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1,3 +1,5 @@

+slurm

+.aim

runs

balance_bot.xml

cleanrl/ppo_continuous_action_isaacgym/isaacgym/examples

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index ccb3fc71a..516cd23bc 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -56,10 +56,6 @@ repos:

name: poetry-export requirements-dm_control.txt

args: ["--without-hashes", "-o", "requirements/requirements-dm_control.txt", "-E", "dm_control"]

stages: [manual]

- - id: poetry-export

- name: poetry-export requirements-mujoco_py.txt

- args: ["--without-hashes", "-o", "requirements/requirements-mujoco_py.txt", "-E", "mujoco_py"]

- stages: [manual]

- id: poetry-export

name: poetry-export requirements-procgen.txt

args: ["--without-hashes", "-o", "requirements/requirements-procgen.txt", "-E", "procgen"]

diff --git a/README.md b/README.md

index 5e645ab46..790ad9933 100644

--- a/README.md

+++ b/README.md

@@ -191,3 +191,8 @@ If you use CleanRL in your work, please cite our technical [paper](https://www.j

url = {http://jmlr.org/papers/v23/21-1342.html}

}

```

+

+

+## Acknowledgement

+

+We thank [Hugging Face](https://huggingface.co/)'s cluster for providing GPU computational resources to this project.

diff --git a/benchmark/c51.sh b/benchmark/c51.sh

index fb46bb6b4..6aba77810 100644

--- a/benchmark/c51.sh

+++ b/benchmark/c51.sh

@@ -1,29 +1,29 @@

poetry install

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/c51.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/c51.py --no_cuda --track --capture_video" \

--num-seeds 3 \

--workers 9

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/c51_atari.py --track --capture-video" \

+ --command "poetry run python cleanrl/c51_atari.py --track --capture_video" \

--num-seeds 3 \

--workers 1

poetry install -E "jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

CUDA_VISIBLE_DEVICES=-1 xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/c51_jax.py --track --capture-video" \

+ --command "poetry run python cleanrl/c51_jax.py --track --capture_video" \

--num-seeds 3 \

--workers 1

poetry install -E "atari jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/c51_atari_jax.py --track --capture-video" \

+ --command "poetry run python cleanrl/c51_atari_jax.py --track --capture_video" \

--num-seeds 3 \

--workers 1

diff --git a/benchmark/cleanrl_1gpu.slurm_template b/benchmark/cleanrl_1gpu.slurm_template

new file mode 100644

index 000000000..b7c76c297

--- /dev/null

+++ b/benchmark/cleanrl_1gpu.slurm_template

@@ -0,0 +1,21 @@

+#!/bin/bash

+#SBATCH --job-name=low-priority

+#SBATCH --partition=production-cluster

+#SBATCH --gpus-per-task={{gpus_per_task}}

+#SBATCH --cpus-per-gpu={{cpus_per_gpu}}

+#SBATCH --ntasks={{ntasks}}

+#SBATCH --output=slurm/logs/%x_%j.out

+#SBATCH --array={{array}}

+#SBATCH --mem-per-cpu=12G

+#SBATCH --exclude=ip-26-0-146-[33,100,122-123,149,183,212,249],ip-26-0-147-[6,94,120,141],ip-26-0-152-[71,101,119,178,186,207,211],ip-26-0-153-[6,62,112,132,166,251],ip-26-0-154-[38,65],ip-26-0-155-[164,174,187,217],ip-26-0-156-[13,40],ip-26-0-157-27

+##SBATCH --nodelist=ip-26-0-147-204

+{{nodes}}

+

+env_ids={{env_ids}}

+seeds={{seeds}}

+env_id=${env_ids[$SLURM_ARRAY_TASK_ID / {{len_seeds}}]}

+seed=${seeds[$SLURM_ARRAY_TASK_ID % {{len_seeds}}]}

+

+echo "Running task $SLURM_ARRAY_TASK_ID with env_id: $env_id and seed: $seed"

+

+srun {{command}} --env-id $env_id --seed $seed #

diff --git a/benchmark/ddpg.sh b/benchmark/ddpg.sh

index 9f26b302e..3746b4d99 100755

--- a/benchmark/ddpg.sh

+++ b/benchmark/ddpg.sh

@@ -1,16 +1,22 @@

-poetry install -E "mujoco_py"

-python -c "import mujoco_py"

-xvfb-run -a python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 InvertedPendulum-v2 Humanoid-v2 Pusher-v2 \

- --command "poetry run python cleanrl/ddpg_continuous_action.py --track --capture-video" \

+poetry install -E "mujoco"

+python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/ddpg_continuous_action.py --track" \

--num-seeds 3 \

- --workers 1

+ --workers 18 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install -E "mujoco_py jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

-poetry run python -c "import mujoco_py"

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 \

- --command "poetry run python cleanrl/ddpg_continuous_action_jax.py --track --capture-video" \

+poetry install -E "mujoco jax"

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/ddpg_continuous_action_jax.py --track" \

--num-seeds 3 \

- --workers 1

+ --workers 18 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

diff --git a/benchmark/ddpg_plot.sh b/benchmark/ddpg_plot.sh

new file mode 100755

index 000000000..d36db199e

--- /dev/null

+++ b/benchmark/ddpg_plot.sh

@@ -0,0 +1,20 @@

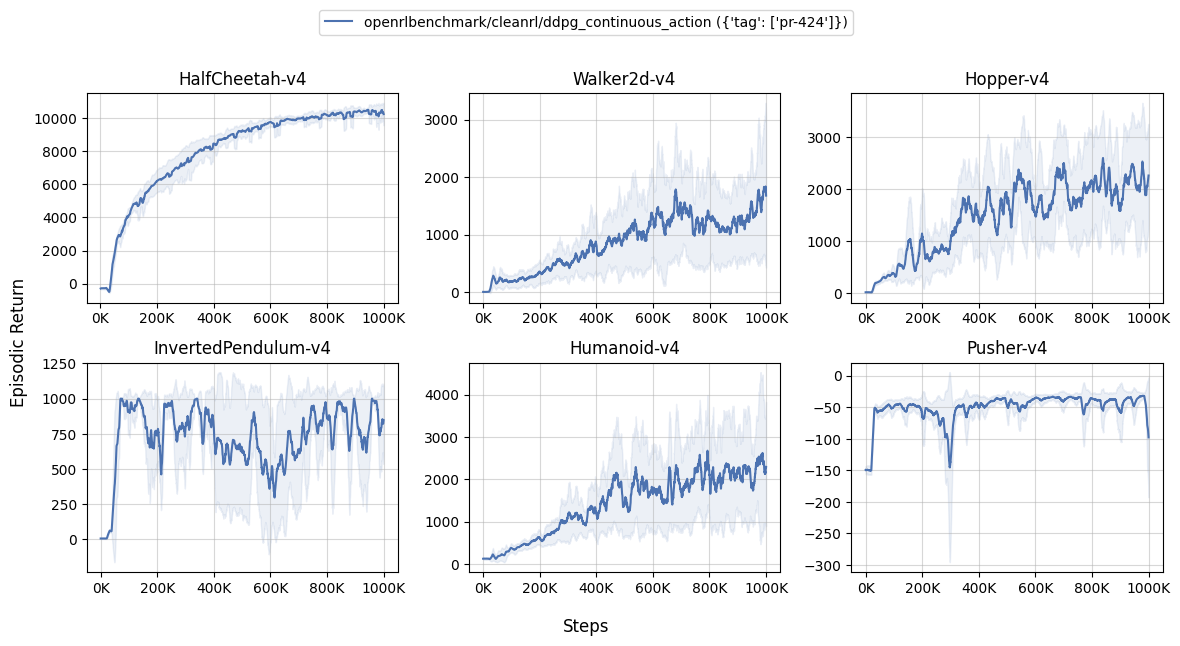

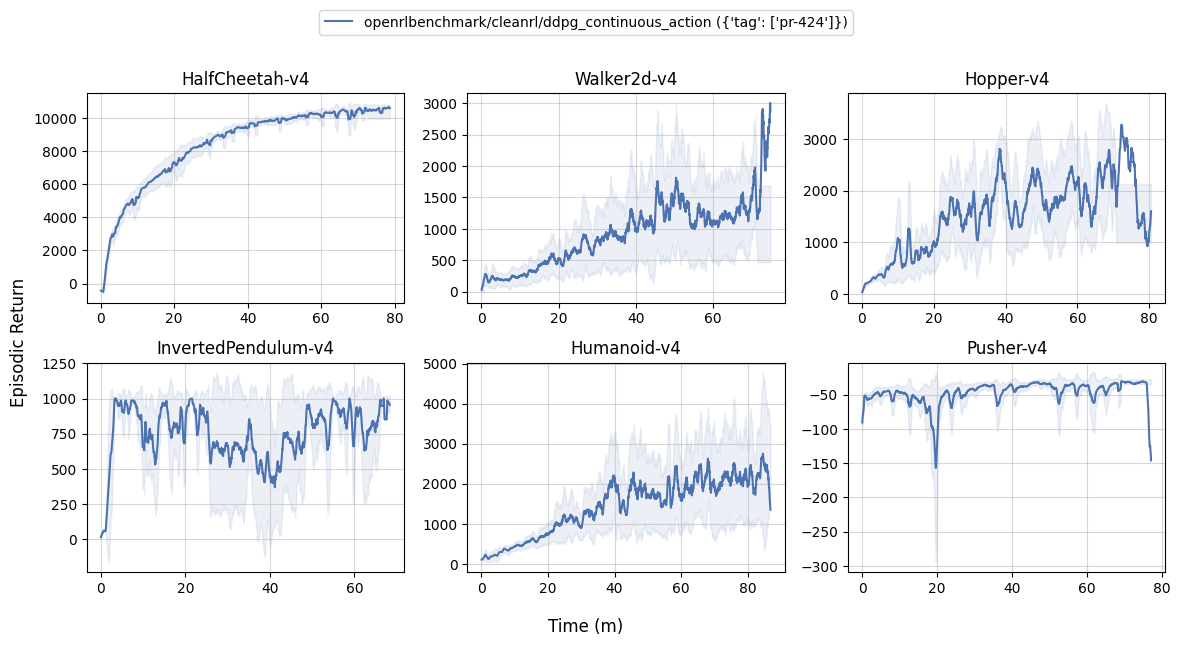

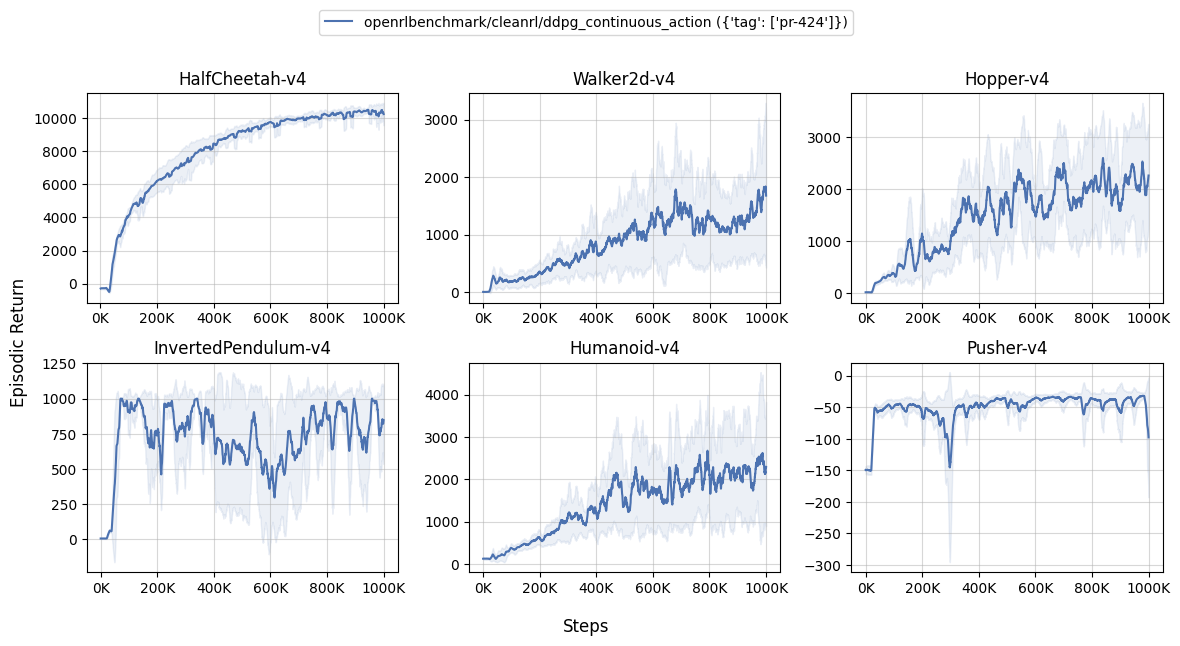

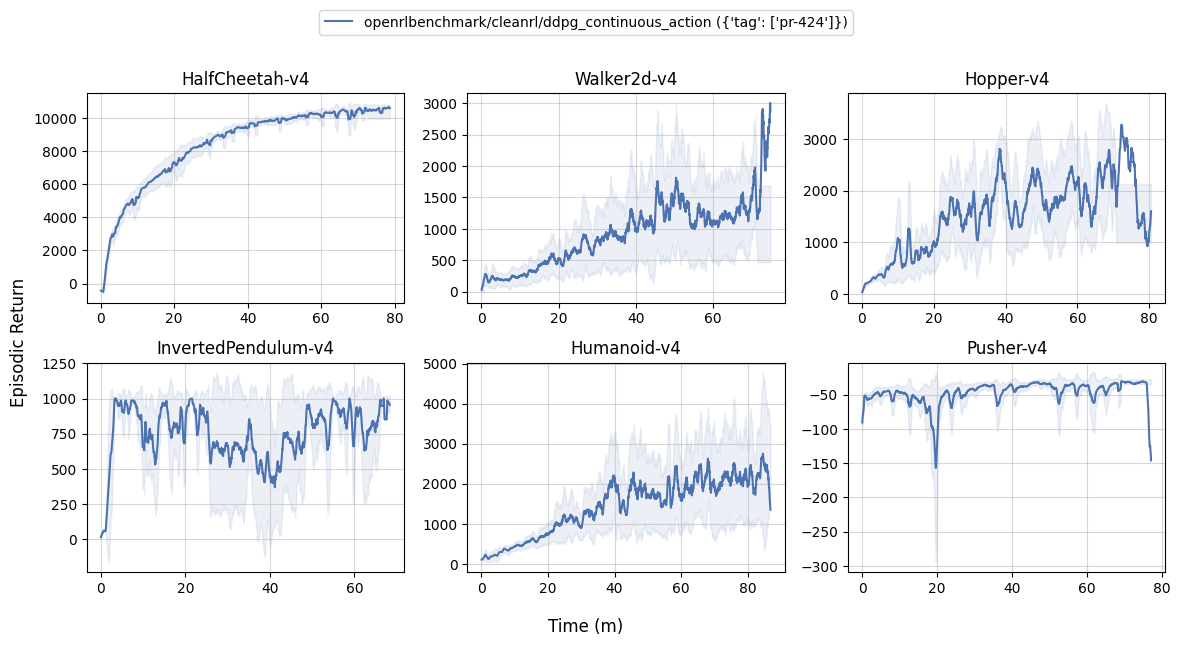

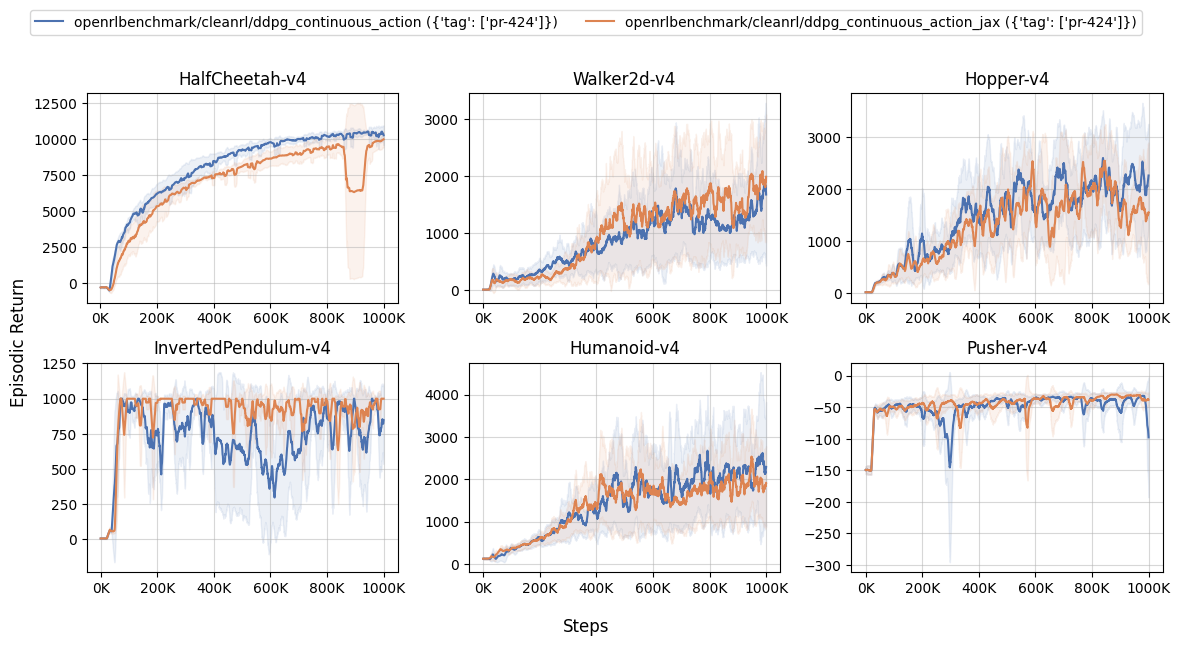

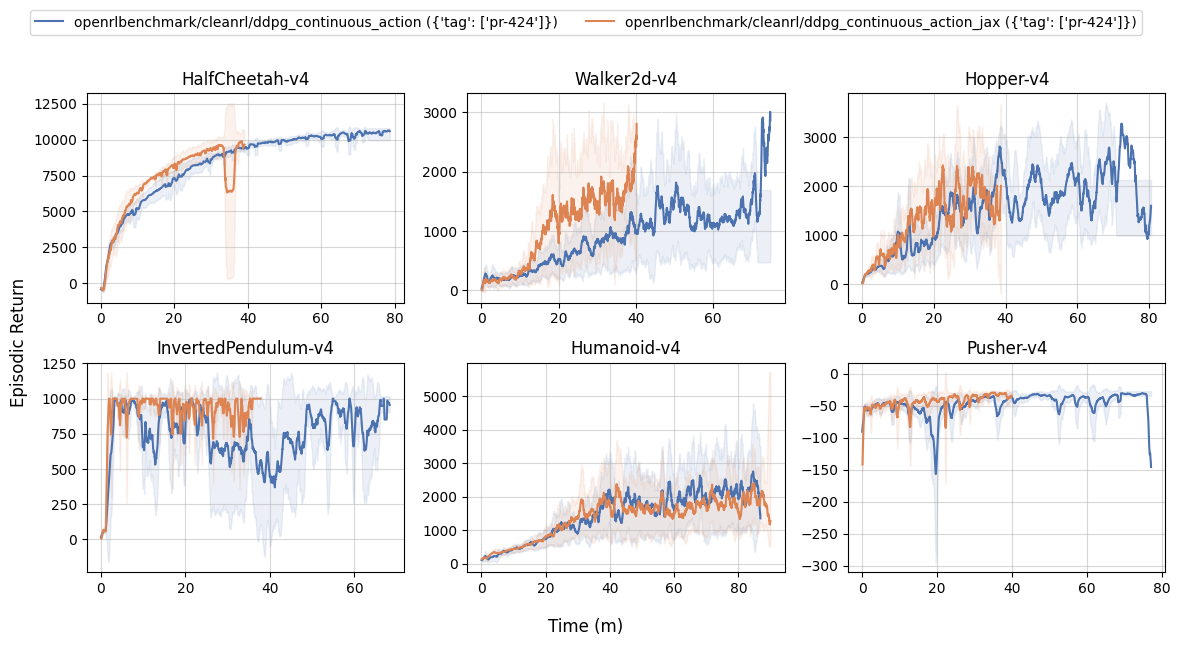

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ddpg_continuous_action?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ddpg \

+ --scan-history

+

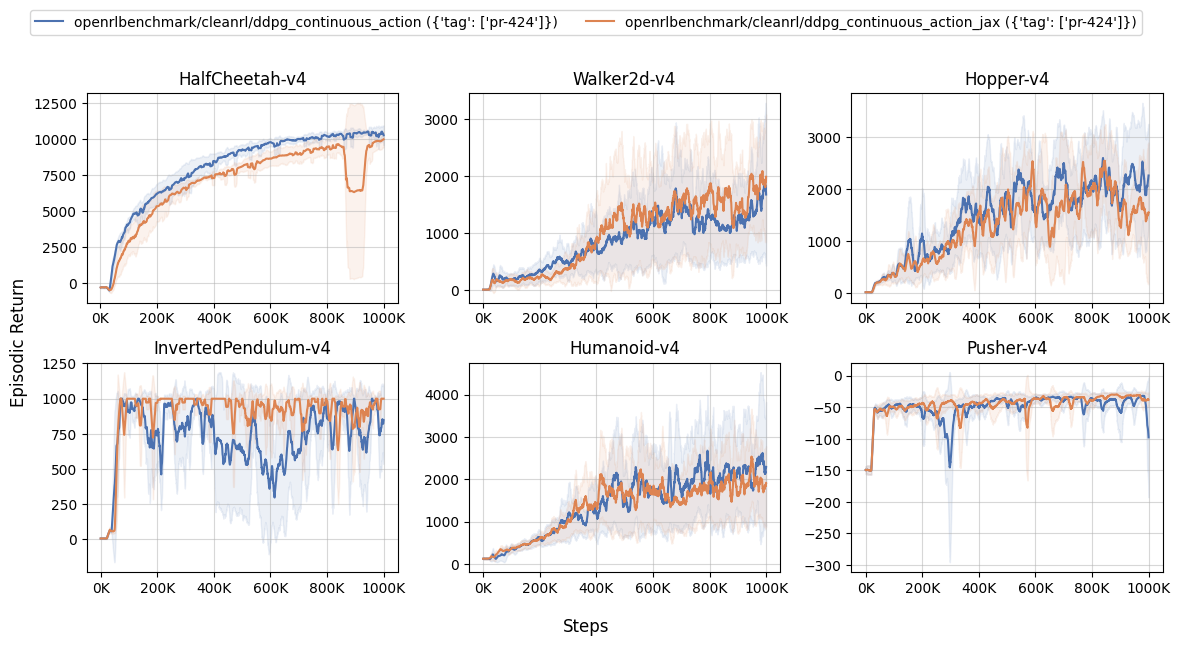

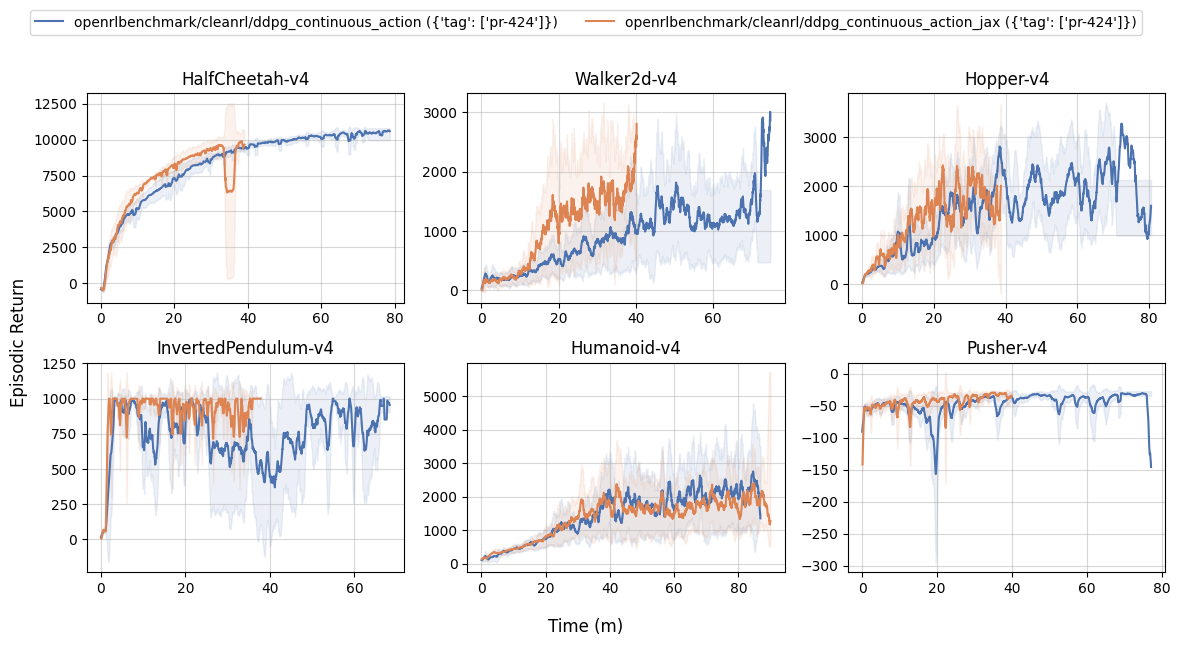

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ddpg_continuous_action?tag=pr-424' \

+ 'ddpg_continuous_action_jax?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ddpg_jax \

+ --scan-history

diff --git a/benchmark/dqn.sh b/benchmark/dqn.sh

index 9a8d8e32e..dcd90446b 100644

--- a/benchmark/dqn.sh

+++ b/benchmark/dqn.sh

@@ -1,29 +1,29 @@

poetry install

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/dqn.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/dqn.py --no_cuda --track --capture_video" \

--num-seeds 3 \

--workers 9

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/dqn_atari.py --track --capture-video" \

+ --command "poetry run python cleanrl/dqn_atari.py --track --capture_video" \

--num-seeds 3 \

--workers 1

poetry install -E jax

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/dqn_jax.py --track --capture-video" \

+ --command "poetry run python cleanrl/dqn_jax.py --track --capture_video" \

--num-seeds 3 \

--workers 1

poetry install -E "atari jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/dqn_atari_jax.py --track --capture-video" \

+ --command "poetry run python cleanrl/dqn_atari_jax.py --track --capture_video" \

--num-seeds 3 \

--workers 1

diff --git a/benchmark/ppg.sh b/benchmark/ppg.sh

index 20fde68cf..ee5580f33 100644

--- a/benchmark/ppg.sh

+++ b/benchmark/ppg.sh

@@ -3,6 +3,6 @@

poetry install -E procgen

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids starpilot bossfight bigfish \

- --command "poetry run python cleanrl/ppg_procgen.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppg_procgen.py --track --capture_video" \

--num-seeds 3 \

--workers 1

diff --git a/benchmark/ppo.sh b/benchmark/ppo.sh

index 7fefcd933..70f374785 100644

--- a/benchmark/ppo.sh

+++ b/benchmark/ppo.sh

@@ -3,118 +3,143 @@

poetry install

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/ppo.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/ppo.py --no_cuda --track --capture_video" \

--num-seeds 3 \

- --workers 9

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/ppo_atari.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_atari.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E mujoco

+OMP_NUM_THREADS=1 xvfb-run -a python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/ppo_continuous_action.py --no_cuda --track --capture_video" \

+ --num-seeds 3 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E "mujoco dm_control"

+OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+ --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

+ --command "poetry run python cleanrl/ppo_continuous_action.py --exp-name ppo_continuous_action_8M --total-timesteps 8000000 --no_cuda --track" \

+ --num-seeds 10 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/ppo_atari_lstm.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_atari_lstm.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E envpool

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+poetry run python -m cleanrl_utils.benchmark \

--env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

- --command "poetry run python cleanrl/ppo_atari_envpool.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_atari_envpool.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install -E "mujoco_py mujoco"

-poetry run python -c "import mujoco_py"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 InvertedPendulum-v2 Humanoid-v2 Pusher-v2 \

- --command "poetry run python cleanrl/ppo_continuous_action.py --cuda False --track --capture-video" \

+poetry install -E "envpool jax"

+poetry run python -m cleanrl_utils.benchmark \

+ --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

+ --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

+ --num-seeds 3 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E "envpool jax"

+python -m cleanrl_utils.benchmark \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --command "poetry run python cleanrl/ppo_atari_envpool_xla_jax_scan.py --track --capture_video" \

--num-seeds 3 \

- --workers 6

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E procgen

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+poetry run python -m cleanrl_utils.benchmark \

--env-ids starpilot bossfight bigfish \

- --command "poetry run python cleanrl/ppo_procgen.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_procgen.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run torchrun --standalone --nnodes=1 --nproc_per_node=2 cleanrl/ppo_atari_multigpu.py --track --capture-video" \

+ --command "poetry run torchrun --standalone --nnodes=1 --nproc_per_node=2 cleanrl/ppo_atari_multigpu.py --local-num-envs 4 --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install "pettingzoo atari"

+poetry install -E "pettingzoo atari"

poetry run AutoROM --accept-license

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids pong_v3 surround_v2 tennis_v3 \

- --command "poetry run python cleanrl/ppo_pettingzoo_ma_atari.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_pettingzoo_ma_atari.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

# IMPORTANT: see specific Isaac Gym installation at

# https://docs.cleanrl.dev/rl-algorithms/ppo/#usage_8

poetry install --with isaacgym

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids Cartpole Ant Humanoid BallBalance Anymal \

- --command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture-video" \

+ --command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids AllegroHand ShadowHand \

- --command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture-video --num-envs 8192 --num-steps 8 --update-epochs 5 --num-minibatches 4 --reward-scaler 0.01 --total-timesteps 600000000 --record-video-step-frequency 3660" \

+ --command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture_video --num-envs 8192 --num-steps 8 --update-epochs 5 --num-minibatches 4 --reward-scaler 0.01 --total-timesteps 600000000 --record-video-step-frequency 3660" \

--num-seeds 3 \

- --workers 1

-

-

-poetry install "envpool jax"

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-

-# gymnasium support

-poetry install -E mujoco

-OMP_NUM_THREADS=1 xvfb-run -a python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

- --command "poetry run python cleanrl/gymnasium_support/ppo_continuous_action.py --cuda False --track" \

- --num-seeds 3 \

- --workers 1

-

-poetry install "dm_control mujoco"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

- --command "poetry run python cleanrl/gymnasium_support/ppo_continuous_action.py --cuda False --track" \

- --num-seeds 3 \

- --workers 9

-

-poetry install "envpool jax"

-python -m cleanrl_utils.benchmark \

- --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

- --command "poetry run python cleanrl/ppo_atari_envpool_xla_jax_scan.py --track --capture-video" \

- --num-seeds 3 \

- --workers 1

-

-poetry install "mujoco dm_control"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

- --command "poetry run python cleanrl/ppo_continuous_action.py --exp-name ppo_continuous_action_8M --total-timesteps 8000000 --cuda False --track" \

- --num-seeds 10 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

diff --git a/benchmark/ppo_plot.sh b/benchmark/ppo_plot.sh

new file mode 100644

index 000000000..95678d986

--- /dev/null

+++ b/benchmark/ppo_plot.sh

@@ -0,0 +1,117 @@

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo?tag=pr-424' \

+ --env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo \

+ --scan-history

+

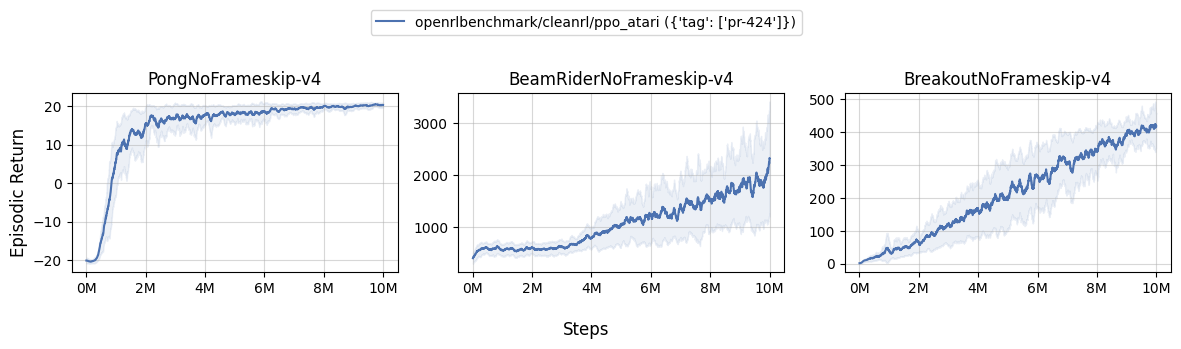

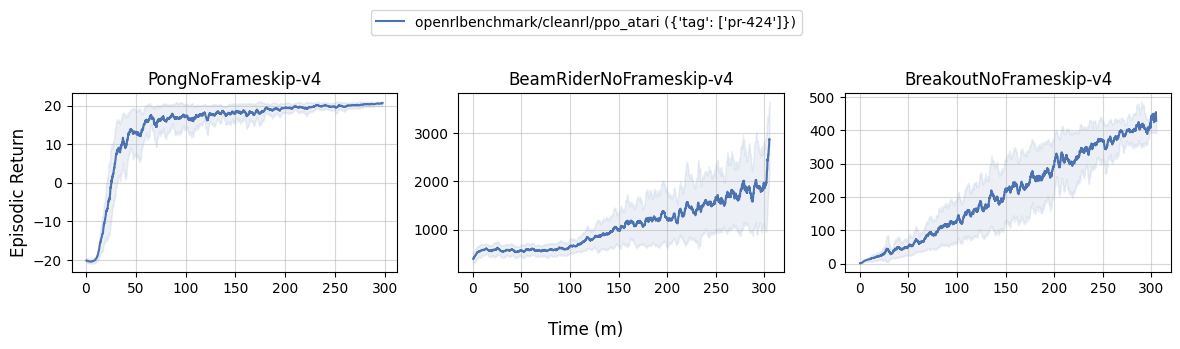

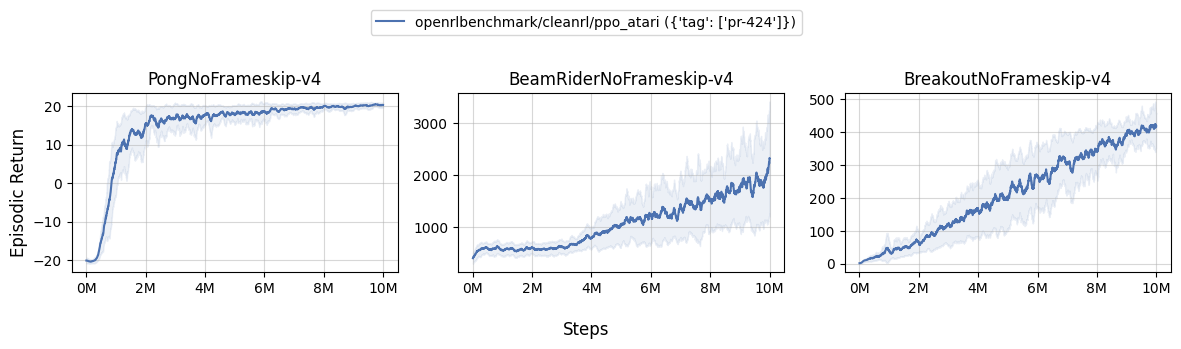

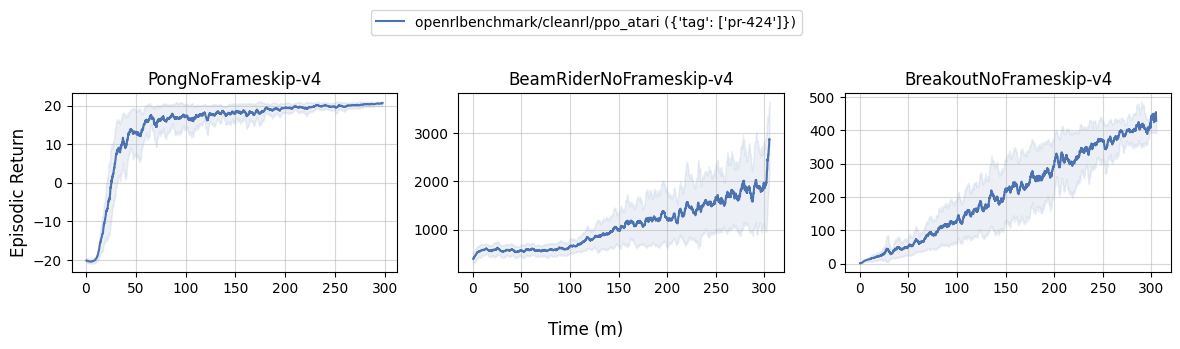

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari \

+ --scan-history

+

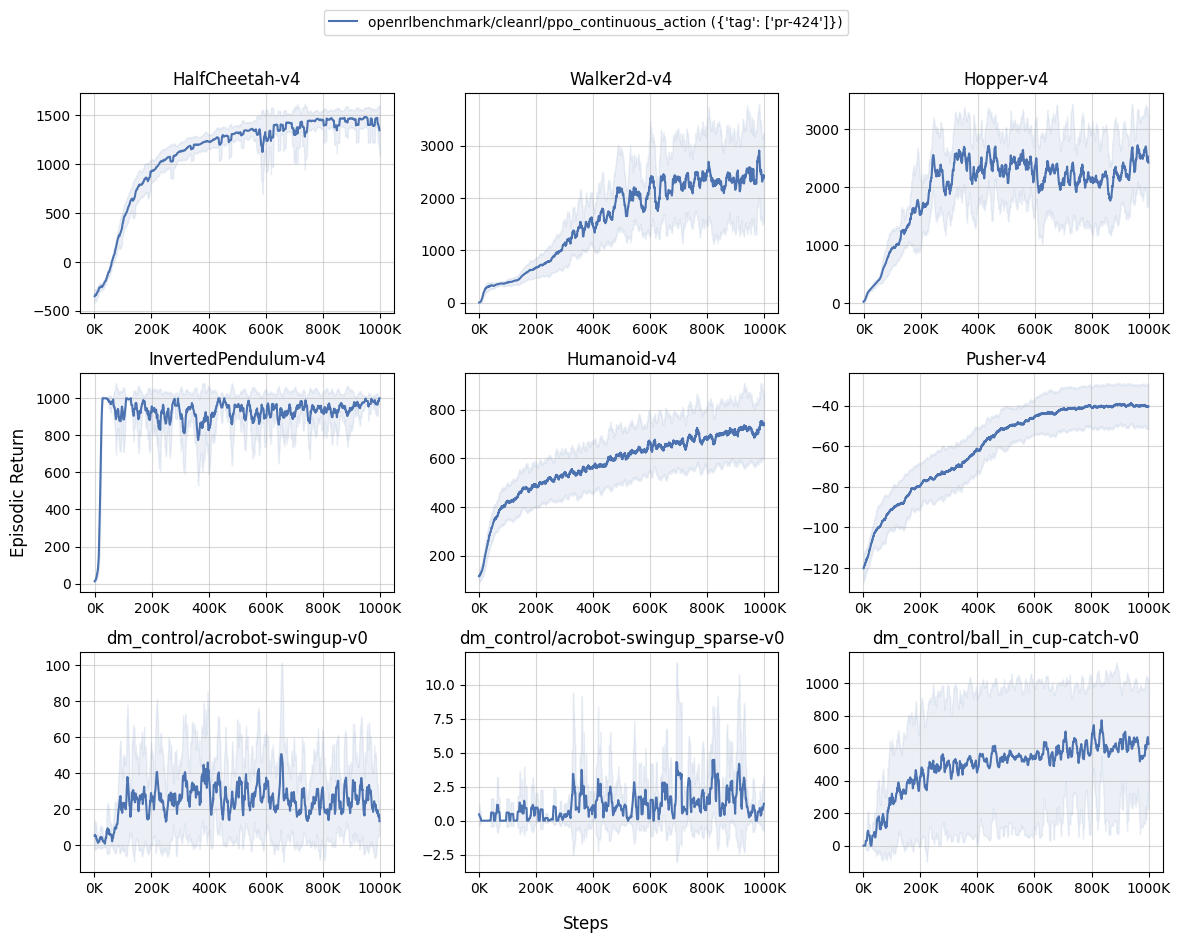

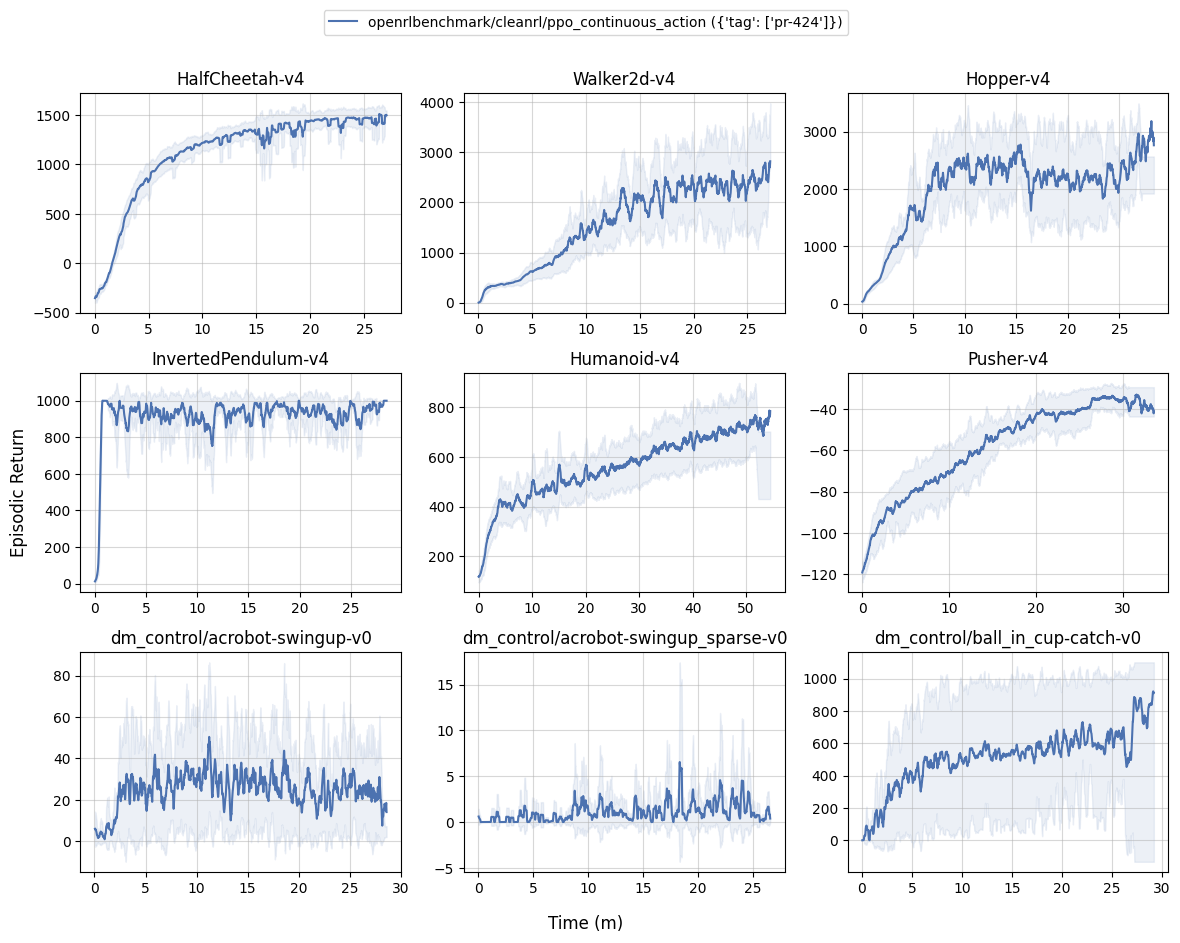

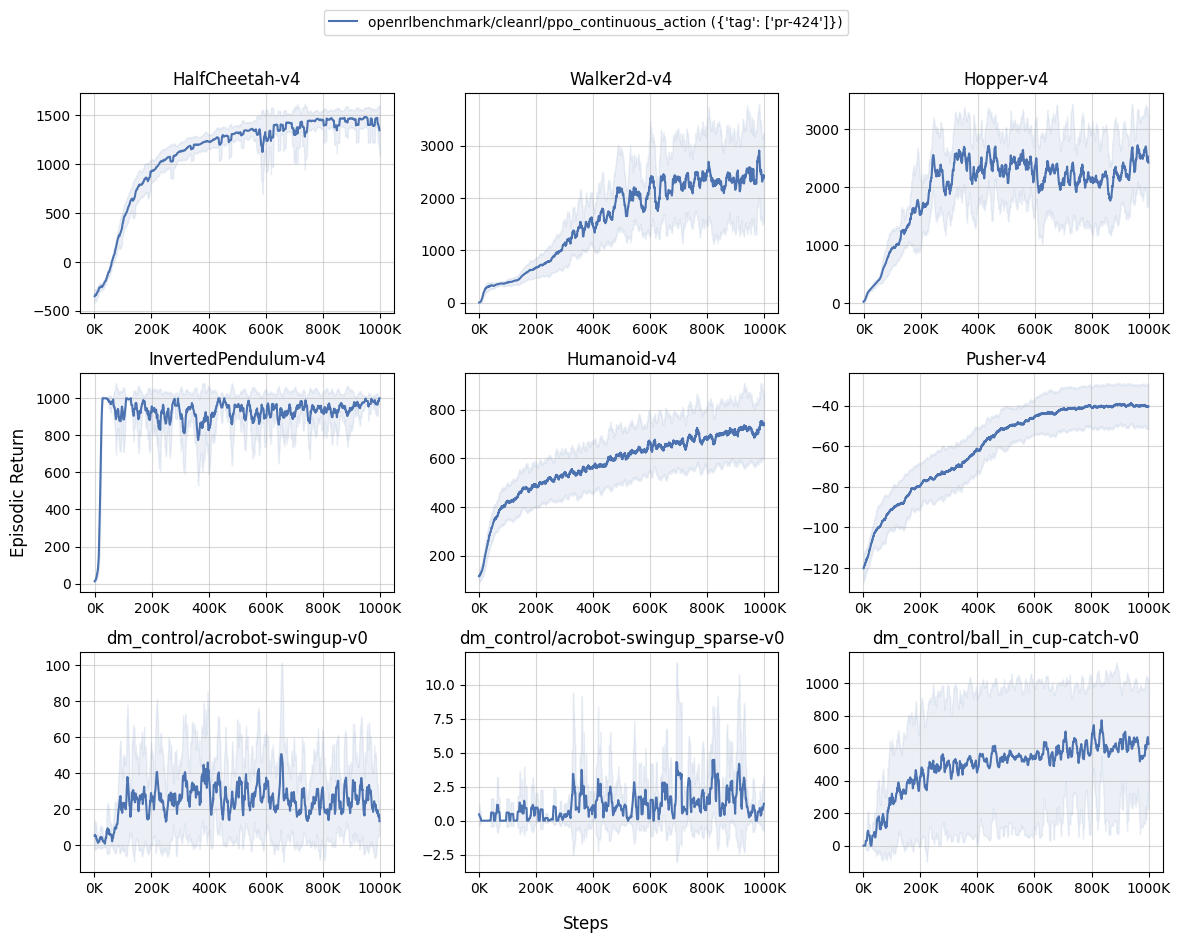

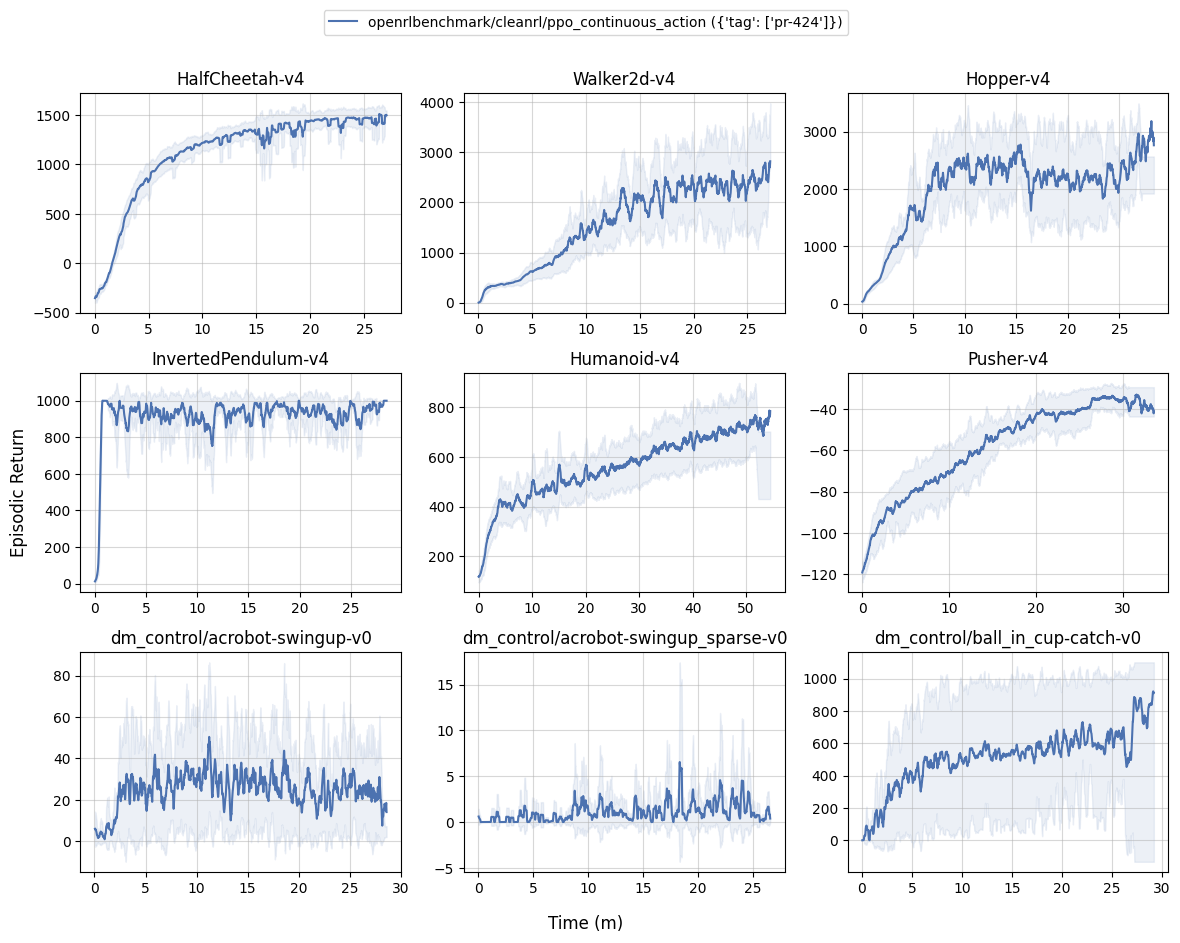

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_continuous_action?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_continuous_action \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_continuous_action?tag=v1.0.0-13-gcbd83f6' \

+ --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_continuous_action_dm_control \

+ --scan-history

+

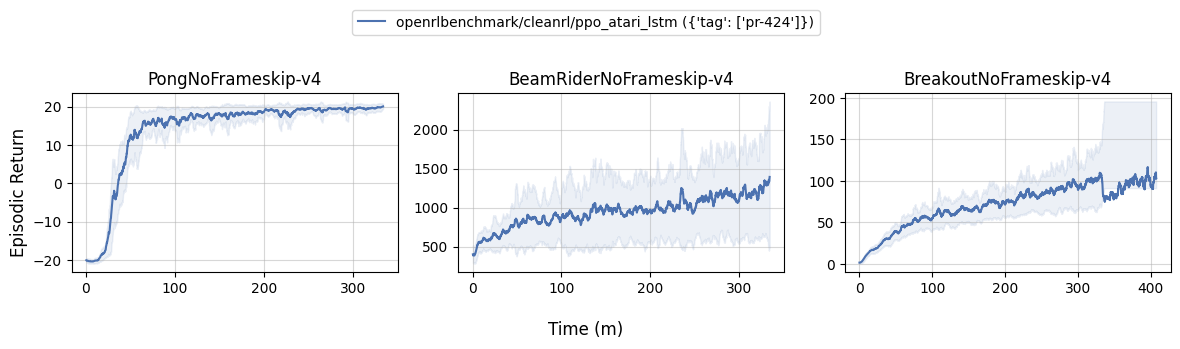

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari_lstm?tag=pr-424' \

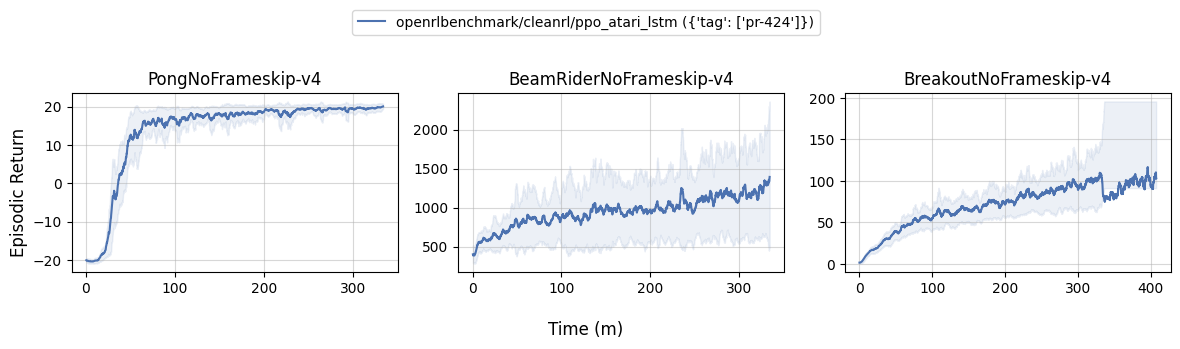

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_lstm \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool?tag=pr-424' \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool \

+ --scan-history

+

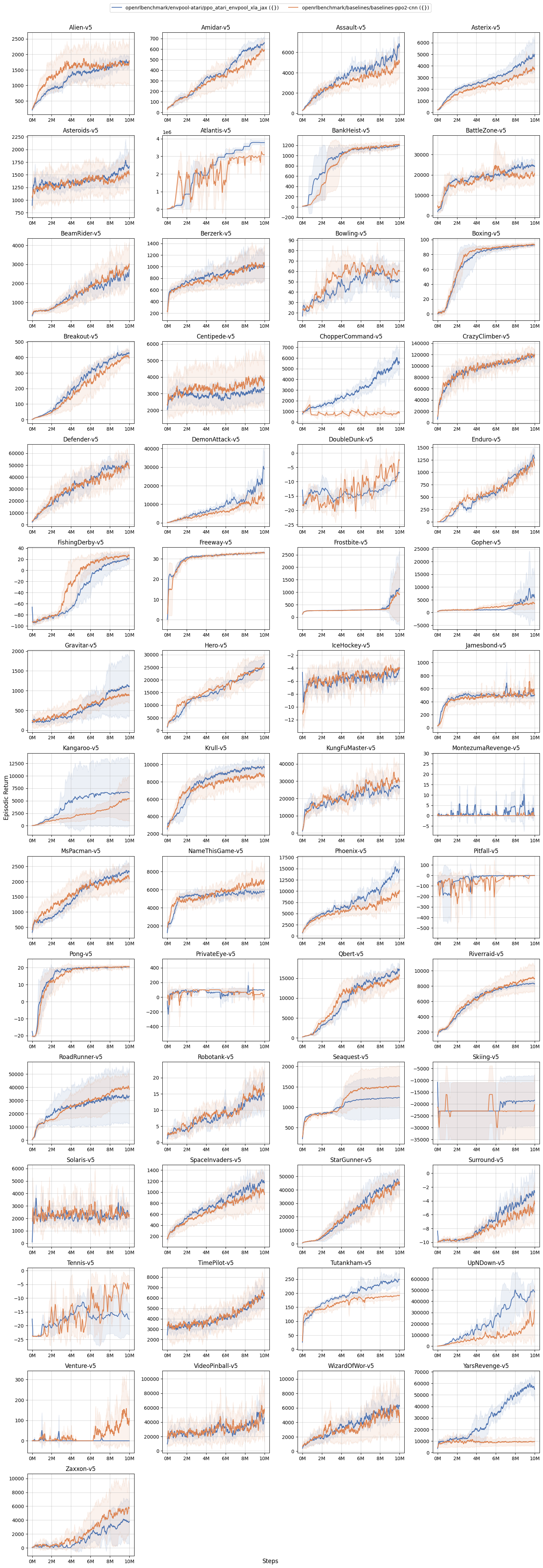

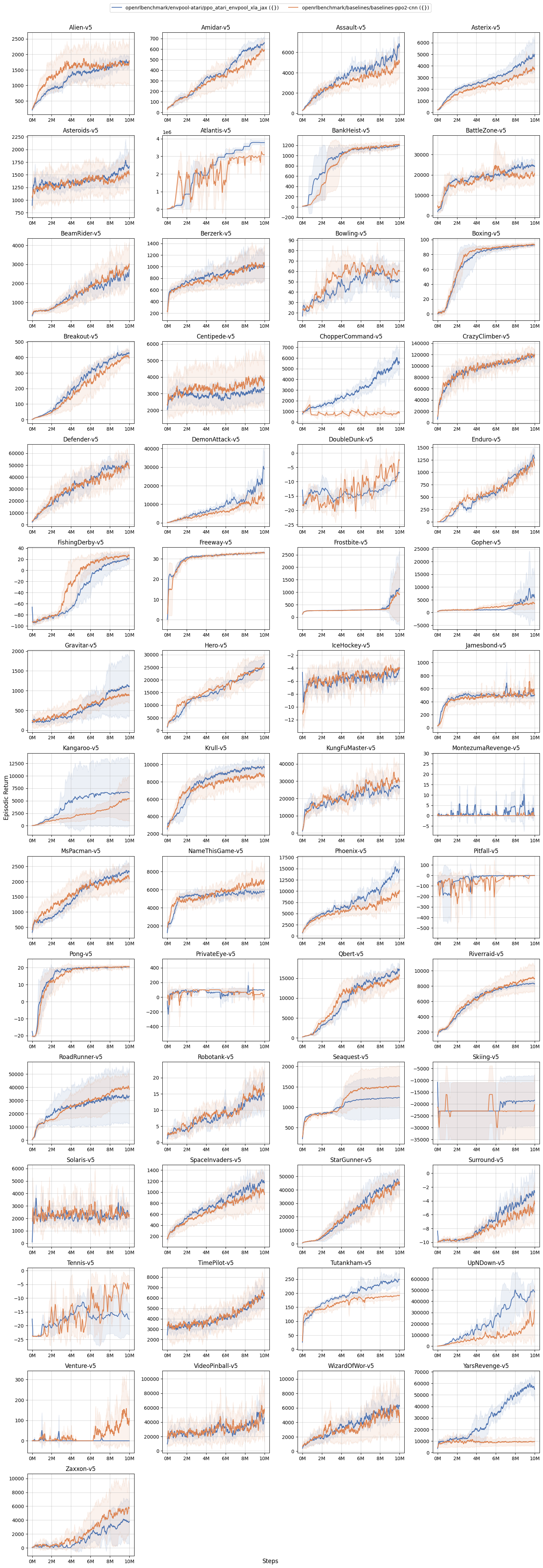

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=envpool-atari&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool_xla_jax' \

+ --filters '?we=openrlbenchmark&wpn=baselines&ceik=env&cen=exp_name&metric=charts/episodic_return' \

+ 'baselines-ppo2-cnn' \

+ --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

+ --env-ids AlienNoFrameskip-v4 AmidarNoFrameskip-v4 AssaultNoFrameskip-v4 AsterixNoFrameskip-v4 AsteroidsNoFrameskip-v4 AtlantisNoFrameskip-v4 BankHeistNoFrameskip-v4 BattleZoneNoFrameskip-v4 BeamRiderNoFrameskip-v4 BerzerkNoFrameskip-v4 BowlingNoFrameskip-v4 BoxingNoFrameskip-v4 BreakoutNoFrameskip-v4 CentipedeNoFrameskip-v4 ChopperCommandNoFrameskip-v4 CrazyClimberNoFrameskip-v4 DefenderNoFrameskip-v4 DemonAttackNoFrameskip-v4 DoubleDunkNoFrameskip-v4 EnduroNoFrameskip-v4 FishingDerbyNoFrameskip-v4 FreewayNoFrameskip-v4 FrostbiteNoFrameskip-v4 GopherNoFrameskip-v4 GravitarNoFrameskip-v4 HeroNoFrameskip-v4 IceHockeyNoFrameskip-v4 JamesbondNoFrameskip-v4 KangarooNoFrameskip-v4 KrullNoFrameskip-v4 KungFuMasterNoFrameskip-v4 MontezumaRevengeNoFrameskip-v4 MsPacmanNoFrameskip-v4 NameThisGameNoFrameskip-v4 PhoenixNoFrameskip-v4 PitfallNoFrameskip-v4 PongNoFrameskip-v4 PrivateEyeNoFrameskip-v4 QbertNoFrameskip-v4 RiverraidNoFrameskip-v4 RoadRunnerNoFrameskip-v4 RobotankNoFrameskip-v4 SeaquestNoFrameskip-v4 SkiingNoFrameskip-v4 SolarisNoFrameskip-v4 SpaceInvadersNoFrameskip-v4 StarGunnerNoFrameskip-v4 SurroundNoFrameskip-v4 TennisNoFrameskip-v4 TimePilotNoFrameskip-v4 TutankhamNoFrameskip-v4 UpNDownNoFrameskip-v4 VentureNoFrameskip-v4 VideoPinballNoFrameskip-v4 WizardOfWorNoFrameskip-v4 YarsRevengeNoFrameskip-v4 ZaxxonNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 4 \

+ --pc.ncols-legend 2 \

+ --rliable \

+ --rc.score_normalization_method atari \

+ --rc.normalized_score_threshold 8.0 \

+ --rc.sample_efficiency_plots \

+ --rc.sample_efficiency_and_walltime_efficiency_method Median \

+ --rc.performance_profile_plots \

+ --rc.aggregate_metrics_plots \

+ --rc.sample_efficiency_num_bootstrap_reps 50000 \

+ --rc.performance_profile_num_bootstrap_reps 50000 \

+ --rc.interval_estimates_num_bootstrap_reps 50000 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool_xla_jax \

+ --scan-history

+

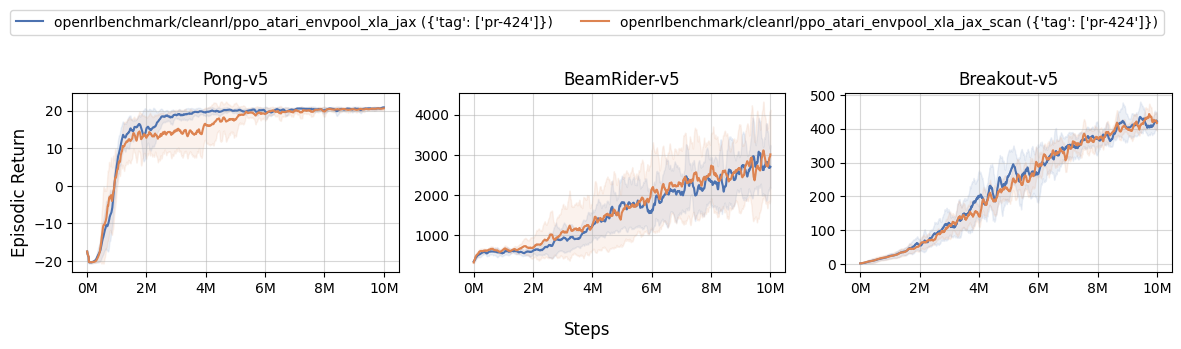

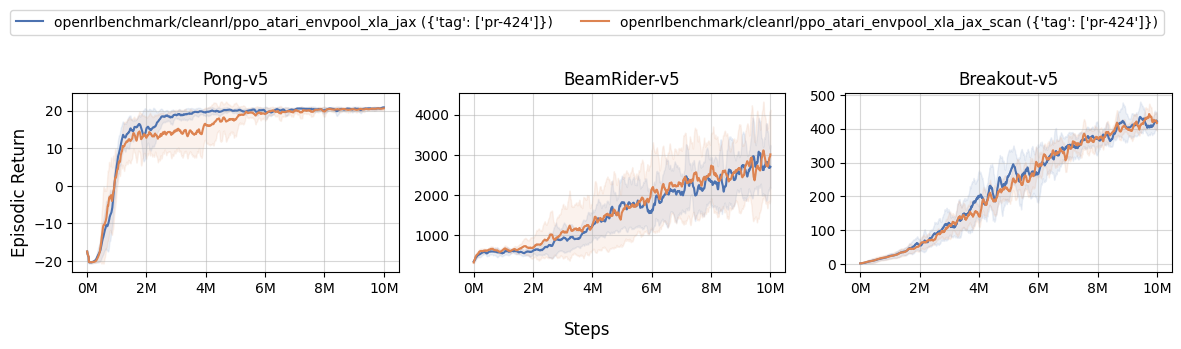

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool_xla_jax?tag=pr-424' \

+ 'ppo_atari_envpool_xla_jax_scan?tag=pr-424' \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool_xla_jax_scan \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_procgen?tag=pr-424' \

+ --env-ids starpilot bossfight bigfish \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_procgen \

+ --scan-history

+

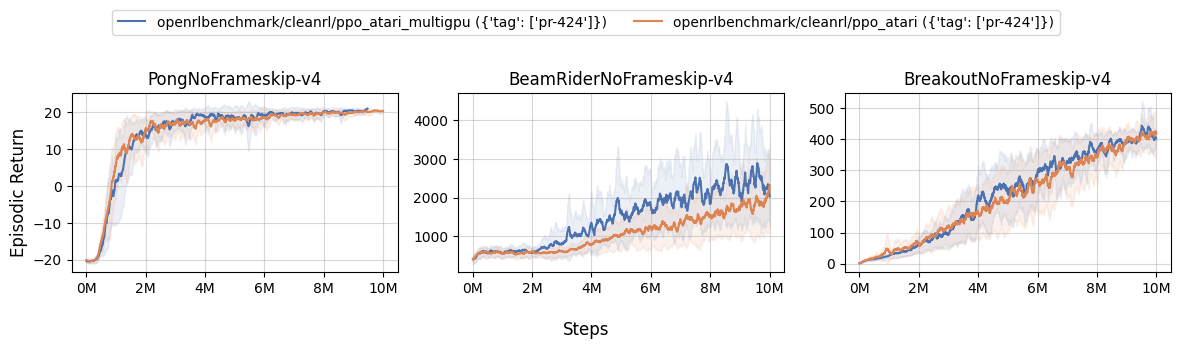

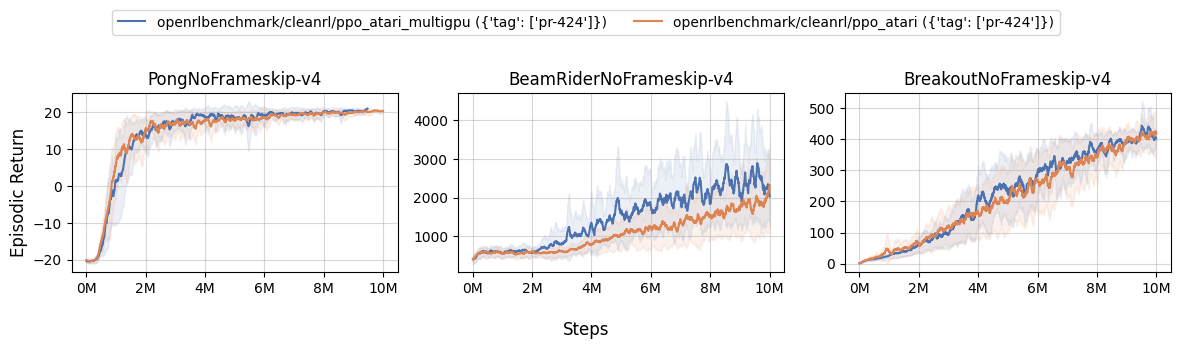

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari_multigpu?tag=pr-424' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_multigpu \

+ --scan-history

diff --git a/benchmark/qdagger.sh b/benchmark/qdagger.sh

index 2491716a0..dc7851fb3 100644

--- a/benchmark/qdagger.sh

+++ b/benchmark/qdagger.sh

@@ -1,15 +1,15 @@

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/qdagger_dqn_atari_impalacnn.py --track --capture-video" \

+ --command "poetry run python cleanrl/qdagger_dqn_atari_impalacnn.py --track --capture_video" \

--num-seeds 3 \

--workers 1

poetry install -E "atari jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/qdagger_dqn_atari_jax_impalacnn.py --track --capture-video" \

+ --command "poetry run python cleanrl/qdagger_dqn_atari_jax_impalacnn.py --track --capture_video" \

--num-seeds 3 \

--workers 1

diff --git a/benchmark/rpo.sh b/benchmark/rpo.sh

index cbb551bac..d389197fa 100644

--- a/benchmark/rpo.sh

+++ b/benchmark/rpo.sh

@@ -1,42 +1,42 @@

poetry install "mujoco dm_control"

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --cuda False --track" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --no_cuda --track" \

--num-seeds 10 \

--workers 1

poetry run pip install box2d-py==2.3.5

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids Pendulum-v1 BipedalWalker-v3 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --no_cuda --track --capture_video" \

--num-seeds 1 \

--workers 1

poetry install -E mujoco

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids HumanoidStandup-v4 Humanoid-v4 InvertedPendulum-v4 Walker2d-v4 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --no_cuda --track --capture_video" \

--num-seeds 10 \

--workers 1

poetry install -E mujoco

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids HumanoidStandup-v2 Humanoid-v2 InvertedPendulum-v2 Walker2d-v2 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --no_cuda --track --capture_video" \

--num-seeds 10 \

--workers 1

poetry install -E mujoco

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids Ant-v4 InvertedDoublePendulum-v4 Reacher-v4 Pusher-v4 Hopper-v4 HalfCheetah-v4 Swimmer-v4 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --rpo-alpha 0.01 --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --rpo-alpha 0.01 --no_cuda --track --capture_video" \

--num-seeds 10 \

--workers 1

poetry install -E mujoco

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids Ant-v2 InvertedDoublePendulum-v2 Reacher-v2 Pusher-v2 Hopper-v2 HalfCheetah-v2 Swimmer-v2 \

- --command "poetry run python cleanrl/rpo_continuous_action.py --rpo-alpha 0.01 --cuda False --track --capture-video" \

+ --command "poetry run python cleanrl/rpo_continuous_action.py --rpo-alpha 0.01 --no_cuda --track --capture_video" \

--num-seeds 10 \

--workers 1
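Review note on the flag changes in the benchmark scripts above: `--cuda False` becomes `--no_cuda`, so the boolean is now switched off by a negated flag rather than by passing a string value. The sketch below shows the same idea using only the standard library's `argparse.BooleanOptionalAction` (Python 3.9+). It is an analogy, not the mechanism the scripts use (they call `tyro.cli`), and `argparse` spells the negated flag `--no-cuda` rather than `--no_cuda`.

```python
import argparse

# Illustrative stand-in only: the scripts in this PR parse arguments with tyro.
parser = argparse.ArgumentParser()
# BooleanOptionalAction registers both --cuda and --no-cuda for one destination.
parser.add_argument("--cuda", action=argparse.BooleanOptionalAction, default=True)
# A flag that defaults to False only needs the positive form.
parser.add_argument("--track", action="store_true")


def parse(argv):
    """Parse an explicit argument list instead of sys.argv."""
    return parser.parse_args(argv)


if __name__ == "__main__":
    print(parse([]))                        # defaults: cuda on, track off
    print(parse(["--no-cuda", "--track"]))  # cuda off, track on
```

With this style a default-true option such as `cuda` never needs a value on the command line, which is why the updated commands drop the trailing `False`.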

diff --git a/benchmark/sac.sh b/benchmark/sac.sh

index e94e11192..2c948bc93 100644

--- a/benchmark/sac.sh

+++ b/benchmark/sac.sh

@@ -1,7 +1,10 @@

-poetry install -E mujoco_py

-poetry run python -c "import mujoco_py"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 \

- --command "poetry run python cleanrl/sac_continuous_action.py --track --capture-video" \

+poetry install -E mujoco

+poetry run python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/sac_continuous_action.py --track" \

--num-seeds 3 \

- --workers 3

\ No newline at end of file

+ --workers 18 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

diff --git a/benchmark/sac_atari.sh b/benchmark/sac_atari.sh

index 13f9e3c9d..a8e8a78ed 100755

--- a/benchmark/sac_atari.sh

+++ b/benchmark/sac_atari.sh

@@ -1,6 +1,6 @@

poetry install -E atari

OMP_NUM_THREADS=1 python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BreakoutNoFrameskip-v4 BeamRiderNoFrameskip-v4 \

- --command "poetry run python cleanrl/sac_atari.py --cuda True --track" \

+ --command "poetry run python cleanrl/sac_atari.py --track" \

--num-seeds 3 \

--workers 2

diff --git a/benchmark/sac_plot.sh b/benchmark/sac_plot.sh

new file mode 100644

index 000000000..7d82406fa

--- /dev/null

+++ b/benchmark/sac_plot.sh

@@ -0,0 +1,9 @@

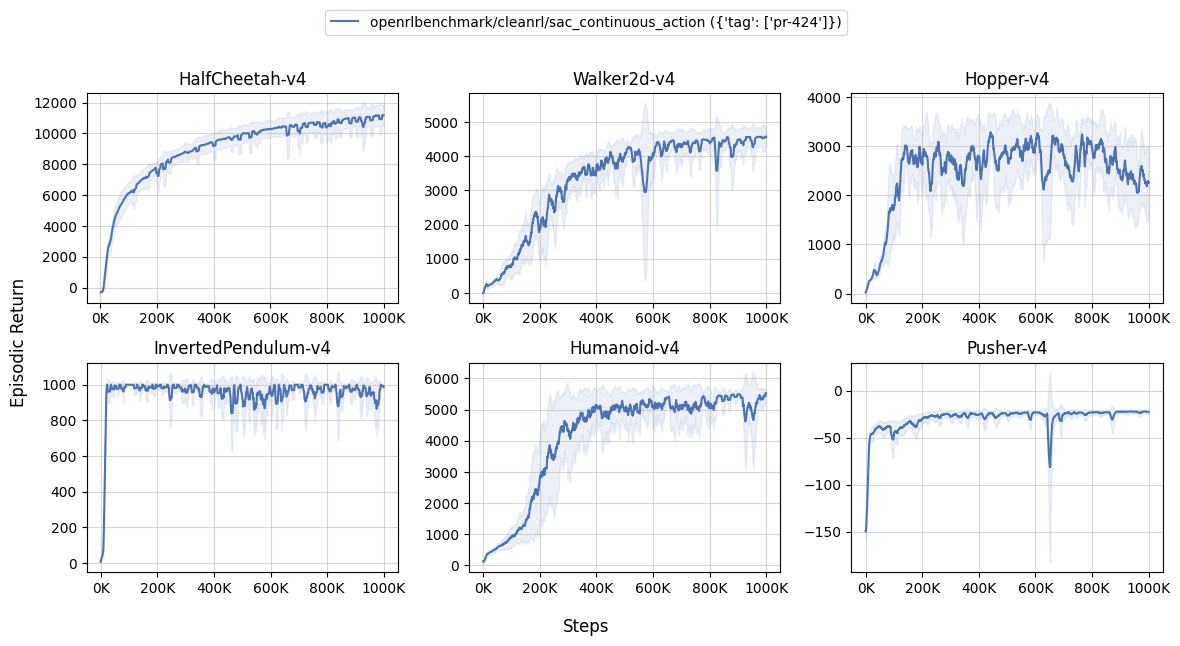

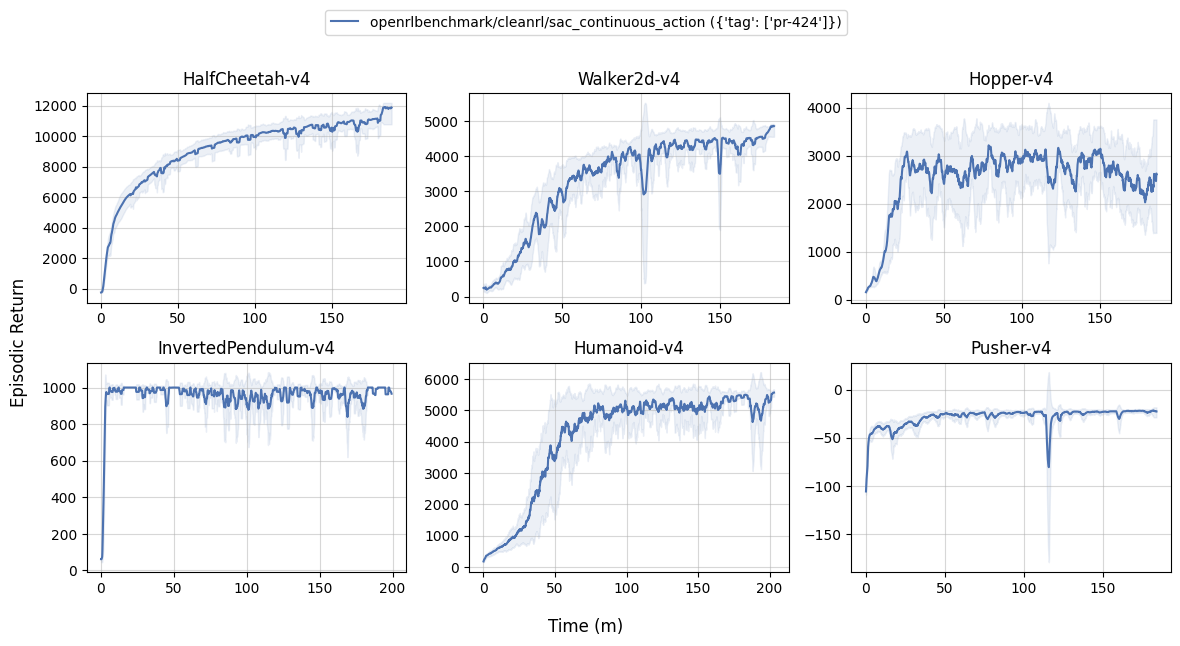

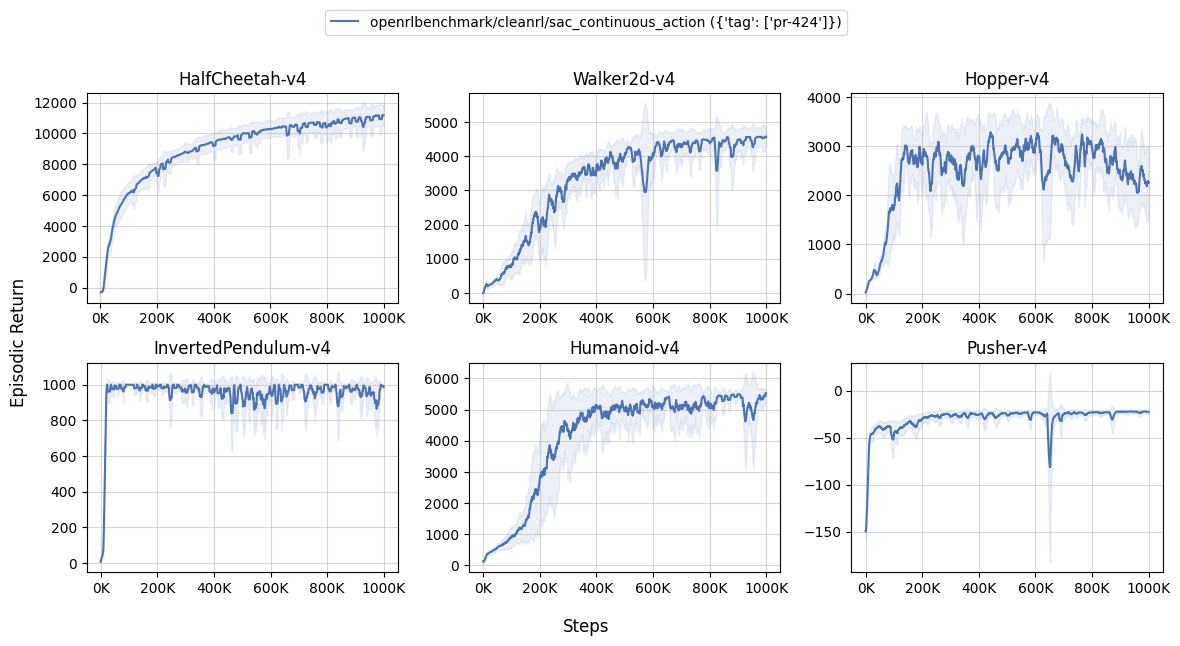

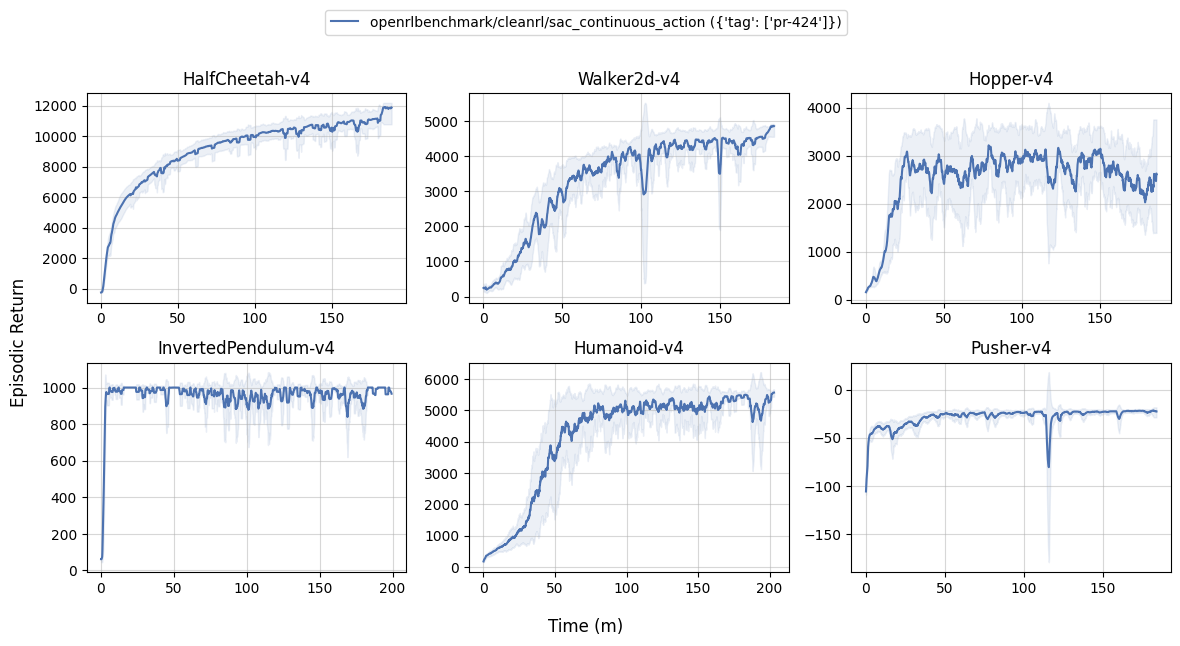

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'sac_continuous_action?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/sac \

+ --scan-history

diff --git a/benchmark/td3.sh b/benchmark/td3.sh

index ea94c2c32..e68004c73 100644

--- a/benchmark/td3.sh

+++ b/benchmark/td3.sh

@@ -1,16 +1,22 @@

-poetry install -E mujoco_py

-python -c "import mujoco_py"

-OMP_NUM_THREADS=1 xvfb-run -a python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 InvertedPendulum-v2 Humanoid-v2 Pusher-v2 \

- --command "poetry run python cleanrl/td3_continuous_action.py --track --capture-video" \

+poetry install -E "mujoco"

+python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/td3_continuous_action.py --track" \

--num-seeds 3 \

- --workers 1

+ --workers 18 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install -E "mujoco_py jax"

-poetry run pip install --upgrade "jax[cuda]==0.3.17" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

-poetry run python -c "import mujoco_py"

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 \

- --command "poetry run python cleanrl/td3_continuous_action_jax.py --track --capture-video" \

+poetry install -E "mujoco jax"

+poetry run pip install --upgrade "jax[cuda11_cudnn82]==0.4.8" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

+poetry run python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/td3_continuous_action_jax.py --track" \

--num-seeds 3 \

- --workers 1

+ --workers 18 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

diff --git a/benchmark/td3_plot.sh b/benchmark/td3_plot.sh

new file mode 100644

index 000000000..ad37305cc

--- /dev/null

+++ b/benchmark/td3_plot.sh

@@ -0,0 +1,21 @@

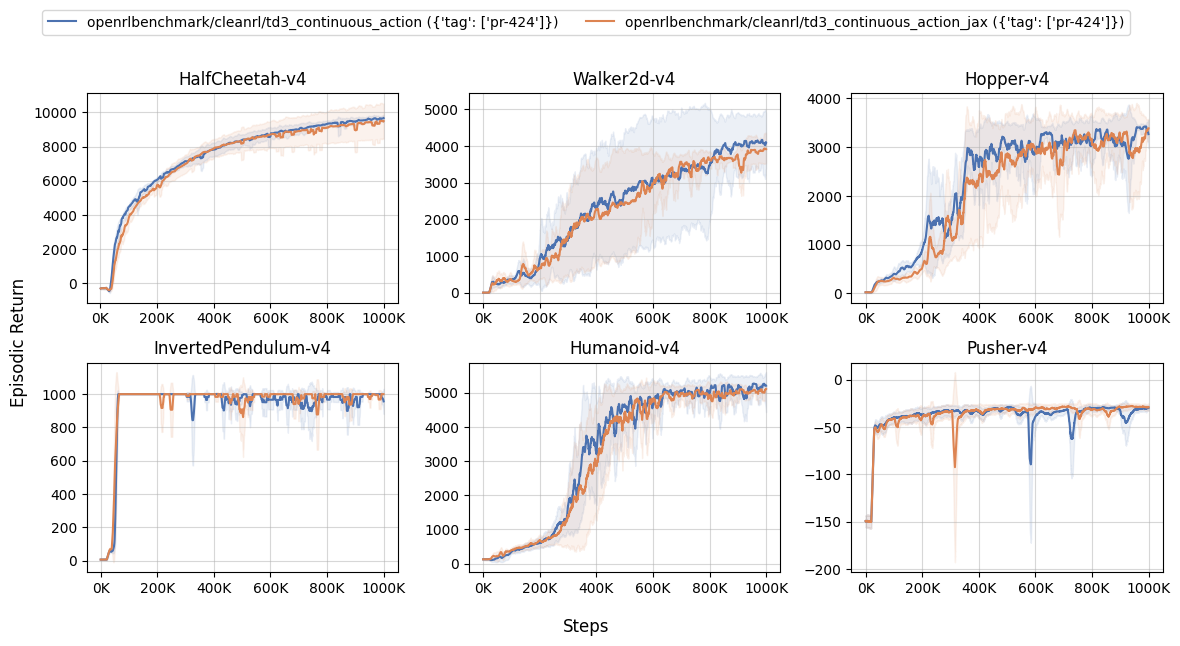

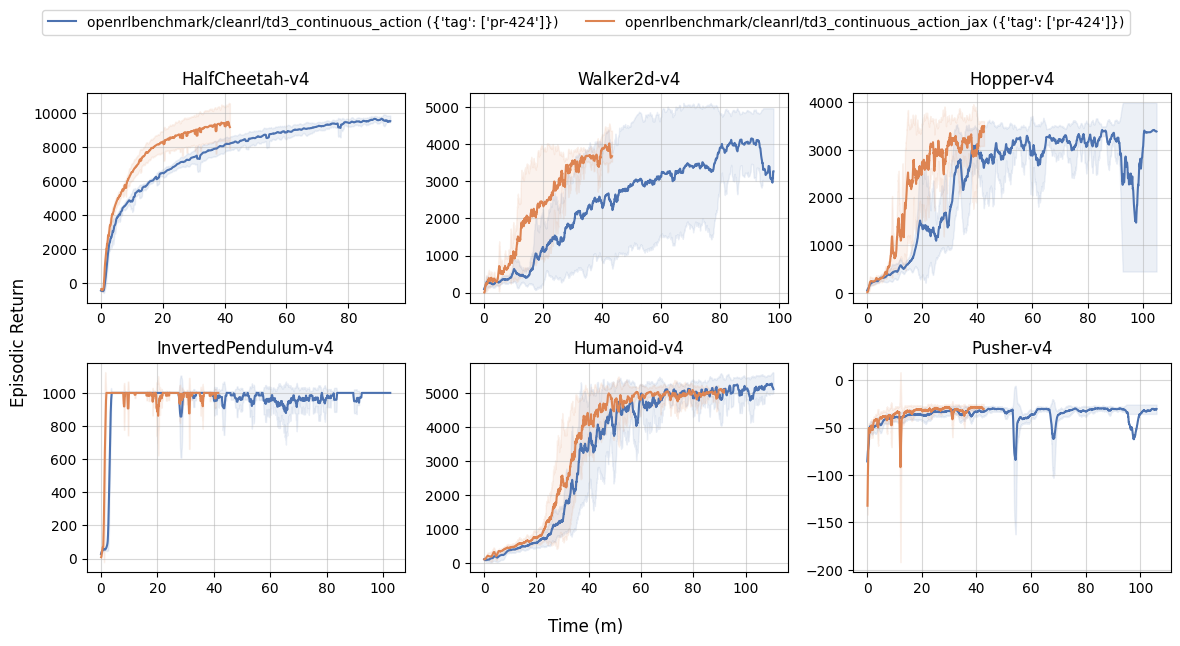

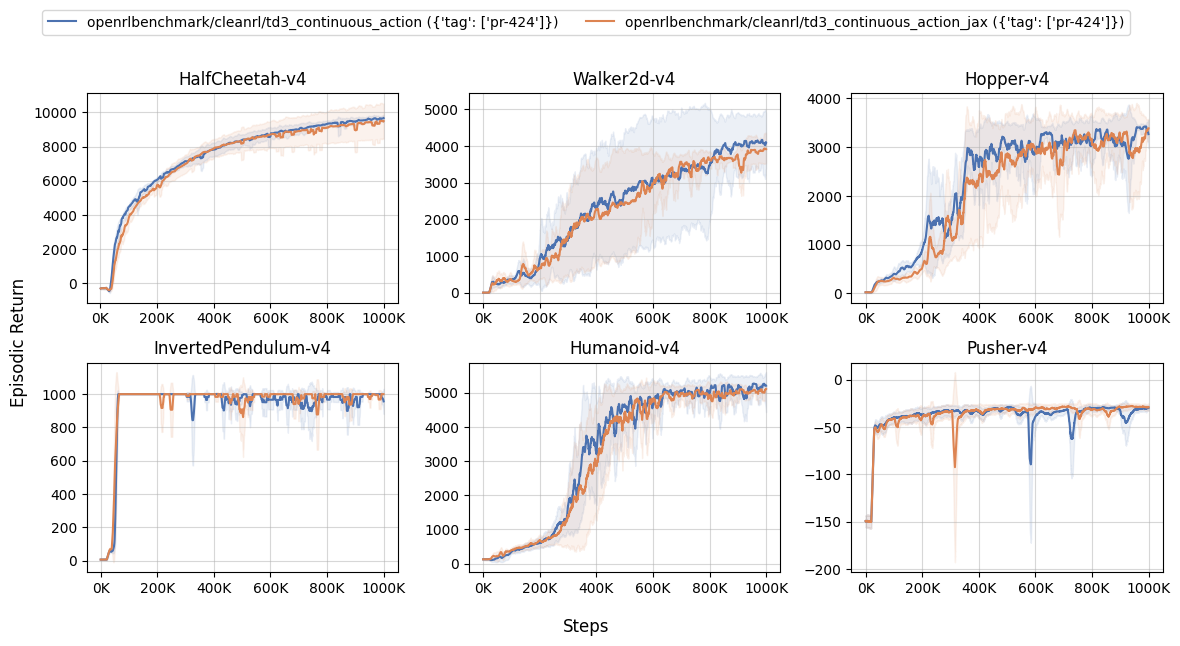

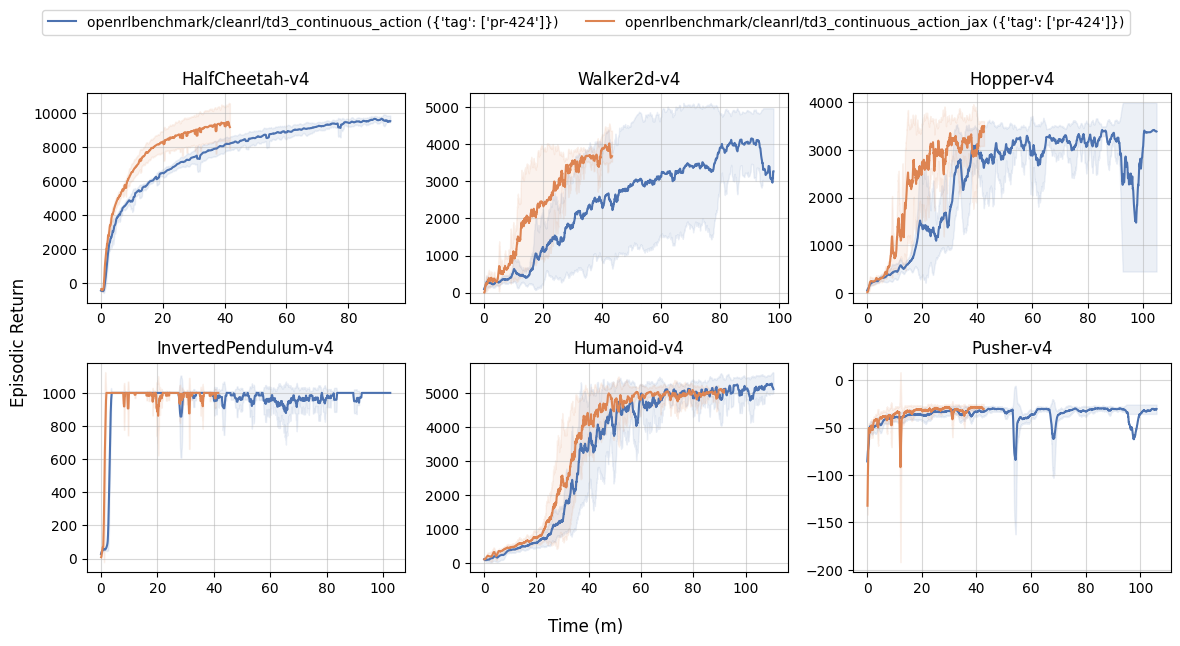

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'td3_continuous_action?tag=pr-424' \

+ 'td3_continuous_action_jax?tag=pr-424' \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/td3 \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'sac_continuous_action?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/sac \

+ --scan-history

diff --git a/benchmark/zoo.sh b/benchmark/zoo.sh

index f7646c5d5..a5ab38e14 100644

--- a/benchmark/zoo.sh

+++ b/benchmark/zoo.sh

@@ -3,25 +3,25 @@ poetry run python cleanrl/dqn_atari_jax.py --env-id SeaquestNoFrameskip-v4 --sa

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/dqn.py --cuda False --track --capture-video --save-model --upload-model --hf-entity cleanrl" \

+ --command "poetry run python cleanrl/dqn.py --no_cuda --track --capture_video --save-model --upload-model --hf-entity cleanrl" \

--num-seeds 1 \

--workers 1

CUDA_VISIBLE_DEVICES="-1" xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

- --command "poetry run python cleanrl/dqn_jax.py --track --capture-video --save-model --upload-model --hf-entity cleanrl" \

+ --command "poetry run python cleanrl/dqn_jax.py --track --capture_video --save-model --upload-model --hf-entity cleanrl" \

--num-seeds 1 \

--workers 1

xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/dqn_atari_jax.py --track --capture-video --save-model --upload-model --hf-entity cleanrl" \

+ --command "poetry run python cleanrl/dqn_atari_jax.py --track --capture_video --save-model --upload-model --hf-entity cleanrl" \

--num-seeds 1 \

--workers 1

xvfb-run -a python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run python cleanrl/dqn_atari.py --track --capture-video --save-model --upload-model --hf-entity cleanrl" \

+ --command "poetry run python cleanrl/dqn_atari.py --track --capture_video --save-model --upload-model --hf-entity cleanrl" \

--num-seeds 1 \

--workers 1

diff --git a/cleanrl/c51.py b/cleanrl/c51.py

index 3959466f1..9f99a7a31 100755

--- a/cleanrl/c51.py

+++ b/cleanrl/c51.py

@@ -1,83 +1,77 @@

# docs and experiment results can be found at https://docs.cleanrl.dev/rl-algorithms/c51/#c51py

-import argparse

import os

import random

import time

-from distutils.util import strtobool

+from dataclasses import dataclass

import gymnasium as gym

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

+import tyro

from stable_baselines3.common.buffers import ReplayBuffer

from torch.utils.tensorboard import SummaryWriter

-def parse_args():

- # fmt: off

- parser = argparse.ArgumentParser()

- parser.add_argument("--exp-name", type=str, default=os.path.basename(__file__).rstrip(".py"),

- help="the name of this experiment")

- parser.add_argument("--seed", type=int, default=1,

- help="seed of the experiment")

- parser.add_argument("--torch-deterministic", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

- help="if toggled, `torch.backends.cudnn.deterministic=False`")

- parser.add_argument("--cuda", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

- help="if toggled, cuda will be enabled by default")

- parser.add_argument("--track", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="if toggled, this experiment will be tracked with Weights and Biases")

- parser.add_argument("--wandb-project-name", type=str, default="cleanRL",

- help="the wandb's project name")

- parser.add_argument("--wandb-entity", type=str, default=None,

- help="the entity (team) of wandb's project")

- parser.add_argument("--capture-video", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to capture videos of the agent performances (check out `videos` folder)")

- parser.add_argument("--save-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to save model into the `runs/{run_name}` folder")

- parser.add_argument("--upload-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to upload the saved model to huggingface")

- parser.add_argument("--hf-entity", type=str, default="",

- help="the user or org name of the model repository from the Hugging Face Hub")

+@dataclass

+class Args:

+ exp_name: str = os.path.basename(__file__)[: -len(".py")]

+ """the name of this experiment"""

+ seed: int = 1

+ """seed of the experiment"""

+ torch_deterministic: bool = True

+ """if toggled, `torch.backends.cudnn.deterministic=False`"""

+ cuda: bool = True

+ """if toggled, cuda will be enabled by default"""

+ track: bool = False

+ """if toggled, this experiment will be tracked with Weights and Biases"""

+ wandb_project_name: str = "cleanRL"

+ """the wandb's project name"""

+ wandb_entity: str = None

+ """the entity (team) of wandb's project"""

+ capture_video: bool = False

+ """whether to capture videos of the agent performances (check out `videos` folder)"""

+ save_model: bool = False

+ """whether to save model into the `runs/{run_name}` folder"""

+ upload_model: bool = False

+ """whether to upload the saved model to huggingface"""

+ hf_entity: str = ""

+ """the user or org name of the model repository from the Hugging Face Hub"""

# Algorithm specific arguments

- parser.add_argument("--env-id", type=str, default="CartPole-v1",

- help="the id of the environment")

- parser.add_argument("--total-timesteps", type=int, default=500000,

- help="total timesteps of the experiments")

- parser.add_argument("--learning-rate", type=float, default=2.5e-4,

- help="the learning rate of the optimizer")

- parser.add_argument("--num-envs", type=int, default=1,

- help="the number of parallel game environments")

- parser.add_argument("--n-atoms", type=int, default=101,

- help="the number of atoms")

- parser.add_argument("--v-min", type=float, default=-100,

- help="the return lower bound")

- parser.add_argument("--v-max", type=float, default=100,

- help="the return upper bound")

- parser.add_argument("--buffer-size", type=int, default=10000,

- help="the replay memory buffer size")

- parser.add_argument("--gamma", type=float, default=0.99,

- help="the discount factor gamma")

- parser.add_argument("--target-network-frequency", type=int, default=500,

- help="the timesteps it takes to update the target network")

- parser.add_argument("--batch-size", type=int, default=128,

- help="the batch size of sample from the reply memory")

- parser.add_argument("--start-e", type=float, default=1,

- help="the starting epsilon for exploration")

- parser.add_argument("--end-e", type=float, default=0.05,

- help="the ending epsilon for exploration")

- parser.add_argument("--exploration-fraction", type=float, default=0.5,

- help="the fraction of `total-timesteps` it takes from start-e to go end-e")

- parser.add_argument("--learning-starts", type=int, default=10000,

- help="timestep to start learning")

- parser.add_argument("--train-frequency", type=int, default=10,

- help="the frequency of training")

- args = parser.parse_args()

- # fmt: on

- assert args.num_envs == 1, "vectorized envs are not supported at the moment"

-

- return args

+ env_id: str = "CartPole-v1"

+ """the id of the environment"""

+ total_timesteps: int = 500000

+ """total timesteps of the experiments"""

+ learning_rate: float = 2.5e-4

+ """the learning rate of the optimizer"""

+ num_envs: int = 1

+ """the number of parallel game environments"""

+ n_atoms: int = 101

+ """the number of atoms"""

+ v_min: float = -100

+ """the return lower bound"""

+ v_max: float = 100

+ """the return upper bound"""

+ buffer_size: int = 10000

+ """the replay memory buffer size"""

+ gamma: float = 0.99

+ """the discount factor gamma"""

+ target_network_frequency: int = 500

+ """the timesteps it takes to update the target network"""

+ batch_size: int = 128

+ """the batch size of sample from the reply memory"""

+ start_e: float = 1

+ """the starting epsilon for exploration"""

+ end_e: float = 0.05

+ """the ending epsilon for exploration"""

+ exploration_fraction: float = 0.5

+ """the fraction of `total-timesteps` it takes from start-e to go end-e"""

+ learning_starts: int = 10000

+ """timestep to start learning"""

+ train_frequency: int = 10

+ """the frequency of training"""

def make_env(env_id, seed, idx, capture_video, run_name):

@@ -136,7 +130,8 @@ def linear_schedule(start_e: float, end_e: float, duration: int, t: int):

poetry run pip install "stable_baselines3==2.0.0a1"

"""

)

- args = parse_args()

+ args = tyro.cli(Args)

+ assert args.num_envs == 1, "vectorized envs are not supported at the moment"

run_name = f"{args.env_id}__{args.exp_name}__{args.seed}__{int(time.time())}"

if args.track:

import wandb

@@ -201,14 +196,10 @@ def linear_schedule(start_e: float, end_e: float, duration: int, t: int):

# TRY NOT TO MODIFY: record rewards for plotting purposes

if "final_info" in infos:

for info in infos["final_info"]:

- # Skip the envs that are not done

- if "episode" not in info:

- continue

- print(f"global_step={global_step}, episodic_return={info['episode']['r']}")

- writer.add_scalar("charts/episodic_return", info["episode"]["r"], global_step)

- writer.add_scalar("charts/episodic_length", info["episode"]["l"], global_step)

- writer.add_scalar("charts/epsilon", epsilon, global_step)

- break

+ if info and "episode" in info:

+ print(f"global_step={global_step}, episodic_return={info['episode']['r']}")

+ writer.add_scalar("charts/episodic_return", info["episode"]["r"], global_step)

+ writer.add_scalar("charts/episodic_length", info["episode"]["l"], global_step)

# TRY NOT TO MODIFY: save data to reply buffer; handle `final_observation`

real_next_obs = next_obs.copy()
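Review note on the `exp_name` default in the hunk above: the old code used `.rstrip(".py")`, the new code slices with `[: -len(".py")]`. `str.rstrip` treats its argument as a set of characters to strip, not as a suffix, so it can eat into the base name when that name ends in `.`, `p`, or `y`. A minimal sketch of the difference follows; the helper names and the filename `numpy_policy.py` are hypothetical and chosen only to trigger the problem.

```python
def name_with_rstrip(filename: str) -> str:
    # Old behaviour: strips any trailing '.', 'p', or 'y' characters.
    return filename.rstrip(".py")


def name_with_slice(filename: str) -> str:
    # New behaviour: drops exactly the last three characters.
    # Assumes the filename really ends with ".py".
    return filename[: -len(".py")]


if __name__ == "__main__":
    for fname in ("c51.py", "numpy_policy.py"):  # second name is hypothetical
        print(fname, name_with_rstrip(fname), name_with_slice(fname))
```

For `c51.py` both forms agree, which is why the old default went unnoticed. For a name like the hypothetical one, `rstrip` also removes the final `y` of the base name.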

diff --git a/cleanrl/c51_atari.py b/cleanrl/c51_atari.py

index 8e47bacc5..97b790759 100755

--- a/cleanrl/c51_atari.py

+++ b/cleanrl/c51_atari.py

@@ -1,15 +1,15 @@

# docs and experiment results can be found at https://docs.cleanrl.dev/rl-algorithms/c51/#c51_ataripy

-import argparse

import os

import random

import time

-from distutils.util import strtobool

+from dataclasses import dataclass

import gymnasium as gym

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

+import tyro

from stable_baselines3.common.atari_wrappers import (

ClipRewardEnv,

EpisodicLifeEnv,

@@ -21,70 +21,64 @@

from torch.utils.tensorboard import SummaryWriter

-def parse_args():

- # fmt: off

- parser = argparse.ArgumentParser()

- parser.add_argument("--exp-name", type=str, default=os.path.basename(__file__).rstrip(".py"),

- help="the name of this experiment")

- parser.add_argument("--seed", type=int, default=1,

- help="seed of the experiment")

- parser.add_argument("--torch-deterministic", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

- help="if toggled, `torch.backends.cudnn.deterministic=False`")

- parser.add_argument("--cuda", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

- help="if toggled, cuda will be enabled by default")

- parser.add_argument("--track", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="if toggled, this experiment will be tracked with Weights and Biases")

- parser.add_argument("--wandb-project-name", type=str, default="cleanRL",

- help="the wandb's project name")

- parser.add_argument("--wandb-entity", type=str, default=None,

- help="the entity (team) of wandb's project")

- parser.add_argument("--capture-video", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to capture videos of the agent performances (check out `videos` folder)")

- parser.add_argument("--save-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to save model into the `runs/{run_name}` folder")

- parser.add_argument("--upload-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to upload the saved model to huggingface")

- parser.add_argument("--hf-entity", type=str, default="",

- help="the user or org name of the model repository from the Hugging Face Hub")

+@dataclass

+class Args:

+ exp_name: str = os.path.basename(__file__)[: -len(".py")]

+ """the name of this experiment"""

+ seed: int = 1

+ """seed of the experiment"""

+ torch_deterministic: bool = True

+ """if toggled, `torch.backends.cudnn.deterministic=False`"""

+ cuda: bool = True

+ """if toggled, cuda will be enabled by default"""

+ track: bool = False

+ """if toggled, this experiment will be tracked with Weights and Biases"""

+ wandb_project_name: str = "cleanRL"

+ """the wandb's project name"""

+ wandb_entity: str = None

+ """the entity (team) of wandb's project"""

+ capture_video: bool = False

+ """whether to capture videos of the agent performances (check out `videos` folder)"""

+ save_model: bool = False

+ """whether to save model into the `runs/{run_name}` folder"""

+ upload_model: bool = False

+ """whether to upload the saved model to huggingface"""

+ hf_entity: str = ""

+ """the user or org name of the model repository from the Hugging Face Hub"""

# Algorithm specific arguments

- parser.add_argument("--env-id", type=str, default="BreakoutNoFrameskip-v4",

- help="the id of the environment")

- parser.add_argument("--total-timesteps", type=int, default=10000000,

- help="total timesteps of the experiments")

- parser.add_argument("--learning-rate", type=float, default=2.5e-4,

- help="the learning rate of the optimizer")

- parser.add_argument("--num-envs", type=int, default=1,

- help="the number of parallel game environments")

- parser.add_argument("--n-atoms", type=int, default=51,

- help="the number of atoms")

- parser.add_argument("--v-min", type=float, default=-10,

- help="the return lower bound")

- parser.add_argument("--v-max", type=float, default=10,

- help="the return upper bound")

- parser.add_argument("--buffer-size", type=int, default=1000000,

- help="the replay memory buffer size")

- parser.add_argument("--gamma", type=float, default=0.99,

- help="the discount factor gamma")

- parser.add_argument("--target-network-frequency", type=int, default=10000,

- help="the timesteps it takes to update the target network")

- parser.add_argument("--batch-size", type=int, default=32,

- help="the batch size of sample from the reply memory")

- parser.add_argument("--start-e", type=float, default=1,

- help="the starting epsilon for exploration")

- parser.add_argument("--end-e", type=float, default=0.01,

- help="the ending epsilon for exploration")

- parser.add_argument("--exploration-fraction", type=float, default=0.10,

- help="the fraction of `total-timesteps` it takes from start-e to go end-e")

- parser.add_argument("--learning-starts", type=int, default=80000,

- help="timestep to start learning")

- parser.add_argument("--train-frequency", type=int, default=4,

- help="the frequency of training")

- args = parser.parse_args()

- # fmt: on

- assert args.num_envs == 1, "vectorized envs are not supported at the moment"

-

- return args

+ env_id: str = "BreakoutNoFrameskip-v4"

+ """the id of the environment"""

+ total_timesteps: int = 10000000

+ """total timesteps of the experiments"""

+ learning_rate: float = 2.5e-4

+ """the learning rate of the optimizer"""

+ num_envs: int = 1

+ """the number of parallel game environments"""

+ n_atoms: int = 51

+ """the number of atoms"""

+ v_min: float = -10

+ """the return lower bound"""

+ v_max: float = 10

+ """the return upper bound"""

+ buffer_size: int = 1000000

+ """the replay memory buffer size"""

+ gamma: float = 0.99

+ """the discount factor gamma"""

+ target_network_frequency: int = 10000

+ """the timesteps it takes to update the target network"""

+ batch_size: int = 32

+ """the batch size of samples drawn from the replay memory"""

+ start_e: float = 1

+ """the starting epsilon for exploration"""

+ end_e: float = 0.01

+ """the ending epsilon for exploration"""

+ exploration_fraction: float = 0.10

+ """the fraction of `total_timesteps` over which epsilon decays from `start_e` to `end_e`"""

+ learning_starts: int = 80000

+ """timestep to start learning"""

+ train_frequency: int = 4

+ """the frequency of training"""

def make_env(env_id, seed, idx, capture_video, run_name):

@@ -158,7 +152,8 @@ def linear_schedule(start_e: float, end_e: float, duration: int, t: int):

poetry run pip install "stable_baselines3==2.0.0a1" "gymnasium[atari,accept-rom-license]==0.28.1" "ale-py==0.8.1"

"""

)

- args = parse_args()

+ args = tyro.cli(Args)

+ assert args.num_envs == 1, "vectorized envs are not supported at the moment"

run_name = f"{args.env_id}__{args.exp_name}__{args.seed}__{int(time.time())}"

if args.track:

import wandb

@@ -224,14 +219,10 @@ def linear_schedule(start_e: float, end_e: float, duration: int, t: int):

# TRY NOT TO MODIFY: record rewards for plotting purposes

if "final_info" in infos:

for info in infos["final_info"]:

- # Skip the envs that are not done

- if "episode" not in info:

- continue

- print(f"global_step={global_step}, episodic_return={info['episode']['r']}")

- writer.add_scalar("charts/episodic_return", info["episode"]["r"], global_step)

- writer.add_scalar("charts/episodic_length", info["episode"]["l"], global_step)

- writer.add_scalar("charts/epsilon", epsilon, global_step)

- break

+ if info and "episode" in info:

+ print(f"global_step={global_step}, episodic_return={info['episode']['r']}")

+ writer.add_scalar("charts/episodic_return", info["episode"]["r"], global_step)

+ writer.add_scalar("charts/episodic_length", info["episode"]["l"], global_step)

# TRY NOT TO MODIFY: save data to reply buffer; handle `final_observation`

real_next_obs = next_obs.copy()

diff --git a/cleanrl/c51_atari_jax.py b/cleanrl/c51_atari_jax.py

index 93c436ec5..8cd46e855 100644

--- a/cleanrl/c51_atari_jax.py

+++ b/cleanrl/c51_atari_jax.py

@@ -1,9 +1,8 @@

# docs and experiment results can be found at https://docs.cleanrl.dev/rl-algorithms/c51/#c51_atari_jaxpy

-import argparse

import os

import random

import time

-from distutils.util import strtobool

+from dataclasses import dataclass

os.environ[

"XLA_PYTHON_CLIENT_MEM_FRACTION"

@@ -16,6 +15,7 @@

import jax.numpy as jnp

import numpy as np

import optax

+import tyro

from flax.training.train_state import TrainState

from stable_baselines3.common.atari_wrappers import (

ClipRewardEnv,

@@ -28,66 +28,60 @@

from torch.utils.tensorboard import SummaryWriter

-def parse_args():

- # fmt: off

- parser = argparse.ArgumentParser()

- parser.add_argument("--exp-name", type=str, default=os.path.basename(__file__).rstrip(".py"),

- help="the name of this experiment")

- parser.add_argument("--seed", type=int, default=1,

- help="seed of the experiment")

- parser.add_argument("--track", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="if toggled, this experiment will be tracked with Weights and Biases")

- parser.add_argument("--wandb-project-name", type=str, default="cleanRL",

- help="the wandb's project name")

- parser.add_argument("--wandb-entity", type=str, default=None,

- help="the entity (team) of wandb's project")

- parser.add_argument("--capture-video", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to capture videos of the agent performances (check out `videos` folder)")

- parser.add_argument("--save-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to save model into the `runs/{run_name}` folder")

- parser.add_argument("--upload-model", type=lambda x: bool(strtobool(x)), default=False, nargs="?", const=True,

- help="whether to upload the saved model to huggingface")

- parser.add_argument("--hf-entity", type=str, default="",

- help="the user or org name of the model repository from the Hugging Face Hub")

+@dataclass

+class Args:

+ exp_name: str = os.path.basename(__file__)[: -len(".py")]

+ """the name of this experiment"""

+ seed: int = 1

+ """seed of the experiment"""

+ track: bool = False

+ """if toggled, this experiment will be tracked with Weights and Biases"""

+ wandb_project_name: str = "cleanRL"

+ """the wandb's project name"""

+ wandb_entity: str = None

+ """the entity (team) of wandb's project"""

+ capture_video: bool = False

+ """whether to capture videos of the agent performances (check out `videos` folder)"""

+ save_model: bool = False

+ """whether to save model into the `runs/{run_name}` folder"""

+ upload_model: bool = False

+ """whether to upload the saved model to huggingface"""

+ hf_entity: str = ""

+ """the user or org name of the model repository from the Hugging Face Hub"""

# Algorithm specific arguments

- parser.add_argument("--env-id", type=str, default="BreakoutNoFrameskip-v4",

- help="the id of the environment")

- parser.add_argument("--total-timesteps", type=int, default=10000000,

- help="total timesteps of the experiments")

- parser.add_argument("--learning-rate", type=float, default=2.5e-4,

- help="the learning rate of the optimizer")

- parser.add_argument("--num-envs", type=int, default=1,

- help="the number of parallel game environments")

- parser.add_argument("--n-atoms", type=int, default=51,

- help="the number of atoms")

- parser.add_argument("--v-min", type=float, default=-10,

- help="the return lower bound")

- parser.add_argument("--v-max", type=float, default=10,

- help="the return upper bound")

- parser.add_argument("--buffer-size", type=int, default=1000000,

- help="the replay memory buffer size")

- parser.add_argument("--gamma", type=float, default=0.99,

- help="the discount factor gamma")

- parser.add_argument("--target-network-frequency", type=int, default=10000,

- help="the timesteps it takes to update the target network")

- parser.add_argument("--batch-size", type=int, default=32,

- help="the batch size of sample from the reply memory")

- parser.add_argument("--start-e", type=float, default=1,

- help="the starting epsilon for exploration")

- parser.add_argument("--end-e", type=float, default=0.01,

- help="the ending epsilon for exploration")

- parser.add_argument("--exploration-fraction", type=float, default=0.1,

- help="the fraction of `total-timesteps` it takes from start-e to go end-e")

- parser.add_argument("--learning-starts", type=int, default=80000,

- help="timestep to start learning")

- parser.add_argument("--train-frequency", type=int, default=4,

- help="the frequency of training")

- args = parser.parse_args()

- # fmt: on

- assert args.num_envs == 1, "vectorized envs are not supported at the moment"

-

- return args

+ env_id: str = "BreakoutNoFrameskip-v4"

+ """the id of the environment"""

+ total_timesteps: int = 10000000

+ """total timesteps of the experiments"""

+ learning_rate: float = 2.5e-4

+ """the learning rate of the optimizer"""

+ num_envs: int = 1

+ """the number of parallel game environments"""

+ n_atoms: int = 51

+ """the number of atoms"""

+ v_min: float = -10

+ """the return lower bound"""

+ v_max: float = 10

+ """the return upper bound"""

+ buffer_size: int = 1000000

+ """the replay memory buffer size"""

+ gamma: float = 0.99

+ """the discount factor gamma"""

+ target_network_frequency: int = 10000

+ """the timesteps it takes to update the target network"""

+ batch_size: int = 32

+ """the batch size of samples drawn from the replay memory"""

+ start_e: float = 1

+ """the starting epsilon for exploration"""

+ end_e: float = 0.01

+ """the ending epsilon for exploration"""

+ exploration_fraction: float = 0.10