-

Notifications

You must be signed in to change notification settings - Fork 18

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

How optimizable is BigDecimal? #27

Comments

|

"has some highly optimized implementations" is too vague to be useful as a statement. For example, large integers (~ BigInts) "have some highly optimized implementations" (e.g.: GMP, MPFR, Flint, OpenJDK), but no browser is shipping or (to the best of my knowledge) planning to ship any of those (for various reasons: licence compatibility, memory management, ...). I would expect a native implementation to have no significant performance benefit compared to a user-space library/polyfill. |

|

@jakobkummerow Yep, this is why I'm using the word "optimizable" rather than making claims about performance. |

|

Probably my implementation is fastest on the market https://github.com/munrocket/double.js |

|

@munrocket It doesn't look like that library does what this proposal does, namely arbitrary-precision. |

|

You can't optimize proposed BigDecimal in base10 good enough, because hardware not support it yet. I am agree with @jakobkummerow by the way and #31 Consider good sofware handles for hardware and users will create a proper certified libraries, they is not that hard. Main reason why bigdecimal.js is slow, becasue JS don't have int32/int64 and FMA instruction to make such libraries with good performance and accuracy. Even wasm can't give a proper FMA instruction in base2 that fit to IEEE-754 on every machines right now https://github.com/WebAssembly/simd/issues/10. Without this basic native primitives we can't do proper libraries that you wish. |

|

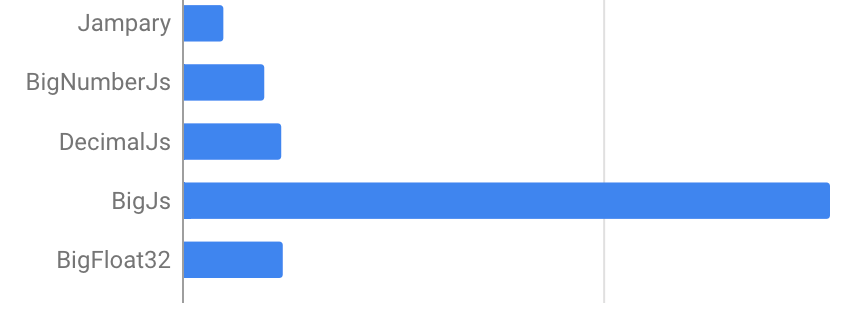

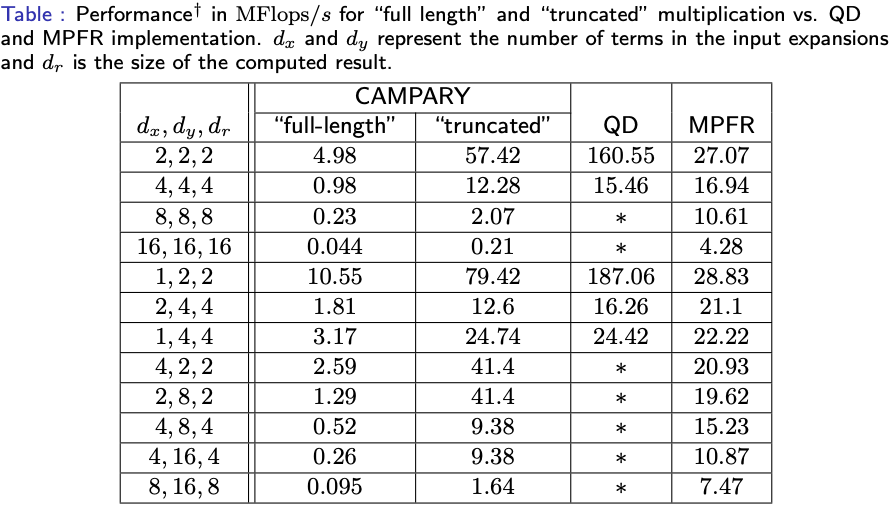

Recently I am tried to implement modern arbitrary precision library CAMPARY in javascript. I have pretty good early results that can be probably improved x2 with better memory management and x20 for multiplication with FMA (that not exist in JS). But then I realized that algorithms based on EFT is better for small numbers or GPU implementation. Since this proposal on CPU, implementations that uses long integers like GMP and MPFR is better for huge numbers. On this picture first column show how many terms it represents, for example [4,4,4] is ~4*53 bits or ~60 decimal digits. So, as you can see double-double / quad-double is faster for small numbers and have really simple implementation, while library based on integers like MPFR or Decimal64 (if it also based on integers) will be faster for really big numbers, if it implemented properly. Probably exist a simple way how to implement Decimal64 too, if it uses BigInt. There are other libraries like MPFUN, ARB, ARPREC etc. But not all of them has correct rounding. We should understand that modern CPU usually have integer and FMA, but javascript is not. And we need it for good user space arbitrary precision libraries. Also worth mention that JS<->WASM interoperation is awful for such things, so WASM can't be a graceful alternative until algorithm not implemented inside WASM sandbox too (MPFR++ can be useful in this case). |

|

@munsocket ,

looks like this talks about "comparison operation", not the advantages of the formats. @munsocket, what is the maximum binary precision of your library? is it about 960 bits? |

|

@Yaffle it was maid just for fun and based on Campary which is truly arbitrary. I can’t guarantee full precision right now and it have a room for performance improvement. Right now it is calculating something that better than double. |

|

Interesting papers:

Don’t know why nobody posted this papers here. So here alternative implementation that should be faster than traditional one with integers. |

|

@syg I know you mentioned some concerns about BigDecimal in the December Decimal update. Are optimizations such as this one on the table and would they address your concerns? |

IIRC my concerns were about maintenance burden and complexity in the codebase given the poor ROI from BigInts, and the maintenance burden that resulted from that. I'm not sure how these optimizations would address my concerns. |

@apaprocki has suggested that, for cases where it's within a certain range of precision, BigDecimal could be lowered to IEEE 754 decimal64, which has some highly optimized implementations.

Within a prototype implementation, we could assess whether such an optimization is indeed practical.

I'd suggest we investigate optimizability once we have several more basic design issues worked out. Some choices that we make about the semantics may affect this.

The text was updated successfully, but these errors were encountered: