-

Notifications

You must be signed in to change notification settings - Fork 2.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

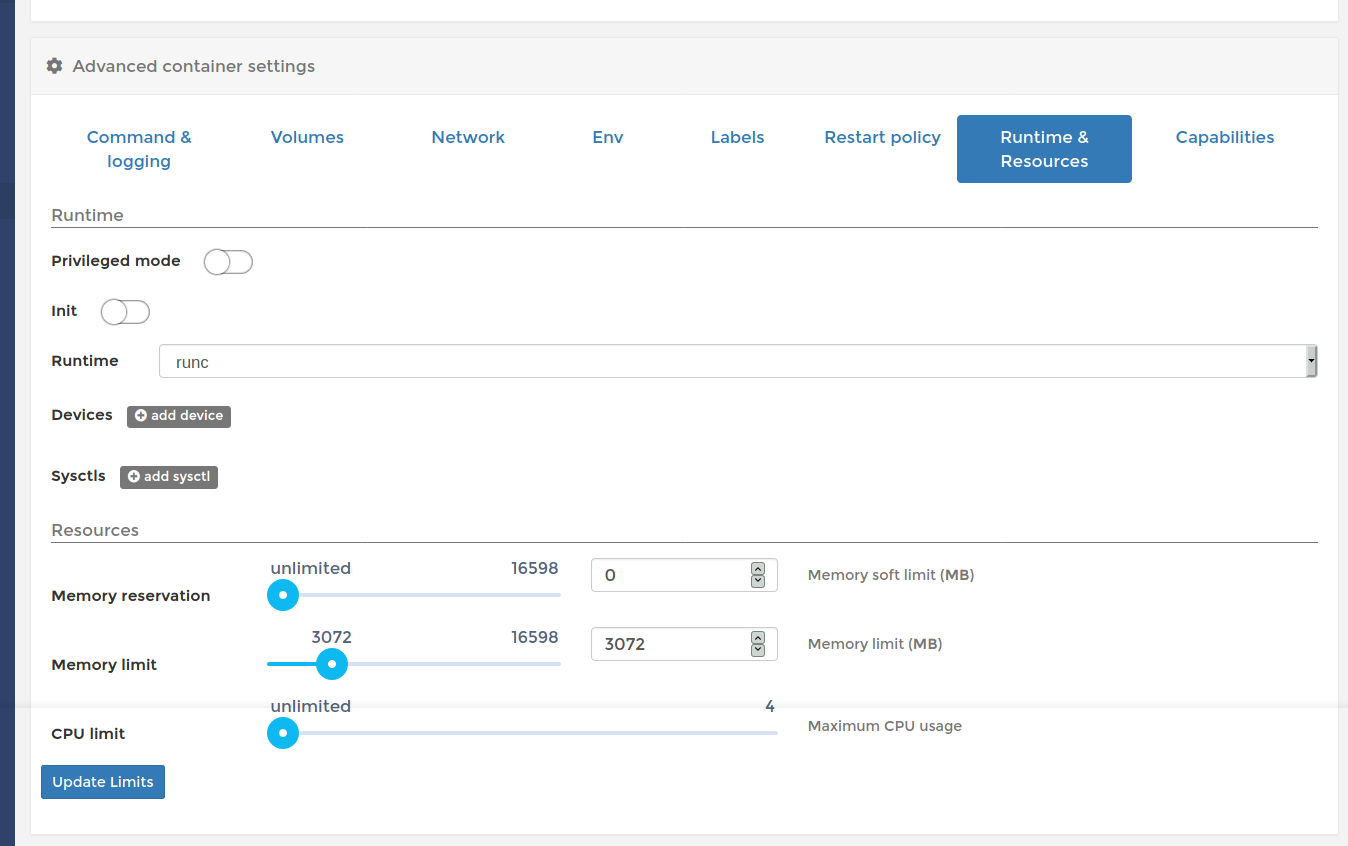

Docker limits realtime-update #5906


PR #5909


Hi, thanks for the contribution! I'm trying to understand a little more about your use case. Would you be able to explain what you're trying to achieve with the change? For instance, with the current version of Portainer, if I wanted to update the resources I'd do:

So I'm curious about the workflow you have in mind.

Thanks for the reply. This provides a way to update just the limits, without needing to recreate the container:

The second one happens in real time: the container doesn't need to be restarted, so the business application's process doesn't need to be restarted either.

```
$ docker update --help

Options:
  --blkio-weight uint16          Block IO (relative weight), between 10 and 1000, or 0 to disable (default 0)
  --cpu-period int               Limit CPU CFS (Completely Fair Scheduler) period
  --cpu-quota int                Limit CPU CFS (Completely Fair Scheduler) quota
  --cpu-rt-period int            Limit the CPU real-time period in microseconds
  --cpu-rt-runtime int           Limit the CPU real-time runtime in microseconds
  -c, --cpu-shares int           CPU shares (relative weight)
  --cpus decimal                 Number of CPUs
  --cpuset-cpus string           CPUs in which to allow execution (0-3, 0,1)
  --cpuset-mems string           MEMs in which to allow execution (0-3, 0,1)
  --kernel-memory bytes          Kernel memory limit
  -m, --memory bytes             Memory limit
  --memory-reservation bytes     Memory soft limit
  --memory-swap bytes            Swap limit equal to memory plus swap: '-1' to enable unlimited swap
  --restart string               Restart policy to apply when a container exits
```
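As a rough sketch of what the in-place update involves: the Docker Engine API exposes the same operation as `POST /containers/{id}/update`, and its JSON body uses fields such as `NanoCpus`, `Memory` and `MemoryReservation`. The Python below is an illustration, not the code from the PR. It assumes only those field names and a simplified subset of the CLI's size suffixes (`b`, `k`, `m`, `g`). `parse_bytes` and `build_update_body` are hypothetical helper names. The result is roughly the body that `docker update --cpus 1.5 --memory 1g --memory-reservation 512m` would send.

```python
import json

# Simplified subset of the size suffixes the Docker CLI accepts (base 1024).
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_bytes(value: str) -> int:
    """Convert a CLI-style size such as '512m' or '2g' into a byte count."""
    value = value.strip().lower()
    if value and value[-1] in _UNITS:
        return int(float(value[:-1]) * _UNITS[value[-1]])
    return int(value)  # plain number: already in bytes


def build_update_body(cpus=None, memory=None, memory_reservation=None) -> dict:
    """Build a body for the (assumed) Engine API container update endpoint.

    Only the limits that were actually given are included, so anything the
    user did not touch is left as it is on the running container.
    """
    body = {}
    if cpus is not None:
        # --cpus is sent to the API in units of 1e-9 CPUs.
        body["NanoCpus"] = int(round(cpus * 1e9))
    if memory is not None:
        body["Memory"] = parse_bytes(memory)
    if memory_reservation is not None:
        body["MemoryReservation"] = parse_bytes(memory_reservation)
    return body


if __name__ == "__main__":
    body = build_update_body(cpus=1.5, memory="1g", memory_reservation="512m")
    print(json.dumps(body))
    # {"NanoCpus": 1500000000, "Memory": 1073741824, "MemoryReservation": 536870912}
```

Sending only the changed fields keeps the request a pure "adjust limits" operation, which is what distinguishes it from recreating the container.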

For some reason the CI system hasn't actually created a build for it, which would be useful for anyone who wants to try it out, so we might have to add another commit. At the moment I'm still weighing the benefit of not having to recreate a container against the side effects that allowing in-place container updates could have. And does it work equally well for Docker standalone and Swarm? @SvenDowideit @d1mnewz any thoughts?

@huib-portainer IMO, from a novice user's point of view nothing will change; they shouldn't need to care much about restarting versus updating on the fly. I don't know how updating works with Swarm, though. Also, I'm keen on this feature because Kubernetes does exactly the same thing: it updates deployment limits on the fly, without redeploying the pod.

If they don't care about restart vs. update, then why add the complexity to the UI?

I guess my first thought is: with the UX that @huib-portainer has, why would that redeploy, and not detect that it can do it using

My second thought is: OMG, that's a lot of UX steps to do something the user would expect to be simple. As a user, I would care about needless restarts; for example, the GitLab Omnibus container takes over a minute to restart. If I'm hitting resource constraints, I'd like to go to Portainer, see that I'm running out of X, and right there update the limits on the already existing container. I'd then want to be able to assume that this has also been stored in Portainer, so that if I ever need to restart it, the right limits are applied.
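One way to read the "detect" suggestion above is to compare the current and desired settings, apply the change live when only updatable limits differ, and redeploy otherwise. This is a minimal sketch under stated assumptions. Settings are modelled as a flat dict keyed by Engine API field names. `LIVE_UPDATABLE`, `changed_fields` and `plan_change` are hypothetical names. The field set is an assumed mapping of the `docker update --help` options quoted in this thread, not Portainer's actual logic.

```python
# Assumed Engine API names for the settings `docker update` can change on a
# running container (mapped by hand from the CLI flags; not exhaustive).
LIVE_UPDATABLE = frozenset({
    "BlkioWeight", "CpuPeriod", "CpuQuota", "CpuRealtimePeriod",
    "CpuRealtimeRuntime", "CpuShares", "NanoCpus", "CpusetCpus",
    "CpusetMems", "KernelMemory", "Memory", "MemoryReservation",
    "MemorySwap", "RestartPolicy",
})


def changed_fields(current: dict, desired: dict) -> set:
    """Return keys whose values differ, including keys present on one side only."""
    return {k for k in current.keys() | desired.keys() if current.get(k) != desired.get(k)}


def plan_change(current: dict, desired: dict) -> str:
    """Decide how to apply an edit: 'noop', 'update' in place, or 'redeploy'."""
    changed = changed_fields(current, desired)
    if not changed:
        return "noop"
    if changed <= LIVE_UPDATABLE:
        # Every changed setting can be applied live, so no restart is needed.
        return "update"
    # At least one setting (e.g. the image) requires a new container.
    return "redeploy"


if __name__ == "__main__":
    current = {"Image": "gitlab/gitlab-ce", "Memory": 4 * 1024**3}
    print(plan_change(current, {**current, "Memory": 6 * 1024**3}))      # update
    print(plan_change(current, {**current, "Image": "gitlab/gitlab-ee"}))  # redeploy
```

With a check like this, the UI could keep a single edit form and choose between updating and redeploying behind the scenes, rather than exposing separate sections for each.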

@yi-portainer the additional commits haven't actually solved the issue with the CI.

I've managed to get the CI working again, so this can be tried by using the image

Looking at the UI, the first section only updates the container, while the second section redeploys it, and the third section defines how a container gets redeployed. At the moment that doesn't feel very coherent to me, but I don't see an easy way out of it either.

Okay, I'll change the latter.

Awesome, thanks!

Update Docker limits (CPU, memory, memory reservation) in real time.
