Warning

Remember that any modification that alters the standard behavior of the lib will break the applications that use it on the SEPAL app dashboard.

After forking the project, run the following commands to start developing:

$ git clone https://github.com/<github id>/sepal_ui.git

$ cd sepal_ui

$ pip install -e .[dev]

Warning

setuptools > 60 changed its editable installation process. If your environment does not support it (e.g. SEPAL), use the following line instead:

$ pip install -e .[dev] --config-settings editable_mode=compat

!DANGER!

The pre-commit hooks are installed with the editable (dev) installation. Every commit that does not respect the Conventional Commits framework will be refused.

You can read this documentation to learn more about them. We highly recommend using the commitizen lib to create your commits: https://commitizen-tools.github.io/commitizen.

The tool is currently translated into the following languages:

| English | Français | Español |
| --- | --- | --- |

You can contribute to the translation effort on our Crowdin project. Contributors can suggest new languages and new translations. An admin will review these suggestions as quickly as possible. If nobody in the core team masters the suggested language, we'll be forced to trust you!

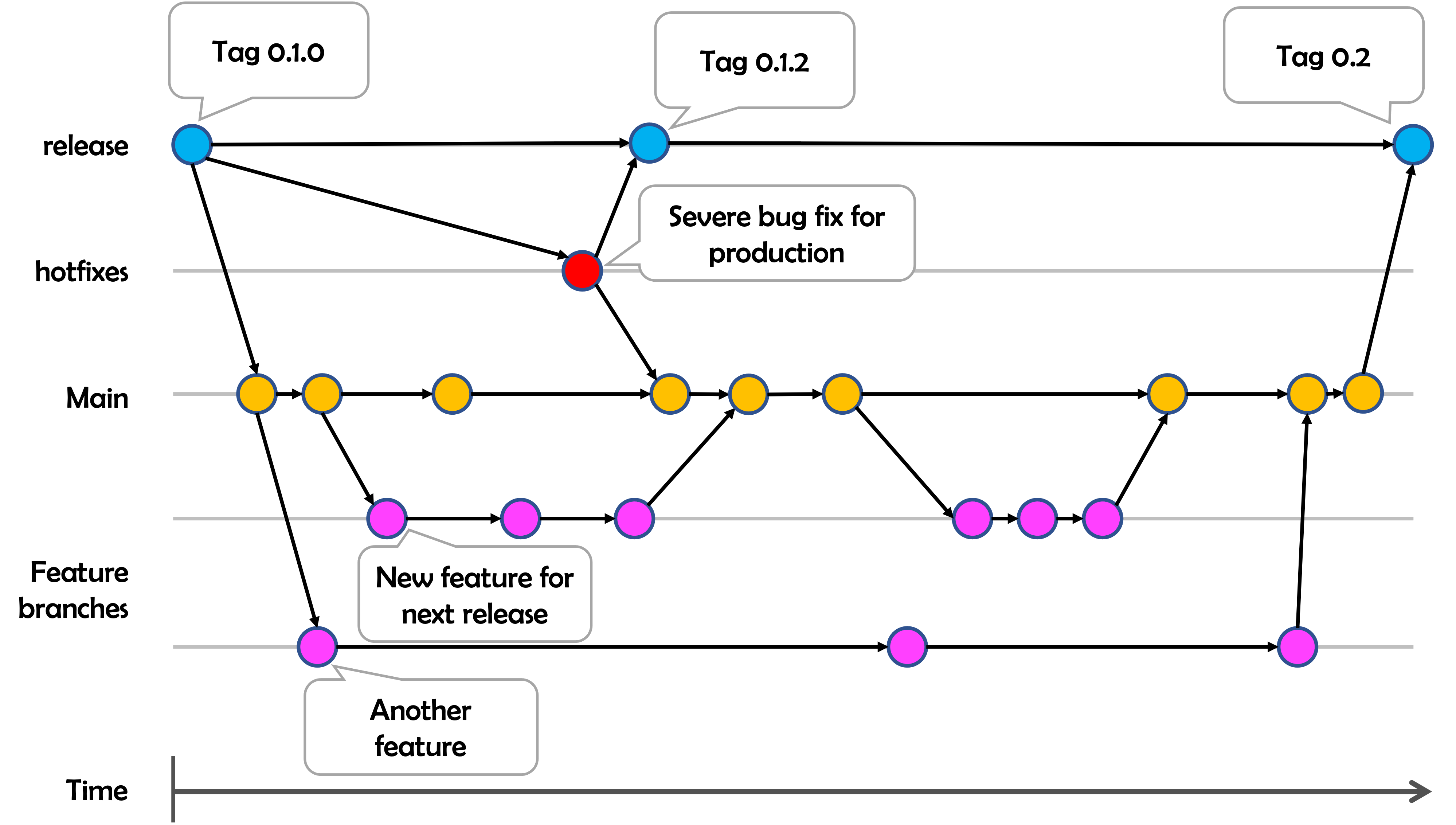

Since 2020-08-14, this repository follows these development guidelines. The git flow is thus the following:

Please use the --no-ff option when merging to keep the repository history consistent with the PRs.

To adapt the project to JupyterLab IntelliSense, we decided to explicitly write the return statement for every function.
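A minimal sketch of this convention, using hypothetical helper functions that are not part of sepal_ui: even a function that only has side effects ends with an explicit return.

```python
def set_label(widget: dict, text: str) -> None:
    """Hypothetical helper: store a label in a widget-like dict."""
    widget["label"] = text
    # explicit return, even though the function only has side effects
    return


def get_label(widget: dict) -> str:
    """Hypothetical helper: read the label back, or an empty string."""
    # explicit return of the computed value
    return widget.get("label", "")


widget = {}
set_label(widget, "Run")
print(get_label(widget))  # prints Run
```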

When you create a new function or class, please use the Deprecated lib to mark it as new in the documentation (the same lib also provides decorators for modified and deprecated features):

from deprecated.sphinx import deprecated
from deprecated.sphinx import versionadded
from deprecated.sphinx import versionchanged


@versionadded(version="1.0", reason="This function is new")
def function_one():
    """This is the function one"""
    return


@versionchanged(version="1.0", reason="This function is modified")
def function_two():
    """This is the function two"""
    return


@deprecated(version="1.0", reason="This function will be removed soon")
def function_three():
    """This is the function three"""
    return

In this repository we use the Conventional Commits specification, a lightweight convention on top of commit messages. It provides an easy set of rules for creating an explicit commit history, which makes it easier to write automated tools on top of it. This convention dovetails with SemVer by describing the features, fixes, and breaking changes made in commit messages.

You can learn more about Conventional Commits by following this link: https://www.conventionalcommits.org.
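To check a message before committing, the header rule can be approximated with a regular expression. This is a simplified sketch, not the hook used by this repository: the list of accepted types below is an assumption based on common Conventional Commits usage, and the pre-commit hook may accept a different set.

```python
import re

# Approximate Conventional Commits header: <type>[(<scope>)][!]: <description>
# The accepted types are an assumption, not the repository's exact list.
HEADER_PATTERN = re.compile(
    r"^(?P<type>build|chore|ci|docs|feat|fix|perf|refactor|revert|style|test)"
    r"(?:\((?P<scope>[^()\s]+)\))?"
    r"(?P<breaking>!)?"
    r": (?P<description>\S.*)$"
)


def is_conventional(message: str) -> bool:
    """Return True if the first line of message looks like a Conventional Commit header."""
    header = message.splitlines()[0] if message else ""
    return HEADER_PATTERN.match(header) is not None


for msg in ["feat(translation): add Spanish keys", "fix!: drop python 3.7", "Update stuff"]:
    print(f"{msg!r}: {is_conventional(msg)}")
```

Only the first line is checked because the specification puts the type, optional scope and description in the header; the body and footers are free-form.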

Our branching system embeds some rules to avoid crashing the production environment. If you want to contribute to this framework, here are some basic rules that we do our best to follow:

- the modification you offer solves a critical bug in prod: PR to main

- the modification you propose is a test, documentation, typo, quality, refactoring, or translation change: PR to main

- the modification you propose is a new feature: open an issue to discuss it with the maintainers, then PR to main

The maintainers will do their best to use PRs for new features to help the community follow the development; for other modifications, they will simply push to the appropriate branch.

To start, install nox:

$ pip install nox

You can call nox from the command line to perform the common actions needed during the build. nox operates with isolated environments, so each action has its own packages installed in a local directory (.nox). For common development actions, you’ll simply need nox and won’t need to set up any other packages.

pre-commit allows us to run several checks on the codebase every time a new Git commit is made. This ensures standards and basic quality control for our code.

Install pre-commit with the following command:

$ pip install pre-commit

Then navigate to this repository’s folder and activate it like so:

$ pre-commit install

This will install the necessary dependencies to run pre-commit every time you make a commit with Git.

Note

Your pre-commit dependencies will be installed in the environment from which you’re calling pre-commit, nox, etc. They will not be installed in the isolated environments used by nox.

!DANGER!

For maintainers only

Warning

You need to use the commitizen lib to create your release: https://commitizen-tools.github.io/commitizen
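Since the release version is derived from the commit history, the underlying rule can be sketched as follows. This simplified function only implements the rule stated by the Conventional Commits specification (breaking change: major, feat: minor, fix: patch) for plain X.Y.Z versions; it is an illustration, and cz bump applies its own, more complete configuration, so use it for real releases.

```python
import re
from typing import List

# Type, optional scope and optional "!" of a Conventional Commit header
HEADER = re.compile(r"^(?P<type>\w+)(?:\([^)]*\))?(?P<bang>!)?:")


def next_version(current: str, messages: List[str]) -> str:
    """Return the next SemVer version implied by the commit messages (simplified)."""
    major, minor, patch = (int(part) for part in current.split("."))
    types = set()
    breaking = False
    for message in messages:
        match = HEADER.match(message)
        if match is None:
            continue  # not a Conventional Commit: ignored
        types.add(match.group("type"))
        if match.group("bang") or "BREAKING CHANGE" in message:
            breaking = True
    if breaking:
        return f"{major + 1}.0.0"
    if "feat" in types:
        return f"{major}.{minor + 1}.0"
    if "fix" in types:
        return f"{major}.{minor}.{patch + 1}"
    # nothing that requires a release: keep the current version
    return current
```

For example, next_version("2.16.4", ["fix: a", "feat(map): new control"]) gives "2.17.0": the highest-ranking change wins.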

Change the version number in the files by running commitizen bump:

$ cz bump

It should update the version number in sepal_ui/__init__.py, setup.py, and .cz.yaml according to semantic versioning, based on the conventional commits used in the lib.

It will also update the CHANGELOG.md file with the latest commits, sorted by category, if you run the following code using the version bumped in the previous commit.

!DANGER!

As long as commitizen-tools/commitizen#463 remains open, the version names of this repository won't work with the commitizen lib and the changelog won't be updated. As a maintainer, you need to clone the project and follow the instructions from commitizen-tools/commitizen#463 (comment).

Then push the current main branch to the release branch. You can now create a new tag with your new version number. Use the same convention as the one found in .cz.yaml: v_$major.$minor.$patch$prerelease.
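For illustration, assuming the tag format follows the semantic-versioning order v_$major.$minor.$patch$prerelease, Python's string.Template can expand the same $-placeholders. The prerelease suffixes accepted by TAG_PATTERN (a, b or rc followed by a number) are an assumption, not a rule taken from .cz.yaml.

```python
import re
from string import Template

# Assumed tag format, using the same placeholders as .cz.yaml
TAG_FORMAT = Template("v_$major.$minor.$patch$prerelease")
# Assumed shape of a valid tag (prerelease suffix is optional)
TAG_PATTERN = re.compile(r"^v_\d+\.\d+\.\d+(?:(?:a|b|rc)\d+)?$")


def make_tag(version: str, prerelease: str = "") -> str:
    """Build a tag name from a plain X.Y.Z version and an optional prerelease suffix."""
    major, minor, patch = version.split(".")
    return TAG_FORMAT.substitute(major=major, minor=minor, patch=patch, prerelease=prerelease)


print(make_tag("2.16.4"))  # prints v_2.16.4
```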

Warning

The target branch of the new release is release, not main.

The CI should take over from here and run the Upload Python Package GitHub Action, which publishes the new version on PyPI.

Once it's done, you need to trigger the rebuild of SEPAL: modify the following file with the latest version number and the rebuild will start automatically.

Sometimes it is useful to create environment variables to store data that your workflows will receive (e.g. for component testing). For example, the local tests of the planetapi sepal module require the PLANET_API_KEY and PLANET_API_CREDENTIALS environment variables, although these tests can also be skipped.
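A minimal sketch of how a test can be skipped when these variables are missing, using the standard library's unittest.skipUnless. The test class and helper are hypothetical; the repository's own test suite may rely on a different mechanism (for example pytest markers).

```python
import os
import unittest

# Environment variables named in this guide; the test below is hypothetical.
REQUIRED_VARS = ("PLANET_API_KEY", "PLANET_API_CREDENTIALS")


def planet_vars_available() -> bool:
    """Return True only if every required variable is set and non-empty."""
    return all(os.environ.get(name) for name in REQUIRED_VARS)


class TestPlanetApi(unittest.TestCase):
    @unittest.skipUnless(planet_vars_available(), "Planet API env vars are not set")
    def test_key_is_readable(self):
        # a real test would call the planetapi module here
        self.assertTrue(os.environ["PLANET_API_KEY"])
```

Run it with python -m unittest: without the variables, the test is reported as skipped instead of failing.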

To store a variable in your local session, type export followed by the variable name, an equal sign, and the value:

$ export PLANET_API_KEY="neverending_resourcesapi"

Tip

In SEPAL, this variable expires every time you start a new session. To recreate it in every session, go to your home folder and save the previous line in the .bash_profile file:

$ vim .bash_profile

The current environment keys and their structure are the following:

PLANET_API_CREDENTIALS='{"username": "[email protected]", "password": "secure"}'
PLANET_API_KEY="string_planet_api_key"
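Because PLANET_API_CREDENTIALS holds a JSON string, code reading it has to decode it. A sketch with a hypothetical helper, assuming the username/password structure shown above:

```python
import json
import os
from typing import Optional, Tuple


def read_planet_credentials() -> Optional[Tuple[str, str]]:
    """Return (username, password) from PLANET_API_CREDENTIALS, or None if unset.

    Hypothetical helper: assumes a JSON object with "username" and "password" keys.
    """
    raw = os.environ.get("PLANET_API_CREDENTIALS")
    if not raw:
        return None  # callers (e.g. tests) can skip when credentials are missing
    data = json.loads(raw)
    return data["username"], data["password"]
```

The single quotes around the JSON value in the shell line keep bash from stripping the inner double quotes, so the variable stays valid JSON.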

To test or use the Google Earth Engine components, you need to run the init_ee script.

In a local development environment, you can fully rely on your own GEE account. Simply make sure to run the authentication process at least once from a terminal:

$ earthengine authenticate

In a remote environment (such as GitHub Actions), you must use an environment variable to link your Earth Engine account. First, find the Earth Engine credentials file on your computer:

- Windows: C:\Users\USERNAME\.config\earthengine\credentials
- Linux: /home/USERNAME/.config/earthengine/credentials
- macOS: /Users/USERNAME/.config/earthengine/credentials

Open the credentials file and copy its content. On the GitHub Actions page, create a new secret named EARTHENGINE_TOKEN with the copied content as its value.
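On the CI side, one way to use the secret is to write it back to the default credentials location before initializing Earth Engine. This is a hypothetical helper sketched under that assumption; the init_ee script of the lib may handle the token differently.

```python
import os
from pathlib import Path
from typing import Optional


def write_ee_credentials(home: Optional[Path] = None) -> Optional[Path]:
    """Write EARTHENGINE_TOKEN to <home>/.config/earthengine/credentials.

    Hypothetical helper: returns the written path, or None if the variable is unset.
    """
    token = os.environ.get("EARTHENGINE_TOKEN")
    if not token:
        return None
    home = home or Path.home()
    credentials = home / ".config" / "earthengine" / "credentials"
    # create the folder tree if this is a fresh CI runner
    credentials.parent.mkdir(parents=True, exist_ok=True)
    credentials.write_text(token)
    return credentials
```

Only call it where EARTHENGINE_TOKEN is meant to replace the local file (for example in a CI job), since it overwrites any existing credentials at that path.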

We use sphinx-apidoc to build the API documentation of the lib, so if you want to see it in your local build, run the following line from the sepal_ui folder:

sphinx-apidoc --force --module-first --templatedir=docs/source/_templates/apidoc -o docs/source/modules sepal_ui

You can then build the documentation; it will automatically call autodoc and autosummary during the process.

Test your workflows locally using Act.

To pass your secrets to the workflows, create a secrets.env file and store the secrets of your custom workflow, for example:

EARTHENGINE_TOKEN=""

PLANET_API_CREDENTIALS=""

PLANET_API_KEY=""

FIRMS_API_KEY=""

EARTHENGINE_SERVICE_ACCOUNT=""

Then run the following command:

$ gh act --secret-file $ENV_FILE --workflows .github/workflows/unit.yml

Here $ENV_FILE is the path to your secrets.env file. You can change the workflow file to test the one you want; if you are only interested in testing specific jobs, use the --job flag.
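Before running act, it can help to check which secrets are still empty in secrets.env. A sketch with hypothetical helpers that only handle simple single-line KEY="value" entries like the ones above (no multi-line values, no escape sequences):

```python
from pathlib import Path
from typing import Dict, List


def read_env_file(path: Path) -> Dict[str, str]:
    """Parse simple KEY="value" lines into a dict (hypothetical helper)."""
    secrets: Dict[str, str] = {}
    for raw_line in path.read_text().splitlines():
        line = raw_line.strip()
        # skip blank lines, comments and anything that is not an assignment
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        # drop one pair of matching surrounding quotes, if present
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        secrets[key.strip()] = value
    return secrets


def empty_secrets(path: Path) -> List[str]:
    """Return the sorted names of the secrets that have no value yet."""
    return sorted(key for key, value in read_env_file(path).items() if not value)
```

With the template above, empty_secrets(Path("secrets.env")) lists every secret, since all values are still empty.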