
[question] nomad 0.4.1 registers check with consul to 127.0.0.1 even if it bind is 172.30.x.x #1988

Comments

|

@jrusiecki Would you mind trying with 0.5.0 RC2? Unfortunately we don't have versioned docs yet and there were many doc fixes so we decided to push the RC docs. |

|

Thx, 0.5RC2 properly registers with and without checks_use_advertise. |

|

Sweet! Thanks for following up @jrusiecki! Closing this issue |

|

@dadgar I seem to be having this issue with both the released version of nomad 0.5.0 and the linked version 0.5.0-rc2. I am playing with Nomad in a container and not using the "--net host" flag. Nomad starts and registers to consul with the following config: However both versions give me the same result. |

|

@morfien101 Thanks for the report. What version of Consul are you running? Can you paste your consul config too so we can try to reproduce? Going to re-open! |

|

@dadgar I will get this back to the state that created this issue and post all the details as soon as I can. |

|

Hi @dadgar I have also created a vagrant file that can reproduce this reliably. |

|

@morfien101 Thank you so much for doing that! Will get this fixed in the coming weeks! |

|

I have a related issue with this.

--> Either checks_use_advertise should apply to every service registration nomad is doing, or there should be more control over how job service registration is done on a per job basis... (preference is adapting the behaviour of checks_use_advertise) |

|

As a follow up, I actually got it to work using (an undocumented feature of) the client network_interface config option: This started registering nomad jobs with the "correct" IP... |
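For readers unfamiliar with the option mentioned above, here is a minimal sketch of where `network_interface` sits in a Nomad client configuration. It is an illustration only, not the poster's actual config: the interface name `eth1` is a hypothetical placeholder, and whether it changes the address used for job service registration depends on the Nomad version, as discussed in this thread.

```hcl
client {
  enabled = true

  # Hypothetical interface name; use the interface that carries the
  # address Consul should see (for example, the one with the 172.30.x.x IP).
  network_interface = "eth1"
}
```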

|

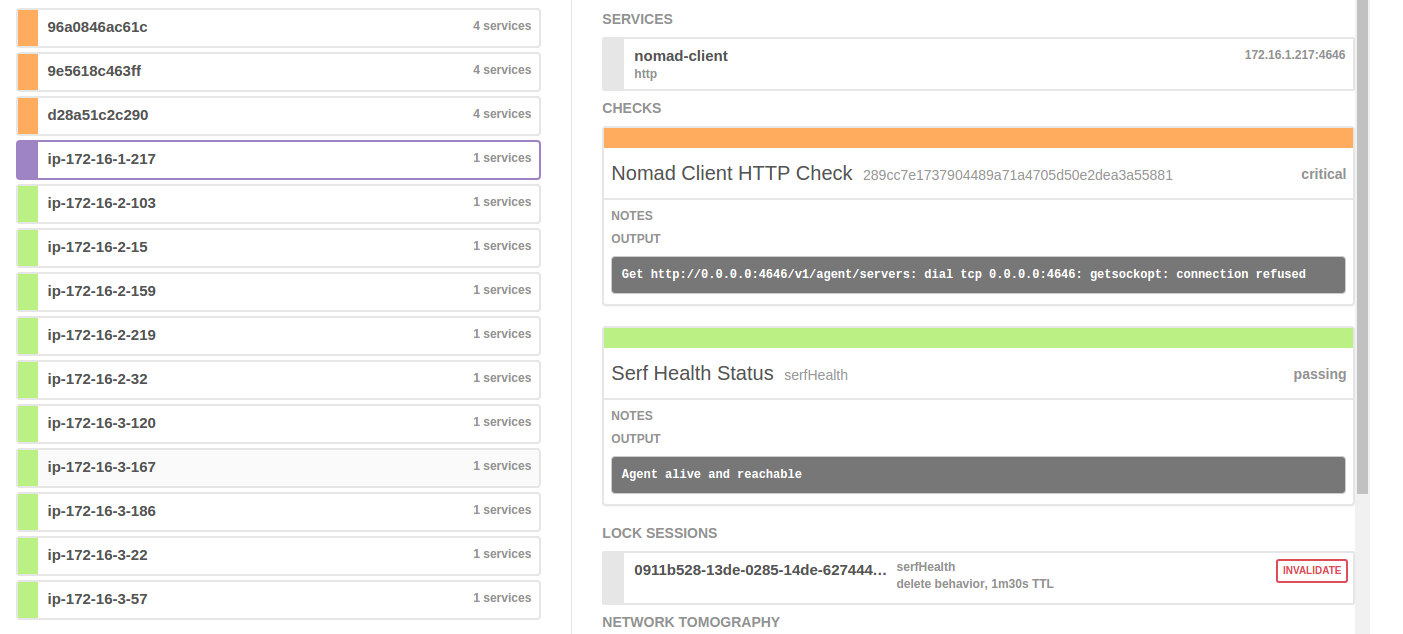

same consul 0.7.4 and nomad 0.5.4, different containers :(((( though nomad checks seem valid:

|

|

@jens-solarisbank Thanks for bumping this. This should be fixed from that release. Please test the 0.5.5 RC1: https://releases.hashicorp.com/nomad/0.5.5-rc1/ If it is still not working we can re-open! Thanks! |

|

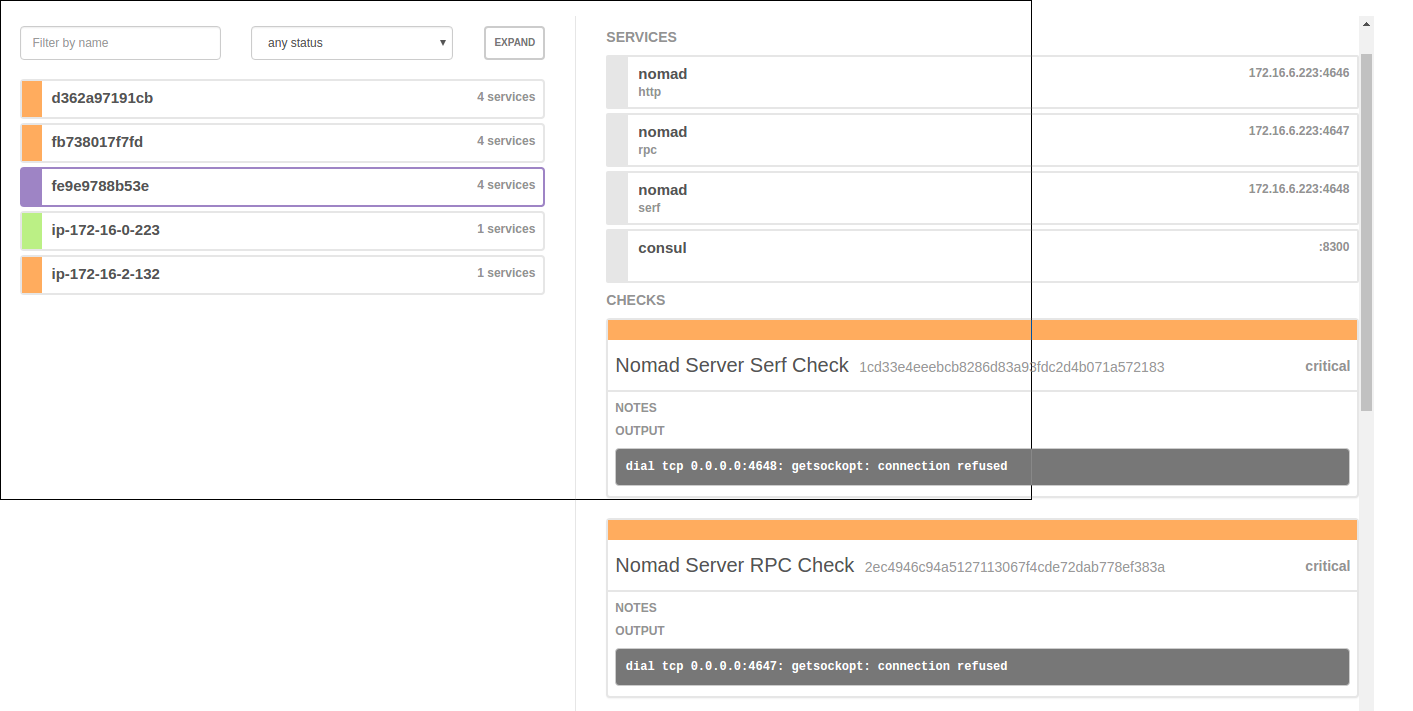

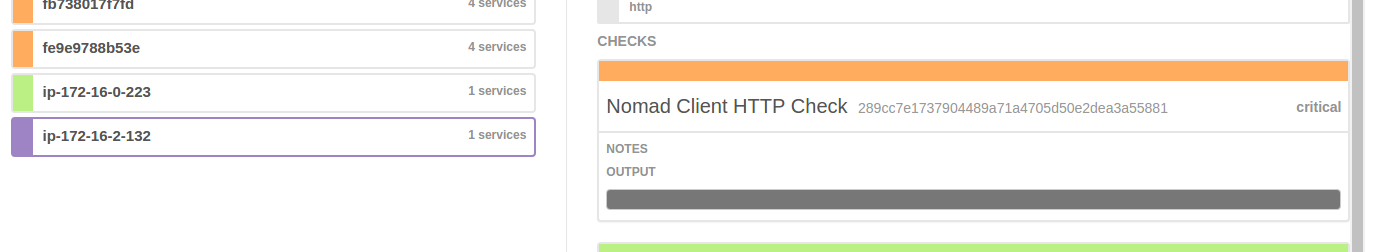

@jens-solarisbank Thanks for the details. @dadgar hello, it doesn't seem to work :( Here's the config of the "on-the-host" nomad: https://gist.github.com/b5bf4ec36f099b07cf4eb3e33f97ef77. And here's its check, which doesn't work. All checks on the nomad servers (which are inside containers) also do not work. So IMO nothing's really changed :( |

|

please reopen |

|

@jens-solarisbank @dadgar Also I haven't tried to set |

|

@dennybaa Hey, I just tried it on the RC and it is working. You have to set The configs you linked do not have that set to true. And to be clear, this is fixed in the latest RC: https://releases.hashicorp.com/nomad/0.5.5-rc1/ |

|

I'm going to lock this issue because it has been closed for 120 days ⏳. This helps our maintainers find and focus on the active issues. |

How can I make it register when Nomad is not reachable by Consul via 127.0.0.1?

Nomad 64bit v0.4.1 (Linux 64bit, Ubuntu 16.04 LTS)

Consul 64bit v0.5.2, Consul Protocol: 2 (Understands back to: 1)

When Nomad starts, it gets an error at registration:

If I list checks in Consul, it lists:

Nomad config is:

On the other hand, Nomad's checks_use_advertise switch in the consul section does not seem to work:

* consul -> invalid key: checks_use_advertise
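For context, a minimal sketch of an agent configuration using this option, assuming a Nomad version that accepts the key (the comments in this thread report it working from 0.5.0 RC2 onward, while 0.4.1 rejects it as shown above). The addresses are hypothetical placeholders in the 172.30.x.x range from the issue title, and the Consul address is assumed to be a local agent.

```hcl
# Hypothetical values throughout; substitute the host's routable address.
bind_addr = "0.0.0.0"

advertise {
  http = "172.30.0.10:4646"
  rpc  = "172.30.0.10:4647"
  serf = "172.30.0.10:4648"
}

consul {
  # Assumed: a Consul agent reachable from the Nomad process at this address.
  address = "127.0.0.1:8500"

  # Ask Nomad to register its Consul health checks against the advertise
  # address instead of the bind address.
  checks_use_advertise = true
}
```

With this in place, the checks listed in Consul would be expected to point at the advertised address and not at 127.0.0.1.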