Arnheim 1: The original algorithm from the paper Generative Art Using Neural Visual Grammars and Dual Encoders runs on a single GPU and can optimize any image using a genetic algorithm. This approach is much more general than Arnheim 2, which uses gradients, but also much slower.
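The sketch below illustrates why a genetic algorithm can optimize any image: it only needs to *evaluate* a candidate, never to differentiate it. It is a minimal, self-contained illustration and not the Arnheim 1 code. The genome is assumed to be a flat vector of floats, and the `fitness` function is a hypothetical stand-in for scoring a rendered image. Selection is a simple steady-state binary tournament with Gaussian mutation, which may differ from the scheme used in the paper.

```python
import random
from typing import List

Genome = List[float]


def fitness(genome: Genome, target: Genome) -> float:
    # Hypothetical stand-in for an image score (higher is better).
    # Only function evaluations are used; no gradients are required.
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


def mutate(genome: Genome, rng: random.Random, sigma: float = 0.1) -> Genome:
    # Gaussian mutation of every gene.
    return [g + rng.gauss(0.0, sigma) for g in genome]


def evolve(target: Genome, pop_size: int = 20, steps: int = 2000, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    dim = len(target)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(steps):
        # Binary tournament: the loser is overwritten by a mutated copy of the winner.
        i, j = rng.sample(range(pop_size), 2)
        if fitness(population[i], target) < fitness(population[j], target):
            i, j = j, i  # make i the winner
        population[j] = mutate(population[i], rng)
    return max(population, key=lambda g: fitness(g, target))


target = [0.5, -0.2, 0.8]
best = evolve(target)
print(best, fitness(best, target))
```

Because the best individual always wins its tournaments, the best fitness in the population never decreases. The cost is that every step needs a full evaluation, which is why this approach is slower than following gradients.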

Arnheim 2: A reimplementation of the Arnheim 1 generative architecture in the CLIPDraw framework, which allows its parameters to be optimized using gradients. This is much more efficient than Arnheim 1 but requires differentiating through the image itself.
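"Differentiating through the image" means that the loss is computed on rendered pixels, and its gradient is carried back to the drawing parameters by the chain rule: dL/dθ = Σₖ (dL/dpixelₖ)(dpixelₖ/dθ). The toy below is a minimal sketch of this idea and not the Arnheim 2 or CLIPDraw code. The renderer is a hypothetical 1-D Gaussian blob with two parameters (`amp`, `center`) standing in for a real differentiable rasterizer, and a pixel-space squared error replaces the CLIP-based loss for simplicity.

```python
import math
from typing import List, Tuple

SIGMA = 1.5  # fixed blob width (assumption for this toy)
WIDTH = 12   # number of pixels in the toy 1-D "image"


def render(amp: float, center: float) -> List[float]:
    """Toy differentiable renderer: one Gaussian blob on a 1-D canvas."""
    return [amp * math.exp(-((k - center) ** 2) / (2 * SIGMA ** 2)) for k in range(WIDTH)]


def loss_and_grads(amp: float, center: float, target: List[float]) -> Tuple[float, float, float]:
    """Squared pixel error and its gradients with respect to the drawing parameters."""
    loss, d_amp, d_center = 0.0, 0.0, 0.0
    for k, (pixel, goal) in enumerate(zip(render(amp, center), target)):
        residual = pixel - goal
        loss += residual ** 2
        d_pixel = 2.0 * residual  # dL/dpixel_k
        blob = math.exp(-((k - center) ** 2) / (2 * SIGMA ** 2))
        # Chain rule through the image: dL/dparam += dL/dpixel_k * dpixel_k/dparam.
        d_amp += d_pixel * blob
        d_center += d_pixel * amp * blob * (k - center) / SIGMA ** 2
    return loss, d_amp, d_center


def fit(target: List[float], amp: float = 0.5, center: float = 4.0,
        lr: float = 0.05, steps: int = 2000) -> Tuple[float, float]:
    # Plain gradient descent on the drawing parameters.
    for _ in range(steps):
        _, d_amp, d_center = loss_and_grads(amp, center, target)
        amp -= lr * d_amp
        center -= lr * d_center
    return amp, center


target_image = render(1.0, 5.0)
amp, center = fit(target_image)
print(f"amp={amp:.3f} center={center:.3f}")
```

Each step costs one render plus one backward pass, instead of the many evaluations an evolutionary search needs. The price is that every operation between the parameters and the loss must be differentiable.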

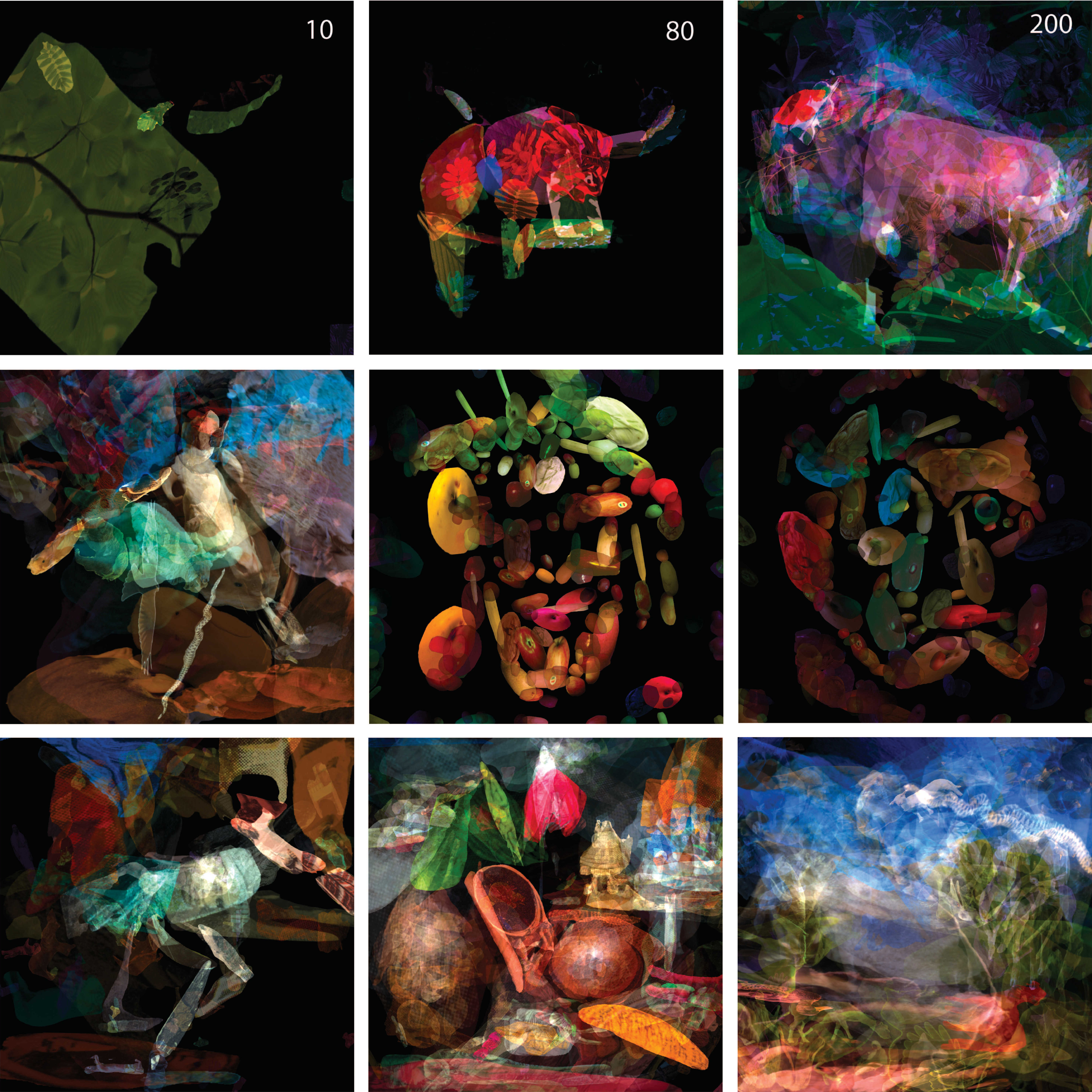

Arnheim 3 (CLIP-CLOP): A spatial transformer-based Arnheim implementation for generating collage images. It combines evolution and training to create collages from image patches that can range from opaque to transparent.
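In a spatial transformer, each patch is placed by an affine transform. Every canvas pixel is mapped back into patch coordinates and sampled bilinearly, and bilinear sampling is what makes the result differentiable with respect to the transform parameters. The following is a minimal forward-pass sketch of that idea, not the CLIP-CLOP implementation. It assumes grayscale patches with a separate alpha grid. The `Transform` fields (translation, uniform scale, rotation) and the per-patch `opacity` are hypothetical parameter names chosen for illustration. Patches are composited in order with the "over" operator, so an opacity of 1.0 gives an opaque patch and smaller values give increasingly transparent ones.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Grid = List[List[float]]


@dataclass
class Transform:
    # Hypothetical per-patch parameters: translation, uniform scale, rotation (radians).
    tx: float
    ty: float
    scale: float = 1.0
    angle: float = 0.0


def bilinear(grid: Grid, u: float, v: float) -> float:
    """Bilinearly sample grid at column u, row v; samples outside the patch count as 0."""
    h, w = len(grid), len(grid[0])
    u0, v0 = math.floor(u), math.floor(v)
    fu, fv = u - u0, v - v0
    total = 0.0
    for du, dv, weight in ((0, 0, (1 - fu) * (1 - fv)), (1, 0, fu * (1 - fv)),
                           (0, 1, (1 - fu) * fv), (1, 1, fu * fv)):
        x, y = u0 + du, v0 + dv
        if 0 <= x < w and 0 <= y < h:
            total += weight * grid[y][x]
    return total


def composite(canvas: Grid, values: Grid, alpha: Grid, t: Transform, opacity: float = 1.0) -> None:
    """Paint one transformed patch onto the canvas in place using the 'over' operator."""
    cos_a, sin_a = math.cos(t.angle), math.sin(t.angle)
    for y in range(len(canvas)):
        for x in range(len(canvas[0])):
            # Inverse affine map: canvas pixel -> patch sampling coordinates.
            dx, dy = x - t.tx, y - t.ty
            u = (cos_a * dx + sin_a * dy) / t.scale
            v = (-sin_a * dx + cos_a * dy) / t.scale
            a = opacity * bilinear(alpha, u, v)
            canvas[y][x] = a * bilinear(values, u, v) + (1.0 - a) * canvas[y][x]


def render_collage(height: int, width: int,
                   patches: List[Tuple[Grid, Grid, Transform, float]]) -> Grid:
    canvas = [[0.0] * width for _ in range(height)]
    for values, alpha, t, opacity in patches:  # later patches land on top
        composite(canvas, values, alpha, t, opacity)
    return canvas


white = [[1.0, 1.0], [1.0, 1.0]]
solid = [[1.0, 1.0], [1.0, 1.0]]
half_transparent = render_collage(4, 4, [(white, solid, Transform(tx=1, ty=1), 0.5)])
opaque = render_collage(4, 4, [(white, solid, Transform(tx=0, ty=0), 1.0)])
for row in half_transparent:
    print(row)
```

In a full system, the transform and opacity values would be the quantities adjusted by training (through the differentiable sampling) and by evolution (for example, by swapping which patches are used). Both steps are omitted here.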

Example patch datasets, with the exception of 'Fruit and veg', are provided under the CC BY 4.0 licence.

The 'Fruit and veg' patches in `collage_patches/fruit.npy` are based on a subset of the Kaggle Fruits 360 dataset and are provided under the CC BY-SA 4.0 licence, as are all example collages using them.

Usage instructions are included in the Colabs, which open and run on the free-to-use Google Colab platform. Colab Pro offers improved performance and longer timeouts.

If you use this code (or any derived code), data, or these models in your work, please cite the relevant accompanying paper: Generative Art Using Neural Visual Grammars and Dual Encoders, or CLIP-CLOP: CLIP-Guided Collage and Photomontage.

@misc{fernando2021genart,
  title={Generative Art Using Neural Visual Grammars and Dual Encoders},
  author={Chrisantha Fernando and S. M. Ali Eslami and Jean-Baptiste Alayrac and Piotr Mirowski and Dylan Banarse and Simon Osindero},
  year={2021},
  eprint={2105.00162},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@inproceedings{mirowski2022clip,
  title={CLIP-CLOP: CLIP-Guided Collage and Photomontage},
  author={Piotr Mirowski and Dylan Banarse and Mateusz Malinowski and Simon Osindero and Chrisantha Fernando},
  booktitle={Proceedings of the Thirteenth International Conference on Computational Creativity},
  year={2022}
}

This is not an official Google product.

CLIPDraw is provided under license, Copyright 2021 Kevin Frans.

Other works may be copyright of the authors of such work.