This is an example CDK stack to deploy The Scalable Webhook stack described by Jeremy Daly here - https://www.jeremydaly.com/serverless-microservice-patterns-for-aws/#scalablewebhook

An advanced version of this pattern was talked about by Heitor Lessa at re:Invent 2019 as Call me, “Maybe” (Webhook)

If you want a walkthrough of the theory, the code and finally a demo of the deployed implementation check out:

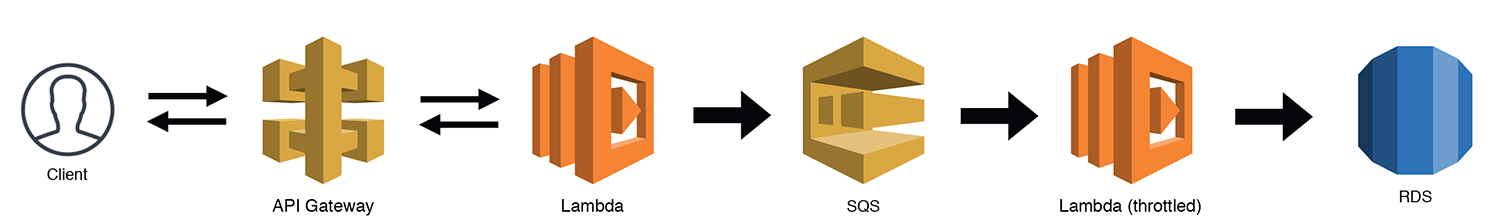

You would use this pattern when a non-serverless resource, such as an RDS database, is in direct contact with a serverless resource, such as a Lambda function. You need to make sure the serverless resource cannot scale up so far that it effectively launches a denial-of-service (DoS) attack on the non-serverless resource.

This is done by putting a queue between them and having a Lambda with a throttled concurrency policy pull items off the queue and communicate with your non-serverless resource at a rate it can handle.
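The buffering idea can be sketched locally without any AWS resources. The following is a minimal simulation, not the deployed stack: a standard-library `queue.Queue` stands in for SQS, a fixed pool of worker threads stands in for the throttled Lambda, and `time.sleep` stands in for a slow write. The function name `run_burst` and all of its parameters are hypothetical.

```python
import queue
import threading
import time


def run_burst(num_requests, max_concurrency, write_seconds=0.005):
    """Queue a burst of requests, then drain it with a fixed number of workers.

    Returns (number of messages stored, peak number of simultaneous writes).
    """
    buffer = queue.Queue()                 # stands in for the SQS queue
    lock = threading.Lock()
    state = {"active": 0, "peak": 0, "stored": 0}

    def write_to_resource(message):
        # Track how many workers are talking to the resource at once.
        with lock:
            state["active"] += 1
            state["peak"] = max(state["peak"], state["active"])
        time.sleep(write_seconds)          # simulate a slow write
        with lock:
            state["stored"] += 1
            state["active"] -= 1

    def worker():
        # Keep pulling messages until the queue is empty.
        while True:
            try:
                message = buffer.get_nowait()
            except queue.Empty:
                return
            write_to_resource(message)

    # The publisher accepts the whole burst immediately.
    for i in range(num_requests):
        buffer.put(f"request-{i}")

    # Only max_concurrency consumers are allowed to drain the queue.
    workers = [threading.Thread(target=worker) for _ in range(max_concurrency)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return state["stored"], state["peak"]


if __name__ == "__main__":
    stored, peak = run_burst(num_requests=20, max_concurrency=2)
    print(f"stored={stored} peak_concurrency={peak}")
```

However large the burst, the number of simultaneous writes never exceeds `max_concurrency`; the remaining requests simply wait in the queue.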

NOTE: For this pattern in the cdk deployable construct I have swapped RDS for DynamoDB.

Why? Because it is significantly cheaper and faster for developers to deploy and maintain. I also don't think we lose the essence of the pattern with this swap, given we still do the pub/sub deduplication via SQS/Lambda and throttle the subscription Lambda. RDS also introduces extra complexity in that it needs to be deployed in a VPC, and I am slightly worried developers would get distracted by the extra RDS logic when the main point is the pattern. A real-life implementation of this pattern could use RDS MySQL, or it could be a call to an on-prem mainframe; the main purpose of the pattern is to throttle requests so that the scale-limited resource is not overloaded.

When people move to the cloud (especially serverless) they tend to think that this means their applications are now infinitely scalable:

For good reason, this just isn't true. If any one customer's resources were infinitely scalable, that customer could consume the whole of AWS, no matter how scalable the platform.

If we weren't using DynamoDB, we would need to know the max connections limit configured for our instance size:

We need to slow down the number of direct requests to our DB somehow. That is where the scalable webhook comes in:

We can use SQS to hold all requests in a queue as soon as they come in. Again, SQS will have limits:

I think 120,000 in-flight messages with an unlimited backlog will be effective enough as a buffer.

Now we have our messages in a queue but we need to subscribe to the queue and insert the records into the DB. To do this we create a throttled lambda where we set the max number of concurrent executions to whatever scale we are happy with. This should be less than the max connections on our DB and should take into account any other Lambdas running in this account.
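As a rough illustration of how that concurrency value might be chosen, here is a small sketch. It assumes each concurrent execution holds exactly one database connection and processes one message at a time. The function names and every number in the example call are hypothetical, not values taken from this stack.

```python
import math


def pick_reserved_concurrency(db_max_connections, db_connections_reserved,
                              account_concurrency_limit, other_lambda_concurrency):
    """Return the largest concurrency that fits both the DB and the account."""
    # Connections left over after other database clients are accounted for.
    db_headroom = db_max_connections - db_connections_reserved
    # Lambda concurrency left over after other functions in the account.
    account_headroom = account_concurrency_limit - other_lambda_concurrency
    limit = min(db_headroom, account_headroom)
    if limit < 1:
        raise ValueError("no headroom left for the subscriber Lambda")
    return limit


def estimate_drain_ms(backlog, concurrency, ms_per_message):
    """Rough time to empty the queue at one message per execution at a time."""
    rounds = math.ceil(backlog / concurrency)
    return rounds * ms_per_message


concurrency = pick_reserved_concurrency(
    db_max_connections=100,          # hypothetical DB connection limit
    db_connections_reserved=20,      # hypothetical: kept free for other clients
    account_concurrency_limit=1000,  # hypothetical account-wide Lambda limit
    other_lambda_concurrency=900,    # hypothetical: needed by other functions
)
print(concurrency)                                  # 80
print(estimate_drain_ms(120_000, concurrency, 50))  # 75000
```

The second function shows the trade-off: a lower concurrency protects the database, but a large backlog then takes longer to drain.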

One final improvement that we could make if implementing this in a production system is to delete the Lambda between the API Gateway and SQS. You can do a direct integration which will reduce costs and latency:

If you want an AWS managed service to help with this scalability problem, you can check out Amazon RDS Proxy, which was in preview at the time of writing.

When you deploy this you will have an API Gateway where any URL is routed through to the publish Lambda. If you modify the URL from / to, say, /hello, this URL will be sent as a message via SQS to a Lambda, which will insert "hello from /hello" into DynamoDB as a message. You can track the progress of your message at every stage through CloudWatch, as logs are printed, and you can view the contents of DynamoDB and SQS in the console. You should also notice that SQS can deliver duplicate messages, but in those instances you don't get two identical records in DynamoDB because we used an id generated in the message as the key.
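The reason duplicates are harmless can be shown with a minimal sketch. This is not the code of the deployed Lambdas: a plain dictionary stands in for the DynamoDB table, and the names `build_message` and `store` are hypothetical. The only details taken from the description above are the generated id used as the key and the "hello from /hello" message text.

```python
import uuid


def build_message(path):
    """Publisher side: wrap the request path in a message with a generated id."""
    return {"id": str(uuid.uuid4()), "message": f"hello from {path}"}


def store(table, message):
    """Subscriber side: write keyed by id, so a redelivery overwrites itself."""
    table[message["id"]] = message["message"]


table = {}                      # in-memory stand-in for the DynamoDB table
msg = build_message("/hello")

# Simulate the queue delivering the same message twice.
for delivery in (msg, msg):
    store(table, delivery)

print(len(table))               # 1
print(table[msg["id"]])         # hello from /hello
```

Because the id is generated once by the publisher and travels with the message, writing the same message twice produces a single record.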

This is a blank project for Python development with CDK.

The cdk.json file tells the CDK Toolkit how to execute your app.

This project is set up like a standard Python project. The initialization process also creates a virtualenv within this project, stored under the .env directory. To create the virtualenv it assumes that there is a python3 (or python for Windows) executable in your path with access to the venv package. If for any reason the automatic creation of the virtualenv fails, you can create the virtualenv manually.

To manually create a virtualenv on MacOS and Linux:

$ python3 -m venv .env

After the init process completes and the virtualenv is created, you can use the following step to activate your virtualenv.

$ source .env/bin/activate

If you are on a Windows platform, you would activate the virtualenv like this:

% .env\Scripts\activate.bat

Once the virtualenv is activated, you can install the required dependencies.

$ pip install -r requirements.txt

At this point you can now synthesize the CloudFormation template for this code.

$ cdk synth

To add additional dependencies, for example other CDK libraries, just add them to your setup.py file and rerun the pip install -r requirements.txt command.

- cdk ls: list all stacks in the app
- cdk synth: emits the synthesized CloudFormation template
- cdk deploy: deploy this stack to your default AWS account/region
- cdk diff: compare deployed stack with current state
- cdk docs: open CDK documentation

Enjoy!