#Cygnus architecture

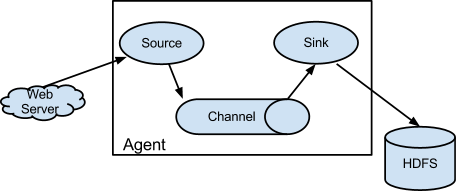

##Flume architecture

As stated in flume.apache.org:

An Event is a unit of data that flows through a Flume agent. The Event flows from Source to Channel to Sink, and is represented by an implementation of the Event interface. An Event carries a payload (byte array) that is accompanied by an optional set of headers (string attributes). A Flume agent is a process (JVM) that hosts the components that allow Events to flow from an external source to an external destination.

A Source consumes Events having a specific format, and those Events are delivered to the Source by an external source like a web server. For example, an AvroSource can be used to receive Avro Events from clients or from other Flume agents in the flow. When a Source receives an Event, it stores it into one or more Channels. The Channel is a passive store that holds the Event until that Event is consumed by a Sink. One type of Channel available in Flume is the FileChannel which uses the local filesystem as its backing store. A Sink is responsible for removing an Event from the Channel and putting it into an external repository like HDFS (in the case of an HDFSEventSink) or forwarding it to the Source at the next hop of the flow. The Source and Sink within the given agent run asynchronously with the Events staged in the Channel.
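The flow described above can be illustrated with a minimal, self-contained sketch. It does not use the Flume API: `FlowSketch`, `SketchEvent` and `run` are hypothetical names, a bounded `BlockingQueue` plays the role of the Channel, and an in-memory list stands in for the external repository. The only point is to show a source and a sink running asynchronously with the events staged in between.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Illustrative only: plain Java, no Flume dependency. */
final class FlowSketch {

    /** A payload (byte array) plus optional string headers. */
    static final class SketchEvent {
        final Map<String, String> headers;
        final byte[] body;

        SketchEvent(Map<String, String> headers, byte[] body) {
            this.headers = headers;
            this.body = body;
        }
    }

    /** Moves every incoming text from a source thread to a sink thread. */
    static List<String> run(List<String> incoming) {
        // The channel is a passive, bounded store between source and sink.
        BlockingQueue<SketchEvent> channel = new ArrayBlockingQueue<>(2);
        // Stand-in for the external repository (HDFS, MySQL, CKAN...).
        List<String> repository = new ArrayList<>();

        Thread source = new Thread(() -> {
            try {
                for (String text : incoming) {
                    byte[] body = text.getBytes(StandardCharsets.UTF_8);
                    // put() blocks while the channel is full.
                    channel.put(new SketchEvent(Map.of("origin", "demo"), body));
                }
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });

        Thread sink = new Thread(() -> {
            try {
                for (int i = 0; i < incoming.size(); i++) {
                    // take() removes the event from the channel, blocking while empty.
                    SketchEvent event = channel.take();
                    repository.add(new String(event.body, StandardCharsets.UTF_8));
                }
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });

        source.start();
        sink.start();
        try {
            source.join();
            sink.join();
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", ex);
        }
        return repository;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("n1", "n2", "n3")));
    }
}
```

With a single source and a single sink the queue preserves arrival order, so the repository ends up holding the events in the order they were received.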

##Basic Cygnus architecture

The simplest way of using Cygnus is to adopt the basic source -> channel -> sink construct described in the Apache Flume documentation. There can be as many basic constructs as persistence elements; within the Cygnus context, these are:

- source -> hdfs-channel -> hdfs-sink

- source -> mysql-channel -> mysql-sink

- source -> ckan-channel -> ckan-sink

Note that a generic source is used instead of a specific hdfs-source, mysql-source or ckan-source. This is because the source is the same for all the persistence elements, i.e. an HttpSource. This native source processes the Orion notifications by means of a specific REST handler: OrionRestHandler.

Regarding the channels, in the current version of Cygnus the recommended type for all of them is MemoryChannel, although nothing prevents a FileChannel or a JDBCChannel from being used.

Finally, the sinks are custom ones, one per persistence element covered by the current version of Cygnus: OrionHDFSSink, OrionMySQLSink and OrionCKANSink.

##Advanced Cygnus architectures

All the advanced architectures arise when trying to improve the performance of Cygnus. As seen above, the basic Cygnus configuration consists of a source writing Flume events into a single channel from which a single sink consumes them. This can be extended to a configuration with multiple sinks running in parallel. There is not a single such configuration but several (more details in doc/operation/performance_tuning_tips.md):

You can simply add more sinks consuming events from the same single channel. This configuration theoretically increases the processing capacity on the sink side, but it usually shows an important drawback, especially if the sinks consume the events very fast: the sinks have to compete for the single channel. Thus, adding more sinks in this way can sometimes make the system slower than a single sink configuration. This configuration is only recommended when the sinks require a lot of time to process a single event, which ensures few collisions when accessing the channel.

The above-mentioned drawback can be solved by configuring one channel per sink, which avoids the competition for the single channel.

However, when multiple channels are used for the same storage, some kind of dispatcher is required to decide which channels will receive a copy of the events. This is the goal of the Flume Channel Selectors, pieces of software that select the set of channels the Flume events will be put into. The default one is the Replicating Channel Selector, i.e. each time a Flume event is generated at the sources, it is replicated in all the channels connected to those sources. There is another selector, the Multiplexing Channel Selector, which puts the events in a channel according to certain matching criteria. Nevertheless:

- We want the Flume events to be replicated for each configured storage. E.g. we want the events to be persisted in both an HDFS and a CKAN storage.

- But within a storage, we want the Flume events to be put into a single channel, not replicated. E.g. among all the channels associated with an HDFS storage, we only want to put the event into one of them.

- And the dispatching criterion is not based on a matching rule but on a round robin-like behaviour. E.g. if we have 3 channels (`ch1`, `ch2`, `ch3`) associated with an HDFS storage, then select first `ch1`, then `ch2`, then `ch3` and then again `ch1`, etc.

Since the available Channel Selectors do not fit these needs, a custom selector has been developed: RoundRobinChannelSelector. This selector extends AbstractChannelSelector, as ReplicatingChannelSelector and MultiplexingChannelSelector do.
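The three requirements listed above can be sketched in plain Java. This is not the RoundRobinChannelSelector source code and it does not use the Flume API: `RoundRobinSketch`, `StorageGroup` and `selectChannels` are hypothetical names, and channels are represented by their names only. Under those assumptions, the sketch picks exactly one channel per storage, rotating within each storage.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative only: plain Java, no Flume dependency. */
final class RoundRobinSketch {

    /** The channels of one storage plus the index of the next channel to use. */
    static final class StorageGroup {
        private final List<String> channels;
        private final AtomicInteger next = new AtomicInteger(0);

        StorageGroup(List<String> channels) {
            if (channels.isEmpty()) {
                throw new IllegalArgumentException("a storage needs at least one channel");
            }
            this.channels = List.copyOf(channels);
        }

        /** Returns the current channel and advances the index, wrapping around. */
        String pick() {
            int index = next.getAndUpdate(n -> (n + 1) % channels.size());
            return channels.get(index);
        }
    }

    private final List<StorageGroup> groups = new ArrayList<>();

    RoundRobinSketch(List<List<String>> channelsPerStorage) {
        for (List<String> channels : channelsPerStorage) {
            groups.add(new StorageGroup(channels));
        }
    }

    /** One channel per storage: replicated across storages, round robin within each. */
    List<String> selectChannels() {
        List<String> selected = new ArrayList<>();
        for (StorageGroup group : groups) {
            selected.add(group.pick());
        }
        return selected;
    }

    public static void main(String[] args) {
        RoundRobinSketch selector = new RoundRobinSketch(List.of(
                List.of("hdfs-ch1", "hdfs-ch2", "hdfs-ch3"),
                List.of("ckan-ch1")));
        // Each call stands for one incoming Flume event.
        for (int i = 0; i < 4; i++) {
            System.out.println(selector.selectChannels());
        }
    }
}
```

Keeping one counter per storage, rather than a single global one, is what lets the event be replicated across storages while still rotating independently inside each of them.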

##High availability Cygnus architecture

High Availability (or HA) is achieved by replicating a whole Cygnus agent, independently of its internal architecture (basic or advanced), in a standard active-passive schema. I.e. when the active Cygnus agent fails, a load balancer redirects all the incoming Orion notifications to the passive one. Both Cygnus agents are able to persist the notified context data using the same set of sinks with identical configuration.

Note that the described configuration has a small drawback: when migrating from the old active Cygnus (now passive) to the new active one (previously passive), the context data already notified to the old active Cygnus is lost, because this information is stored within its channel in the form of Flume events. Independently of the channel type used in the agent (MemoryChannel, FileChannel or JDBCChannel), this data cannot be retrieved by an external agent. This drawback can only be fixed if a custom channel allowing for external data retrieval is implemented.

##Kerberos authentication when using OrionHDFSSink

A Kerberos infrastructure can be used to provide authentication facilities between OrionHDFSSink and HDFS. If enabled, the integration architecture with such a Kerberos infrastructure is depicted below:

##Sequence diagrams

The architecture shown in this document is complemented with some sequence diagrams depicting more details about notification handling and event production, and about event consumption and data persistence in all the different persistence backends.

###Notification handling and event producing (default ReplicatingChannelSelector)

Orion sends a notification to the Cygnus agent, specifically to its HttpSource. This source, through the OrionRestHandler, parses the notification and produces a Flume event which is replicated and put into all the configured channels (one per sink).

###Notification handling and event producing (custom RoundRobinChannelSelector)

The difference from the above is that the event, once produced, is not replicated into all the channels of a given sink type; instead, a round robin-like schema is used among them. The Flume event is still replicated once per configured sink type.

###Event consumption and data persistency at HDFS backend

Regarding the HDFS persistence, Flume events are taken from the channel and an HDFS file name is created (see doc/design/naming_conventions.md for more details). The existence of this file in HDFS is checked; if it exists, the data within the event is appended; if not, the file is created (and some HDFS folders as well), together with its related Hive table.
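The check-then-append-or-create logic above can be sketched as follows. This is only an analogy: it uses the local filesystem through `java.nio.file` instead of HDFS, the names `AppendOrCreateSketch` and `persist` are hypothetical, and the Hive table creation is reduced to a comment.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Illustrative only: local filesystem as a stand-in for HDFS. */
final class AppendOrCreateSketch {

    /** Returns true when the file had to be created, false when data was appended. */
    static boolean persist(Path file, String line) {
        byte[] data = (line + System.lineSeparator()).getBytes(StandardCharsets.UTF_8);
        try {
            if (Files.exists(file)) {
                // The file is already there: append the event data.
                Files.write(file, data, StandardOpenOption.APPEND);
                return false;
            }
            // The file is missing: create the enclosing folders, then the file.
            if (file.getParent() != null) {
                Files.createDirectories(file.getParent());
            }
            Files.write(file, data, StandardOpenOption.CREATE_NEW);
            // A real sink would also create the related Hive table at this point.
            return true;
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
    }
}
```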

###Event consumption and data persistency at CKAN backend

Regarding the CKAN persistence, an organization, a package and a resource name are derived from the taken Flume event data (see doc/design/naming_conventions.md for more details). The organization, package and resource (if working in row-like mode) are created within CKAN if they do not exist, then the data is upserted. Note that Cygnus keeps a cache of already created organizations, packages and resources; whenever Cygnus has to create any of these elements, it is added to the cache.
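The create-if-missing behaviour with a cache can be sketched as below. This is not the OrionCKANSink code: `CkanCacheSketch`, `Backend` and `ensure` are hypothetical names, and `Backend` is a stand-in for the remote CKAN API rather than a real client. The sketch also assumes that elements found to exist remotely are cached too, which the description above does not state explicitly.

```java
import java.util.HashSet;
import java.util.Set;

/** Illustrative only: a local cache in front of a hypothetical remote backend. */
final class CkanCacheSketch {

    /** Hypothetical stand-in for the remote CKAN API; not a real client. */
    interface Backend {
        boolean exists(String name);
        void create(String name);
    }

    private final Backend backend;
    // Names of organizations, packages or resources known to exist.
    private final Set<String> known = new HashSet<>();

    CkanCacheSketch(Backend backend) {
        this.backend = backend;
    }

    /** Ensures the element exists, contacting the backend only on a cache miss. */
    void ensure(String name) {
        if (known.contains(name)) {
            return; // cache hit: no remote call needed
        }
        if (!backend.exists(name)) {
            backend.create(name);
        }
        known.add(name);
    }
}
```

The benefit is that repeated events for the same organization, package or resource trigger remote existence checks only once.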

###Event consumption and data persistency at MySQL backend

Regarding the MySQL persistence, a database and a table name are derived from the taken Flume event data (see doc/design/naming_conventions.md for more details). The database and table are created within MySQL if they do not exist, then the data is inserted.

##Contact

- Fermín Galán Márquez ([email protected]).

- Francisco Romero Bueno ([email protected]).