
Why Do Big Data Platforms Return to SQL?

For now, most of them do not know of a better alternative.

Here we give the necessary background information. First, we need to figure out what big data platforms are dealing with.

Big data platforms focus on meeting the demand for big data storage and analytics. Besides the structured data resulting from business activities, the data to be stored includes a huge amount of unstructured data, such as audio and video, that occupies a lot of space. Sometimes unstructured data accounts for over 80% of all data stored on a big data platform. But storing data is only one part of a big data platform’s goal. The more important part is analyzing and using the data to generate business value.

Big data analytics covers two types of data: structured data and unstructured data.

Structured data is generated in the course of daily business operations and constitutes the core part of an enterprise’s data. Before big data platforms took root, structured data made up the major part, or even the whole, of an enterprise’s information base. Data accumulates as business expands, severely testing the capability of conventional RDB-based processing systems. Big data platforms rose to the challenge, supplying solutions to structured data analysis problems.

Inspired by the popularity of big data platforms, people are trying to extract value from unstructured data that was conventionally considered of little use, such as logs, images, audio and video, which calls for unstructured data analytics. Compared with the core structured data analytics, unstructured data analytics looks more like the icing on the cake. It does not deal with a single kind of data, either, but is always accompanied by structured data handling. Collecting unstructured data often involves extracting related structured data, such as producers, dates, categories, durations and other information for videos. Some unstructured data can be turned into structured data through processing, for example by extracting user IPs, access times and keywords. So-called unstructured data analytics, in fact, mainly focuses on this byproduct structured data.

So, structured data analytics still dominates the business analytics on big data platforms. There are mature technologies for processing structured data, such as the dominant SQL-driven, relational model-based RDBs.

Returning to SQL syntax is the trend in big data analytics. In the Hadoop ecosystem, the early Pig Latin was abandoned while Hive has persisted; on Spark, Spark SQL is used much more widely than Scala (which is easy to start with yet hard to master and, as a compiled language, is inconvenient because it does not support hot swap). Newer big data computing systems also prefer SQL for handling computations. The old language has reaffirmed its position after years of rivalry with various challengers.

There are two reasons behind this renewal.

1. A better choice is not yet available

Relational databases have been popular for so long that SQL has become an old friend of programmers, ingrained in their minds. Regular queries are simple to write in SQL, but the language is inconvenient for complex procedural computations and order-based computations. The alternatives are just as inconvenient: for complex computations and logic they require UDFs and code as complicated as SQL’s. Between two nuisances, it is wise to choose the familiar one.

2. SQL has the endorsement of big data vendors

Big data technologies, in essence, compete on performance, and SQL is the front line of the competition. A contest is meaningful only when the contestants tackle the same problems. The more specialized and complex a computation is, the more factors it involves and the harder it is to assess a big data platform’s ability. SQL, with the internationally recognized TPC-H benchmark, is easy to understand and assess, which encourages vendors to focus their optimization on SQL.

The benefits of a SQL-compatible big data platform are clear. SQL is widely applied and commonly used among programmers, so using it avoids extra training costs. There is a multitude of frontend software products, and a SQL-compatible platform fits easily into the existing ecosystem. Developing in SQL also gives good compatibility with the conventional SQL-based databases that big data platforms want to replace, which makes migration cost-effective.

But while having those benefits of using the SQL-based RDB model, people are also paying the price by enduring its shortcomings.

The biggest problem of using SQL is that it is not the most effective means of achieving the high performance that is most important for big data computing.

SQL lacks the data types and definitions of certain calculations needed to express some high-performance algorithms, so it depends on engineering optimization inside the computing engine. Decades of experience have gone into optimizing conventional commercial databases, yet many scenarios remain hard to optimize, because a problem at the theoretical level cannot be solved at the engineering level. The emerging big data platforms, which lag behind traditional databases in optimization and algorithm implementation, turn to larger clusters to gain performance. SQL is also not good at describing a procedural computation or specifying the optimal execution path, which is crucial for high performance. Steering the execution path requires deliberate intervention through many special modifiers, where procedural syntax would be more straightforward, and this makes high-performance algorithms harder to implement.

Computer hardware capacity was very limited when SQL was in its infancy. The language’s design had to fit the hardware of the time to be practical, which leads to today’s dilemma: the mature SQL finds it hard to fully exploit contemporary hardware, which is characterized by big memory, parallel processing and clustering. A SQL JOIN matches records by key values, but with big memory a JOIN can locate matching records directly through their addresses, without calculating and comparing HASH values, which increases performance considerably.

A SQL data table is unordered. A single-table computation is easy to process in parallel by partition, but for a multi-table join it is almost impossible to divide several tables synchronously and dynamically, so the tables have to be divided in advance. Such static segmentation makes it hard to adjust the number of parallel threads flexibly according to machine workload.

The problem also exists in cluster computing. In theory, SQL does not distinguish dimension tables from fact tables, and it defines a JOIN simply as a filtered Cartesian product. Joining large tables involves HASH Shuffle actions that take up a great deal of network resources. When the cluster contains a large number of nodes, network transmission delay seriously compromises the benefits of cluster computing.
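The address-based JOIN mentioned above can be illustrated with a small sketch. It is written in Python purely as a neutral illustration language and is not how any particular engine implements the idea. The tables, field names (`orders`, `customers`, `cust_id`) and helper functions are all hypothetical. The key lookup is paid once, when each fact row is given a direct reference to its dimension row; later computations only follow that reference.

```python
# Hypothetical in-memory tables; every name and value here is illustrative.
customers = [
    {"id": 1, "name": "Ann", "city": "Oslo"},
    {"id": 2, "name": "Bo", "city": "Rome"},
]
orders = [
    {"order_id": 10, "cust_id": 2, "amount": 50.0},
    {"order_id": 11, "cust_id": 1, "amount": 75.0},
    {"order_id": 12, "cust_id": 2, "amount": 20.0},
]


def link_foreign_key(fact_rows, dim_rows, fk, pk, ref_field):
    """One-off preparation: resolve each foreign key to the dimension record itself."""
    index = {row[pk]: row for row in dim_rows}  # join keys are hashed once, here
    for row in fact_rows:
        row[ref_field] = index.get(row[fk])     # store a reference, not a key
    return fact_rows


def amount_by_city(fact_rows, ref_field):
    """Later joins just follow the stored reference; the join key is not looked up again."""
    totals = {}
    for row in fact_rows:
        dim = row[ref_field]
        if dim is not None:
            totals[dim["city"]] = totals.get(dim["city"], 0.0) + row["amount"]
    return totals


link_foreign_key(orders, customers, "cust_id", "id", "customer")
city_totals = amount_by_city(orders, "customer")
print(city_totals)
```

The preparation step pays off when the same tables stay in memory and are joined repeatedly, which is the big-memory situation the text describes.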

Here is an example. We are trying to get the top 10 from one billion records and the SQL statement to get it done is like this:

    select top 10 x,y from T order by x desc

The statement uses an order by clause. Executed literally, it sorts the whole table, which is extremely slow. An algorithm that avoids the full sort is easy to devise, but SQL cannot express it, so we have to rely on the database optimizer. For a scenario this simple, many commercial databases do optimize away the full-table sort and improve performance. But for a more complicated scenario, such as getting the top 10 from each group, SQL needs a window function and a subquery:

    select * from
      (select T.*, row_number() over (partition by y order by x desc) rn from T) ranked
    where rn <= 10

This time the database optimizer usually cannot recognize the statement’s real intent. It executes the sorting logic exactly as written in the order by clause, and performance drops sharply.
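The algorithm that does not depend on full sorting can be sketched as follows. This is a minimal Python illustration, not the implementation of any particular database: it scans the data once and keeps only the N best values seen so far in a small min-heap, so the cost is roughly proportional to the data size times log N rather than to a full sort. The function name `top_n` and the random test data are assumptions made for the example.

```python
import heapq
import random


def top_n(values, n):
    """Return the n largest values in descending order, using one pass and O(n) memory."""
    if n <= 0:
        return []
    heap = []  # min-heap holding the best n values seen so far
    for v in values:
        if len(heap) < n:
            heapq.heappush(heap, v)
        elif v > heap[0]:  # v beats the smallest member of the current top n
            heapq.heapreplace(heap, v)
    return sorted(heap, reverse=True)  # sorting n items only, not the whole input


random.seed(0)
data = [random.randrange(1_000_000) for _ in range(100_000)]
result = top_n(data, 10)
print(result)
```

Only the final list of N values is ever sorted, which is why the approach scales to inputs far larger than memory as long as they can be read sequentially.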

SQL-based databases not only run slowly but also make programming inefficient. SQL code for complex scenarios is particularly complicated. To count the longest run of consecutively rising days for each stock from a stock record table, for instance, Oracle SQL needs code like this:

    select code, max(ContinuousDays) - 1
    from (
        select code, NoRisingDays, count(*) ContinuousDays
        from (
            select code,
                   sum(RisingFlag) over (partition by code order by day) NoRisingDays
            from (
                select code, day,
                       case when price > lag(price) over (partition by code order by day)
                            then 0 else 1 end RisingFlag
                from stock ) )
        group by code, NoRisingDays )
    group by code

The process is roundabout and difficult to write and understand.

SQL is also inconvenient for procedural programming. Procedural programming carries out a computation, especially an order-based one, step by step.

Examples include:

Calculating the proportion of users whose total login duration within a week is at least one hour, excluding mistaken logins that last less than 10 seconds;

Finding the distribution of the longest runs of consecutive days with credit card purchases during the last three months, taking into account the sales campaign that triples bonus points after 10 consecutive days of purchases;

Finding how many users, within 24 hours, view an item, add it to the cart and buy it in succession, and how many exit halfway through;

And so on.

(These examples are deliberately simplified for easier understanding. The real-world scenarios are far more complicated).
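To show what "step by step" means for the first example, here is a minimal Python sketch. The input layout, a list of `(user_id, duration_seconds)` pairs for one week, and the function name are assumptions made for illustration. The sketch also assumes that the proportion is taken over all users who logged in at least once, which the text does not specify.

```python
from collections import defaultdict


def share_of_active_users(sessions, min_session=10, min_total=3600):
    """sessions: iterable of (user_id, duration_seconds) pairs within one week."""
    totals = defaultdict(int)
    users = set()
    for user_id, seconds in sessions:
        users.add(user_id)
        if seconds >= min_session:      # step 1: drop mistaken logins
            totals[user_id] += seconds  # step 2: accumulate duration per user
    if not users:
        return 0.0
    # step 3: count users whose remaining duration reaches the threshold
    qualified = sum(1 for u in users if totals[u] >= min_total)
    return qualified / len(users)


sample = [
    ("a", 3000), ("a", 700), ("a", 5),  # 3700 s after filtering
    ("b", 3599), ("b", 9),              # 3599 s after filtering
    ("c", 4000),
    ("d", 5),                           # only a mistaken login
]
ratio = share_of_active_users(sample)
print(ratio)
```

Each step is a plain statement that can be inspected on its own, which is the property the text says SQL lacks for this kind of task.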

Implementing these procedural computations in SQL usually requires complicated UDFs, and some of the scenarios above may be beyond what SQL can handle. SQL is therefore not an omnipotent language.

Generally, complex SQL statements execute slowly, which brings us back to performance. Computational performance is closely related to programming efficiency, and many performance problems are caused by low programming efficiency.

It is hard to optimize complex SQL to a satisfactory level. The database optimizer is easily overwhelmed by a multilevel nested query and becomes ineffective. Since the auto-optimization is unreliable for enhancing performance of complex computations, we must try to produce or implement high-performance algorithms in order to solve the problem fundamentally. Another problem for which there is nothing the database optimizer can do is the accumulation of database intermediate tables. SQL stores the intermediate results a procedural computation generates as temporary tables in the database for reuse, but the high IO cost affects performance. The issue cannot be solved unless we re-design the computing process.

To increase performance, in essence, is to reduce programming difficulty. As we cannot increase hardware performance through software optimization, we need to design simpler algorithms and implement them fast and cost-effectively in an effort to achieve higher performance. But if it is hard, or even impossible, to express high-performance algorithms with a language’s syntax and programmers using the language have to resort to higher complexity algorithms, performance enhancement seems to be impossible. SQL performance optimization does not lead to easy programming because it is the problematic SQL syntax that determines how to program a computation. Its inability to describe high performance algorithms makes it impossible to improve performance substantially.

Writing UDFs helps to increase performance in many scenarios. However, UDFs are complex to develop, and hardcoded logic cannot exploit the SQL engine’s optimization ability. Often a computation cannot be completed with UDFs alone and still has to go through SQL data types via the computing platform’s driver, which is another obstacle to implementing high-performance algorithms.

A more radical solution – by this we mean designing convenient, efficient and easy-to-use syntax – is needed to address the issues.

The open-source esProc SPL is the ideal replacement and extension of SQL. It is specifically designed for big data platforms by inheriting SQL merits while abandoning its demerits.

SPL is a specialized open-source data computing engine. It operates independently of the RDB model and breaks new ground, particularly in programming efficiency and computing performance. SPL has many features that make it more suitable for contemporary big data platforms.

SPL will be useless, no matter how great it is, if it cannot be well integrated into the big data platform. Using SPL on a big data platform only requires importing the necessary jars (which are also open source and can be used freely). SPL offers a standard JDBC driver through which users can directly execute an SPL script or invoke a script file.

…

    Class.forName("com.esproc.jdbc.InternalDriver");
    Connection conn = DriverManager.getConnection("jdbc:esproc:local://");

    // Execute the SPL script directly
    Statement st = conn.createStatement();
    // ResultSet rs = st.executeQuery("=100.new(~:baseNum,~*~:square2)");

    // Invoke the SPL script file
    CallableStatement cs = conn.prepareCall("{call SplScript(?, ?)}");
    cs.setObject(1, 3000);
    cs.setObject(2, 5000);
    cs.execute();
    ResultSet result = cs.getResultSet();

...

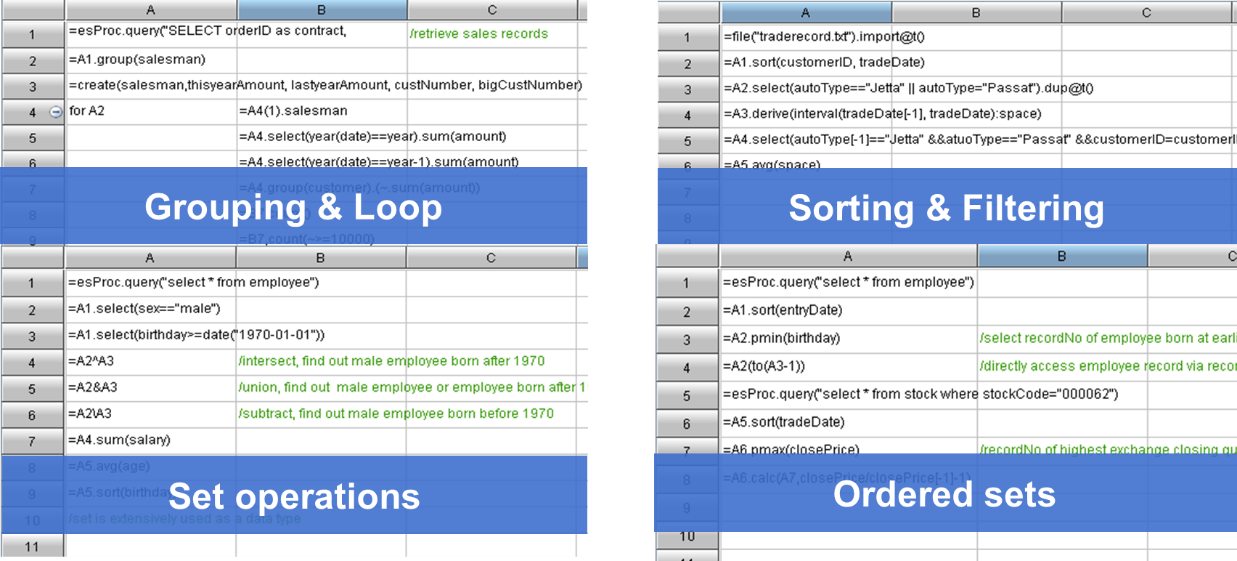

SPL provides its own syntax and rich class libraries for processing structured data, and it supports procedural programming, which makes complex computations easy to implement. For the above task of counting the longest run of consecutively rising days for a stock, the SPL script is:

| | A |
|---|---|
| 1 | =db.query("select * from stock order by day") |
| 2 | =A1.group@i(price < price[-1]).max(~.len())-1 |

It sorts records by trade date, puts records with continuously rising prices in the same group, gets the length of the largest group, and subtracts 1 from it to get the largest number of rising days in a row. The process is smooth and straightforward, and much simpler than SQL’s roundabout way.
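The same stepwise logic can be written in a few lines of any procedural language. The Python sketch below is only an illustration of the idea, with hypothetical function names and a hypothetical `(code, day, price)` row layout. It breaks a run whenever the price fails to rise, which matches the SQL version above, and it adds a per-stock wrapper because the SQL version groups by `code`.

```python
def longest_rising_run(prices):
    """prices: closing prices of one stock, already ordered by trading day."""
    best = current = 0
    for prev, cur in zip(prices, prices[1:]):
        # a day that does not rise ends the current run and starts a new one
        current = current + 1 if cur > prev else 0
        best = max(best, current)
    return best


def longest_rising_run_by_code(rows):
    """rows: (code, day, price) tuples in any order."""
    by_code = {}
    for code, day, price in sorted(rows, key=lambda r: (r[0], r[1])):
        by_code.setdefault(code, []).append(price)
    return {code: longest_rising_run(p) for code, p in by_code.items()}


rows = [
    ("A", 1, 10), ("A", 2, 11), ("A", 3, 12), ("A", 4, 11),
    ("B", 1, 5), ("B", 2, 4),
]
runs = longest_rising_run_by_code(rows)
print(runs)
```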

To list the latest login interval for each user according to the user login record, for instance:

| | A | |
|---|---|---|
| 1 | =ulogin.groups(uid;top(2,-logtime)) | Get the last two login records |
| 2 | =A1.new(uid,#2(1).logtime-#2(2).logtime:interval) | Calculate the time interval |

SPL syntax supports stepwise coding, which makes procedural computations convenient to implement.
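For readers unfamiliar with SPL, the two steps of the login-interval script can be mirrored in Python. This is a rough sketch under assumed inputs, a list of `(uid, login_time)` pairs, and the function name is hypothetical. Step one keeps the two most recent logins per user during a single pass; step two subtracts them. Users with only one login get `None`, a choice made for this sketch.

```python
from datetime import datetime, timedelta


def latest_login_intervals(logins):
    """logins: iterable of (uid, datetime). Returns {uid: timedelta or None}."""
    last_two = {}  # uid -> (latest, second latest)
    for uid, t in logins:
        latest, second = last_two.get(uid, (None, None))
        if latest is None or t > latest:
            latest, second = t, latest  # new most recent login
        elif second is None or t > second:
            second = t                  # new runner-up
        last_two[uid] = (latest, second)
    # step two: interval between the two most recent logins
    return {
        uid: (a - b if b is not None else None)
        for uid, (a, b) in last_two.items()
    }


sample = [
    (1, datetime(2024, 1, 1, 8)),
    (1, datetime(2024, 1, 3, 8)),
    (1, datetime(2024, 1, 2, 8)),
    (2, datetime(2024, 1, 5, 0)),
]
intervals = latest_login_intervals(sample)
expected_for_user_1 = timedelta(days=1)
print(intervals)
```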

SPL supplies a wealth of class libraries for further simplifying various computations.

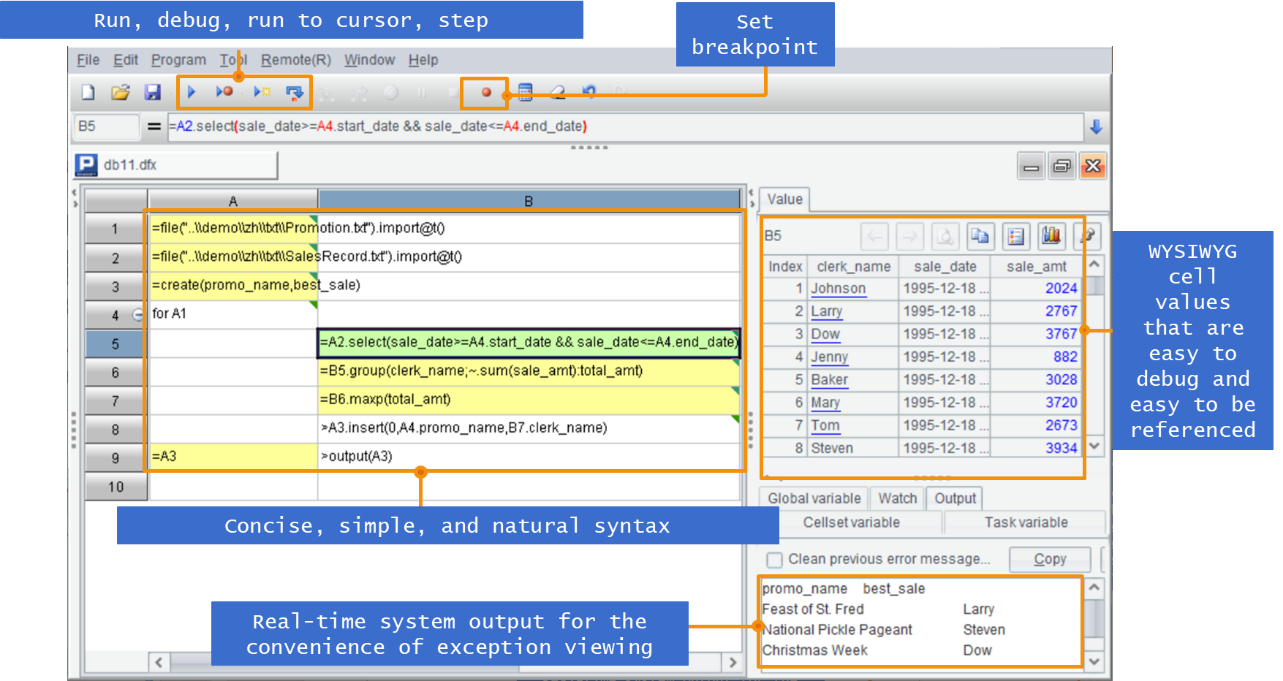

SPL has a simple and easy-to-use IDE to facilitate programming. It offers debugging features such as stepping and setting breakpoints, and a WYSIWYG result viewing panel.

The SPL system is open. It supports accessing and using diverse and heterogeneous data sources directly. By contrast, the existing big data platforms are more closed by demanding “data loading first”.

Data sources supported by SPL (the list is growing longer…)

Based on the diverse source support, SPL is able to implement mixed computations, giving full play to each source’s strengths and creating a more inclusive platform. The ability to use diverse data sources directly makes programming easy and speeds up development.

SPL is executed by an interpreter and so intrinsically supports hot swap, which is a great convenience for Java-based big data platforms. Computing logic written in SPL can be edited, modified, operated and maintained in real time, without restarting the server. Development and debugging thus become fast and efficient.

We mentioned that high performance and high development efficiency are two sides of the same coin. It is easy to express high-performance algorithms in the concise SPL syntax. SPL also provides high-performance algorithmic strategies and data storage mechanisms, making it easy to implement algorithms and storage plans that are hard to achieve in SQL. This again shows that algorithms and storage plans are key factors in software performance.

Take the above-mentioned Top N problem as an example. SPL treats TopN as a type of aggregate operation, transforming a highly complicated sort into a relatively simple aggregation and opening up new application fields.

| | A | |
|---|---|---|
| 1 | =file("data.ctx").create().cursor() | |
| 2 | =A1.groups(;top(10,amount)) | Get the order records whose amounts rank in the top 10 |
| 3 | =A1.groups(area;top(10,amount)) | Get the order records whose amounts rank in the top 10 in each area |

The SPL statements involve neither sorting-related keywords nor a sorting action. The syntax for getting the top N within the full set is basically the same as that for getting it within each group, and both have the same high performance.
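Treating TopN as an aggregation means that a grouped TopN is just the same accumulator kept once per group. The Python sketch below illustrates that idea only; it does not describe SPL internals. The row layout (`area`, `amount`) follows the script above, while the function name and sample rows are assumptions.

```python
import heapq
from collections import defaultdict


def top_n_by_group(rows, n, key, value):
    """One pass over rows; each group keeps only its n largest rows."""
    if n <= 0:
        return {}
    heaps = defaultdict(list)  # group -> min-heap of (value, seq, row)
    for seq, row in enumerate(rows):
        heap = heaps[row[key]]
        item = (row[value], seq, row)  # seq breaks ties, so rows are never compared
        if len(heap) < n:
            heapq.heappush(heap, item)
        elif item[0] > heap[0][0]:
            heapq.heapreplace(heap, item)
    # each group sorts at most n items for presentation
    return {g: [r for _, _, r in sorted(h, reverse=True)] for g, h in heaps.items()}


orders = [
    {"area": "East", "amount": 5},
    {"area": "West", "amount": 9},
    {"area": "East", "amount": 7},
    {"area": "East", "amount": 1},
    {"area": "West", "amount": 3},
]
top2 = top_n_by_group(orders, 2, "area", "amount")
print(top2)
```

Because each group's state is a small heap, partial results computed on separate data segments could be merged in the same way, which is what makes the aggregate view attractive for parallel execution.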

There is a list of examples of SPL-based high-performance computing scenarios:

Open-source SPL Speeds up Query on Detail Table of Group Insurance by 2000+ Times

Open-source SPL improves bank’s self-service analysis from 5-concurrency to 100-concurrency

Open-source SPL turns pre-association of query on bank mobile account into real-time association

Open-source SPL speeds up batch operating of bank loan agreements by 10+ times

Open-source SPL optimizes bank pre-calculated fixed query to real-time flexible query

Open-source SPL optimizes batch operating of insurance company from 2 hours to 17 minutes

Let me add a few more words in conclusion. SPL does not use the RDB model. It is based on a radically new theoretical system, so its innovation is at the theoretical level. If you want to learn more about SPL, "SPL: a database language featuring easy writing and fast running" gives a detailed explanation.

SPL Resource: SPL Official Website | SPL Blog | Download esProc SPL | SPL Source Code