This Master Test Plan describes how the project "Unveiled" will be tested during development and before it is shipped. It should provide all team members with a common basis on how testing in this project is organized and executed. This document gathers all information necessary to plan and control the test effort for the project "Unveiled".

This document addresses the following types and levels of testing:

- Unit Tests

- Functional Tests

- Performance Tests

- Installation Tests

There is no need to test the web interface with unit or functional tests, because it does not contain any testable logic. In addition, the timescale of this project is too short to justify a high testing effort for it; it is more important that the other components of the "Unveiled" application are covered by tests. See section 3 for the targeted test items.

This section lists definitions of acronyms and abbreviations to help the reader understand them.

- UC: Use Case

- SAD: Software Architecture Document

- OUCD: Overall Use Case Diagram

| Title | Date |
|---|---|
| Unveiled Website | 09.06.2016 |
| Unveiled Blog | 09.06.2016 |
| Overall Use Case Diagram (OUCD) | 16.10.2015 |
| UC1: Capture and stream video | 23.10.2015 |
| UC2: Configure settings | 23.10.2015 |
| UC3: Maintain user profile | 22.11.2015 |
| UC4: Switch user | 21.11.2015 |
| UC5: Register | 28.11.2015 |
| UC6: Browse own media | 28.11.2015 |
| UC7: Manage Users | 19.12.2015 |
| UC8: Delete own media | 03.06.2016 |
| UC9: Download own media | 03.06.2016 |
| UC10: View own media | 03.06.2016 |
| UC11: Approve registration | 03.06.2016 |
| UC12: Upload file | 03.06.2016 |
| Software Architecture Document | 15.11.2015 |
| Software Requirement Specification (SRS) | 14.11.2015 |
| Android App Installation Guide | 09.06.2016 |
| Jira Board | 09.06.2016 |
| Source code (GitHub) | 09.06.2016 |
| Function point calculation and use case estimation | 09.06.2016 |
| Deployment Diagram | 28.11.2015 |
| Class Diagram Backend PHP Stack | 14.11.2015 |
| Continuous Integration Process | 11.06.2016 |
| Project Presentation | 09.06.2016 |

The following chapters will describe which parts of the "Unveiled" application will be covered by tests, what the common test approach is, which tools should be used and how the test goals are defined.

Testing gives all project members feedback on their work. It ensures that use cases and functionality are implemented correctly and reveals whether changes have negatively affected the behaviour of the application.

The overall goal is to find broken functionality and logical bugs, so that they can be fixed early enough.

Our test motivation is to reduce quality and technical risks. This helps us to successfully realise our use cases.

The following list contains those test items that have been identified as targets for testing.

- Unveiled Android Application: Android Application running on Nexus 6 virtual device, Android 5 Lollipop, Android SDK 18, libstreaming 3.0

- imflux: The streaming library is tested with Java 7 (openjdk7, oraclejdk7), Maven model version 4.0.0 and the following dependencies: netty-all 4.0.36, junit 4.12, slf4j-api 1.7.12 and log4j-slf4j-impl 2.4.1.

- Unveiled-Server Java-Stack: The Java-Stack of the Unveiled Backend is also tested with Java 7, Maven model version 4.0.0 and the following dependencies: imflux 0.1.0, javax.servlet-api 3.1.0, javax.ejb-api 3.2, junit 4.12 and mysql-connector-java 5.1.38.

- Unveiled-Server PHP-Stack: The PHP-Stack of the Unveiled Backend is tested with PHP 5.6.5, Composer 1.2 and Postman API calls.

(n/a)

(n/a)

(n/a)

| Object | Description |
|---|---|
| Technique Objective: | Setting up and managing RTP, RTCP and RTSP sessions with the streaming library imflux. |
| Technique: | Automated tests |
| Oracles: | - |
| Required Tools: | The technique requires the following tools: Maven, JUnit, TravisCI |
| Success Criteria: | The technique supports the testing of: setting up RTP/RTCP sessions; setting up RTSP sessions; automated RTCP handling; transmitting data via RTP/RTCP; sending messages via RTSP |
| Special Considerations: | - |
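The imflux tests themselves are written in Java with JUnit and their API is not reproduced here. As a language-neutral illustration of the kind of check behind "transmitting data via RTP/RTCP", the following Python sketch (not imflux code; the `RtpHeader` class is hypothetical) packs and unpacks the 12-byte RTP fixed header defined in RFC 3550 and verifies that a round trip preserves every field. It assumes no padding, no header extension and no CSRC entries.

```python
import struct
from dataclasses import dataclass


# Hypothetical helper for illustration only; it is not part of imflux.
@dataclass(frozen=True)
class RtpHeader:
    payload_type: int    # 7 bits
    sequence: int        # 16 bits
    timestamp: int       # 32 bits
    ssrc: int            # 32 bits, synchronization source identifier
    marker: bool = False
    version: int = 2     # RTP version defined by RFC 3550

    def pack(self) -> bytes:
        # First byte: V (2 bits), then P=0, X=0, CC=0 (no CSRC list).
        first = (self.version & 0x3) << 6
        # Second byte: M (1 bit) followed by PT (7 bits).
        second = (int(self.marker) << 7) | (self.payload_type & 0x7F)
        return struct.pack("!BBHII", first, second,
                           self.sequence & 0xFFFF,
                           self.timestamp & 0xFFFFFFFF,
                           self.ssrc & 0xFFFFFFFF)

    @classmethod
    def unpack(cls, data: bytes) -> "RtpHeader":
        if len(data) < 12:
            raise ValueError("RTP fixed header needs at least 12 bytes")
        first, second, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
        return cls(payload_type=second & 0x7F, sequence=seq, timestamp=ts,
                   ssrc=ssrc, marker=bool(second >> 7), version=first >> 6)


def test_header_round_trip() -> None:
    header = RtpHeader(payload_type=96, sequence=1, timestamp=3000,
                       ssrc=0x1234ABCD, marker=True)
    packed = header.pack()
    assert len(packed) == 12
    assert RtpHeader.unpack(packed) == header


test_header_round_trip()
```

In the real test suite the same idea would be expressed as a JUnit test method against imflux's own packet classes.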

| Object | Description |
|---|---|
| Technique Objective: | Test all API functionality of the PHP-Stack. |
| Technique: | Run a predefined Postman collection against the API under test. The test is successful if all calls succeed. |
| Oracles: | - |
| Required Tools: | The technique requires the following tools: Postman, Internet connection |
| Success Criteria: | The technique supports the testing of: login; register; logout; CRUD operations on users; CRUD operations on media; approving registrations |
| Special Considerations: | - |
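The pass rule above (the run succeeds only if every call succeeds) can also be mirrored outside Postman, for example as a quick smoke check. The following is a minimal Python sketch under assumptions: a call counts as successful when it returns its expected HTTP status code, and the check names and any URLs passed in are placeholders, not the actual routes of the PHP-Stack.

```python
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ApiCheck:
    name: str                    # placeholder label, e.g. "login"
    call: Callable[[], int]      # performs one request and returns its HTTP status code
    expected_status: int = 200


def http_status(url: str, method: str = "GET") -> int:
    """Send a request without a body and return the HTTP status code."""
    request = urllib.request.Request(url, method=method)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; their status code is still the result.
        return err.code


def run_collection(checks: List[ApiCheck]) -> List[str]:
    """Run all checks in order and return the names of those that failed."""
    failed = []
    for check in checks:
        try:
            status = check.call()
        except Exception:
            # Connection errors or timeouts count as a failed call.
            failed.append(check.name)
            continue
        if status != check.expected_status:
            failed.append(check.name)
    return failed
```

A check against a server could look like `ApiCheck("media", lambda: http_status("https://example.org/api/media"))` (placeholder URL); the run passes when `run_collection(...)` returns an empty list. Postman remains the documented tool for this technique.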

(n/a)

(n/a)

| Object | Description |
|---|---|
| Technique Objective: | Test the streaming capabilities of the Java-Backend-Stack. |
| Technique: | Use a test script to upload large files and measure the time the server needs to process each request. Also consider running these test scripts from different networks, locations and Internet connections. A network connection timeout must never occur. As a second step, run the test script concurrently to test the server's behaviour under heavy load. |
| Oracles: | - |
| Required Tools: | The technique requires the following tools: server access; Internet connection; a test script for streaming large files |
| Success Criteria: | The technique supports the testing of: a single large upload stream; multiple upload streams processed concurrently |
| Special Considerations: | Use multiple physical clients, each running test scripts, to place load on the server; make sure your Internet bandwidth is sufficient for such a test |
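A minimal Python sketch of such a test script, assuming the actual upload is wrapped in a function you supply (`upload` below is a placeholder; the real streaming endpoint and protocol of the Java-Backend-Stack are not shown). It times one upload per simulated client and runs the clients concurrently; any exception raised by an upload, such as a connection timeout, propagates and fails the run.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Upload = Callable[[bytes], None]  # placeholder type for the real upload call


def timed_upload(upload: Upload, payload: bytes) -> float:
    """Upload the payload once and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    upload(payload)
    return time.perf_counter() - start


def load_test(upload: Upload, payload: bytes, clients: int) -> List[float]:
    """Run `clients` uploads concurrently and return each upload's duration.

    clients=1 corresponds to the single large upload stream; larger values
    correspond to multiple upload streams processed concurrently.
    """
    with ThreadPoolExecutor(max_workers=clients) as pool:
        futures = [pool.submit(timed_upload, upload, payload) for _ in range(clients)]
        # result() re-raises any exception from an upload, e.g. a timeout.
        return [future.result() for future in futures]
```

Threads on one machine share that machine's connection, which is why the plan recommends running the script on multiple physical clients for the heavy-load step.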

(n/a)

(n/a)

(n/a)

(n/a)

(n/a)

(n/a)

| Object | Description |
|---|---|
| Technique Objective: | Test the installation of the Unveiled Android Application on different devices. |
| Technique: | Install the Android application on an Android device. Afterwards, test the application by opening it and going through all use cases. Consider the following: use devices with different Android versions from different manufacturers; do a new install (the app has never been installed on this device before); do a reinstall (the same or an older version was installed before) |
| Oracles: | - |
| Required Tools: | Android devices from different manufacturers and with different Android versions |
| Success Criteria: | The app installs successfully and works correctly afterwards. |
| Special Considerations: | - |
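The combinations listed under Technique can be enumerated mechanically so that no device/scenario pair is forgotten. A small Python sketch; the device entries are placeholders, not the project's actual test devices, and splitting "reinstall" into a same-version and an older-version case is our reading of the technique description.

```python
from itertools import product
from typing import List, NamedTuple, Tuple


class Device(NamedTuple):
    manufacturer: str
    android_version: str


# Install scenarios derived from the technique description above.
SCENARIOS = (
    "new install (never installed before)",
    "reinstall (same version installed before)",
    "reinstall (older version installed before)",
)


def install_matrix(devices: List[Device]) -> List[Tuple[Device, str]]:
    """Return every (device, scenario) pair that needs an installation test."""
    return list(product(devices, SCENARIOS))


if __name__ == "__main__":
    devices = [Device("Manufacturer A", "5.0"), Device("Manufacturer B", "6.0")]  # placeholders
    for device, scenario in install_matrix(devices):
        print(f"{device.manufacturer} / Android {device.android_version}: {scenario}")
```

Each printed line corresponds to one installation followed by a walk through all use cases.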

Execution of this Test Plan can begin once the build environment has been set up, development has started and all use cases have been properly defined.

This Test Plan no longer applies once the development of the "Unveiled" application has stopped, the project has finished, or the plan is replaced by another Test Plan.

(n/a)

The test evaluation summaries are generated by our automated test tools and report one of the following results:

- passed: All tests have passed and were successful.

- failed: At least one test was not successful.

- error: An error occurred during the execution of the tests.
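How a single summary result could be derived from individual test outcomes, as a minimal Python sketch. The precedence (an execution error outranks a failed test) and the treatment of an empty run as an error are assumptions, not rules stated by the tools listed in this plan.

```python
from typing import Iterable

PASSED, FAILED, ERROR = "passed", "failed", "error"


def summarize(outcomes: Iterable[str]) -> str:
    """Collapse individual test outcomes into one summary result."""
    results = list(outcomes)
    unknown = set(results) - {PASSED, FAILED, ERROR}
    if unknown:
        raise ValueError(f"unknown outcomes: {sorted(unknown)}")
    if not results or ERROR in results:
        # Assumption: no results at all means the run itself went wrong.
        return ERROR
    if FAILED in results:
        return FAILED
    return PASSED
```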

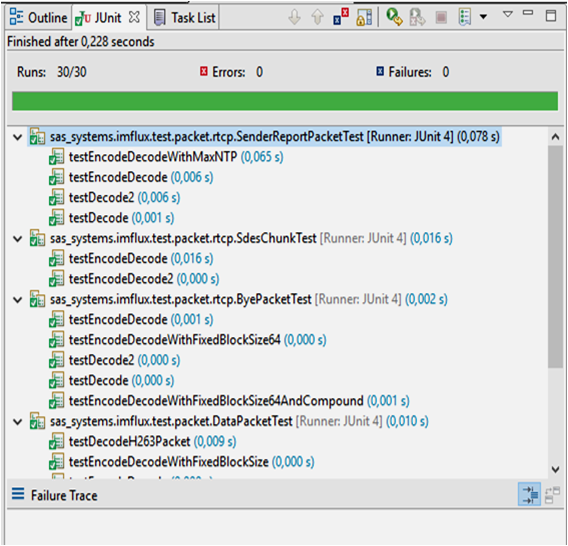

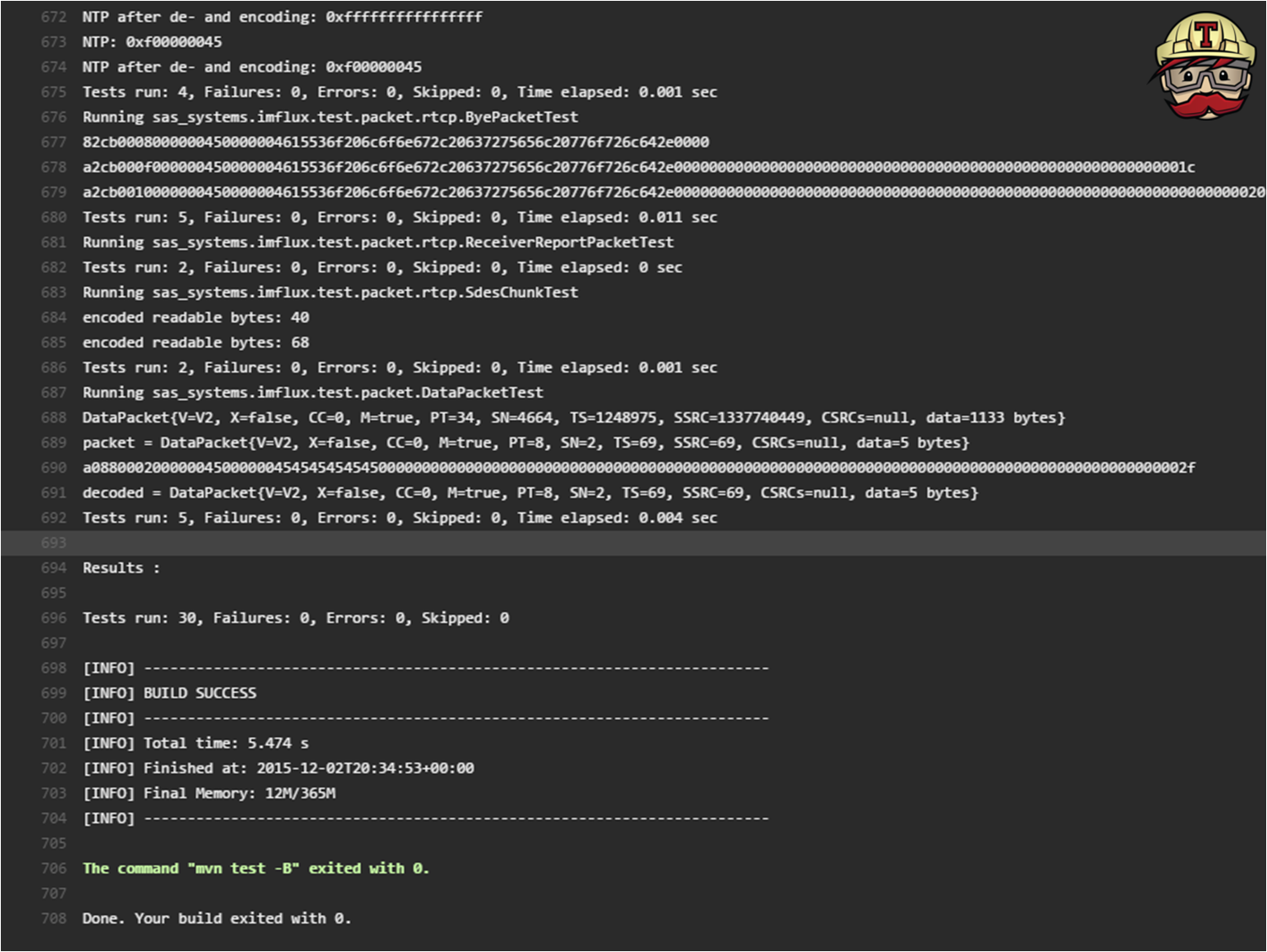

The following pictures show some example test reports from automated unit tests:

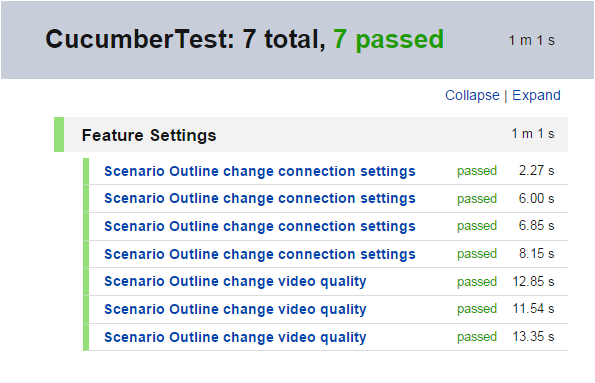

And this picture shows the result of an automated UI test:

Test coverage is reported to coveralls.io:

and sonarqube:

You can find some up-to-date, high-level quality metrics on sonarqube as well. Please consult these links:

We also use low-level metrics to ensure good code quality. The following screenshot shows an older (not up-to-date) report from the tool Metrics 1.3.6:

Our build process automatically creates a Jira ticket if a build fails. This notifies all team members, who can follow the link to the failed build to check why it failed. The following picture shows what an automatically created Jira ticket looks like.

Users and customers can report incidents and change requests as issues on GitHub. New Jira tickets are created from these issues to add new backlog items to the team members' task lists.

(n/a)

tbd

We mostly use JUnit as the testing framework for our Java-Backend-Stack. All Java application parts are managed with Maven, so we can easily run unit tests as well as functional tests within our IDE with a single Maven command. Our build process supports testing as well: every push to the master branch and every pull request in our application's GitHub repositories triggers a new build. We use TravisCI for continuous integration. Travis builds the application and afterwards runs the tests. All test results as well as the code coverage are then published to coveralls.io and sonarqube. If the build and all tests were successful, the Unveiled-Server application is deployed to the server. If a build fails, the originator of the build is notified via email so that the issues are addressed immediately, and a new Jira ticket is created. The automatic deployment process is also described in detail in this blog entry.
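The post-build behaviour described above can be summarised as a small decision function. This Python sketch only models the order of the steps; the action names are hypothetical labels, not TravisCI, Coveralls or Jira settings. It assumes that failing tests fail the build, which is how Travis treats a test command that exits with a non-zero status.

```python
from typing import List


def post_build_actions(build_ok: bool, tests_ok: bool) -> List[str]:
    """Return the steps that follow a CI build, in order (labels are hypothetical)."""
    if not build_ok:
        # Assumption: the application did not build, so no tests ran and nothing is published.
        return ["notify_originator_by_email", "create_jira_ticket"]
    actions = ["publish_results_and_coverage"]  # coveralls.io and sonarqube
    if tests_ok:
        actions.append("deploy_unveiled_server")
    else:
        actions += ["notify_originator_by_email", "create_jira_ticket"]
    return actions
```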

The following table sets forth the system resources for the test effort presented in this Test Plan.

| Resource | Quantity | Name and Type |
|---|---|---|
| Database Server (Network or Subnet; Server Name; Database Name) | 1 | MySQL database for testing (sas.systemgrid.de; Server01; unveiled) |
| Client Test PCs (installed software) | 3 | Java JDK 7/8, Tomcat7 with openEJB, PHP 5, Maven, Git, appropriate IDE |
| Test Repository (Network or Subnet; Server Name) | 1 | Server for testing (sas.systemgrid.de/unveiled/php/; Server01) |
| Test Server Environment | 3 | TravisCI container-based test environments configured for the corresponding tests |

The following base software elements are required in the test environment for this Test Plan.

| Software Element Name | Version | Type and Other Notes |
|---|---|---|
| Ubuntu | 14.04 | Operating system |
| Apache Maven | | Dependency management and build tool |
| JRE | 1.7.x | Runtime |
| PHP | | Runtime |
| Windows | 7, 8, 10 | Operating system |
| Google Chrome | | Internet browser |
| Mozilla Firefox | | Internet browser |
| Android SDK | | SDK, runtime, virtual device |

The following tools will be employed to support the test process for this Test Plan.

| Tool Category or Type | Tool Brand Name | Vendor or In-house | Version |
|---|---|---|---|
| Test Coverage Monitor | Coveralls.io | Lemur Heavy Industries | |
| Code Coverage | JaCoCo | EclEmma | 0.7.6 |
| Code Climate, Metrics | Sonarqube | SonarSource S.A. | 4.5.7 |
| UI Test tool | Cucumber for Android | Cucumber Limited | |

The following test environment configurations need to be provided and supported for this project.

| Configuration Name | Description | Implemented in Physical Configuration |
|---|---|---|
| Average user configuration | Number of users accessing the application at the same time | 100 users |
| Network installation and bandwidth | Speed and capacity of the Internet connection provided by the server host | 50 Mbit/s down, 10 Mbit/s up |
| Minimal configuration supported | Performance of the application server and database server | Application and database server on the same machine: CPU: dual-core 2.5 GHz; RAM: 8 GB; HDD: 100 GB; OS: Ubuntu 14.04 |
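A back-of-the-envelope calculation helps to judge what the performance tests can expect from this configuration. The Python sketch below assumes that the bandwidth is shared evenly among all 100 concurrent users and ignores protocol overhead; uploads to the server use its downstream (50 Mbit/s), downloads use its upstream (10 Mbit/s).

```python
def per_user_rate(total_mbit_s: float, users: int) -> float:
    """Bandwidth per user in Mbit/s if the link is shared evenly."""
    if users <= 0:
        raise ValueError("users must be positive")
    return total_mbit_s / users


def transfer_seconds(size_megabytes: float, rate_mbit_s: float) -> float:
    """Time to transfer a file of the given size (1 MB = 8 Mbit) at a given rate."""
    return size_megabytes * 8 / rate_mbit_s


upload_rate = per_user_rate(50, 100)    # server downstream shared by 100 uploading users
download_rate = per_user_rate(10, 100)  # server upstream shared by 100 downloading users
```

Under these assumptions each user gets 0.5 Mbit/s for uploads and 0.1 Mbit/s for downloads, so a hypothetical 100 MB upload would take `transfer_seconds(100, 0.5)`, i.e. 1600 s (roughly 27 minutes), at full load.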

This table shows the staffing assumptions for the test effort.

| Role | Minimum Resources | Specific Responsibilities or Comments |
|---|---|---|
| Test Manager | Sebastian Schmidl (1) | Provides management oversight. Responsibilities include: planning and logistics; agree mission; identify motivators; acquire appropriate resources; present management reporting; advocate the interests of test; evaluate effectiveness of test effort |
| Test Analyst | Fabian Schäfer (1) | Identifies and defines the specific tests to be conducted. Responsibilities include: identify test ideas; define test details; determine test results; document change requests; evaluate product quality |
| Test Designer | Sebastian Adams (1) | Defines the technical approach to the implementation of the test effort. Responsibilities include: define test approach; define test automation architecture; verify test techniques; define testability elements; structure test implementation |
| Tester | all team members (3) | Implements and executes the tests. Responsibilities include: implement tests and test suites; execute test suites; log results; analyze and recover from test failures; document incidents |
| Test System Administrator | Sebastian Schmidl (1) | Ensures test environment and assets are managed and maintained. Responsibilities include: administer test management system; install and support access to, and recovery of, test environment configurations and test labs |
| Database Administrator, Database Manager | Sebastian Adams (1) | Ensures test data (database) environment and assets are managed and maintained. Responsibilities include: support the administration of test data and test beds (database) |
| Implementer | Sebastian Schmidl, Sebastian Adams (2) | Implements and unit tests the test classes and test packages. Responsibilities include: create the test components required to support testability requirements as defined by the designer |

(n/a)

Milestones to be achieved by 30.05.2016:

- at least 50% test coverage for the streaming library imflux, because it could be reused in other projects

- at least 20% test coverage for Unveiled Java-Backend-Stack

- successfully completed installation tests for at least 3 different Android device types
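The coverage milestones can be checked automatically. The Python sketch below assumes that line coverage is the relevant metric and reads it from a JaCoCo CSV report, which lists `LINE_MISSED` and `LINE_COVERED` per class; the project keys in `THRESHOLDS` are hypothetical names for imflux and the Java-Backend-Stack.

```python
import csv
import io

# Milestone thresholds from the list above; keys are hypothetical project names.
THRESHOLDS = {"imflux": 0.50, "unveiled-server-java": 0.20}


def line_coverage(jacoco_csv: str) -> float:
    """Aggregate line coverage (0.0 to 1.0) over all classes in a JaCoCo CSV report."""
    missed = covered = 0
    for row in csv.DictReader(io.StringIO(jacoco_csv)):
        missed += int(row["LINE_MISSED"])
        covered += int(row["LINE_COVERED"])
    total = missed + covered
    return covered / total if total else 0.0


def meets_milestone(project: str, jacoco_csv: str) -> bool:
    """True if the report's line coverage reaches the project's milestone."""
    return line_coverage(jacoco_csv) >= THRESHOLDS[project]


def check_report_file(project: str, path: str) -> bool:
    """Convenience wrapper that reads the CSV report from disk."""
    with open(path, newline="") as report:
        return meets_milestone(project, report.read())
```

Coveralls.io and sonarqube already display coverage; a check like this would only be useful for failing a build automatically when a milestone is missed.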

(n/a)

(n/a)