diff --git a/.gitignore b/.gitignore

index 6ad1c578..b6706876 100644

--- a/.gitignore

+++ b/.gitignore

@@ -63,6 +63,7 @@ instance/

# Sphinx documentation

docs/_build/

+docs/output.txt

# PyBuilder

target/

@@ -108,3 +109,17 @@ info/

# Cython generated C code

*.c

+*.cpp

+

+

+# generated docs

+docs/source/examples/

+docs/source/generated/

+

+

+*.DS_Store

+

+*.zip

+

+*.vtu

+*.vtr

diff --git a/.travis.yml b/.travis.yml

index 730a6def..125eed4b 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -1,137 +1,100 @@

language: python

+python: 3.8

-matrix:

- include:

- - name: "Linux py27"

- sudo: required

- language: python

- python: 2.7

- services: docker

- env:

- - PIP=pip

- - CIBW_BUILD="cp27-*"

- - COVER="off"

+# setuptools-scm needs all tags in order to obtain a proper version

+git:

+ depth: false

+

+env:

+ global:

+ - TWINE_USERNAME=geostatframework

+ - CIBW_BEFORE_BUILD="pip install numpy==1.17.3 cython==0.29.14 setuptools"

+ - CIBW_TEST_REQUIRES=pytest

+ - CIBW_TEST_COMMAND="pytest -v {project}/tests"

- - name: "Linux py34"

- sudo: required

- language: python

- python: 3.4

+before_install:

+ - |

+ if [[ "$TRAVIS_OS_NAME" = windows ]]; then

+ choco install python --version 3.8.0

+ export PATH="/c/Python38:/c/Python38/Scripts:$PATH"

+ # make sure it's on PATH as 'python3'

+ ln -s /c/Python38/python.exe /c/Python38/python3.exe

+ fi

+

+script:

+ - python3 -m pip install cibuildwheel==1.3.0

+ - python3 -m cibuildwheel --output-dir dist

+

+after_success:

+ - |

+ if [[ $TRAVIS_PULL_REQUEST == 'false' ]]; then

+ python3 -m pip install twine

+ python3 -m twine upload --verbose --skip-existing --repository-url https://test.pypi.org/legacy/ dist/*

+ if [[ $TRAVIS_TAG ]]; then python3 -m twine upload --verbose --skip-existing dist/*; fi

+ fi

+

+notifications:

+ email:

+ recipients:

+ - info@geostat-framework.org

+

+jobs:

+ include:

+ - name: "sdist and coverage"

services: docker

- env:

- - PIP=pip

- - CIBW_BUILD="cp34-*"

- - COVER="off"

+ env: OMP_NUM_THREADS=4

+ script:

+ - python3 -m pip install -U setuptools pytest-cov coveralls

+ - python3 -m pip install -U numpy==1.17.3 cython==0.29.14

+ - python3 -m pip install -r requirements.txt

+ - python3 setup.py sdist -d dist

+ - python3 setup.py --openmp build_ext --inplace

+ - python3 -m pytest --cov gstools --cov-report term-missing -v tests/

+ - python3 -m coveralls

- name: "Linux py35"

- sudo: required

- language: python

- python: 3.5

services: docker

- env:

- - PIP=pip

- - CIBW_BUILD="cp35-*"

- - COVER="off"

-

- # py36 for coverage and sdist

+ env: CIBW_BUILD="cp35-*"

- name: "Linux py36"

- sudo: required

- language: python

- python: 3.6

services: docker

- env:

- - PIP=pip

- - CIBW_BUILD="cp36-*"

- - COVER="on"

-

- # https://github.com/travis-ci/travis-ci/issues/9815

+ env: CIBW_BUILD="cp36-*"

- name: "Linux py37"

- sudo: required

- language: python

- python: 3.7

- dist: xenial

services: docker

- env:

- - PIP=pip

- - CIBW_BUILD="cp37-*"

- - COVER="off"

-

- - name: "MacOS py27"

- os: osx

- language: generic

- env:

- - PIP=pip2

- - CIBW_BUILD="cp27-*"

- - COVER="off"

-

- - name: "MacOS py34"

- os: osx

- language: generic

- env:

- - PIP=pip2

- - CIBW_BUILD="cp34-*"

- - COVER="off"

+ env: CIBW_BUILD="cp37-*"

+ - name: "Linux py38"

+ services: docker

+ env: CIBW_BUILD="cp38-*"

- name: "MacOS py35"

os: osx

- language: generic

- env:

- - PIP=pip2

- - CIBW_BUILD="cp35-*"

- - COVER="off"

-

+ language: shell

+ env: CIBW_BUILD="cp35-*"

- name: "MacOS py36"

os: osx

- language: generic

- env:

- - PIP=pip2

- - CIBW_BUILD="cp36-*"

- - COVER="off"

-

+ language: shell

+ env: CIBW_BUILD="cp36-*"

- name: "MacOS py37"

os: osx

- language: generic

- env:

- - PIP=pip2

- - CIBW_BUILD="cp37-*"

- - COVER="off"

-

-env:

- global:

- - TWINE_USERNAME=geostatframework

- - CIBW_BEFORE_BUILD="pip install numpy==1.14.5 cython==0.28.3"

- - CIBW_TEST_REQUIRES=pytest-cov

- # inplace cython build and test run

- - CIBW_TEST_COMMAND="cd {project} && python setup.py build_ext --inplace && py.test --cov gstools --cov-report term-missing -v {project}/tests"

-

-script:

- # create wheels

- - $PIP install cibuildwheel==0.11.1

- - cibuildwheel --output-dir wheelhouse

- # create source dist for pypi and create coverage (only once for linux py3.6)

- - |

- if [[ $COVER == "on" ]]; then

- rm -rf dist

- python -m pip install -U numpy==1.14.5 cython==0.28.3 setuptools

- python -m pip install pytest-cov coveralls

- python -m pip install -r docs/requirements.txt

- python setup.py sdist

- python setup.py build_ext --inplace

- python -m pytest --cov gstools --cov-report term-missing -v tests/

- python -m coveralls

- fi

-

-after_success:

- # pypi upload ("test" allways and "official" on TAG)

- - python -m pip install twine

- - python -m twine upload --verbose --skip-existing --repository-url https://test.pypi.org/legacy/ wheelhouse/*.whl

- - python -m twine upload --verbose --skip-existing --repository-url https://test.pypi.org/legacy/ dist/*.tar.gz

- - |

- if [[ $TRAVIS_TAG ]]; then

- python -m twine upload --verbose --skip-existing wheelhouse/*.whl

- python -m twine upload --verbose --skip-existing dist/*.tar.gz

- fi

+ language: shell

+ env: CIBW_BUILD="cp37-*"

+ - name: "MacOS py38"

+ os: osx

+ language: shell

+ env: CIBW_BUILD="cp38-*"

-notifications:

- email:

- recipients:

- - info@geostat-framework.org

+ - name: "Win py35"

+ os: windows

+ language: shell

+ env: CIBW_BUILD="cp35-*"

+ - name: "Win py36"

+ os: windows

+ language: shell

+ env: CIBW_BUILD="cp36-*"

+ - name: "Win py37"

+ os: windows

+ language: shell

+ env: CIBW_BUILD="cp37-*"

+ - name: "Win py38"

+ os: windows

+ language: shell

+ env: CIBW_BUILD="cp38-*"
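The new job matrix pins one interpreter per job through `CIBW_BUILD` globs such as `cp38-*`. As a rough illustration of how such a selector narrows the set of wheels, the sketch below assumes shell-style wildcard matching (emulated with Python's `fnmatch.fnmatchcase`) and space-separated patterns. The identifier list is hand-written for illustration and is not taken from cibuildwheel itself.

```python
from fnmatch import fnmatchcase

# Illustrative build identifiers (assumed naming scheme: <python>-<platform>).
IDENTIFIERS = [
    "cp35-manylinux_x86_64",
    "cp38-manylinux_x86_64",
    "cp38-manylinux_i686",
    "cp38-macosx_x86_64",
    "cp38-win32",
    "cp38-win_amd64",
]


def select_builds(selector, identifiers):
    """Return the identifiers matching any space-separated glob in `selector`."""
    patterns = selector.split()
    return [
        ident
        for ident in identifiers
        if any(fnmatchcase(ident, pattern) for pattern in patterns)
    ]


print(select_builds("cp38-*", IDENTIFIERS))
```

Under this assumption, a per-version selector keeps every platform and architecture variant of that interpreter, so each Travis job only has to fix the Python version and the OS.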

diff --git a/.zenodo.json b/.zenodo.json

new file mode 100755

index 00000000..ad72d74b

--- /dev/null

+++ b/.zenodo.json

@@ -0,0 +1,49 @@

+{

+ "license": "LGPL-3.0+",

+ "contributors": [

+ {

+ "type": "Other",

+ "name": "Bane Sullivan"

+ },

+ {

+ "orcid": "0000-0002-2547-8102",

+ "affiliation": "Helmholtz Centre for Environmental Research - UFZ",

+ "type": "ResearchGroup",

+ "name": "Falk He\u00dfe"

+ },

+ {

+ "orcid": "0000-0002-8783-6198",

+ "affiliation": "Hydrogeology Group, Department of Earth Science, Utrecht University, Netherlands",

+ "type": "ResearchGroup",

+ "name": "Alraune Zech"

+ },

+ {

+ "orcid": "0000-0002-7798-7080",

+ "affiliation": "Helmholtz Centre for Environmental Research - UFZ",

+ "type": "Supervisor",

+ "name": "Sabine Attinger"

+ }

+ ],

+ "language": "eng",

+ "keywords": [

+ "geostatistics",

+ "kriging",

+ "random fields",

+ "covariance models",

+ "variogram",

+ "Python",

+ "GeoStat-Framework"

+ ],

+ "creators": [

+ {

+ "orcid": "0000-0001-9060-4008",

+ "affiliation": "Helmholtz Centre for Environmental Research - UFZ",

+ "name": "Sebastian M\u00fcller"

+ },

+ {

+ "orcid": "0000-0001-9362-1372",

+ "affiliation": "Helmholtz Centre for Environmental Research - UFZ",

+ "name": "Lennart Sch\u00fcler"

+ }

+ ]

+}

\ No newline at end of file

diff --git a/CHANGELOG.md b/CHANGELOG.md

index d5885da8..8192866f 100755

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -3,13 +3,30 @@

All notable changes to **GSTools** will be documented in this file.

-## [Unreleased]

+## [1.2.0] - Volatile Violet - 2020-03-20

### Enhancements

+- different variogram estimator functions can now be used #51

+- the TPLGaussian and TPLExponential now have analytical spectra #67

+- added property ``is_isotropic`` to CovModel #67

+- reworked the whole krige sub-module to provide multiple kriging methods #67

+ - Simple

+ - Ordinary

+ - Universal

+ - External Drift Kriging

+ - Detrended Kriging

+- a new transformation function for discrete fields has been added #70

+- reworked tutorial section in the documentation #63

+- pyvista interface #29

### Changes

+- Python versions 2.7 and 3.4 are no longer supported #40 #43

+- CovModel: in 3D the input of anisotropy is now treated slightly differently: #67

+ - single given anisotropy value [e] is converted to [1, e] (it was [e, e] before)

+ - two given length-scales [l_1, l_2] are converted to [l_1, l_2, l_2] (it was [l_1, l_2, l_1] before)

### Bugfixes

+- a race condition in the structured variogram estimation has been fixed #51

## [1.1.1] - Reverberating Red - 2019-11-08

@@ -97,7 +114,8 @@ All notable changes to **GSTools** will be documented in this file.

First release of GSTools.

-[Unreleased]: https://github.com/GeoStat-Framework/gstools/compare/v1.1.1...HEAD

+[Unreleased]: https://github.com/GeoStat-Framework/gstools/compare/v1.2.0...HEAD

+[1.2.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.1.1...v1.2.0

[1.1.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.1.0...v1.1.1

[1.1.0]: https://github.com/GeoStat-Framework/gstools/compare/v1.0.1...v1.1.0

[1.0.1]: https://github.com/GeoStat-Framework/gstools/compare/v1.0.0...v1.0.1
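The 3D anisotropy change listed under 1.2.0 can be made concrete with two small helpers. These are hypothetical functions that only encode the rules stated in the changelog entry (with `legacy=True` reproducing the previous behaviour). They are not part of the GSTools API, where `CovModel` handles the conversion itself.

```python
def expand_anis_3d(anis, legacy=False):
    """Hypothetical helper: expand a 3D anisotropy input to two ratios.

    legacy=True mimics the pre-1.2.0 rule described in the changelog.
    """
    anis = [float(ratio) for ratio in anis]
    if len(anis) == 1:
        # new: [e] -> [1, e]; old: [e] -> [e, e]
        return [anis[0], anis[0]] if legacy else [1.0, anis[0]]
    if len(anis) == 2:
        return anis
    raise ValueError("3D anisotropy needs one or two ratios")


def expand_len_scales_3d(len_scales, legacy=False):
    """Hypothetical helper: expand 3D length-scales to three entries."""
    scales = [float(scale) for scale in len_scales]
    if len(scales) == 2:
        # new: [l_1, l_2] -> [l_1, l_2, l_2]; old: [l_1, l_2] -> [l_1, l_2, l_1]
        if legacy:
            return [scales[0], scales[1], scales[0]]
        return [scales[0], scales[1], scales[1]]
    if len(scales) == 3:
        return scales
    raise ValueError("expected two or three length-scales")


print(expand_anis_3d([0.5]))  # [1.0, 0.5]
print(expand_anis_3d([0.5], legacy=True))  # [0.5, 0.5]
print(expand_len_scales_3d([10, 2]))  # [10.0, 2.0, 2.0]
```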

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index fb253d03..efa19903 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -23,7 +23,7 @@ print(gstools.__version__)

Open a [new issue](https://github.com/GeoStat-Framework/GSTools/issues)

with your idea or suggestion and we'd love to discuss about it.

-

+

## Do you want to enhance GSTools or fix something?

@@ -31,4 +31,6 @@ with your idea or suggestion and we'd love to discuss about it.

- Add yourself to AUTHORS.md (if you want to).

- We use the black code format, please use the script `black --line-length 79 gstools/` after you have written your code.

- Add some tests if possible.

+- Add an example showing your new feature in one of the examples sub-folders if possible.

+ Follow this [Sphinx-Gallery guide](https://sphinx-gallery.github.io/stable/syntax.html#embed-rst-in-your-example-python-files).

- Push to your fork and submit a pull request.
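The following is a minimal sketch of a gallery script in the format the Sphinx-Gallery guide describes. The module docstring supplies the page title and introduction, and a line of `#` characters followed by comment lines starts a text block rendered as reStructuredText. The topic is hypothetical and the script deliberately uses only NumPy so it runs on its own; a real contribution would demonstrate the new GSTools feature instead. Since `conf.py` sets `capture_repr` to an empty tuple, only `print` output would appear on the rendered page.

```python
"""
Sample mean of a white-noise field
==================================

A hypothetical gallery example: this docstring becomes the page title
and introduction when Sphinx-Gallery renders the file.
"""
import numpy as np

# a reproducible, uncorrelated field on a 100x100 grid
rng = np.random.RandomState(19970221)
field = rng.normal(loc=0.0, scale=1.0, size=(100, 100))

###############################################################################
# Comment lines that follow a line of ``#`` characters are rendered as
# reStructuredText between the code cells of the example.

mean = field.mean()
print("sample mean: {:.3f}".format(mean))
```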

diff --git a/README.md b/README.md

index 33b7aceb..24a7774a 100644

--- a/README.md

+++ b/README.md

@@ -2,10 +2,10 @@

[](https://doi.org/10.5281/zenodo.1313628)

[](https://badge.fury.io/py/gstools)

+[](https://anaconda.org/conda-forge/gstools)

[](https://travis-ci.org/GeoStat-Framework/GSTools)

-[](https://ci.appveyor.com/project/GeoStat-Framework/gstools/branch/master)

[](https://coveralls.io/github/GeoStat-Framework/GSTools?branch=master)

-[](https://geostat-framework.readthedocs.io/projects/gstools/en/latest/)

+[](https://geostat-framework.readthedocs.io/projects/gstools/en/stable/?badge=stable)

[](https://github.com/ambv/black)

@@ -58,7 +58,9 @@ To install the latest development version via pip, see the

At the moment you can cite the Zenodo code publication of GSTools:

-> Sebastian Müller, & Lennart Schüler. (2019, October 1). GeoStat-Framework/GSTools: Reverberating Red (Version v1.1.0). Zenodo. http://doi.org/10.5281/zenodo.3468230

+> Sebastian Müller & Lennart Schüler. GeoStat-Framework/GSTools. Zenodo. https://doi.org/10.5281/zenodo.1313628

+

+If you want to cite a specific version, have a look at the Zenodo site.

A publication for the GeoStat-Framework is in preperation.

@@ -79,8 +81,9 @@ The documentation also includes some [tutorials][tut_link], showing the most imp

- [Kriging][tut5_link]

- [Conditioned random field generation][tut6_link]

- [Field transformations][tut7_link]

+- [Miscellaneous examples][tut0_link]

-Some more examples are provided in the examples folder.

+The associated Python scripts are provided in the `examples` folder.

## Spatial Random Field Generation

@@ -97,12 +100,11 @@ The core of this library is the generation of spatial random fields. These field

This is an example of how to generate a 2 dimensional spatial random field with a gaussian covariance model.

```python

-from gstools import SRF, Gaussian

-import matplotlib.pyplot as plt

+import gstools as gs

# structured field with a size 100x100 and a grid-size of 1x1

x = y = range(100)

-model = Gaussian(dim=2, var=1, len_scale=10)

-srf = SRF(model)

+model = gs.Gaussian(dim=2, var=1, len_scale=10)

+srf = gs.SRF(model)

srf((x, y), mesh_type='structured')

srf.plot()

```

@@ -113,12 +115,11 @@ srf.plot()

A similar example but for a three dimensional field is exported to a [VTK](https://vtk.org/) file, which can be visualized with [ParaView](https://www.paraview.org/) or [PyVista](https://docs.pyvista.org) in Python:

```python

-from gstools import SRF, Gaussian

-import matplotlib.pyplot as pt

+import gstools as gs

# structured field with a size 100x100x100 and a grid-size of 1x1x1

x = y = z = range(100)

-model = Gaussian(dim=3, var=0.6, len_scale=20)

-srf = SRF(model)

+model = gs.Gaussian(dim=3, var=0.6, len_scale=20)

+srf = gs.SRF(model)

srf((x, y, z), mesh_type='structured')

srf.vtk_export('3d_field') # Save to a VTK file for ParaView

@@ -144,18 +145,18 @@ model again.

```python

import numpy as np

-from gstools import SRF, Exponential, Stable, vario_estimate_unstructured

+import gstools as gs

# generate a synthetic field with an exponential model

x = np.random.RandomState(19970221).rand(1000) * 100.

y = np.random.RandomState(20011012).rand(1000) * 100.

-model = Exponential(dim=2, var=2, len_scale=8)

-srf = SRF(model, mean=0, seed=19970221)

+model = gs.Exponential(dim=2, var=2, len_scale=8)

+srf = gs.SRF(model, mean=0, seed=19970221)

field = srf((x, y))

# estimate the variogram of the field with 40 bins

bins = np.arange(40)

-bin_center, gamma = vario_estimate_unstructured((x, y), field, bins)

+bin_center, gamma = gs.vario_estimate_unstructured((x, y), field, bins)

# fit the variogram with a stable model. (no nugget fitted)

-fit_model = Stable(dim=2)

+fit_model = gs.Stable(dim=2)

fit_model.fit_variogram(bin_center, gamma, nugget=False)

# output

ax = fit_model.plot(x_max=40)

@@ -184,8 +185,8 @@ For better visualization, we will condition a 1d field to a few "measurements",

```python

import numpy as np

-from gstools import Gaussian, SRF

import matplotlib.pyplot as plt

+import gstools as gs

# conditions

cond_pos = [0.3, 1.9, 1.1, 3.3, 4.7]

@@ -194,8 +195,8 @@ cond_val = [0.47, 0.56, 0.74, 1.47, 1.74]

gridx = np.linspace(0.0, 15.0, 151)

# spatial random field class

-model = Gaussian(dim=1, var=0.5, len_scale=2)

-srf = SRF(model)

+model = gs.Gaussian(dim=1, var=0.5, len_scale=2)

+srf = gs.SRF(model)

srf.set_condition(cond_pos, cond_val, "ordinary")

# generate the ensemble of field realizations

@@ -223,10 +224,10 @@ Here we re-implement the Gaussian covariance model by defining just a

[correlation][cor_link] function, which takes a non-dimensional distance ``h = r/l``:

```python

-from gstools import CovModel

import numpy as np

+import gstools as gs

# use CovModel as the base-class

-class Gau(CovModel):

+class Gau(gs.CovModel):

def cor(self, h):

return np.exp(-h**2)

```

@@ -248,12 +249,11 @@ spatial vector fields can be generated.

```python

import numpy as np

-import matplotlib.pyplot as plt

-from gstools import SRF, Gaussian

+import gstools as gs

x = np.arange(100)

y = np.arange(100)

-model = Gaussian(dim=2, var=1, len_scale=10)

-srf = SRF(model, generator='VectorField')

+model = gs.Gaussian(dim=2, var=1, len_scale=10)

+srf = gs.SRF(model, generator='VectorField')

srf((x, y), mesh_type='structured', seed=19841203)

srf.plot()

```

@@ -275,10 +275,10 @@ a handy [VTK][vtk_link] export routine using the `.vtk_export()` or you could

create a VTK/PyVista dataset for use in Python with to `.to_pyvista()` method:

```python

-from gstools import SRF, Gaussian

+import gstools as gs

x = y = range(100)

-model = Gaussian(dim=2, var=1, len_scale=10)

-srf = SRF(model)

+model = gs.Gaussian(dim=2, var=1, len_scale=10)

+srf = gs.SRF(model)

srf((x, y), mesh_type='structured')

srf.vtk_export("field") # Saves to a VTK file

mesh = srf.to_pyvista() # Create a VTK/PyVista dataset in memory

@@ -288,15 +288,18 @@ mesh.plot()

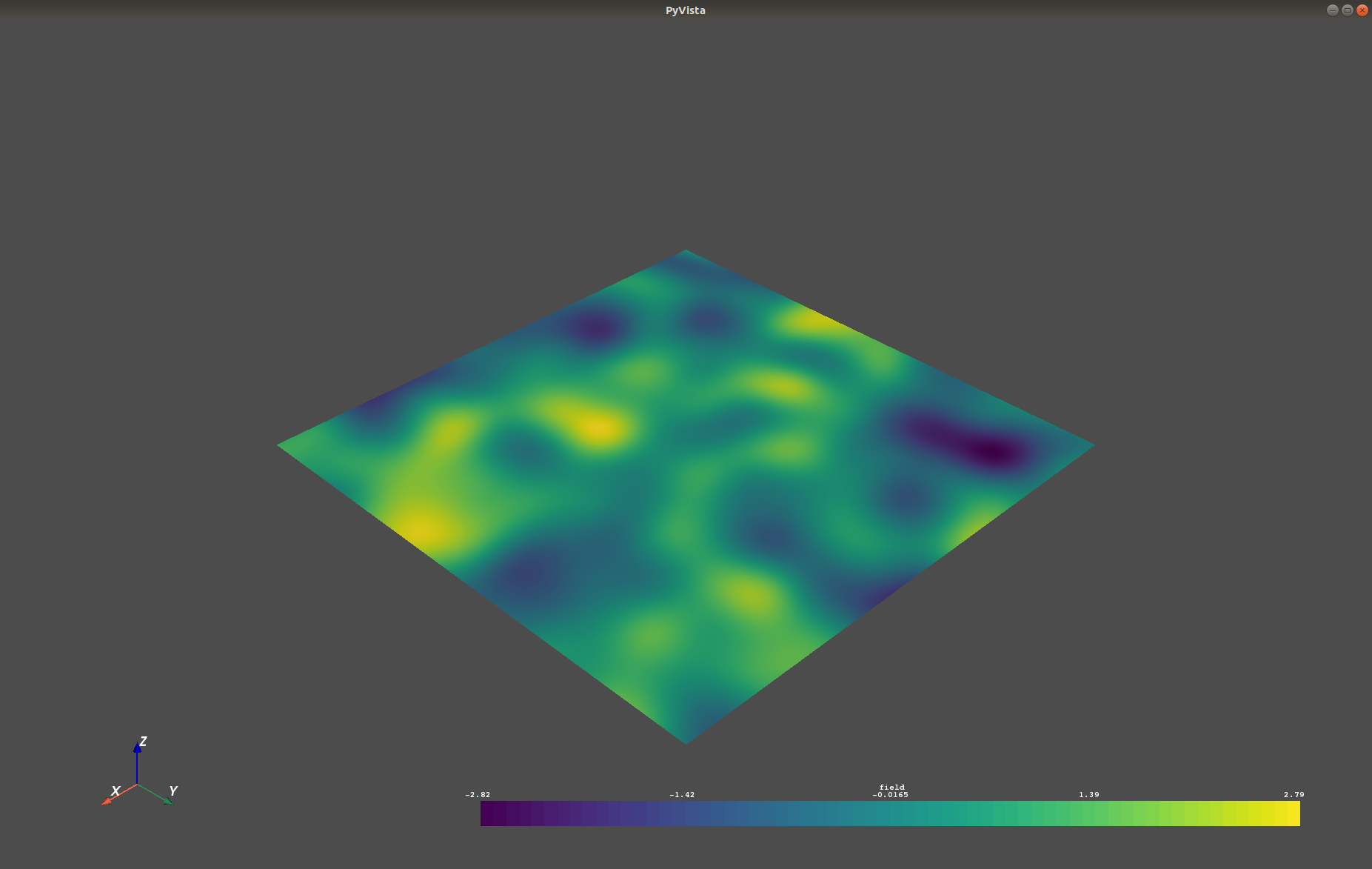

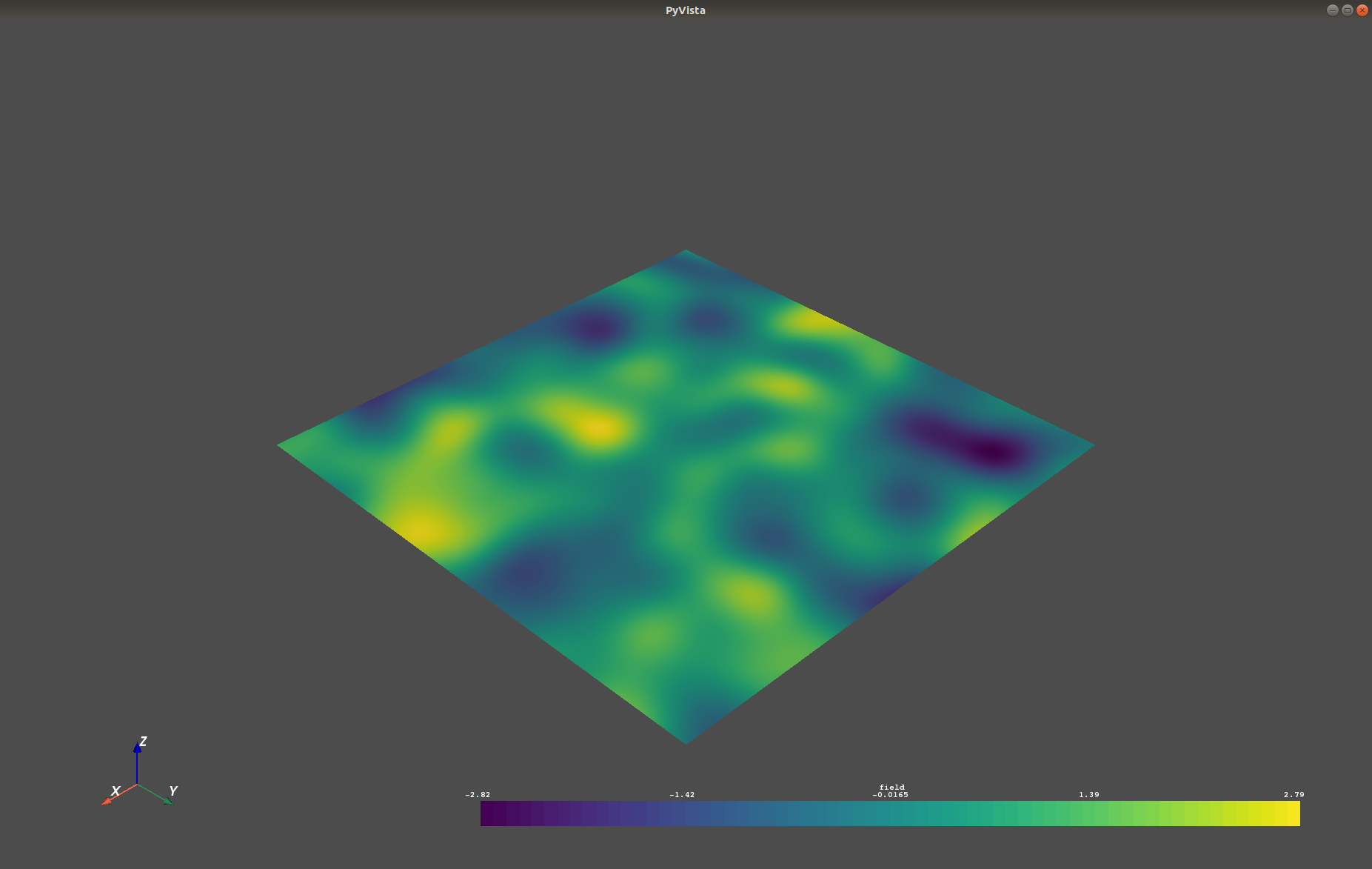

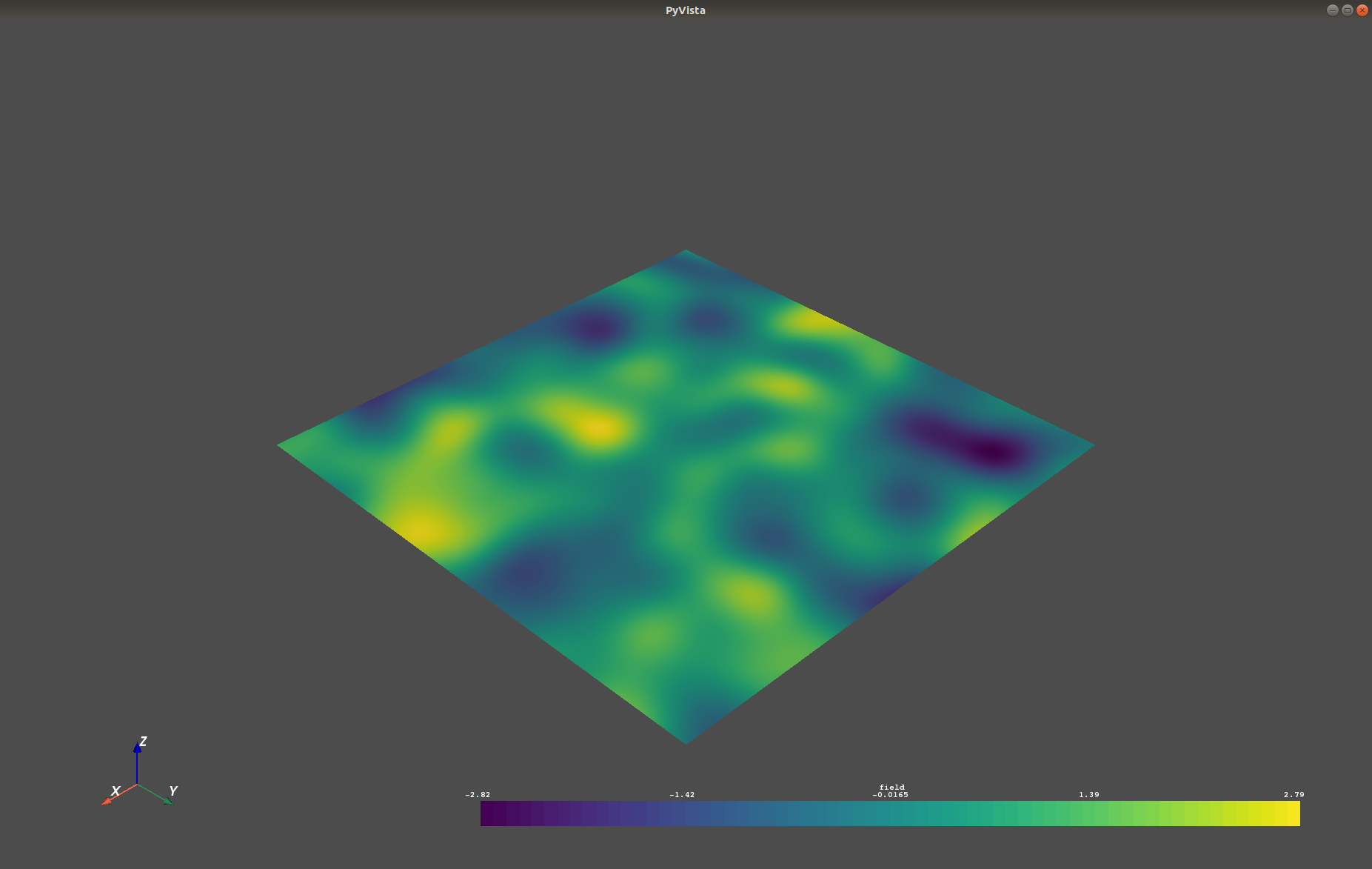

Which gives a RectilinearGrid VTK file ``field.vtr`` or creates a PyVista mesh

in memory for immediate 3D plotting in Python.

+

+ +

+

+

## Requirements:

- [NumPy >= 1.14.5](https://www.numpy.org)

- [SciPy >= 1.1.0](https://www.scipy.org/scipylib)

-- [hankel >= 0.3.6](https://github.com/steven-murray/hankel)

+- [hankel >= 1.0.2](https://github.com/steven-murray/hankel)

- [emcee >= 3.0.0](https://github.com/dfm/emcee)

-- [pyevtk](https://bitbucket.org/pauloh/pyevtk)

-- [six](https://github.com/benjaminp/six)

+- [pyevtk >= 1.1.1](https://github.com/pyscience-projects/pyevtk)

### Optional

@@ -311,7 +314,7 @@ You can contact us via .

## License

-[LGPLv3][license_link] © 2018-2019

+[LGPLv3][license_link] © 2018-2020

[pip_link]: https://pypi.org/project/gstools

[conda_link]: https://docs.conda.io/en/latest/miniconda.html

@@ -320,17 +323,18 @@ You can contact us via .

[pipiflag]: https://pip-python3.readthedocs.io/en/latest/reference/pip_install.html?highlight=i#cmdoption-i

[winpy_link]: https://winpython.github.io/

[license_link]: https://github.com/GeoStat-Framework/GSTools/blob/master/LICENSE

-[cov_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/covmodel.html

+[cov_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/generated/gstools.covmodel.CovModel.html#gstools.covmodel.CovModel

[stable_link]: https://en.wikipedia.org/wiki/Stable_distribution

-[doc_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/

-[doc_install_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/#pip

-[tut_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/tutorials.html

-[tut1_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/01_random_field/index.html

-[tut2_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/02_cov_model/index.html

-[tut3_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/03_variogram/index.html

-[tut4_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/04_vector_field/index.html

-[tut5_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/05_kriging/index.html

-[tut6_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/06_conditioned_fields/index.html

-[tut7_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/latest/examples/07_transformations/index.html

+[doc_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/

+[doc_install_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/#pip

+[tut_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/tutorials.html

+[tut1_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/01_random_field/index.html

+[tut2_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/02_cov_model/index.html

+[tut3_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/03_variogram/index.html

+[tut4_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/04_vector_field/index.html

+[tut5_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/05_kriging/index.html

+[tut6_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/06_conditioned_fields/index.html

+[tut7_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/07_transformations/index.html

+[tut0_link]: https://geostat-framework.readthedocs.io/projects/gstools/en/stable/examples/00_misc/index.html

[cor_link]: https://en.wikipedia.org/wiki/Autocovariance#Normalization

[vtk_link]: https://www.vtk.org/
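The README's variogram example bins point pairs by distance, and the 1.2.0 changelog notes that different estimator functions can now be used (#51). The NumPy sketch below shows what swapping the estimator means, using the classical Matheron estimator and the robust Cressie-Hawkins estimator. It is an illustration under that assumption, not GSTools' implementation; check the `vario_estimate_unstructured` API reference for the estimators it actually accepts.

```python
import numpy as np


def matheron(diffs):
    """Classical (Matheron) estimator: half the mean squared increment."""
    return 0.5 * np.mean(diffs ** 2)


def cressie(diffs):
    """Robust Cressie-Hawkins estimator built on square-root increments."""
    n = diffs.size
    return 0.5 * np.mean(np.sqrt(np.abs(diffs))) ** 4 / (0.457 + 0.494 / n)


def estimate_variogram(pos, field, bin_edges, estimator=matheron):
    """Bin all point pairs by distance and apply `estimator` in each bin."""
    pos = np.atleast_2d(np.asarray(pos, dtype=float))  # shape (dim, n)
    field = np.asarray(field, dtype=float)
    bin_edges = np.asarray(bin_edges, dtype=float)
    i, j = np.triu_indices(field.size, k=1)  # each unordered pair once
    dist = np.linalg.norm(pos[:, i] - pos[:, j], axis=0)
    diffs = field[i] - field[j]
    centers = 0.5 * (bin_edges[:-1] + bin_edges[1:])
    gamma = np.full(centers.size, np.nan)  # NaN marks empty bins
    for k in range(centers.size):
        in_bin = (dist >= bin_edges[k]) & (dist < bin_edges[k + 1])
        if in_bin.any():
            gamma[k] = estimator(diffs[in_bin])
    return centers, gamma


# uncorrelated unit-variance noise at random 1D positions
rng = np.random.RandomState(20011012)
x = rng.rand(300) * 100.0
noise = rng.normal(size=300)
bins = np.linspace(0.0, 40.0, 9)
centers, gamma_m = estimate_variogram(x, noise, bins)
_, gamma_c = estimate_variogram(x, noise, bins, estimator=cressie)
print(np.round(gamma_m, 2))
print(np.round(gamma_c, 2))
```

For uncorrelated noise with unit variance, both estimates should scatter around 1 in every bin; on data with outliers the Cressie-Hawkins values are typically less affected. A 2D call would pass `(x, y)` as `pos`, as in the README example.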

diff --git a/appveyor.yml b/appveyor.yml

deleted file mode 100644

index acd9e40d..00000000

--- a/appveyor.yml

+++ /dev/null

@@ -1,22 +0,0 @@

-environment:

- global:

- TWINE_USERNAME: geostatframework

- CIBW_BEFORE_BUILD: pip install numpy==1.14.5 cython==0.28.3

- CIBW_TEST_REQUIRES: pytest-cov

- CIBW_TEST_COMMAND: cd {project} && python setup.py build_ext --inplace && py.test --cov gstools --cov-report term-missing -v {project}/tests

-

-build_script:

- - pip install cibuildwheel==0.11.1

- - cibuildwheel --output-dir wheelhouse

- - python -m pip install twine

- - python -m twine upload --skip-existing --repository-url https://test.pypi.org/legacy/ wheelhouse/*.whl

- - >

- IF "%APPVEYOR_REPO_TAG%" == "true"

- (

- python -m pip install twine

- &&

- python -m twine upload --skip-existing wheelhouse/*.whl

- )

-artifacts:

- - path: "wheelhouse\\*.whl"

- name: Wheels

\ No newline at end of file

diff --git a/docs/output.txt b/docs/output.txt

deleted file mode 100644

index 869370c5..00000000

--- a/docs/output.txt

+++ /dev/null

@@ -1,59 +0,0 @@

-Sphinx v1.8.2 in Verwendung

-Erstelle Ausgabeverzeichnis…

-loading intersphinx inventory from https://docs.python.org/3.6/objects.inv...

-loading intersphinx inventory from https://docs.python.org/objects.inv...

-intersphinx inventory has moved: https://docs.python.org/objects.inv -> https://docs.python.org/3/objects.inv

-loading intersphinx inventory from http://docs.scipy.org/doc/numpy/objects.inv...

-intersphinx inventory has moved: http://docs.scipy.org/doc/numpy/objects.inv -> https://docs.scipy.org/doc/numpy/objects.inv

-loading intersphinx inventory from http://docs.scipy.org/doc/scipy/reference/objects.inv...

-intersphinx inventory has moved: http://docs.scipy.org/doc/scipy/reference/objects.inv -> https://docs.scipy.org/doc/scipy/reference/objects.inv

-loading intersphinx inventory from http://matplotlib.org/objects.inv...

-intersphinx inventory has moved: http://matplotlib.org/objects.inv -> https://matplotlib.org/objects.inv

-loading intersphinx inventory from http://www.sphinx-doc.org/en/stable/objects.inv...

-[autosummary] generating autosummary for: covmodel.rst, field.rst, index.rst, main.rst, random.rst, upscaling.rst, variogram.rst

-building [mo]: targets for 0 po files that are out of date

-building [html]: targets for 7 source files that are out of date

-updating environment: 7 added, 0 changed, 0 removed

-reading sources... [ 14%] covmodel

-reading sources... [ 28%] field

-reading sources... [ 42%] index

-reading sources... [ 57%] main

-reading sources... [ 71%] random

-reading sources... [ 85%] upscaling

-reading sources... [100%] variogram

-

-looking for now-outdated files... none found

-pickling environment... erledigt

-checking consistency... erledigt

-preparing documents... erledigt

-writing output... [ 14%] covmodel

-writing output... [ 28%] field

-writing output... [ 42%] index

-writing output... [ 57%] main

-writing output... [ 71%] random

-writing output... [ 85%] upscaling

-writing output... [100%] variogram

-

-generating indices... genindex py-modindex

-highlighting module code... [ 12%] gstools.covmodel.base

-highlighting module code... [ 25%] gstools.covmodel.models

-highlighting module code... [ 37%] gstools.field.generator

-highlighting module code... [ 50%] gstools.field.srf

-highlighting module code... [ 62%] gstools.field.upscaling

-highlighting module code... [ 75%] gstools.random.rng

-highlighting module code... [ 87%] gstools.random.tools

-highlighting module code... [100%] gstools.variogram.variogram

-

-writing additional pages... search

-copying images... [ 25%] gstools.png

-copying images... [ 50%] gau_field.png

-copying images... [ 75%] tplstable_field.png

-copying images... [100%] exp_vario_fit.png

-

-copying static files... done

-copying extra files... erledigt

-dumping search index in English (code: en) ... erledigt

-dumping object inventory... erledigt

-build abgeschlossen, 5640 warnings.

-

-The HTML pages are in build/html.

diff --git a/docs/requirements.txt b/docs/requirements.txt

index 3eb3c028..c5a6a232 100644

--- a/docs/requirements.txt

+++ b/docs/requirements.txt

@@ -1,5 +1,3 @@

-# required for readthedocs.org

-cython>=0.28.3

-numpydoc

-# https://stackoverflow.com/a/11704396/6696397

--r ../requirements.txt

\ No newline at end of file

+-r requirements_doc.txt

+-r ../requirements_setup.txt

+-r ../requirements.txt

diff --git a/docs/requirements_doc.txt b/docs/requirements_doc.txt

new file mode 100755

index 00000000..c9d3ee24

--- /dev/null

+++ b/docs/requirements_doc.txt

@@ -0,0 +1,5 @@

+numpydoc

+sphinx-gallery

+matplotlib

+pyvista

+pykrige

diff --git a/docs/source/covmodel.base.rst b/docs/source/_templates/autosummary/class.rst

similarity index 53%

rename from docs/source/covmodel.base.rst

rename to docs/source/_templates/autosummary/class.rst

index 7aa1c973..c5c858a1 100644

--- a/docs/source/covmodel.base.rst

+++ b/docs/source/_templates/autosummary/class.rst

@@ -1,11 +1,12 @@

-gstools.covmodel.base

----------------------

+{{ fullname | escape | underline}}

-.. automodule:: gstools.covmodel.base

+.. currentmodule:: {{ module }}

+

+.. autoclass:: {{ objname }}

:members:

:undoc-members:

- :show-inheritance:

:inherited-members:

+ :show-inheritance:

.. raw:: latex

diff --git a/docs/source/conf.py b/docs/source/conf.py

index 1961b10a..b9214669 100644

--- a/docs/source/conf.py

+++ b/docs/source/conf.py

@@ -20,8 +20,16 @@

# NOTE:

# pip install sphinx_rtd_theme

# is needed in order to build the documentation

-import os

-import sys

+# import os

+# import sys

+import datetime

+import warnings

+

+warnings.filterwarnings(

+ "ignore",

+ category=UserWarning,

+ message="Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.",

+)

# local module should not be added to sys path if it's installed on RTFD

# see: https://stackoverflow.com/a/31882049/6696397

@@ -59,6 +67,7 @@ def setup(app):

"sphinx.ext.autosummary",

"sphinx.ext.napoleon", # parameters look better than with numpydoc only

"numpydoc",

+ "sphinx_gallery.gen_gallery",

]

# autosummaries from source-files

@@ -95,8 +104,9 @@ def setup(app):

master_doc = "contents"

# General information about the project.

+curr_year = datetime.datetime.now().year

project = "GSTools"

-copyright = "2018 - 2019, Lennart Schueler, Sebastian Mueller"

+copyright = "2018 - {}, Lennart Schueler, Sebastian Mueller".format(curr_year)

author = "Lennart Schueler, Sebastian Mueller"

# The version info for the project you're documenting, acts as replacement for

@@ -171,7 +181,9 @@ def setup(app):

# Output file base name for HTML help builder.

htmlhelp_basename = "GeoStatToolsdoc"

-

+# logos for the page

+html_logo = "pics/gstools_150.png"

+html_favicon = "pics/gstools.ico"

# -- Options for LaTeX output ---------------------------------------------

# latex_engine = 'lualatex'

@@ -247,3 +259,48 @@ def setup(app):

"hankel": ("https://hankel.readthedocs.io/en/latest/", None),

"emcee": ("https://emcee.readthedocs.io/en/latest/", None),

}

+

+

+# -- Sphinx Gallery Options

+from sphinx_gallery.sorting import FileNameSortKey

+

+sphinx_gallery_conf = {

+ # only show "print" output as output

+ "capture_repr": (),

+ # path to your examples scripts

+ "examples_dirs": [

+ "../../examples/00_misc/",

+ "../../examples/01_random_field/",

+ "../../examples/02_cov_model/",

+ "../../examples/03_variogram/",

+ "../../examples/04_vector_field/",

+ "../../examples/05_kriging/",

+ "../../examples/06_conditioned_fields/",

+ "../../examples/07_transformations/",

+ ],

+ # path where to save gallery generated examples

+ "gallery_dirs": [

+ "examples/00_misc/",

+ "examples/01_random_field/",

+ "examples/02_cov_model/",

+ "examples/03_variogram/",

+ "examples/04_vector_field/",

+ "examples/05_kriging/",

+ "examples/06_conditioned_fields/",

+ "examples/07_transformations/",

+ ],

+ # Pattern to search for example files

+ "filename_pattern": r"\.py",

+ # Remove the "Download all examples" button from the top level gallery

+ "download_all_examples": False,

+ # Sort gallery examples by file name instead of number of lines (default)

+ "within_subsection_order": FileNameSortKey,

+ # directory where function granular galleries are stored

+ "backreferences_dir": None,

+ # Modules for which function level galleries are created.

+ "doc_module": "gstools",

+ # "image_scrapers": ('pyvista', 'matplotlib'),

+ # "first_notebook_cell": ("%matplotlib inline\n"

+ # "from pyvista import set_plot_theme\n"

+ # "set_plot_theme('document')"),

+}
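In this gallery configuration, `examples_dirs` and `gallery_dirs` are paired entry by entry, so adding a tutorial folder means editing both lists at the same position. A possible alternative, sketched here as a suggestion rather than the project's configuration, derives both lists from a single tuple of folder names; the name `EXAMPLE_FOLDERS` is hypothetical.

```python
# Folder names shared by the example scripts and the generated gallery pages.
EXAMPLE_FOLDERS = (
    "00_misc",
    "01_random_field",
    "02_cov_model",
    "03_variogram",
    "04_vector_field",
    "05_kriging",
    "06_conditioned_fields",
    "07_transformations",
)

# Source scripts, relative to docs/source/ (same paths as in the conf.py diff).
examples_dirs = ["../../examples/{}/".format(name) for name in EXAMPLE_FOLDERS]
# Output folders for the generated gallery pages, in the same order.
gallery_dirs = ["examples/{}/".format(name) for name in EXAMPLE_FOLDERS]
```

The resulting lists could then be passed as `"examples_dirs": examples_dirs` and `"gallery_dirs": gallery_dirs` in `sphinx_gallery_conf`, leaving the other options unchanged.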

diff --git a/docs/source/covmodel.models.rst b/docs/source/covmodel.models.rst

deleted file mode 100644

index faffb056..00000000

--- a/docs/source/covmodel.models.rst

+++ /dev/null

@@ -1,12 +0,0 @@

-gstools.covmodel.models

------------------------

-

-.. automodule:: gstools.covmodel.models

- :members:

- :undoc-members:

- :no-inherited-members:

- :show-inheritance:

-

-.. raw:: latex

-

- \clearpage

diff --git a/docs/source/covmodel.rst b/docs/source/covmodel.rst

index 37b22dbd..74e7cebe 100644

--- a/docs/source/covmodel.rst

+++ b/docs/source/covmodel.rst

@@ -10,7 +10,4 @@ gstools.covmodel

.. toctree::

:hidden:

- covmodel.base.rst

- covmodel.models.rst

- covmodel.tpl_models.rst

covmodel.plot.rst

diff --git a/docs/source/covmodel.tpl_models.rst b/docs/source/covmodel.tpl_models.rst

deleted file mode 100644

index dd57c096..00000000

--- a/docs/source/covmodel.tpl_models.rst

+++ /dev/null

@@ -1,11 +0,0 @@

-gstools.covmodel.tpl_models

----------------------------

-

-.. automodule:: gstools.covmodel.tpl_models

- :members:

- :undoc-members:

- :show-inheritance:

-

-.. raw:: latex

-

- \clearpage

diff --git a/docs/source/index.rst b/docs/source/index.rst

index 67dd85c4..bc3d23e9 100644

--- a/docs/source/index.rst

+++ b/docs/source/index.rst

@@ -48,24 +48,28 @@ To get the latest development version you can install it directly from GitHub:

.. code-block:: none

- pip install https://github.com/GeoStat-Framework/GSTools/archive/develop.zip

+ pip install git+git://github.com/GeoStat-Framework/GSTools.git@develop

+

+If something went wrong during installation, try the :code:`-I` `flag from pip `_.

To enable the OpenMP support, you have to provide a C compiler, Cython and OpenMP.

To get all other dependencies, it is recommended to first install gstools once

in the standard way just decribed.

-Then use the following command:

+Simply use the following commands:

.. code-block:: none

- pip install --global-option="--openmp" gstools

+ pip install gstools

+ pip install -I --no-deps --global-option="--openmp" gstools

Or for the development version:

.. code-block:: none

- pip install --global-option="--openmp" https://github.com/GeoStat-Framework/GSTools/archive/develop.zip

+ pip install git+git://github.com/GeoStat-Framework/GSTools.git@develop

+ pip install -I --no-deps --global-option="--openmp" git+git://github.com/GeoStat-Framework/GSTools.git@develop

-If something went wrong during installation, try the :code:`-I` `flag from pip `_.

+The flags :code:`-I --no-deps` force pip to reinstall gstools but not the dependencies.

Citation

@@ -73,23 +77,27 @@ Citation

At the moment you can cite the Zenodo code publication of GSTools:

-| *Sebastian Müller, & Lennart Schüler. (2019, October 1). GeoStat-Framework/GSTools: Reverberating Red (Version v1.1.0). Zenodo. http://doi.org/10.5281/zenodo.3468230*

+| *Sebastian Müller & Lennart Schüler. GeoStat-Framework/GSTools. Zenodo. https://doi.org/10.5281/zenodo.1313628*

+

+If you want to cite a specific version, have a look at the Zenodo site.

A publication for the GeoStat-Framework is in preperation.

+

Tutorials and Examples

======================

-The documentation also includes some `tutorials `_,

+The documentation also includes some `tutorials `__,

showing the most important use cases of GSTools, which are

-- `Random Field Generation `_

-- `The Covariance Model `_

-- `Variogram Estimation `_

-- `Random Vector Field Generation `_

-- `Kriging `_

-- `Conditioned random field generation `_

-- `Field transformations `_

+- `Random Field Generation `__

+- `The Covariance Model `__

+- `Variogram Estimation `__

+- `Random Vector Field Generation `__

+- `Kriging `__

+- `Conditioned random field generation `__

+- `Field transformations `__

+- `Miscellaneous examples `__

Some more examples are provided in the examples folder.

@@ -105,7 +113,6 @@ These fields are generated using the randomisation method, described by

Examples

--------

-

Gaussian Covariance Model

^^^^^^^^^^^^^^^^^^^^^^^^^

@@ -114,12 +121,11 @@ with a :any:`Gaussian` covariance model.

.. code-block:: python

- from gstools import SRF, Gaussian

- import matplotlib.pyplot as plt

+ import gstools as gs

# structured field with a size 100x100 and a grid-size of 1x1

x = y = range(100)

- model = Gaussian(dim=2, var=1, len_scale=10)

- srf = SRF(model)

+ model = gs.Gaussian(dim=2, var=1, len_scale=10)

+ srf = gs.SRF(model)

srf((x, y), mesh_type='structured')

srf.plot()

@@ -134,12 +140,11 @@ A similar example but for a three dimensional field is exported to a

.. code-block:: python

- from gstools import SRF, Gaussian

- import matplotlib.pyplot as pt

+ import gstools as gs

# structured field with a size 100x100x100 and a grid-size of 1x1x1

x = y = z = range(100)

- model = Gaussian(dim=3, var=0.6, len_scale=20)

- srf = SRF(model)

+ model = gs.Gaussian(dim=3, var=0.6, len_scale=20)

+ srf = gs.SRF(model)

srf((x, y, z), mesh_type='structured')

srf.vtk_export('3d_field') # Save to a VTK file for ParaView

@@ -151,70 +156,6 @@ A similar example but for a three dimensional field is exported to a

:align: center

-Truncated Power Law Model

-^^^^^^^^^^^^^^^^^^^^^^^^^

-

-GSTools also implements truncated power law variograms, which can be represented as a

-superposition of scale dependant modes in form of standard variograms, which are truncated by

-a lower- :math:`\ell_{\mathrm{low}}` and an upper length-scale :math:`\ell_{\mathrm{up}}`.

-

-This example shows the truncated power law (:any:`TPLStable`) based on the

-:any:`Stable` covariance model and is given by

-

-.. math::

- \gamma_{\ell_{\mathrm{low}},\ell_{\mathrm{up}}}(r) =

- \intop_{\ell_{\mathrm{low}}}^{\ell_{\mathrm{up}}}

- \gamma(r,\lambda) \frac{\rm d \lambda}{\lambda}

-

-with `Stable` modes on each scale:

-

-.. math::

- \gamma(r,\lambda) &=

- \sigma^2(\lambda)\cdot\left(1-

- \exp\left[- \left(\frac{r}{\lambda}\right)^{\alpha}\right]

- \right)\\

- \sigma^2(\lambda) &= C\cdot\lambda^{2H}

-

-which gives Gaussian modes for ``alpha=2`` or Exponential modes for ``alpha=1``.

-

-For :math:`\ell_{\mathrm{low}}=0` this results in:

-

-.. math::

- \gamma_{\ell_{\mathrm{up}}}(r) &=

- \sigma^2_{\ell_{\mathrm{up}}}\cdot\left(1-

- \frac{2H}{\alpha} \cdot

- E_{1+\frac{2H}{\alpha}}

- \left[\left(\frac{r}{\ell_{\mathrm{up}}}\right)^{\alpha}\right]

- \right) \\

- \sigma^2_{\ell_{\mathrm{up}}} &=

- C\cdot\frac{\ell_{\mathrm{up}}^{2H}}{2H}

-

-.. code-block:: python

-

- import numpy as np

- import matplotlib.pyplot as plt

- from gstools import SRF, TPLStable

- x = y = np.linspace(0, 100, 100)

- model = TPLStable(

- dim=2, # spatial dimension

- var=1, # variance (C calculated internally, so that `var` is 1)

- len_low=0, # lower truncation of the power law

- len_scale=10, # length scale (a.k.a. range), len_up = len_low + len_scale

- nugget=0.1, # nugget

- anis=0.5, # anisotropy between main direction and transversal ones

- angles=np.pi/4, # rotation angles

- alpha=1.5, # shape parameter from the stable model

- hurst=0.7, # hurst coefficient from the power law

- )

- srf = SRF(model, mean=1, mode_no=1000, seed=19970221, verbose=True)

- srf((x, y), mesh_type='structured')

- srf.plot()

-

-.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/master/docs/source/pics/tplstable_field.png

- :width: 400px

- :align: center

-

-

Estimating and fitting variograms

=================================

@@ -232,18 +173,18 @@ model again.

.. code-block:: python

import numpy as np

- from gstools import SRF, Exponential, Stable, vario_estimate_unstructured

+ import gstools as gs

# generate a synthetic field with an exponential model

x = np.random.RandomState(19970221).rand(1000) * 100.

y = np.random.RandomState(20011012).rand(1000) * 100.

- model = Exponential(dim=2, var=2, len_scale=8)

- srf = SRF(model, mean=0, seed=19970221)

+ model = gs.Exponential(dim=2, var=2, len_scale=8)

+ srf = gs.SRF(model, mean=0, seed=19970221)

field = srf((x, y))

# estimate the variogram of the field with 40 bins

bins = np.arange(40)

- bin_center, gamma = vario_estimate_unstructured((x, y), field, bins)

+ bin_center, gamma = gs.vario_estimate_unstructured((x, y), field, bins)

# fit the variogram with a stable model. (no nugget fitted)

- fit_model = Stable(dim=2)

+ fit_model = gs.Stable(dim=2)

fit_model.fit_variogram(bin_center, gamma, nugget=False)

# output

ax = fit_model.plot(x_max=40)

@@ -268,6 +209,7 @@ An important part of geostatistics is Kriging and conditioning spatial random

fields to measurements. With conditioned random fields, an ensemble of field realizations

with their variability depending on the proximity of the measurements can be generated.

+

Example

-------

@@ -277,8 +219,8 @@ generate 100 realizations and plot them:

.. code-block:: python

import numpy as np

- from gstools import Gaussian, SRF

import matplotlib.pyplot as plt

+ import gstools as gs

# conditions

cond_pos = [0.3, 1.9, 1.1, 3.3, 4.7]

@@ -287,8 +229,8 @@ generate 100 realizations and plot them:

gridx = np.linspace(0.0, 15.0, 151)

# spatial random field class

- model = Gaussian(dim=1, var=0.5, len_scale=2)

- srf = SRF(model)

+ model = gs.Gaussian(dim=1, var=0.5, len_scale=2)

+ srf = gs.SRF(model)

srf.set_condition(cond_pos, cond_val, "ordinary")

# generate the ensemble of field realizations

@@ -321,10 +263,10 @@ which takes a non-dimensional distance :class:`h = r/l`

.. code-block:: python

- from gstools import CovModel

import numpy as np

+ import gstools as gs

# use CovModel as the base-class

- class Gau(CovModel):

+ class Gau(gs.CovModel):

    def cor(self, h):

        return np.exp(-h**2)

@@ -345,12 +287,11 @@ Example

.. code-block:: python

import numpy as np

- import matplotlib.pyplot as plt

- from gstools import SRF, Gaussian

+ import gstools as gs

x = np.arange(100)

y = np.arange(100)

- model = Gaussian(dim=2, var=1, len_scale=10)

- srf = SRF(model, generator='VectorField')

+ model = gs.Gaussian(dim=2, var=1, len_scale=10)

+ srf = gs.SRF(model, generator='VectorField')

srf((x, y), mesh_type='structured', seed=19841203)

srf.plot()

@@ -370,10 +311,10 @@ create a VTK/PyVista dataset for use in Python with to :class:`.to_pyvista()` me

.. code-block:: python

- from gstools import SRF, Gaussian

+ import gstools as gs

x = y = range(100)

- model = Gaussian(dim=2, var=1, len_scale=10)

- srf = SRF(model)

+ model = gs.Gaussian(dim=2, var=1, len_scale=10)

+ srf = gs.SRF(model)

srf((x, y), mesh_type='structured')

srf.vtk_export("field") # Saves to a VTK file

mesh = srf.to_pyvista() # Create a VTK/PyVista dataset in memory

@@ -382,16 +323,20 @@ create a VTK/PyVista dataset for use in Python with to :class:`.to_pyvista()` me

Which gives a RectilinearGrid VTK file :file:`field.vtr` or creates a PyVista mesh

in memory for immediate 3D plotting in Python.

+.. image:: https://raw.githubusercontent.com/GeoStat-Framework/GSTools/master/docs/source/pics/pyvista_export.png

+ :width: 600px

+ :align: center

+

Requirements

============

- `Numpy >= 1.14.5 `_

- `SciPy >= 1.1.0 `_

-- `hankel >= 0.3.6 `_

+- `hankel >= 1.0.2 `_

- `emcee >= 3.0.0 `_

-- `pyevtk `_

-- `six `_

+- `pyevtk >= 1.1.1 `_

+

Optional

--------

@@ -403,4 +348,4 @@ Optional

License

=======

-`LGPLv3 `_ © 2018-2019

+`LGPLv3 `_

diff --git a/docs/source/krige.rst b/docs/source/krige.rst

index 148c5c8d..e7eb6bd4 100644

--- a/docs/source/krige.rst

+++ b/docs/source/krige.rst

@@ -2,10 +2,6 @@ gstools.krige

=============

.. automodule:: gstools.krige

- :members:

- :undoc-members:

- :inherited-members:

- :show-inheritance:

.. raw:: latex

diff --git a/docs/source/pics/gstools.ico b/docs/source/pics/gstools.ico

new file mode 100644

index 00000000..9119caaa

Binary files /dev/null and b/docs/source/pics/gstools.ico differ

diff --git a/docs/source/tutorial_01_srf.rst b/docs/source/tutorial_01_srf.rst

deleted file mode 100644

index 9ce2164e..00000000

--- a/docs/source/tutorial_01_srf.rst

+++ /dev/null

@@ -1,281 +0,0 @@

-Tutorial 1: Random Field Generation

-===================================

-

-The main feature of GSTools is the spatial random field generator :any:`SRF`,

-which can generate random fields following a given covariance model.

-The generator provides a lot of nice features, which will be explained in

-the following

-

-Theoretical Background

-----------------------

-

-GSTools generates spatial random fields with a given covariance model or

-semi-variogram. This is done by using the so-called randomization method.

-The spatial random field is represented by a stochastic Fourier integral

-and its discretised modes are evaluated at random frequencies.

-

-GSTools supports arbitrary and non-isotropic covariance models.

-

-A very Simple Example

----------------------

-

-We are going to start with a very simple example of a spatial random field

-with an isotropic Gaussian covariance model and following parameters:

-

-- variance :math:`\sigma^2=1`

-- correlation length :math:`\lambda=10`

-

-First, we set things up and create the axes for the field. We are going to

-need the :any:`SRF` class for the actual generation of the spatial random field.

-But :any:`SRF` also needs a covariance model and we will simply take the :any:`Gaussian` model.

-

-.. code-block:: python

-

- from gstools import SRF, Gaussian

-

- x = y = range(100)

-

-Now we create the covariance model with the parameters :math:`\sigma^2` and

-:math:`\lambda` and hand it over to :any:`SRF`. By specifying a seed,

-we make sure to create reproducible results:

-

-.. code-block:: python

-

- model = Gaussian(dim=2, var=1, len_scale=10)

- srf = SRF(model, seed=20170519)

-

-With these simple steps, everything is ready to create our first random field.

-We will create the field on a structured grid (as you might have guessed from the `x` and `y`), which makes it easier to plot.

-

-.. code-block:: python

-

- field = srf.structured([x, y])

- srf.plot()

-

-Yielding

-

-.. image:: pics/srf_tut_gau_field.png

- :width: 600px

- :align: center

-

-Wow, that was pretty easy!

-

-The script can be found in :download:`gstools/examples/00_gaussian.py<../../examples/00_gaussian.py>`

-

-Creating an Ensemble of Fields

-------------------------------

-

-Creating an ensemble of random fields would also be

-a great idea. Let's reuse most of the previous code.

-

-.. code-block:: python

-

- import numpy as np

- import matplotlib.pyplot as pt

- from gstools import SRF, Gaussian

-

- x = y = np.arange(100)

-

- model = Gaussian(dim=2, var=1, len_scale=10)

- srf = SRF(model)

-

-This time, we did not provide a seed to :any:`SRF`, as the seeds will used

-during the actual computation of the fields. We will create four ensemble

-members, for better visualisation and save them in a list and in a first

-step, we will be using the loop counter as the seeds.

-

-.. code-block:: python

-

- ens_no = 4

- field = []

- for i in range(ens_no):

-     field.append(srf.structured([x, y], seed=i))

-

-Now let's have a look at the results:

-

-.. code-block:: python

-

- fig, ax = pt.subplots(2, 2, sharex=True, sharey=True)

- ax = ax.flatten()

- for i in range(ens_no):

-     ax[i].imshow(field[i].T, origin='lower')

- pt.show()

-

-Yielding

-

-.. image:: pics/srf_tut_gau_field_ens.png

- :width: 600px

- :align: center

-

-The script can be found in :download:`gstools/examples/05_srf_ensemble.py<../../examples/05_srf_ensemble.py>`

-

-Using better Seeds

-^^^^^^^^^^^^^^^^^^

-

-It is not always a good idea to use incrementing seeds. Therefore GSTools

-provides a seed generator :any:`MasterRNG`. The loop, in which the fields are generated would

-then look like

-

-.. code-block:: python

-

- from gstools.random import MasterRNG

- seed = MasterRNG(20170519)

- for i in range(ens_no):

-     field.append(srf.structured([x, y], seed=seed()))

-

-Creating Fancier Fields

------------------------

-

-Only using Gaussian covariance fields gets boring. Now we are going to create much rougher random fields by using an exponential covariance model and we are going to make them anisotropic.

-

-The code is very similar to the previous examples, but with a different covariance model class :any:`Exponential`. As model parameters we a using following

-

-- variance :math:`\sigma^2=1`

-- correlation length :math:`\lambda=(12, 3)^T`

-- rotation angle :math:`\theta=\pi/8`

-

-

-.. code-block:: python

-

- import numpy as np

- from gstools import SRF, Exponential

-

- x = y = np.arange(100)

-

- model = Exponential(dim=2, var=1, len_scale=[12., 3.], angles=np.pi/8.)

- srf = SRF(model, seed=20170519)

-

- srf.structured([x, y])

- srf.plot()

-

-Yielding

-

-.. image:: pics/srf_tut_exp_ani_rot.png

- :width: 600px

- :align: center

-

-The anisotropy ratio could also have been set with

-

-.. code-block:: python

-

- model = Exponential(dim=2, var=1, len_scale=12., anis=3./12., angles=np.pi/8.)

-

-Using an Unstructured Grid

---------------------------

-

-For many applications, the random fields are needed on an unstructured grid.

-Normally, such a grid would be read in, but we can simply generate one and

-then create a random field at those coordinates.

-

-.. code-block:: python

-

- import numpy as np

- from gstools import SRF, Exponential

- from gstools.random import MasterRNG

-

- seed = MasterRNG(19970221)

- rng = np.random.RandomState(seed())

- x = rng.randint(0, 100, size=10000)

- y = rng.randint(0, 100, size=10000)

-

- model = Exponential(dim=2, var=1, len_scale=[12., 3.], angles=np.pi/8.)

-

- srf = SRF(model, seed=20170519)

- srf([x, y])

- srf.plot()

-

-Yielding

-

-.. image:: pics/srf_tut_unstr.png

- :width: 600px

- :align: center

-

-Comparing this image to the previous one, you can see that be using the same

-seed, the same field can be computed on different grids.

-

-The script can be found in :download:`gstools/examples/06_unstr_srf_export.py<../../examples/06_unstr_srf_export.py>`

-

-Exporting a Field

------------------

-

-Using the field from `previous example `__, it can simply be exported to the file

-``field.vtu`` and viewed by e.g. paraview with following lines of code

-

-.. code-block:: python

-

- srf.vtk_export("field")

-

-Or it could visualized immediately in Python using `PyVista `__:

-

-.. code-block:: python

-

- mesh = srf.to_pyvista("field")

- mesh.plot()

-

-The script can be found in :download:`gstools/examples/04_export.py<../../examples/04_export.py>` and

-in :download:`gstools/examples/06_unstr_srf_export.py<../../examples/06_unstr_srf_export.py>`

-

-Merging two Fields

-------------------

-

-We can even generate the same field realisation on different grids. Let's try

-to merge two unstructured rectangular fields. The first field will be generated

-exactly like in example `Using an Unstructured Grid`_:

-

-.. code-block:: python

-

- import numpy as np

- import matplotlib.pyplot as pt

- from gstools import SRF, Exponential

- from gstools.random import MasterRNG

-

- seed = MasterRNG(19970221)

- rng = np.random.RandomState(seed())

- x = rng.randint(0, 100, size=10000)

- y = rng.randint(0, 100, size=10000)

-

- model = Exponential(dim=2, var=1, len_scale=[12., 3.], angles=np.pi/8.)

-

- srf = SRF(model, seed=20170519)

-

- field = srf([x, y])

-

-But now we extend the field on the right hand side by creating a new

-unstructured grid and calculating a field with the same parameters and the

-same seed on it:

-

-.. code-block:: python

-

- # new grid

- seed = MasterRNG(20011012)

- rng = np.random.RandomState(seed())

- x2 = rng.randint(99, 150, size=10000)

- y2 = rng.randint(20, 80, size=10000)

-

- field2 = srf((x2, y2))

-

- pt.tricontourf(x, y, field.T)

- pt.tricontourf(x2, y2, field2.T)

- pt.axes().set_aspect('equal')

- pt.show()

-

-Yielding

-

-.. image:: pics/srf_tut_merge.png

- :width: 600px

- :align: center

-

-The slight mismatch where the two fields were merged is merely due to

-interpolation problems of the plotting routine. You can convince yourself

-be increasing the resolution of the grids by a factor of 10.

-

-Of course, this merging could also have been done by appending the grid

-point ``(x2, y2)`` to the original grid ``(x, y)`` before generating the field.

-But one application scenario would be to generate hugh fields, which would not

-fit into memory anymore.

-

-The script can be found in :download:`gstools/examples/07_srf_merge.py<../../examples/07_srf_merge.py>`

-

-.. raw:: latex

-

- \clearpage

diff --git a/docs/source/tutorial_02_cov.rst b/docs/source/tutorial_02_cov.rst

deleted file mode 100644

index 0b1bd8d6..00000000

--- a/docs/source/tutorial_02_cov.rst

+++ /dev/null

@@ -1,500 +0,0 @@

-Tutorial 2: The Covariance Model

-================================

-

-One of the core-features of GSTools is the powerful :any:`CovModel`

-class, which allows you to easily define arbitrary covariance models by

-yourself. The resulting models provide a bunch of nice features to explore the

-covariance models.

-

-Theoretical Backgound

----------------------

-

-A covariance model is used to characterize the

-`semi-variogram `_,

-denoted by :math:`\gamma`, of a spatial random field.

-In GSTools, we use the following form for an isotropic and stationary field:

-

-.. math::

- \gamma\left(r\right)=

- \sigma^2\cdot\left(1-\mathrm{cor}\left(r\right)\right)+n

-

-Where:

- - :math:`\mathrm{cor}(r)` is the so called

- `correlation `_

- function depending on the distance :math:`r`

- - :math:`\sigma^2` is the variance

- - :math:`n` is the nugget (subscale variance)

-

-.. note::

-

- We are not limited to isotropic models. We support anisotropy ratios for

- length scales in orthogonal transversal directions like:

-

- - :math:`x` (main direction)

- - :math:`y` (1. transversal direction)

- - :math:`z` (2. transversal direction)

-

- These main directions can also be rotated, but we will come to that later.

-

-Example

--------

-

-Let us start with a short example of a self defined model (Of course, we

-provide a lot of predefined models [See: :any:`gstools.covmodel`],

-but they all work the same way).

-Therefore we reimplement the Gaussian covariance model by defining just the

-`correlation `_ function:

-

-.. code-block:: python

-

- from gstools import CovModel

- import numpy as np

- # use CovModel as the base-class

- class Gau(CovModel):

-     def correlation(self, r):

-         return np.exp(-(r/self.len_scale)**2)

-

-Now we can instantiate this model:

-

-.. code-block:: python

-

- model = Gau(dim=2, var=2., len_scale=10)

-

-To have a look at the variogram, let's plot it:

-

-.. code-block:: python

-

- from gstools.covmodel.plot import plot_variogram

- plot_variogram(model)

-

-Which gives:

-

-.. image:: pics/cov_model_vario.png

- :width: 400px

- :align: center

-

-Parameters

-----------

-

-We already used some parameters, which every covariance models has. The basic ones

-are:

-

- - **dim** : dimension of the model

- - **var** : variance of the model (on top of the subscale variance)

- - **len_scale** : length scale of the model

- - **nugget** : nugget (subscale variance) of the model

-

-These are the common parameters used to characterize a covariance model and are

-therefore used by every model in GSTools. You can also access and reset them:

-

-.. code-block:: python

-

- print(model.dim, model.var, model.len_scale, model.nugget, model.sill)

- model.dim = 3

- model.var = 1

- model.len_scale = 15

- model.nugget = 0.1

- print(model.dim, model.var, model.len_scale, model.nugget, model.sill)

-

-Which gives:

-

-.. code-block:: python

-

- 2 2.0 10 0.0 2.0

- 3 1.0 15 0.1 1.1

-

-.. note::

-

- - The sill of the variogram is calculated by ``sill = variance + nugget``

- So we treat the variance as everything **above** the nugget, which is sometimes

- called **partial sill**.

- - A covariance model can also have additional parameters.

-

-Anisotropy

-----------

-

-The internally used (semi-) variogram represents the isotropic case for the model.

-Nevertheless, you can provide anisotropy ratios by:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10, anis=0.5)

- print(model.anis)

- print(model.len_scale_vec)

-

-Which gives:

-

-.. code-block:: python

-

- [0.5 1. ]

- [10. 5. 10.]

-

-As you can see, we defined just one anisotropy-ratio and the second transversal

-direction was filled up with ``1.`` and you can get the length-scales in each

-direction by the attribute :any:`len_scale_vec`. For full control you can set

-a list of anistropy ratios: ``anis=[0.5, 0.4]``.

-

-Alternatively you can provide a list of length-scales:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=[10, 5, 4])

- print(model.anis)

- print(model.len_scale)

- print(model.len_scale_vec)

-

-Which gives:

-

-.. code-block:: python

-

- [0.5 0.4]

- 10

- [10. 5. 4.]

-

-Rotation Angles

----------------

-

-The main directions of the field don't have to coincide with the spatial

-directions :math:`x`, :math:`y` and :math:`z`. Therefore you can provide

-rotation angles for the model:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10, angles=2.5)

- print(model.angles)

-

-Which gives:

-

-.. code-block:: python

-

- [2.5 0. 0. ]

-

-Again, the angles were filled up with ``0.`` to match the dimension and you

-could also provide a list of angles. The number of angles depends on the

-given dimension:

-

-- in 1D: no rotation performable

-- in 2D: given as rotation around z-axis

-- in 3D: given by yaw, pitch, and roll (known as

- `Tait–Bryan `_

- angles)

-

-Methods

--------

-

-The covariance model class :any:`CovModel` of GSTools provides a set of handy

-methods.

-

-Basics

-^^^^^^

-

-One of the following functions defines the main characterization of the

-variogram:

-

-- ``variogram`` : The variogram of the model given by

-

- .. math::

- \gamma\left(r\right)=

- \sigma^2\cdot\left(1-\mathrm{cor}\left(r\right)\right)+n

-

-- ``covariance`` : The (auto-)covariance of the model given by

-

- .. math::

- C\left(r\right)= \sigma^2\cdot\mathrm{cor}\left(r\right)

-

-- ``correlation`` : The (auto-)correlation (or normalized covariance)

- of the model given by

-

- .. math::

- \mathrm{cor}\left(r\right)

-

-As you can see, it is the easiest way to define a covariance model by giving a

-correlation function as demonstrated by the above model ``Gau``.

-If one of the above functions is given, the others will be determined:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10, nugget=0.5)

- print(model.variogram(10.))

- print(model.covariance(10.))

- print(model.correlation(10.))

-

-Which gives:

-

-.. code-block:: python

-

- 1.7642411176571153

- 0.6321205588285577

- 0.7357588823428847

- 0.36787944117144233

-

-Spectral methods

-^^^^^^^^^^^^^^^^

-

-The spectrum of a covariance model is given by:

-

-.. math:: S(\mathbf{k}) = \left(\frac{1}{2\pi}\right)^n

- \int C(\Vert\mathbf{r}\Vert) e^{i b\mathbf{k}\cdot\mathbf{r}} d^n\mathbf{r}

-

-Since the covariance function :math:`C(r)` is radially symmetric, we can

-calculate this by the

-`hankel-transformation `_:

-

-.. math:: S(k) = \left(\frac{1}{2\pi}\right)^n \cdot

- \frac{(2\pi)^{n/2}}{(bk)^{n/2-1}}

- \int_0^\infty r^{n/2-1} C(r) J_{n/2-1}(bkr) r dr

-

-Where :math:`k=\left\Vert\mathbf{k}\right\Vert`.

-

-Depending on the spectrum, the spectral-density is defined by:

-

-.. math:: \tilde{S}(k) = \frac{S(k)}{\sigma^2}

-

-You can access these methods by:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10)

- print(model.spectrum(0.1))

- print(model.spectral_density(0.1))

-

-Which gives:

-

-.. code-block:: python

-

- 34.96564773852395

- 17.482823869261974

-

-.. note::

- The spectral-density is given by the radius of the input phase. But it is

- **not** a probability density function for the radius of the phase.

- To obtain the pdf for the phase-radius, you can use the methods

- :any:`spectral_rad_pdf` or :any:`ln_spectral_rad_pdf` for the logarithm.

-

- The user can also provide a cdf (cumulative distribution function) by

- defining a method called ``spectral_rad_cdf`` and/or a ppf (percent-point function)

- by ``spectral_rad_ppf``.

-

- The attributes :any:`has_cdf` and :any:`has_ppf` will check for that.

-

-Different scales

-----------------

-

-Besides the length-scale, there are many other ways of characterizing a certain

-scale of a covariance model. We provide two common scales with the covariance

-model.

-

-Integral scale

-^^^^^^^^^^^^^^

-

-The `integral scale `_

-of a covariance model is calculated by:

-

-.. math:: I = \int_0^\infty \mathrm{cor}(r) dr

-

-You can access it by:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10)

- print(model.integral_scale)

- print(model.integral_scale_vec)

-

-Which gives:

-

-.. code-block:: python

-

- 8.862269254527579

- [8.86226925 8.86226925 8.86226925]

-

-You can also specify integral length scales like the ordinary length scale,

-and len_scale/anis will be recalculated:

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., integral_scale=[10, 4, 2])

- print(model.anis)

- print(model.len_scale)

- print(model.len_scale_vec)

- print(model.integral_scale)

- print(model.integral_scale_vec)

-

-Which gives:

-

-.. code-block:: python

-

- [0.4 0.2]

- 11.283791670955127

- [11.28379167 4.51351667 2.25675833]

- 10.000000000000002

- [10. 4. 2.]

-

-Percentile scale

-^^^^^^^^^^^^^^^^

-

-Another scale characterizing the covariance model, is the percentile scale.

-It is the distance, where the normalized variogram reaches a certain percentage

-of its sill.

-

-.. code-block:: python

-

- model = Gau(dim=3, var=2., len_scale=10)

- print(model.percentile_scale(0.9))

-

-Which gives:

-

-.. code-block:: python

-

- 15.174271293851463

-

-.. note::

-

- The nugget is neglected by this percentile_scale.

-

-Additional Parameters

----------------------

-

-Let's pimp our self-defined model ``Gau`` by setting the exponent as an additional

-parameter:

-

-.. math:: \mathrm{cor}(r) := \exp\left(-\left(\frac{r}{\ell}\right)^{\alpha}\right)

-

-This leads to the so called **stable** covariance model and we can define it by

-

-.. code-block:: python

-

- class Stab(CovModel):

-     def default_opt_arg(self):

-         return {"alpha": 1.5}

-     def correlation(self, r):

-         return np.exp(-(r/self.len_scale)**self.alpha)

-

-As you can see, we override the method :any:`CovModel.default_opt_arg` to provide

-a standard value for the optional argument ``alpha`` and we can access it

-in the correlation function by ``self.alpha``

-

-Now we can instantiate this model:

-

-.. code-block:: python

-

- model1 = Stab(dim=2, var=2., len_scale=10)

- model2 = Stab(dim=2, var=2., len_scale=10, alpha=0.5)

- print(model1)

- print(model2)

-

-Which gives:

-

-.. code-block:: python

-

- Stab(dim=2, var=2.0, len_scale=10, nugget=0.0, anis=[1.], angles=[0.], alpha=1.5)

- Stab(dim=2, var=2.0, len_scale=10, nugget=0.0, anis=[1.], angles=[0.], alpha=0.5)

-

-.. note::

-

- You don't have to overrid the :any:`CovModel.default_opt_arg`, but you will

- get a ValueError if you don't set it on creation.

-

-Fitting variogram data

-----------------------

-

-The model class comes with a routine to fit the model-parameters to given

-variogram data. Have a look at the following:

-

-.. code-block:: python

-

- # data

- x = [1.0, 3.0, 5.0, 7.0, 9.0, 11.0]

- y = [0.2, 0.5, 0.6, 0.8, 0.8, 0.9]

- # fitting model

- model = Stab(dim=2)

- # we have to provide boundaries for the parameters

- model.set_arg_bounds(alpha=[0, 3])

- # fit the model to given data, deselect nugget

- results, pcov = model.fit_variogram(x, y, nugget=False)

- print(results)

- # show the fitting

- from matplotlib import pyplot as plt

- from gstools.covmodel.plot import plot_variogram

- plt.scatter(x, y, color="k")

- plot_variogram(model)

- plt.show()

-

-Which gives:

-

-.. code-block:: python

-

- {'var': 1.024575782651677,

- 'len_scale': 5.081620691462197,

- 'nugget': 0.0,

- 'alpha': 0.906705123369987}

-

-.. image:: pics/stab_vario_fit.png

- :width: 400px

- :align: center

-

-As you can see, we have to provide boundaries for the parameters.

-As a default, the following bounds are set:

-

-- additional parameters: ``[-np.inf, np.inf]``

-- variance: ``[0.0, np.inf]``

-- len_scale: ``[0.0, np.inf]``

-- nugget: ``[0.0, np.inf]``

-

-Also, you can deselect parameters from fitting, so their predefined values

-will be kept. In our case, we fixed a ``nugget`` of ``0.0``, which was set

-by default. You can deselect any standard or optional argument of the covariance model.

-The second return value ``pcov`` is the estimated covariance of ``popt`` from

-the used scipy routine :any:`scipy.optimize.curve_fit`.

-

-You can use the following methods to manipulate the used bounds:

-

-.. currentmodule:: gstools.covmodel.base

-

-.. autosummary::

- CovModel.default_opt_arg_bounds

- CovModel.default_arg_bounds

- CovModel.set_arg_bounds

- CovModel.check_arg_bounds

-

-You can override the :any:`CovModel.default_opt_arg_bounds` to provide standard

-bounds for your additional parameters.

-

-To access the bounds you can use:

-

-.. autosummary::

- CovModel.var_bounds

- CovModel.len_scale_bounds

- CovModel.nugget_bounds

- CovModel.opt_arg_bounds

- CovModel.arg_bounds

-

-Provided Covariance Models

---------------------------

-

-The following standard covariance models are provided by GSTools

-

-.. currentmodule:: gstools.covmodel.models

-

-.. autosummary::

- Gaussian

- Exponential

- Matern

- Stable

- Rational

- Linear

- Circular

- Spherical

- Intersection

-

-As a special feature, we also provide truncated power law (TPL) covariance models

-

-.. currentmodule:: gstools.covmodel.tpl_models

-

-.. autosummary::

- TPLGaussian

- TPLExponential

- TPLStable

-

-.. raw:: latex

-

- \clearpage

diff --git a/docs/source/tutorial_03_vario.rst b/docs/source/tutorial_03_vario.rst

deleted file mode 100644

index 6343985d..00000000

--- a/docs/source/tutorial_03_vario.rst

+++ /dev/null

@@ -1,356 +0,0 @@

-Tutorial 3: Variogram Estimation

-================================

-

-Estimating the spatial correlations is an important part of geostatistics.

-These spatial correlations can be expressed by the variogram, which can be

-estimated with the subpackage :any:`gstools.variogram`. The variograms can be

-estimated on structured and unstructured grids.

-

-Theoretical Background

-----------------------

-

-The same `(semi-)variogram `_ as

-:doc:`the Covariance Model` is being used

-by this subpackage.

-

-An Example with Actual Data

----------------------------

-

-This example is a bit more extensive, since we do some basic data

-preprocessing before the actual variogram estimation. Nevertheless, the

-example is self-contained: all data gathering and processing is done in this

-one script.

-

-The complete script can be found in :download:`gstools/examples/08_variogram_estimation.py<../../examples/08_variogram_estimation.py>`

-

-*This example will only work with Python 3.*

-

-The Data

-^^^^^^^^

-

-We are going to analyse the Herten aquifer, which is situated in southern

-Germany. Multiple outcrop faces were surveyed and interpolated to a 3D

-dataset. More information about the data can be found in these publications:

-

-| Bayer, Peter; Comunian, Alessandro; Höyng, Dominik; Mariethoz, Gregoire (2015): Physicochemical properties and 3D geostatistical simulations of the Herten and the Descalvado aquifer analogs. PANGAEA, https://doi.org/10.1594/PANGAEA.844167,

-| Supplement to: Bayer, P et al. (2015): Three-dimensional multi-facies realizations of sedimentary reservoir and aquifer analogs. Scientific Data, 2, 150033, https://doi.org/10.1038/sdata.2015.33

-|

-

-Retrieving the Data

-^^^^^^^^^^^^^^^^^^^

-

-To begin with, we need to download and extract the data. For this, we use

-some built-in Python libraries. For simplicity, many values and strings are

-hardcoded.

-

-.. code-block:: python

-

- import os

- import urllib.request

- import zipfile

- import numpy as np

- import matplotlib.pyplot as pt

-

- def download_herten():

-     # download the data, warning: it's about 250 MB

-     print('Downloading Herten data')

-     data_filename = 'data.zip'

-     data_url = 'http://store.pangaea.de/Publications/Bayer_et_al_2015/Herten-analog.zip'

-     urllib.request.urlretrieve(data_url, data_filename)

-

-     # extract only the simulation file; zip member names always use '/'

-     with zipfile.ZipFile(data_filename, 'r') as zf:

-         zf.extract('Herten-analog/sim-big_1000x1000x140/sim.vtk')

-
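
-Member names inside a zip archive always use ``/`` as the path separator,

-independent of the operating system, so the name passed to

-``ZipFile.extract`` should be written with ``/`` rather than built with

-``os.path.join``, which produces a backslash on Windows. A minimal sketch with a

-small in-memory archive illustrates this; the member name and its content are

-placeholders, not the real Herten archive:

-

-.. code-block:: python

-

- import io

- import zipfile

-

- # build a small in-memory archive with one nested member (placeholder name)

- buffer = io.BytesIO()

- with zipfile.ZipFile(buffer, 'w') as zf:

-     zf.writestr('tools/vtk2gslib.py', '# placeholder content\n')

-

- # read it back: the stored member name uses '/' as separator

- with zipfile.ZipFile(buffer, 'r') as zf:

-     names = zf.namelist()

-     content = zf.read('tools/vtk2gslib.py').decode()

-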

-We also need a script to convert the data into a format we can use. This

-script is kindly provided by the authors as well, and we can download it in

-much the same way as the data:

-

-.. code-block:: python

-

- def download_scripts():

-     # download a script for file conversion

-     print('Downloading scripts')

-     tools_filename = 'scripts.zip'

-     tool_url = 'http://store.pangaea.de/Publications/Bayer_et_al_2015/tools.zip'

-     urllib.request.urlretrieve(tool_url, tools_filename)

-

-     # only extract the script we need; zip member names always use '/'

-     with zipfile.ZipFile(tools_filename, 'r') as zf:

-         zf.extract('tools/vtk2gslib.py')

-

-These two functions can now be called:

-

-.. code-block:: python

-

- download_herten()

- download_scripts()

-

-

-Preprocessing the Data

-^^^^^^^^^^^^^^^^^^^^^^

-

-First of all, we convert the data with the script we just downloaded:

-

-.. code-block:: python

-

- # import the downloaded conversion script

- from tools.vtk2gslib import vtk2numpy

-

- # load the Herten aquifer with the downloaded vtk2numpy routine

- print('Loading data')

- herten, grid = vtk2numpy(os.path.join('Herten-analog', 'sim-big_1000x1000x140', 'sim.vtk'))

-

-The data only contains facies, but the supplementary data gives the hydraulic

-conductivity of each facies. We simply paste these values here and assign

-them to the corresponding facies:

-

-.. code-block:: python

-

- # conductivity values per facies from the supplementary data

- cond = np.array([2.50E-04, 2.30E-04, 6.10E-05, 2.60E-02, 1.30E-01,

-                  9.50E-02, 4.30E-05, 6.00E-07, 2.30E-03, 1.40E-04])

-

- # assign the conductivities to the facies (the facies IDs serve as indices)

- herten_cond = cond[herten]

-
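
-The lookup ``cond[herten]`` above relies on NumPy integer-array indexing: every

-facies ID in ``herten`` is replaced by the conductivity stored at that index,

-and the result has the same shape as ``herten``. A minimal sketch with a

-hypothetical three-facies table and a tiny hypothetical ID field:

-

-.. code-block:: python

-

- import numpy as np

-

- # hypothetical conductivity lookup table for three facies (IDs 0, 1, 2)

- cond = np.array([1.0e-4, 2.0e-3, 5.0e-6])

- # hypothetical field of integer facies IDs

- facies = np.array([[0, 1, 2],

-                    [2, 2, 0]])

-

- # integer-array indexing: each ID is replaced by cond[ID], the shape is kept

- facies_cond = cond[facies]

-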

-Next, we calculate the transmissivity by integrating the conductivity over the

-vertical axis, and take its logarithm:

-

-.. code-block:: python

-

- # integrate over the vertical axis to get the transmissivity, then take the log

- herten_log_trans = np.log(np.sum(herten_cond, axis=2) * grid['dz'])

-
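
-Summing the cell conductivities along the vertical axis and multiplying by the

-cell height ``dz`` is a discrete (Riemann sum) approximation of the

-transmissivity :math:`T = \int K \, dz`. A minimal sketch on a hypothetical,

-homogeneous field, where the shape and the cell height are made up:

-

-.. code-block:: python

-

- import numpy as np

-

- # hypothetical homogeneous conductivity field of shape (nx, ny, nz) = (2, 2, 3)

- cond_field = np.full((2, 2, 3), 1.0e-3)

- dz = 0.5  # hypothetical vertical cell height

-

- # transmissivity: sum of K over the vertical cells times the cell height

- trans = np.sum(cond_field, axis=2) * dz

- log_trans = np.log(trans)

-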

-The Herten data also provides information about the grid, which we already

-used for the transmissivity calculation. From this information, we can create

-our own grid on which to estimate the variogram. As a first step, we estimate

-an isotropic variogram, meaning that point pairs from all directions are taken

-into account. An unstructured grid is a natural choice for this, so we convert

-the given structured grid into an unstructured one with another small function:

-

-.. code-block:: python

-

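- def create_unstructured_grid(x_s, y_s):

-     # expand the 1D axis vectors to 2D coordinate arrays

-     x_u, y_u = np.meshgrid(x_s, y_s)

-     len_unstruct = len(x_s) * len(y_s)

-     # flatten the 2D arrays into 1D lists of point coordinates

-     x_u = np.reshape(x_u, len_unstruct)

-     y_u = np.reshape(y_u, len_unstruct)

-     return x_u, y_u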

-

- # create a structured grid on which the data is defined

- x_s = np.arange(grid['ox'], grid['nx']*grid['dx'], grid['dx'])