diff --git a/yellow-paper/docs/contract-deployment/classes.md b/yellow-paper/docs/contract-deployment/classes.md

index aff9bdc64ad..5fa7fa14ef4 100644

--- a/yellow-paper/docs/contract-deployment/classes.md

+++ b/yellow-paper/docs/contract-deployment/classes.md

@@ -12,7 +12,7 @@ Read the following discussions for additional context:

- [Abstracting contract deployment](https://forum.aztec.network/t/proposal-abstracting-contract-deployment/2576)

- [Implementing contract upgrades](https://forum.aztec.network/t/implementing-contract-upgrades/2570)

- [Contract classes, upgrades, and default accounts](https://forum.aztec.network/t/contract-classes-upgrades-and-default-accounts/433)

- :::

+:::

## `ContractClass`

diff --git a/yellow-paper/docs/data-publication-and-availability/index.md b/yellow-paper/docs/data-publication-and-availability/index.md

index fc601823a9b..4342a4ee1b4 100644

--- a/yellow-paper/docs/data-publication-and-availability/index.md

+++ b/yellow-paper/docs/data-publication-and-availability/index.md

@@ -3,28 +3,34 @@ title: Data Availability (and Publication)

---

:::info

-This page is heavily based on the Rollup and Data Ramblings documents. As for that, I highly recommend reading [this very nice post](https://dba.xyz/do-rollups-inherit-security/) written by Jon Charbonneau.

+This page is heavily based on the Rollup and Data Ramblings documents.

+For background, we highly recommend reading [this very nice post](https://dba.xyz/do-rollups-inherit-security/) written by Jon Charbonneau.

:::

-- **Data Availability**: The data is available to me right now

+- **Data Availability**: The data is available to anyone right now

- **Data Publication**: The data was available for a period when it was published.

-Essentially Data Publication $\subset$ Data Availability, since if it is available, it must also have been published. This difference might be small but becomes important in a few moments.

+Essentially Data Publication $\subset$ Data Availability, since if it is available, it must also have been published.

+This difference might be small but becomes important in a few moments.

-Progressing the state of the validating light node requires that we can convince it (and therefore the [availability oracle](./index.md#availability-oracle)) that the data was published - as it needs to compute the public inputs for the proof. The exact method of computing these public inputs can vary depending on the data layer, but generally, it would be by providing the data directly or by using data availability sampling or a data availability committee.

+Progressing the state of the validating light node requires that we can convince it (and therefore the [availability oracle](./index.md#availability-oracle)) that the data was published - as it needs to compute the public inputs for the proof.

+The exact method of computing these public inputs can vary depending on the data layer, but generally, it could be by providing the data directly or by using data availability sampling or a data availability committee.

-The exact mechanism greatly impacts the security and cost of the system, and will be discussed in the following sections. Before that we need to get some definitions in place.

+The exact mechanism greatly impacts the security and cost of the system, and will be discussed in the following sections.

+Before that we need to get some definitions in place.

## Definitions

:::warning **Security**

-Security is often used quite in an unspecific manner, "good" security etc, without specifying what security is. From distributed systems, the _security_ of a protocol or system is defined by:

+Security is often used in quite an unspecific manner ("good" security, etc.) without specifying what security is.

+In distributed systems, the _security_ of a protocol or system is defined by:

- **Liveness**: Eventually something good will happen.

- **Safety**: Nothing bad will happen.

- :::

+:::

-In the context of blockchain, this _security_ is defined by the confirmation rule, while this can be chosen individually by the user, our validating light node (L1 bridge) can be seen as a user, after all, it's "just" another node. For the case of a validity proof based blockchain, a good confirmation rule should satisfy the following sub-properties (inspired by [Sreeram's framing](https://twitter.com/sreeramkannan/status/1683735050897207296)):

+In the context of blockchain, this _security_ is defined by the confirmation rule.

+While this rule can be chosen individually by each user, our validating light node (L1 bridge) can itself be seen as a user; after all, it's "just" another node.

+For the case of a validity-proof-based blockchain, a good confirmation rule should satisfy the following sub-properties (inspired by [Sreeram's framing](https://twitter.com/sreeramkannan/status/1683735050897207296)):

- **Liveness**:

- Data Availability - The chain data must be available for anyone to reconstruct the state and build blocks

@@ -35,19 +41,27 @@ In the context of blockchain, this _security_ is defined by the confirmation rul

- Data Publication - The state changes of the block is published for validation check

- State Validity - State changes along with validity proof allow anyone to check that new state _ROOTS_ are correct.

-Notice, that safety relies on data publication rather than availability. This might sound strange, but since the validity proof can prove that the state transition function was followed and what changes were made, we strictly don't need the entire state to be available for safety.

+Notice that safety relies on data publication rather than availability.

+This might sound strange, but since the validity proof can prove that the state transition function was followed and what changes were made, we strictly don't need the entire state to be available for safety.

-With this out the way, we will later be able to reason about the choice of data storage/publication solutions. But before we dive into that, let us take a higher level look at Aztec to get a understanding of our requirements.

+With this out of the way, we will later be able to reason about the choice of data storage/publication solutions.

+But before we dive into that, let us take a higher-level look at Aztec to get an understanding of our requirements.

-In particular, we will be looking at what is required to give observers (nodes) different guarantees similar to what Jon did in [his post](https://dba.xyz/do-rollups-inherit-security/). This can be useful to get an idea around what we can do for data publication and availability later.

+In particular, we will be looking at what is required to give observers (nodes) different guarantees similar to what Jon did in [his post](https://dba.xyz/do-rollups-inherit-security/).

+This can be useful to get an idea of what we can do for data publication and availability later.

-## Quick Catch-up

+## Rollup 101

-A rollup is broadly speaking a blockchain that put its blocks on some other chain (the host) to make them available to its nodes. Most rollups have a contract on this host blockchain which validates its state transitions (through fault proofs or validity proofs) taking the role of a full-validating light-node, increasing the accessibility of running a node on the rollup chain, making any host chain node indirectly validate its state.

+A rollup is, broadly speaking, a blockchain that puts its blocks on some other chain (the host) to make them available to its nodes.

+Most rollups have a contract on this host blockchain which validates their state transitions (through fault proofs or validity proofs), taking the role of a full-validating light-node; this increases the accessibility of running a node on the rollup chain and makes any host chain node indirectly validate its state.

-With its state being validated by the host chain, the security properties can eventually be enforced by the host-chain if the rollup chain itself is not progressing. Bluntly, the rollup is renting security from the host. The essential difference between a L1 and a rollup then comes down to who are required for block production (liveness) and to convince the validating light-node (security), for the L1 it is the nodes of the L1, and for the Rollup the nodes of its host (eventually). This in practice means that we can get some better properties for how easy it is to get sufficient assurance that no trickery is happening.

+With its state being validated by the host chain, the security properties can eventually be enforced by the host-chain if the rollup chain itself is not progressing.

+Bluntly, the rollup is renting security from the host.

+The essential difference between an L1 and a rollup then comes down to who is required for block production (liveness) and to convince the validating light-node (security).

+For the L1 it is the nodes of the L1, and for the Rollup the nodes of its host (eventually).

+This in practice means that we can get some better properties for how easy it is to get sufficient assurance that no trickery is happening.

| |Security| Accessibility|

@@ -55,7 +69,11 @@ With its state being validated by the host chain, the security properties can ev

Full node| 😃 | 😦 |

Full-verifier light node (L1 state transitioner)| 😃 | 😃 |

-With that out the way, we can draw out a model of the rollup as a two-chain system, what Jon calls the _dynamically available ledger_ and the _finalized prefix ledger_. The point where we jump from one to the other depends on the confirmation rules applied. In Ethereum the _dynamically available_ chain follows the [LMD-ghost](https://eth2book.info/capella/part2/consensus/lmd_ghost/) fork choice rule and is the one block builders are building on top of. Eventually consensus forms and blocks from the _dynamic_ chain gets included in the _finalized_ chain ([Gasper](https://eth2book.info/capella/part2/consensus/casper_ffg/)). Below image is from [Bridging and Finality: Ethereum](https://jumpcrypto.com/writing/bridging-and-finality-ethereum/).

+With that out of the way, we can draw out a model of the rollup as a two-chain system, what Jon calls the _dynamically available ledger_ and the _finalized prefix ledger_.

+The point where we jump from one to the other depends on the confirmation rules applied.

+In Ethereum the _dynamically available_ chain follows the [LMD-ghost](https://eth2book.info/capella/part2/consensus/lmd_ghost/) fork choice rule and is the one block builders are building on top of.

+Eventually consensus forms and blocks from the _dynamic_ chain get included in the _finalized_ chain ([Gasper](https://eth2book.info/capella/part2/consensus/casper_ffg/)).

+The image below is from [Bridging and Finality: Ethereum](https://jumpcrypto.com/writing/bridging-and-finality-ethereum/).

In rollup land, the _available_ chain will often live outside the host where it is built upon before blocks make their way onto the host DA and later get _finalized_ by the the validating light node that lives on the host as a smart contract.

@@ -68,28 +86,40 @@ One of the places where the existence of consensus make a difference for the rol

### Consensus

-For a consensus based rollup you can run LMD-Ghost similarly to Ethereum, new blocks are built like Ethereum, and then eventually reach the host chain where the light client should also validate the consensus rules before progressing state. In this world, you have a probability of re-orgs trending down as blocks are built upon while getting closer to the finalization. Users can then rely on their own confirmation rules to decide when they deem their transaction confirmed. You could say that the transactions are pre-confirmed until they convince the validating light-client on the host.

+For a consensus-based rollup you can run LMD-Ghost similarly to Ethereum: new blocks are built as in Ethereum and then eventually reach the host chain, where the light client should also validate the consensus rules before progressing state.

+In this world, the probability of re-orgs trends down as blocks are built upon and get closer to finalization.

+Users can then rely on their own confirmation rules to decide when they deem their transaction confirmed.

+You could say that the transactions are pre-confirmed until they convince the validating light-client on the host.

### No-consensus

-If there is no explicit consensus for the Rollup, staking can still be utilized for leader selection, picking a distinct sequencer which will have a period to propose a block and convince the validating light-client. The user can as earlier define his own confirmation rules and could decide that if the sequencer acknowledge his transaction, then he sees it as confirmed. This can be done fully on trust, of with some signed message the user could take to the host and "slash" the sequencer for not upholding his part of the deal.

+If there is no explicit consensus for the Rollup, staking can still be utilized for leader selection, picking a distinct sequencer which will have a period to propose a block and convince the validating light-client.

+The user can, as earlier, define his own confirmation rules and could decide that if the sequencer acknowledges his transaction, then he sees it as confirmed.

+This has weaker guarantees than the consensus-based approach, as the sequencer could be malicious and not uphold his part of the deal.

+Nevertheless, the user could always make an out-of-protocol agreement with the sequencer, where the sequencer guarantees that he will include the transaction or the user will be able to slash him and get compensated.

:::info Fernet

Fernet lives in this category if you have a single sequencer active from the proposal to proof inclusion stage.

:::

-Common for both consensus and no-consensus rollups is that the user can decide when he deems his transaction confirmed. If the user is not satisfied with the guarantee provided by the sequencer, he can always wait for the block to be included in the host chain and get the guarantee from the host chain consensus rules.

+Common for both consensus and no-consensus rollups is that the user can decide when he deems his transaction confirmed.

+If the user is not satisfied with the guarantee provided by the sequencer, he can always wait for the block to be included in the host chain and get the guarantee from the host chain consensus rules.

## Data Availability and Publication

-As alluded to earlier, I am of the school of thought that Data Availability and Publication is different things. Generally, what is referred to as Availability is merely publication, e.g., whether or not the data have been published somewhere. For data published on Ethereum you will currently have no issues getting a hold of the data because there are many full nodes and they behave nicely, but they could are not guaranteed to be so. New nodes are essentially bootstrapped by other friendly nodes.

+As alluded to earlier, we belong to the school of thought that Data Availability and Publication are different things.

+Generally, what is often referred to as Data Availability is merely Data Publication, i.e., whether or not the data have been published somewhere.

+For data published on Ethereum you will currently have no issues getting a hold of the data because there are many full nodes and they behave nicely, but they are not guaranteed to continue doing so.

+New nodes are essentially bootstrapped by other friendly nodes.

-With that out the way, I think it would be prudent to elaborate on my definition from earlier:

+With that out of the way, it would be prudent to elaborate on our definition from earlier:

-- **Data Availability**: The data is available to me right now

+- **Data Availability**: The data is available to anyone right now

- **Data Publication**: The data was available for a period when it was published.

-With this split, we can map the methods of which we can include data for our rollup. Below we have included only systems that are live or close to live where we have good ideas around the throughput and latency of the data. The latency is based on using Ethereum L1 as the home of the validating light node, and will therefore be the latency from being included in the data layer until statements can be included in the host chain.

+With this split, we can map the methods by which we can include data for our rollup.

+Below we have included only systems that are live or close to live where we have good ideas around the throughput and latency of the data.

+The latency is based on using Ethereum L1 as the home of the validating light node, and will therefore be the latency from the point in time when data is included on the data layer until the point when statements about the data can be included in the host chain.

|Method | Publication | Availability | Quantity | Latency | Description |

@@ -102,9 +132,12 @@ With this split, we can map the methods of which we can include data for our rol

### Data Layer outside host

-When using a data layer that is not the host chain, cost (and safety guarantees) are reduced, and we rely on some "bridge" to tell the host chain about the data. This must happen before our validating light node can progress the block, hence the block must be published, and the host must know about it before the host can use it as input to block validation.

+When using a data layer that is not the host chain, cost (and safety guarantees) are reduced, and we rely on some "bridge" to tell the host chain about the data.

+This must happen before our validating light node can progress the block.

+Therefore the block must be published, and the host must know about it before the host can use it as input to block validation.

-This influences how blocks can practically be built, since short "cycles" of publishing and then including blocks might not be possible for bridges with significant delay. This means that a suitable data layer has both sufficient data throughput but also low (enough) latency at the bridge level.

+This influences how blocks can practically be built, since short "cycles" of publishing and then including blocks might not be possible for bridges with significant delay.

+This means that a suitable data layer needs both sufficient data throughput and low (enough) latency at the bridge level.

Briefly the concerns we must have for any supported data layer that is outside the host chain is:

@@ -115,11 +148,18 @@ Briefly the concerns we must have for any supported data layer that is outside t

#### Celestia

-Celestia mainnet is starting with a limit of 2 mb/block with 12 second blocks supporting ~166 KB/s. But they are working on increasing this to 8 mb/block.

+Celestia mainnet is starting with a limit of 2 MB/block with 12 second blocks, supporting ~166 KB/s.

+:::note

+They are working on increasing this to 8 MB/block.

+:::

-As Celestia has just recently launched, it is unclear how much competition there will be for the data throughput, and thereby how much we could expect to get a hold of. Since the security assumptions differ greatly from the host chain (Ethereum) few L2's have been built on top of it yet, and the demand is to be gauged in the future.

+As Celestia has just recently launched, it is unclear how much competition there will be for the data throughput, and thereby how much we could expect to get a hold of.

+Since the security assumptions differ greatly from those of the host chain (Ethereum), few L2s have been built on top of it yet, and the demand is yet to be gauged.

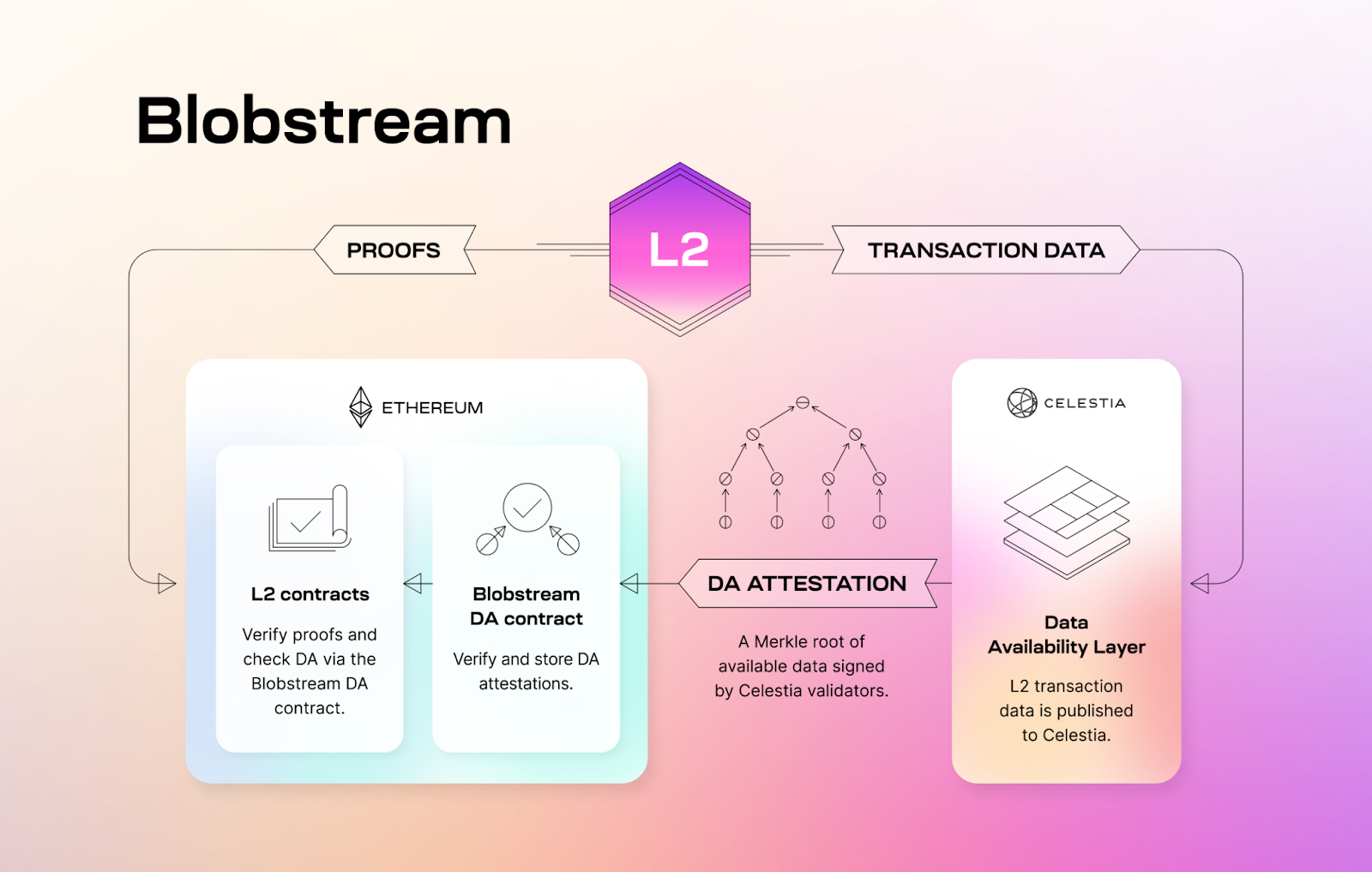

-Beyond the pure data throughput, we also need Ethereum L1 to know that the data was made available on Celestia, which here will require the [blobstream](https://blog.celestia.org/introducing-blobstream/) (formerly the quantum gravity bridge) to relay data roots that the rollup contract can process.This is currently done approximately every 100 minutes. Note however, that a separate blobstream is being build with Succinct labs (live on goerli) which should make relays cheaper and more frequent.

+Beyond the pure data throughput, we also need Ethereum L1 to know that the data was made available on Celestia.

+This will require the [blobstream](https://blog.celestia.org/introducing-blobstream/) (formerly the quantum gravity bridge) to relay data roots that the rollup contract can process.

+This is currently done approximately every 100 minutes.

+Note, however, that a separate blobstream is being built by Succinct Labs (live on Goerli) which should make relays cheaper and more frequent.

Neat structure of what the availability oracles will look like created by the Celestia team:

@@ -128,17 +168,21 @@ Neat structure of what the availability oracles will look like created by the Ce

Espresso is not yet live, so the following section is very much in the air, it might be that the practical numbers will change when it is live.

-> My knowledge of hotshot is limited here. So keeping my commentary likewise limited until more educated in this matter.

+> Our knowledge of hotshot is limited, so we keep our commentary likewise limited until we are more educated on this matter.

-From their [benchmarks](https://docs.espressosys.com/sequencer/releases/doppio-testnet-release/benchmarks), it seems like the system can support 25-30MB/s of throughput by using small committees of 10 nodes. The throughput further is impacted by the size of the node-set from where the committee is picked.

+From their [benchmarks](https://docs.espressosys.com/sequencer/releases/doppio-testnet-release/benchmarks), it seems like the system can support 25-30MB/s of throughput by using small committees of 10 nodes.

+The throughput is further impacted by the size of the node set from which the committee is picked.

-While the committee is small, it seems like they can ensure honesty through the other nodes. But the nodes active here might need a lot of bandwidth to handle both DA Proposals and VID chunks.

+While the committee is small, it seems like they can ensure honesty through the other nodes.

+But the nodes active here might need a lot of bandwidth to handle both DA Proposals and VID chunks.

-It is not fully clear how often blocks would be relayed to the hotshot contract for consumption by our rollup, but the team says it should be frequent. Cost is estimated to be ~400K gas.

+It is not fully clear how often blocks would be relayed to the hotshot contract for consumption by our rollup, but the team says it should be frequent.

+Cost is estimated to be ~400K gas.

## Aztec-specific Data

-As part of figuring out the data throughput requirements, we need to know what data we need to publish. In Aztec we have a bunch of data with varying importance; some being important to **everyone** and some being important to **someone**.

+As part of figuring out the data throughput requirements, we need to know what data we need to publish.

+In Aztec we have a bunch of data with varying importance; some being important to **everyone** and some being important to **someone**.

The things that are important to **everyone** are the things that we have directly in state, meaning the:

@@ -149,15 +193,22 @@ The things that are important to **everyone** are the things that we have direct

- L1 -> L2

- L2 -> L1

-Some of these can be moved around between layers, and others are hard-linked to live on the host. For one, moving the cross-chain message L1 -> L2 and L2 -> L1 anywhere else than the host is fighting an up-hill battle. Also, beware that the state for L2 -> L1 messages is split between the data layers, as the messages don't strictly need to be available from the L2 itself, but must be for consumption on L1.

+Some of these can be moved around between layers, and others are hard-linked to live on the host.

+For one, moving the cross-chain messages (L1 -> L2 and L2 -> L1) anywhere other than the host is fighting an uphill battle.

+Also, beware that the state for L2 -> L1 messages is split between the data layers, as the messages don't strictly need to be available from the L2 itself, but must be for consumption on L1.

-We need to know what these things are to be able to progress the state. Without having the state, we don't know how the output of a state transition should look and cannot prove it.

+We need to know what these things are to be able to progress the state.

+Without having the state, we don't know how the output of a state transition should look and cannot prove it.

-Beyond the above data that is important to everyone, we also have data that is important to _someone_, these are the logs, both unencrypted and encrypted. Knowing the historic logs are not required to progress the chain, but are important for the users to ensure that they learn about their notes etc.

+Beyond the above data that is important to everyone, we also have data that is important to _someone_.

+These are encrypted and unencrypted logs.

+Knowing the historic logs is not required to progress the chain, but they are important for the users to ensure that they learn about their notes etc.

-A few transaction examples based on our E2E tests have the following data footprints. We will need a few more bytes to specify the sizes of these lists but it will land us in the right ball park.

+A few transaction examples based on our E2E tests have the following data footprints.

+We will need a few more bytes to specify the sizes of these lists, but it will land us in the right ballpark.

-> These were made back in august and are a bit outdated. They should be updated to also include more complex transactions.

+> These were made back in August 2023 and are a bit outdated.

+> They should be updated to also include more complex transactions.

```

Tx ((Everyone, Someone) bytes).

@@ -204,7 +255,9 @@ Using the values from just above for transaction data requirements, we can get a

Assuming that we are getting $\frac{1}{9}$ of the blob-space or $\frac{1}{20}$ of the calldata and amortize to the Aztec available space.

-For every throughput column, we insert 3 marks, for everyone, someone and the total. e.g., ✅✅✅ meaning that the throughput can be supported when publishing data for everyone, someone and the total. 💀💀💀 meaning that none of it can be supported.

+For every throughput column, we insert 3 marks: one each for everyone, someone and the total.

+✅✅✅ means that the throughput can be supported when publishing data for everyone, someone and the total.

+💀💀💀 means that none of it can be supported.

|Space| Aztec Available | 1 TPS | 10 TPS | 50 TPS | 100 Tps |

@@ -216,15 +269,14 @@ For every throughput column, we insert 3 marks, for everyone, someone and the to

|Celestia (8mb/13s blocks)| $68,376 \dfrac{byte}{s}$ | ✅✅✅ | ✅✅✅ | ✅✅💀 | ✅💀💀

|Espresso| Unclear but at least 1 mb per second | ✅✅✅ | ✅✅✅ | ✅✅✅| ✅✅✅

-> **Disclaimer**: Remember that these fractions for available space are pulled out of my ass.

-

-

+> **Disclaimer**: Remember that these fractions for available space are pulled out of thin air.

With these numbers at hand, we can get an estimate of our throughput in transactions based on our storage medium.

## One or multiple data layers?

-From the above estimations, it is unlikely that our data requirements can be met by using only data from the host chain. It is therefore to be considered whether data can be split across more than one data layer.

+From the above estimations, it is unlikely that our data requirements can be met by using only data from the host chain.

+We should therefore consider whether data can be split across more than one data layer.

The main concerns when investigating if multiple layers should be supported simultaneously are:

@@ -232,11 +284,15 @@ The main concerns when investigating if multiple layers should be supported simu

- **Ossification**: By ossification we mean changing the assumptions of the deployments, for example, if an application was deployed at a specific data layer, changing the layer underneath it would change the security assumptions. This is addressed through the [Upgrade mechanism](../decentralization/governance.md).

- **Security**: Applications that depend on multiple different data layers might rely on all its layers to work to progress its state. Mainly the different parts of the application might end up with different confirmation rules (as mentioned earlier) degrading it to the least secure possibly breaking the liveness of the application if one of the layers is not progressing.

-The security aspect in particular can become a problem if users deploy accounts to a bad data layer for cost savings, and then cannot access their funds (or other assets) because that data layer is not available. This can be a problem, even though all the assets of the user lives on a still functional data layer.

+The security aspect in particular can become a problem if users deploy accounts to a bad data layer for cost savings, and then cannot access their funds (or other assets) because that data layer is not available.

+This can be a problem even though all the assets of the user live on a still functional data layer.

Since the individual user burden is high with multi-layer approach, we discard it as a viable option, as the probability of user failure is too high.

-Instead, the likely design, will be that an instance has a specific data layer, and that "upgrading" to a new instance allows for a new data layer by deploying an entire instance. This ensures that composability is ensured as everything lives on the same data layer. Ossification is possible hence the [upgrade mechanism](../decentralization/governance.md) doesn't "destroy" the old instance. This means that applications can be built to reject upgrades if they believe the new data layer is not secure enough and simple continue using the old.

+Instead, the likely design will be that an instance has a specific data layer, and that "upgrading" to a new instance allows for a new data layer by deploying an entire instance.

+This ensures composability, as everything lives on the same data layer.

+Ossification is possible since the [upgrade mechanism](../decentralization/governance.md) doesn't "destroy" the old instance.

+This means that applications can be built to reject upgrades if they believe the new data layer is not secure enough and simply continue using the old one.

## Privacy is Data Hungry - What choices do we really have?

@@ -244,9 +300,11 @@ With the target of 10 transactions per second at launch, in which the transactio

For one, EIP-4844 is out of the picture, as it cannot support the data requirements for 10 TPS, neither for everyone or someone data.

-At Danksharding with 64 blobs, we could theoretically support 50 tps, but will not be able to address both the data for everyone and someone. Additionally this is likely years in the making, and might not be something we can meaningfully count on to address our data needs.

+At Danksharding with 64 blobs, we could theoretically support 50 tps, but will not be able to address both the data for everyone and someone.

+Additionally, this is likely years in the making, and might not be something we can meaningfully count on to address our data needs.

-With the current target, data cannot fit on the host, and we must work to integrate with external data layers. Of these, Celestia has the current most "out-the-box" solution, but Eigen-da and other alternatives are expected to come online in the future.

+With the current target, data cannot fit on the host, and we must work to integrate with external data layers.

+Of these, Celestia currently has the best "out-of-the-box" solution, but Eigen-da and other alternatives are expected to come online in the future.

## References

diff --git a/yellow-paper/docs/rollup-circuits/index.md b/yellow-paper/docs/rollup-circuits/index.md

index d09ceec0f71..192a7693274 100644

--- a/yellow-paper/docs/rollup-circuits/index.md

+++ b/yellow-paper/docs/rollup-circuits/index.md

@@ -90,6 +90,7 @@ Note that the diagram does not include much of the operations for kernels, but m

+

```mermaid

classDiagram

direction TB

diff --git a/yellow-paper/docs/rollup-circuits/root-rollup.md b/yellow-paper/docs/rollup-circuits/root-rollup.md

index 9663f8dfea1..2e50d6ab5b9 100644

--- a/yellow-paper/docs/rollup-circuits/root-rollup.md

+++ b/yellow-paper/docs/rollup-circuits/root-rollup.md

@@ -16,7 +16,7 @@ This might practically happen through a series of "squisher" circuits that will

:::

## Overview

-

+

```mermaid

classDiagram

direction TB

@@ -40,7 +40,8 @@ class GlobalVariables {

version: Fr

chain_id: Fr

coinbase: EthAddress

- fee_recipient: Address}

+ fee_recipient: Address

+}

class ContentCommitment {

tx_tree_height: Fr

diff --git a/yellow-paper/docs/state/index.md b/yellow-paper/docs/state/index.md

index e84259b8623..b37e2a4e214 100644

--- a/yellow-paper/docs/state/index.md

+++ b/yellow-paper/docs/state/index.md

@@ -2,32 +2,40 @@

title: State

---

-

-

# State

The global state is the set of data that makes up Aztec - it is persistent and only updates when new blocks are added to the chain.

-The state consists of multiple different categories of data with varying requirements. What all of the categories have in common is that they need strong integrity guarantees and efficient membership proofs. Like most other blockchains, this can be enforced by structuring the data as leaves in Merkle trees.

+The state consists of multiple different categories of data with varying requirements.

+What all of the categories have in common is that they need strong integrity guarantees and efficient membership proofs.

+Like most other blockchains, this can be enforced by structuring the data as leaves in Merkle trees.

-However, unlike most other blockchains, our contract state cannot use a Merkle tree as a key-value store for each contract's data. The reason for this is that we have both private and public state; while public state could be stored in a key-value tree, private state cannot, as doing so would leak information whenever the private state is updated, even if encrypted.

+However, unlike most other blockchains, our contract state cannot use a Merkle tree as a key-value store for each contract's data.

+The reason for this is that we have both private and public state; while public state could be stored in a key-value tree, private state cannot, as doing so would leak information whenever the private state is updated, even if encrypted.

-To work around this, we use a two-tree approach for state that can be used privately. Namely we have one (or more) tree(s) where data is added to (sometimes called a data tree), and a second tree where we "nullify" or mark the data as deleted. This allows us to "update" a leaf by adding a new leaf to the data trees, and add the nullifier of the old leaf to the second tree (the nullifier tree). That way we can show that the new leaf is the "active" one, and that the old leaf is "deleted".

+To work around this, we use a two-tree approach for state that can be used privately.

+Namely, we have one (or more) tree(s) to which data is added (sometimes called a data tree), and a second tree where we "nullify" or mark the data as deleted.

+This allows us to "update" a leaf by adding a new leaf to the data trees, and adding the nullifier of the old leaf to the second tree (the nullifier tree).

+That way we can show that the new leaf is the "active" one, and that the old leaf is "deleted".

-When dealing with private data, only the hash of the data is stored in the leaf in our data tree and we must set up a derivation mechanism that ensures nullifiers can be computed deterministically from the pre-image (the data that was hashed). This way, no-one can tell what data is stored in the leaf (unless they already know it), and therefore won't be able to derive the nullifier and tell if the leaf is active or deleted.

+When dealing with private data, only the hash of the data is stored in the leaf in our data tree and we must set up a derivation mechanism that ensures nullifiers can be computed deterministically from the pre-image (the data that was hashed).

+This way, no one can tell what data is stored in the leaf (unless they already know it), and therefore no one else will be able to derive the nullifier and tell if the leaf is active or deleted.

-Convincing someone that a piece of data is active can then be done by proving its membership in the data tree, and that it is not deleted by proving its non-membership in the nullifier tree. This ability to efficiently prove non-membership is one of the extra requirements we have for some parts of our state. To support the requirements most efficiently, we use two families of Merkle trees:

+Convincing someone that a piece of data is active can then be done by proving its membership in the data tree, and that it is not deleted by proving its non-membership in the nullifier tree.

+This ability to efficiently prove non-membership is one of the extra requirements we have for some parts of our state.

+To support the requirements most efficiently, we use two families of Merkle trees:

- The [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees), which supports efficient membership proofs,

- The [Indexed Merkle tree](./tree-implementations.md#indexed-merkle-trees), which supports efficient membership and non-membership proofs but increases the cost of adding leaves.
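The two-tree approach described above can be sketched roughly as follows. This is a minimal illustration, not the protocol's implementation: the class `TwoTreeState`, the helpers `note_hash` and `nullifier_of`, the use of SHA-256, and the use of a plain list and set in place of real Merkle trees are all assumptions made for the example. In particular, the real nullifier derivation is more involved than hashing the pre-image with a tag.

```python
import hashlib


def note_hash(preimage: bytes) -> bytes:
    # Hypothetical commitment: only this hash is stored in the data tree.
    return hashlib.sha256(b"note:" + preimage).digest()


def nullifier_of(preimage: bytes) -> bytes:
    # Hypothetical derivation: deterministic from the pre-image, so only
    # someone who knows the pre-image can compute it.
    return hashlib.sha256(b"nullifier:" + preimage).digest()


class TwoTreeState:
    """Stand-in for the data tree (append-only) and the nullifier tree."""

    def __init__(self):
        self.note_hashes = []    # leaves are only ever appended
        self.nullifiers = set()  # marks leaves as "deleted"

    def insert(self, preimage: bytes) -> None:
        self.note_hashes.append(note_hash(preimage))

    def nullify(self, preimage: bytes) -> None:
        nullifier = nullifier_of(preimage)
        if nullifier in self.nullifiers:
            raise ValueError("note was already nullified")
        self.nullifiers.add(nullifier)

    def update(self, old_preimage: bytes, new_preimage: bytes) -> None:
        # An "update" nullifies the old leaf and appends a new one.
        self.nullify(old_preimage)
        self.insert(new_preimage)

    def is_active(self, preimage: bytes) -> bool:
        # Membership in the data tree AND non-membership in the nullifier tree.
        return (
            note_hash(preimage) in self.note_hashes
            and nullifier_of(preimage) not in self.nullifiers
        )


state = TwoTreeState()
state.insert(b"balance=10;salt=aa")
state.update(b"balance=10;salt=aa", b"balance=7;salt=bb")
```

Note that the old leaf is never removed from `note_hashes`; it only stops being active because its nullifier now exists.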

### Private State Access

-Whenever a user is to read or use data, they must then convince the "rollup" that the their data is active. As mentioned above, they must prove that the data is in the data tree (membership proof) and that it is still active (non-membership proof). However, there are nuances to this approach!

+Whenever a user is to read or use data, they must then convince the "rollup" that their data is active.

+As mentioned above, they must prove that the data is in the data tree (membership proof) and that it is still active (non-membership proof).

+However, there are nuances to this approach!

-One important aspect to consider is _when_ state can be accessed. In most blockchains, state is always accessed at the head of the chain and changes are only made by the sequencer as new blocks are added.

+One important aspect to consider is _when_ state can be accessed.

+In most blockchains, state is always accessed at the head of the chain and changes are only made by the sequencer as new blocks are added.

However, since private execution relies on proofs generated by the user, this would be very impractical - one user's transaction could invalidate everyone else's.

@@ -35,21 +43,31 @@ While proving inclusion in the data tree can be done using historical state, the

Membership can be proven using historical state because we are using an append-only tree, so anything that was there in the past must still be in the append-only tree now.
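To make the historical-membership argument concrete, here is a small sketch of an append-only Merkle tree. The fixed depth `DEPTH`, the zero-valued `EMPTY` leaf, SHA-256 as the hash, and the names `AppendOnlyTree`, `sibling_path` and `verify` are assumptions chosen for illustration and do not reflect the actual tree implementation.

```python
import hashlib

DEPTH = 3                 # hypothetical tiny tree with 2**3 leaf slots
EMPTY = b"\x00" * 32      # hypothetical value for unused leaf slots


def hash_pair(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(left + right).digest()


class AppendOnlyTree:
    def __init__(self):
        self.leaves = []

    def append(self, leaf: bytes) -> int:
        if len(self.leaves) >= 2**DEPTH:
            raise ValueError("tree is full")
        self.leaves.append(leaf)
        return len(self.leaves) - 1  # index of the new leaf

    def _layers(self):
        # Recompute every layer from the padded leaves up to the root.
        layer = self.leaves + [EMPTY] * (2**DEPTH - len(self.leaves))
        layers = [layer]
        while len(layer) > 1:
            layer = [hash_pair(layer[i], layer[i + 1]) for i in range(0, len(layer), 2)]
            layers.append(layer)
        return layers

    def root(self) -> bytes:
        return self._layers()[-1][0]

    def sibling_path(self, index: int):
        path = []
        for layer in self._layers()[:-1]:
            path.append(layer[index ^ 1])  # sibling at this level
            index //= 2
        return path


def verify(leaf: bytes, index: int, path, root: bytes) -> bool:
    node = leaf
    for sibling in path:
        node = hash_pair(node, sibling) if index % 2 == 0 else hash_pair(sibling, node)
        index //= 2
    return node == root


tree = AppendOnlyTree()
leaf_a = hashlib.sha256(b"note a").digest()
index_a = tree.append(leaf_a)

# The user builds a proof against this (soon to be historical) root.
historical_root = tree.root()
historical_path = tree.sibling_path(index_a)

# The chain moves on: another leaf is appended by someone else.
tree.append(hashlib.sha256(b"note b").digest())

still_valid_for_old_root = verify(leaf_a, index_a, historical_path, historical_root)
still_in_current_tree = verify(leaf_a, index_a, tree.sibling_path(index_a), tree.root())
```

The proof built against the historical root remains valid for that root, and because leaves are never removed, the same leaf can also be proven against the current root with a fresh sibling path.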

-However, this doesn't work for the non-membership proof, as it can only prove that the data was active at the time the proof was generated, not that it is still active today! This would allow a user to create multiple transactions spending the same data and then send those transactions all at once, creating a double spend.

+However, this doesn't work for the non-membership proof, as it can only prove that the data was active at the time the proof was generated, not that it is still active today!

+This would allow a user to create multiple transactions spending the same data and then send those transactions all at once, creating a double spend.

-To solve this, we need to perform the non-membership proofs at the head of the chain, which only the sequencer knows! This means that instead of the user proving that the nullifier of the data is not in the nullifier tree, they provide the nullifier as part of their transaction, and the sequencer then proves non-membership **AND** inserts it into the nullifier tree. This way, if multiple transactions include the same nullifier, only one of them will be included in the block as the others will fail the non-membership proof.

+To solve this, we need to perform the non-membership proofs at the head of the chain, which only the sequencer knows!

+This means that instead of the user proving that the nullifier of the data is not in the nullifier tree, they provide the nullifier as part of their transaction, and the sequencer then proves non-membership **AND** inserts it into the nullifier tree.

+This way, if multiple transactions include the same nullifier, only one of them will be included in the block as the others will fail the non-membership proof.
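The sequencer-side check can be sketched as below. This is only an illustration under simplifying assumptions: `Tx` and `build_block` are hypothetical names, the nullifier tree is modelled as a Python set, and a set lookup stands in for the non-membership proof that the sequencer would actually produce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    nullifiers: tuple  # nullifiers emitted by the user's private execution


def build_block(pending, nullifier_set):
    """Include a tx only if none of its nullifiers already exist at the head."""
    included, rejected = [], []
    for tx in pending:
        repeats_itself = len(set(tx.nullifiers)) != len(tx.nullifiers)
        already_spent = any(n in nullifier_set for n in tx.nullifiers)
        if repeats_itself or already_spent:
            rejected.append(tx.tx_id)  # the non-membership check fails
            continue
        # Non-membership holds, so insert the nullifiers in the same step.
        nullifier_set.update(tx.nullifiers)
        included.append(tx.tx_id)
    return included, rejected


head_nullifiers = set()
pending = [
    Tx("tx1", (b"nullifier-x",)),
    Tx("tx2", (b"nullifier-x",)),  # attempts to spend the same note again
    Tx("tx3", (b"nullifier-y",)),
]
included, rejected = build_block(pending, head_nullifiers)
```

Because checking and inserting happen together at the head of the chain, the second transaction spending `nullifier-x` is rejected regardless of when its proof was generated.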

-**Why does it need to insert the nullifier if I'm reading?** Why can't it just prove that the nullifier is not in the tree? Well, this is a privacy concern. If you just make the non-membership proof, you are leaking that you are reading data for nullifier $x$, so if you read that data again at a later point, $x$ is seen by the sequencer again and it can infer that it is the same actor reading data. By emitting the nullifier the read is indistinguishable from a write, and the sequencer cannot tell what is happening and there will be no repetitions.

+**Why does it need to insert the nullifier if I'm reading?** Why can't it just prove that the nullifier is not in the tree? Well, this is a privacy concern.

+If you just make the non-membership proof, you are leaking that you are reading data for nullifier $x$, so if you read that data again at a later point, $x$ is seen by the sequencer again and it can infer that it is the same actor reading data.

+By emitting the nullifier, the read is indistinguishable from a write, so the sequencer cannot tell what is happening, and there will be no repetitions.

-This however also means, that whenever data is only to be read, a new note with the same data must be inserted into the data tree. This note have new randomness, so anyone watching will be unable to tell if it is the same data inserted, or if it is new data. This is good for privacy, but comes at an additional cost.

+This, however, also means that whenever data is only to be read, a new note with the same data must be inserted into the data tree.

+This note has new randomness, so anyone watching will be unable to tell if it is the same data inserted, or if it is new data.

+This is good for privacy, but comes at an additional cost.
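As a rough sketch of such a read, the snippet below nullifies the note being read and re-inserts the same value under fresh randomness. The helper names, the 32-byte randomness, and the SHA-256 based `note_hash` and `nullifier_of` are assumptions for illustration only.

```python
import hashlib
import secrets


def note_hash(value: bytes, randomness: bytes) -> bytes:
    # Randomness is assumed to be exactly 32 bytes, so the concatenation is unambiguous.
    return hashlib.sha256(b"note:" + randomness + value).digest()


def nullifier_of(value: bytes, randomness: bytes) -> bytes:
    return hashlib.sha256(b"nullifier:" + randomness + value).digest()


def read_note(value: bytes, randomness: bytes, note_hashes: list, nullifiers: set):
    """Read a note by nullifying it and re-inserting the same value."""
    if note_hash(value, randomness) not in note_hashes:
        raise ValueError("note is not in the data tree")
    nullifier = nullifier_of(value, randomness)
    if nullifier in nullifiers:
        raise ValueError("note is no longer active")
    nullifiers.add(nullifier)

    # Same data, new randomness: the new leaf is unlinkable to the old one.
    fresh_randomness = secrets.token_bytes(32)
    note_hashes.append(note_hash(value, fresh_randomness))
    return value, fresh_randomness


note_hashes, nullifiers = [], set()
first_randomness = secrets.token_bytes(32)
note_hashes.append(note_hash(b"balance=10", first_randomness))

value, second_randomness = read_note(b"balance=10", first_randomness, note_hashes, nullifiers)
```

Each read therefore costs one nullifier insertion and one note hash insertion, which is the additional cost mentioned above. A second read has to use `second_randomness`, since the note created with `first_randomness` is now nullified.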

A side-effect of this is that if multiple users are "sharing" their notes, any one of them reading the data will cause the note to be updated, so pending transactions that require the note will fail.

## State Categories

@@ -58,19 +76,20 @@ Below is a short description of the state catagories (trees) and why they have t

- [**Note Hashes**](./note-hash-tree.md): A set of hashes (commitments) of the individual blobs of contract data (we call these blobs of data notes). New notes can be created and their hashes inserted through contract execution. We need to support efficient membership proofs as any read will require one to prove validity. The set is represented as an [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees), storing the note hashes as leaves.

- [**Nullifiers**](./nullifier-tree.md): A set of nullifiers for notes that have been spent. We need to support efficient non-membership proofs since we need to check that a note has not been spent before it can be used. The set is represented as an [Indexed Merkle tree](./tree-implementations.md#indexed-merkle-trees).

- [**Public Data**](./public-data-tree.md): The key-value store for public contract state. We need to support both efficient membership and non-membership proofs! We require both, since the tree is "empty" from the start: if the key is not already stored (non-membership), we need to insert it, and if it is already stored (membership), we just need to update the value.

-- **Contracts**: The set of deployed contracts. We need to support efficient membership proofs as we need to check that a contract is deployed before we can interact with it. The set is represented as an [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees).

-- **L1 to L2 Messages**: The set of messages sent from L1 to L2. The set itself only needs to support efficient membership proofs, so we can ensure that the message was correctly sent from L1. However, it utilizes the Nullifier tree from above to ensure that the message cannot be processed twice. The set is represented as an [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees).

+- **L1 to L2 Messages**: The set of messages sent from L1 to L2. The set itself only needs to support efficient membership proofs, so we can ensure that the message was correctly sent from L1. However, it utilizes the Nullifier tree from above to ensure that the message cannot be processed twice. The set is represented as an [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees). For more information on how the L1 to L2 messages are used, see the [L1 Smart Contracts](../l1-smart-contracts) page.

- [**Archive Tree**](./archive.md): The set of block headers that have been processed. We need to support efficient membership proofs as this is used in private execution to get the roots of the other trees. The set is represented as an [Append-only Merkle tree](./tree-implementations.md#append-only-merkle-trees).

To recall, the global state in Aztec is represented by a set of Merkle trees: the [Note Hash tree](./note-hash-tree.md), [Nullifier tree](./nullifier-tree.md), and [Public Data tree](./public-data-tree.md) reflect the latest state of the chain, while the L1 to L2 message tree allows for [cross-chain communication](../l1-smart-contracts/#l2-outbox) and the [Archive Tree](./archive.md) allows for historical state access.

+

+

+

```mermaid

classDiagram

direction TB

-

class PartialStateReference {

note_hash_tree: Snapshot

nullifier_tree: Snapshot

@@ -93,13 +112,21 @@ class GlobalVariables {

fee_recipient: Address

}

+class ContentCommitment {

+ tx_tree_height: Fr

+ txs_hash: Fr[2]

+ in_hash: Fr[2]

+ out_hash: Fr[2]

+}

+

class Header {

- previous_archive_tree: Snapshot

- body_hash: Fr[2]

+ last_archive: Snapshot

+ content_commitment: ContentCommitment

state: StateReference

global_variables: GlobalVariables

}

-Header *.. Body : body_hash

+Header *.. Body : txs_hash

+Header *-- ContentCommitment: content_commitment

Header *-- StateReference : state

Header *-- GlobalVariables : global_variables

@@ -132,7 +159,6 @@ TxEffect *-- "m" PublicDataWrite: public_writes

TxEffect *-- Logs : logs

class Body {

- l1_to_l2_messages: List~Fr~

tx_effects: List~TxEffect~

}

Body *-- "m" TxEffect

@@ -149,18 +175,6 @@ class NoteHashTree {

leaves: List~Fr~

}

-class NewContractData {

- function_tree_root: Fr

- address: Address

- portal: EthAddress

-}

-

-class ContractTree {

- type: AppendOnlyMerkleTree

- leaves: List~NewContractData~

-}

-ContractTree *.. "m" NewContractData : leaves

-

class PublicDataPreimage {

key: Fr

value: Fr

@@ -196,7 +210,6 @@ class State {

note_hash_tree: NoteHashTree

nullifier_tree: NullifierTree

public_data_tree: PublicDataTree

- contract_tree: ContractTree

l1_to_l2_message_tree: L1ToL2MessageTree

}

State *-- L1ToL2MessageTree : l1_to_l2_message_tree

@@ -204,15 +217,8 @@ State *-- ArchiveTree : archive_tree

State *-- NoteHashTree : note_hash_tree

State *-- NullifierTree : nullifier_tree

State *-- PublicDataTree : public_data_tree

-State *-- ContractTree : contract_tree

```

import DocCardList from '@theme/DocCardList';

-

-

----

-

-:::warning **Discussion Point**:

-"Indexed merkle tree" is not a very telling name, as our normal merkle trees are indexed too. I propose we call them "successor merkle trees" instead since each leaf refers to its successor. The low-nullifiers are also the predecessor of the nullifier you are inserting, so it seems nice that you prove that the nullifier you are inserting has a predecessor and that the predecessors successor would also be the successor of the nullifier you are inserting.

-:::

+

\ No newline at end of file